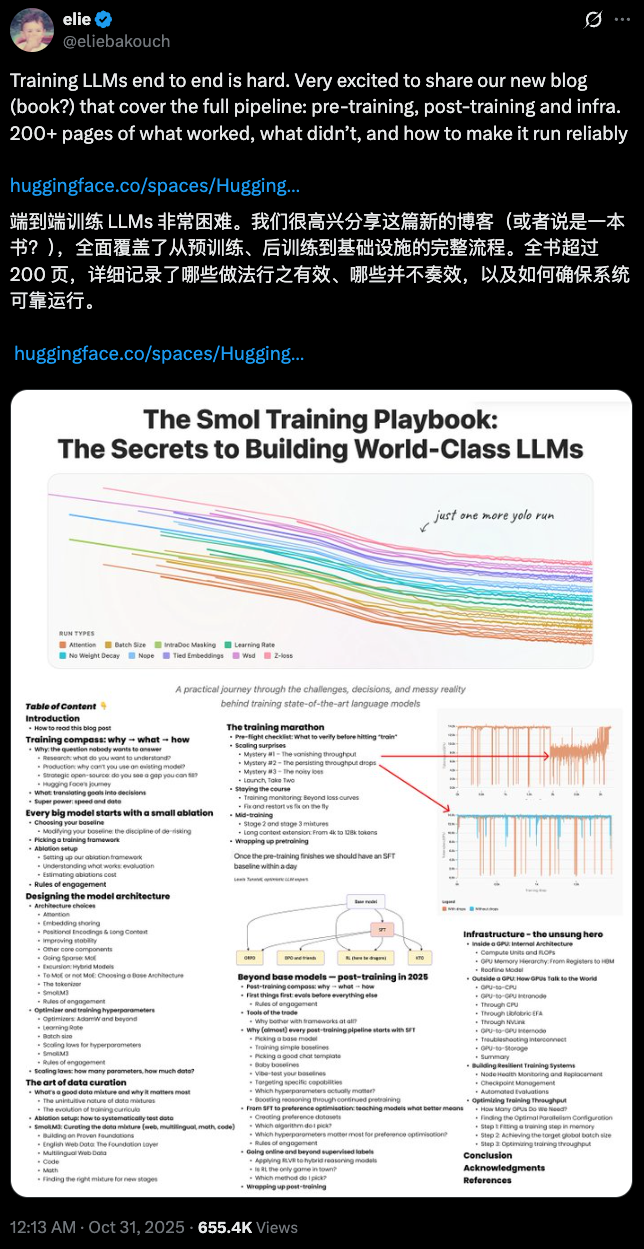

214-Page Internal Guide: *Smol Training Manual – Secrets to Building World-Class LLMs*

What It Really Takes to Train a High-Performance LLM Today

Published research looks neat and logical:

A carefully designed architecture, curated datasets, and adequate compute yield ideal results.

Papers present perfect experiments, clean ablation studies, and “obvious” decisions — viewed with hindsight.

Reality is messier:

They rarely capture 2:00 a.m. data-loader debugging, the shock of sudden loss spikes, or tracking down a hidden tensor-parallel bug that has sabotaged weeks of work. In truth, the process is full of trial-and-error and discarded ideas that never make it to print.

Recently, Hugging Face released a 214-page internal manual — Smol Training Playbook: The Secrets to Building World-Class LLMs — detailing real-world challenges and decision-making complexities in training cutting-edge models.

It drew over 655.4K views in one day.

Original link: https://huggingface.co/spaces/HuggingFaceTB/smol-training-playbook

Below is a condensed, practical strategy from Hugging Face’s blog and their SmolLM3 training experience. For full insights, read the original post.

---

Phase 1 — Decide Before You Train

Training a high-performance LLM is high-risk. Answer three strategic questions first:

- Why train?

- What to train?

- How to train?

Avoid burning compute when you shouldn’t train at all.

Step 1 — Clarify Goals (Why → What)

Ask: Can existing models solve the problem with prompt engineering or fine-tuning?

Pretraining from scratch usually makes sense only for:

- Research — exploring architectural innovations

- Production — specialized domains or constraints (e.g., edge deployment)

- Strategic open source — filling ecosystem gaps (e.g., deployable small models)

Example:

Hugging Face’s goal for SmolLM3: Fill the gap for powerful, efficient, small on-device models → picked a dense 3B Llama-style architecture.

---

Step 2 — Use Ablation Experiments to De-Risk

LLMs behave non-intuitively. Only ablation testing can confirm the right decision.

Effective ablation requirements:

- Iterate quickly

- Strong discriminative power

- Change one variable at a time

Why strict?

Complex interactions mean multi-variable changes mask causal effects.

Adopt each proven change into the baseline, then test another — yielding cumulative, explainable, reversible gains.

Follow the de-risking principle:

Only adopt architecture or hyperparameter changes backed by evidence of improved performance or measurable engineering gains.

> Gains include:

> - Better target capabilities

> - Faster inference

> - Lower memory usage

> - Greater stability — without hurting core metrics

Cost reality:

For SmolLM3, ablations + debugging consumed >50% of total cost (161,280 GPU·h).

The team tested only modifications with strong potential impact.

---

SmolLM3 Key Architecture Choices

- Attention: GQA (Grouped Query Attention) → compresses KV cache, improves inference efficiency with minimal quality loss.

- Embedding: Shared input/output embeddings → saves parameters, reallocates budget to deeper networks for small model gains.

- Positional encoding: RNoPE (alternating RoPE and NoPE) → retains short-context strengths, improves long-context generalization potential.

- Data: Intra-document masking → blocks cross-document attention; stabilizes training; improves long-context handling.

- Hyperparameters:

- Optimizer: AdamW (stable, reliable)

- LR schedule: WSD (Warmup–Stable–Decay) → more flexible than cosine decay and adjustable mid-training.

---

Phase 2 — Build Pre-Training Foundations

Data defines the model’s abilities.

Use a multi-stage curriculum:

- Early: Broad coverage, high-volume general datasets → baseline distribution.

- Later (LR decay phase): Inject small amounts of high-quality data (e.g., Stack-Edu, FineMath4+) → shapes final behavior in low-LR steady learning without overwriting earlier skills.

Multilingual Planning Starts with the Tokenizer

Metrics to measure:

- Fertility: avg. tokens per word (lower = better efficiency)

- Continued-word proportion: how often common words get split (lower = better)

SmolLM3 choice: Llama-3.2 tokenizer — balanced multilingual coverage, reasonable size, fast training (minimal redundant tokens).

---

Phase 3 — Scaling Up

At large scale, failures and bottlenecks are inevitable.

Case: 11T-token training for SmolLM3

1. Throughput & Data Bottlenecks

- Initial issue: Throughput drop traced to shared FSx storage eviction causing missing pages → IO jitter.

- Fix: Download full 24 TB corpus to each node’s local NVMe RAID (`/scratch`) → stable high throughput.

- Secondary issue: Throughput decline with step count → Indexed nanosets dataloader slowed.

- Fix: Switched to TokenizedBytes loader → raw byte splits, avoided hot-index bottlenecks.

Lesson: Address physical bottlenecks first — IO/storage, then loader complexity — use shortest-path fixes.

---

2. Subtle Tensor Parallel Bug

At ~1T tokens, evaluations lagged.

Systematically ruled out data, optimizers, LR schedules, evaluation pipeline.

Root cause: Each TP rank reused the same random seed → correlated weight initialization, reduced effective representation space.

Fix: Restart at 1T tokens, giving each TP rank an independent seed.

Lesson: Even tiny settings can scale into big problems.

---

Phase 4 — Post-Training for Polished Behavior

Pre-training = raw capabilities.

Post-training = stable, controllable assistant behavior.

SmolLM3’s Hybrid Reasoning

- `/think` → chain-of-thought reasoning

- `/no_think` → direct conclusions

---

Post-Training Steps

- Mid-Training (Continued Pre-Training)

- Add large-scale distilled reasoning data → strong reasoning patterns before instruction alignment.

- Boost: reasoning benchmark ×3.

- SFT (Supervised Fine-Tuning)

- Loss on assistant tokens only → focuses on answer quality

- Avoids question continuation habit

- Preference Optimization

- Align style and trade-offs with human preferences

- Use lower LR (≈×0.1 SFT LR) → avoid catastrophic forgetting

- RLVR (Reinforcement Learning via Verifiable Rewards)

- Autonomously refine strategies when tasks are auto-verifiable

- Risk: reward hacking via long CoTs → mitigate with length penalties

---

Final Sequence:

- Mid-training → build reasoning foundation

- SFT → basic assistant behavior

- Preference optimization → human alignment

- RLVR → fine-grained improvement

- Applied with hybrid reasoning control across all phases.

Guiding principle:

Design every signal for controllability & verifiability → stronger capabilities and practical usability.

---

Broader Workflow Link

These engineering lessons parallel AI content creation workflows:

Platforms like AiToEarn官网 combine:

- AI generation

- Multi-channel publishing

- Analytics

- Model ranking

Allowing deployment & monetization across Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X.

Efficiency and precision — crucial in both LLM training and large-scale creative production.

---

In summary:

Successful LLM training demands:

- Strategic decisions pre-training

- Disciplined ablations

- Robust data handling

- Precise scaling fixes

- Targeted post-training

And the wisdom to know: sometimes, the most important choice is not to train at all.