65-year-old Turing Award Winner Finally Stops Reporting to 28-year-old Dropout — How Zuckerberg Drove Him Away

The Stubborn Old Man Standing Against the LLM Tide — Might Be Leaving Meta

In Silicon Valley, high-profile executives leaving to start their own companies is commonplace.

But Yann LeCun leaving would be different: he is the kind of heavyweight Mark Zuckerberg came knocking for in person.

LeCun is:

- One of the three giants of deep learning

- A Turing Award laureate

- The founding father of Meta AI Research

His departure would carry serious weight.

For years LeCun has played the contrarian, standing firmly against the LLM trend, the hottest in the field, as if holding a sign that reads “You’re on the wrong track.”

Now, the Financial Times reports he may be preparing a startup and talking with investors.

For now this is only a rumor, and claiming that LeCun has resigned would be premature.

Nevertheless, his silence amid the media storm speaks volumes.

---

12 Years of Meta and LeCun — From Courting Talent to Possible Separation

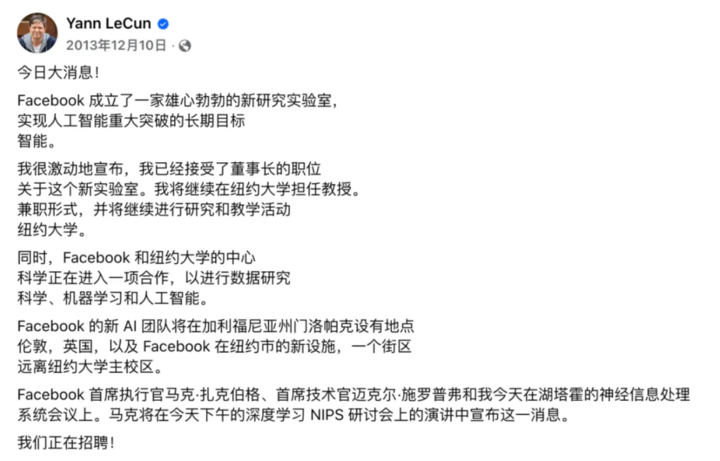

Zuckerberg’s 2013 Gamble

The relationship began in 2013, during the deep learning boom.

In 2012, Geoffrey Hinton, Alex Krizhevsky, and Ilya Sutskever entered AlexNet in ILSVRC-2012 and reached a top-5 error rate of about 15.3%.

Suddenly, neural networks were taken seriously.

The Talent Wars

- Google: Acquired Hinton’s DNNresearch

- Microsoft Research: Rapid AI expansion

Facebook, then shifting from desktop to mobile, needed AI for:

- News feed ranking

- Photo recognition

- Content moderation

But Facebook’s AI lagged behind its competitors. Zuckerberg needed academic gravitas to attract top talent.

Enter Yann LeCun

LeCun had been at NYU for over a decade, and his track record went back much further:

- 1989 at Bell Labs: developed convolutional neural networks (CNNs) for handwritten digit recognition, later a cornerstone of computer vision.

For years, deep learning was unfashionable. Only after the 2012 ImageNet win was LeCun vindicated.

---

Zuckerberg’s Offer

- Generous funding, with freedom over how to use it

- Academic freedom, including keeping his NYU role

- Full authority to build the Facebook AI Research (FAIR) lab

By late 2013, LeCun was officially Head of FAIR, with offices in:

- New York

- Menlo Park

- London

Initial focus:

- Recruiting from top universities

- Embedding deep learning into Facebook products

- Advancing academic research

- Training new AI talent

Key Early Wins

- DeepFace (2014): 97.35% face verification accuracy on the Labeled Faces in the Wild benchmark

- Feed algorithms optimized with deep learning, improving ad click-through rate (CTR)

---

FAIR’s Academic Footprint

- Published papers at top conferences

- Organized workshops

- Supervised PhD students

- LeCun’s 2018 Turing Award (shared with Hinton and Bengio) cemented his status

PyTorch Born at FAIR

- Led by Soumith Chintala, open-sourced in 2017

- Features: Dynamic computation graph, Python-native, easy debugging

- Quickly became a global AI research staple

Soumith himself just left Meta: “I don’t want to spend my whole life working on PyTorch.”

---

Philosophical Clash: Cat vs ChatGPT

When ChatGPT first took off worldwide, LeCun and Zuckerberg enjoyed a short “honeymoon.”

In 2023, Meta open-sourced the LLaMA model series.

- Competitors went closed-source

- Meta’s aim: Make LLaMA the “Android” of AI

LeCun supported open source — but remained skeptical about LLMs.

Why LLMs Are “A Dead End” in LeCun’s View

- LLMs predict the next word from statistical correlations, not from understanding

- They are prone to “hallucinations”

- They lack the persistent memory that animals have

- They cannot plan complex sequences of actions
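To make the first point concrete, here is a deliberately tiny sketch of next-word prediction from co-occurrence counts alone. It is a bigram counter, not how any real LLM works (those use neural networks over long contexts); the corpus and the function names `train_bigram` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; the "model" is nothing but a table of counts.
corpus = (
    "the cat sat on the mat . "
    "the cat chased the mouse . "
    "the dog sat on the rug ."
)
model = train_bigram(corpus)

print(predict_next(model, "the"))    # cat  ("cat" follows "the" most often)
print(predict_next(model, "sat"))    # on
print(predict_next(model, "zebra"))  # None (never seen, so nothing to predict)
```

The predictor answers "cat" after "the" only because that pair is frequent in the corpus. Nothing in the table represents what a cat is, which is the gap LeCun's criticism points at.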

> “Your cat has a better world model than any AI system today.”

By LeCun’s estimate, a 4-year-old has already taken in roughly \(10^{15}\) bytes through vision alone, gaining basic physical and linguistic intuition along the way.

LLMs, even after “reading the internet,” still lack such grounding.
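The \(10^{15}\) figure is a back-of-envelope number. The sketch below shows one set of round inputs that lands on that order of magnitude. Every input (waking hours, optic nerve fiber count, bytes per fiber, size of a text corpus) is an assumption chosen for illustration, not a figure taken from this article.

```python
# All constants are assumed round numbers, not measurements.
WAKING_HOURS = 16_000            # assumed waking hours in the first four years
OPTIC_NERVE_FIBERS = 2_000_000   # assumed: about 1 million fibers per eye, two eyes
BYTES_PER_FIBER_PER_SEC = 10     # assumed data rate carried by each fiber

TEXT_TOKENS = 10**13             # assumed size of a large text training corpus
BYTES_PER_TOKEN = 2              # assumed average bytes per token


def visual_bytes(hours, fibers, bytes_per_fiber_per_sec):
    """Total bytes reaching the brain through vision over `hours` awake."""
    seconds = hours * 3600
    return seconds * fibers * bytes_per_fiber_per_sec


child_bytes = visual_bytes(WAKING_HOURS, OPTIC_NERVE_FIBERS, BYTES_PER_FIBER_PER_SEC)
text_bytes = TEXT_TOKENS * BYTES_PER_TOKEN
ratio = child_bytes / text_bytes

print(f"visual input: {child_bytes:.2e} bytes")  # visual input: 1.15e+15 bytes
print(f"text corpus:  {text_bytes:.2e} bytes")   # text corpus:  2.00e+13 bytes
print(f"ratio:        {ratio:.1f}x")             # ratio:        57.6x
```

Under these assumptions the child's visual stream is tens of times larger than the text corpus. Changing any single input by a factor of ten moves the result by the same factor, so only the order of magnitude is meaningful.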

---

His Roadmap — World Models & JEPA

Core idea:

Let AI learn by observing the world, not just memorizing text.

Modules:

- Perception

- World model

- Memory

- Action

JEPA Approach

- Self-supervised learning

- Predict abstract features from related inputs (e.g., sequential video frames)

- Focus on key factors, not exhaustive detail generation
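A toy sketch can show why predicting abstract features differs from generating every detail. This is not the JEPA architecture: real JEPA learns its encoder and predictor as neural networks, whereas the hand-written `encode` function, the linear predictor, and the one-dimensional "video" below are all hypothetical stand-ins.

```python
import random

FRAME_LEN = 16


def make_frame(pos, rng):
    """1-D 'image': faint random texture plus one bright object at `pos`."""
    frame = [rng.uniform(0.0, 0.1) for _ in range(FRAME_LEN)]
    frame[pos] = 1.0
    return frame


def encode(frame):
    """Hypothetical fixed encoder: keep only the object's position."""
    return float(max(range(len(frame)), key=lambda i: frame[i]))


def fit_linear(xs, ys):
    """Least-squares fit of y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x
    return a, mean_y - a * mean_x


def mse(u, v):
    """Mean squared error between two equal-length sequences."""
    return sum((p - q) ** 2 for p, q in zip(u, v)) / len(u)


rng = random.Random(0)
# A short "video": the object moves one pixel to the right per frame.
video = [make_frame(pos, rng) for pos in range(10)]
z = [encode(frame) for frame in video]

# Fit the predictor on feature pairs (frame t -> frame t+1), holding out the last pair.
a, b = fit_linear(z[:-2], z[1:-1])

# Feature-space error on the held-out pair.
latent_error = (a * z[-2] + b - z[-1]) ** 2

# Pixel-space baseline: shift the previous frame right by one pixel and compare
# every pixel, including random texture that cannot be predicted.
shifted = [video[-2][-1]] + video[-2][:-1]
pixel_error = mse(shifted, video[-1])

print(f"feature-space error: {latent_error:.6f}")
print(f"pixel-space error:   {pixel_error:.6f}")
```

The feature-space predictor recovers the rule "the object moves one step right" and its error on the held-out frame is zero. The pixel-space baseline places the object correctly too, yet still pays for texture it has no way to predict, which is the cost the "not exhaustive detail generation" bullet refers to.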

LeCun’s prediction: if the approach succeeds, it could surpass LLM methods within 3–5 years.

The question is whether Meta can wait that long.

---

Meta’s Restructuring — End of FAIR’s Autonomy

Initial promise:

FAIR would conduct long-term, fundamental AI research.

Now:

The generative AI race forces short-term deliverables.

Structural Changes

- Formation of Meta Superintelligence Labs (MSL)

- FAIR merged with foundational and applied AI teams

- Research must align directly with product goals

Publication freedom was curtailed: papers now require cross-team and management approval.

LeCun reportedly considered resigning over the new policy.

---

Leadership Shifts

- Alexandr Wang was appointed Chief AI Officer, reporting to Zuckerberg

- LeCun now reports to Wang

- Shengjia Zhao was named Chief Scientist of Meta Superintelligence Labs

Inside Meta, it is well known that LeCun disagrees with the new direction.

The final push: layoffs hit both FAIR and product AI teams, and Yuandong Tian was among those affected.

---

Conclusion — What’s Next?

Meta is moving away from fundamental research that offers no short-term returns.

FAIR’s golden era has closed.

If LeCun leaves:

- He regains the freedom to pursue his world model vision

- He answers to no corporate reporting line

- He gets the chance to prove (or disprove) his “LLMs are a dead end” claim

For Meta:

- Needs practical generative AI across all products

- Fewer dissenting voices internally

One day, if LLMs stall, people may recall the stubborn old man who stood in protest.

---

Key takeaway:

LeCun’s voice, like those of many contrarians in tech, keeps the field from narrowing to short-term corporate goals.