# 2025-10-24 · Zhejiang

---

## Introduction

With the rapid growth of AI Agents, a new term — **Context Engineering** — has emerged. Many are asking:

- How does it differ from **Prompt Engineering**?

- Is it just another buzzword?

This guide will explore:

1. **Concept Definition** — basic principles and components.

2. **Industry Practices** — real-world product implementations.

3. **Future Outlook** — where Context Engineering might evolve.

We’ll also address:

- Why Context Engineering is essential.

- Why *Claude Code* excels.

- How *Manus* optimizes Agents.

- Why *Spec-Driven Development* + Context Engineering may replace *Vibe Coding* + Prompt Engineering.

---

## Concept Definition

### What Is Context Engineering?

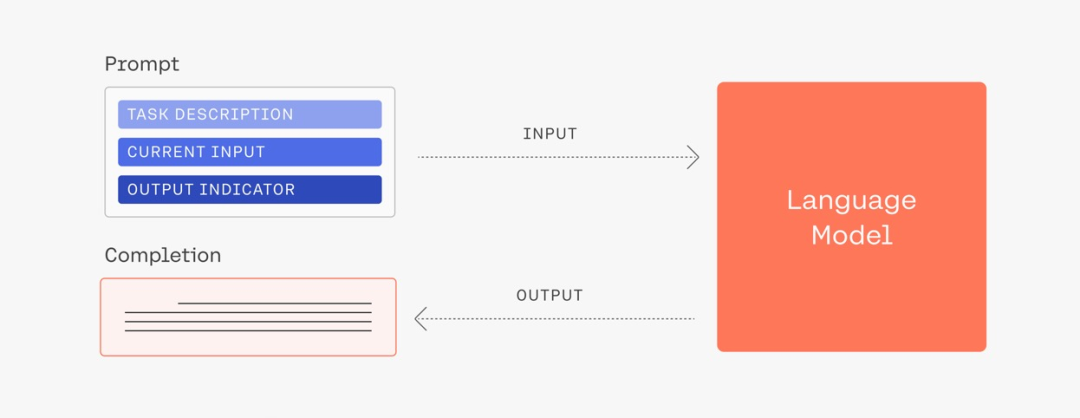

**Context Engineering** is the design of dynamic systems that supply **the right information and tools in the right format** to large language models (LLMs) — enabling them to work effectively.

**Context** is **everything the model “sees” before responding** — not just a single prompt. The challenge is to fill the model’s **limited context window** with **highly relevant** information.

**Core characteristics:**

- Dynamic system construction

- Accurate information delivery

- Proper formatting

- Task-enabling design

*Source: Internet*

---

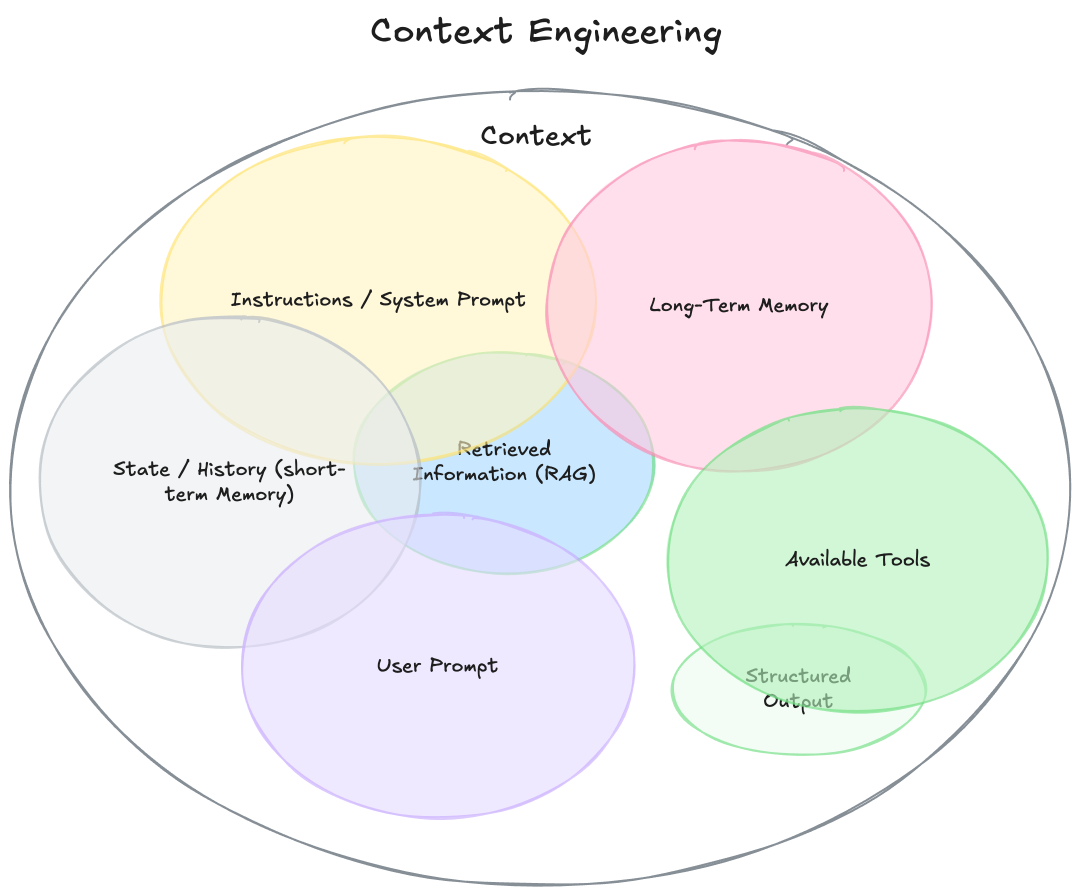

### Key Components

A full Context Engineering system typically includes:

1. **Instructions / System Prompt** — defines model behavior with examples, rules, constraints.

2. **User Prompt** — the task or question being asked.

3. **Short-Term Memory** — conversation history for ongoing context.

4. **Long-Term Memory** — retained knowledge across sessions.

5. **Retrieved Information (RAG)** — relevant external data sources.

6. **Available Tools** — callable functions or APIs.

7. **Structured Output** — required output formats, e.g., JSON.
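As a rough illustration of how the seven components above might be gathered before a model call, here is a minimal sketch. The `ContextBundle` class, its field names, and `build_messages` are hypothetical names chosen for this example; they do not come from any specific framework, and real systems will order and format the pieces differently.

```python
from dataclasses import dataclass, field


@dataclass
class ContextBundle:
    """One field per component in the list above (names are illustrative)."""
    system_prompt: str
    user_prompt: str
    short_term_memory: list[str] = field(default_factory=list)  # recent turns
    long_term_memory: list[str] = field(default_factory=list)   # cross-session facts
    retrieved_docs: list[str] = field(default_factory=list)     # RAG results
    tools: list[dict] = field(default_factory=list)             # tool descriptions
    output_schema: str = ""                                     # e.g. a JSON shape


def build_messages(ctx: ContextBundle) -> list[dict]:
    """Flatten the bundle into a chat-style message list."""
    system_parts = [ctx.system_prompt]
    if ctx.long_term_memory:
        system_parts.append("Known facts:\n" + "\n".join(ctx.long_term_memory))
    if ctx.retrieved_docs:
        system_parts.append("Retrieved:\n" + "\n".join(ctx.retrieved_docs))
    if ctx.tools:
        system_parts.append("Available tools: " + ", ".join(t["name"] for t in ctx.tools))
    if ctx.output_schema:
        system_parts.append("Respond as: " + ctx.output_schema)

    messages = [{"role": "system", "content": "\n\n".join(system_parts)}]
    # In this sketch, short-term memory is replayed as earlier user turns.
    messages += [{"role": "user", "content": turn} for turn in ctx.short_term_memory]
    messages.append({"role": "user", "content": ctx.user_prompt})
    return messages


ctx = ContextBundle(
    system_prompt="You are a scheduling assistant.",
    user_prompt="Are you around tomorrow?",
    retrieved_docs=["Calendar: tomorrow is fully booked."],
    tools=[{"name": "send_invite"}, {"name": "send_email"}],
    output_schema='{"reply": "<text>"}',
)
messages = build_messages(ctx)
```

The point of the sketch is that the user prompt is only the last of several inputs; everything else is assembled by the surrounding system.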

---

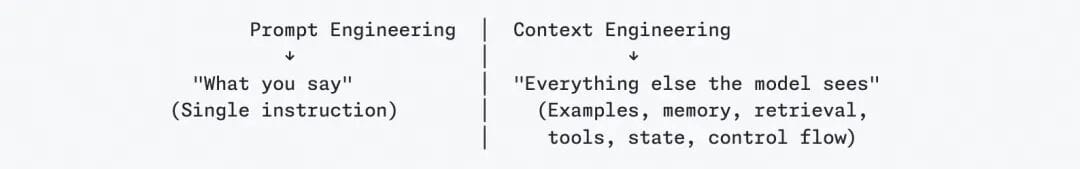

### Prompt Engineering vs Context Engineering

Moving from Prompt Engineering to Context Engineering is a **paradigm shift**: from wording-focused prompt crafting to system-level context construction.

| Dimension | Prompt Engineering | Context Engineering |
|-----------|-------------------|---------------------|
| **Focus** | Wording techniques | Complete context delivery |
| **Scope** | Specific prompts | Systemic, multi-source inputs |

---

**Summary:** Context Engineering moves beyond phrase crafting, enabling LLMs to operate with **rich, structured situational awareness**. Combined with methods like **Spec-Driven Development**, it creates stronger AI workflows.

---

## Why Context Engineering Matters

### Quick Analogy

- **Prompt Engineering** is a Post-it note: a brief reminder.

- **Context Engineering** is a screenplay: rich, structured context.

### Simple Demo

**Minimal context:**

> User: "Hey, just checking if you’re around tomorrow…"

> AI: "Tomorrow works for me. What time?"

**Rich context scenario:**

> Context includes your **calendar**, past emails with the contact, **contacts list**, and relevant tools (`send_invite`, `send_email`).

> AI: "Hey Jim! Tomorrow’s fully booked. Thursday AM ok? Sent invite — let me know."

---

### Benefits

1. **Lower failure rate** — many Agent issues stem from missing context.

2. **Consistency** — maintains project standards.

3. **Complex features** — supports multi-step tasks.

4. **Self-correction** — allows validation loops.
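The validation loop mentioned under self-correction can be sketched as follows. This is a minimal illustration, not a prescribed design: `validate_reply`, `run_with_validation`, and `fake_model` are hypothetical names, and `fake_model` is a stand-in for a real LLM call so that the example runs on its own.

```python
import json
from typing import Callable


def validate_reply(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the reply passed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    if "reply" not in data:
        return ["missing 'reply' key"]
    return []


def run_with_validation(
    call_model: Callable[[list[str]], str], task: str, max_attempts: int = 3
) -> str:
    context = [task]
    for _ in range(max_attempts):
        raw = call_model(context)
        problems = validate_reply(raw)
        if not problems:
            return raw
        # Feed the failure back into the context so the next attempt can fix it.
        context.append(f"Previous output: {raw}")
        context.append("Problems: " + "; ".join(problems))
    raise RuntimeError("no valid reply after retries")


def fake_model(context: list[str]) -> str:
    # Stand-in for an LLM: it only answers correctly after seeing feedback.
    if any(line.startswith("Problems:") for line in context):
        return '{"reply": "Thursday AM works."}'
    return "Thursday AM works."


result = run_with_validation(fake_model, "Reply as JSON with a 'reply' key.")
```

The key design choice is that validation errors are written back into the context rather than discarded, which is what lets the next attempt differ from the last.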

---

## Industry Practices Overview

Representative products:

1. **LangChain**: an agent framework and toolset that also publishes a context-management methodology.

2. **Claude Code**: a coding agent often treated as the benchmark for its category, with strong memory and collaboration features.

3. **Manus**: a general-purpose agent known for its tool-usage and cache-design techniques.

---

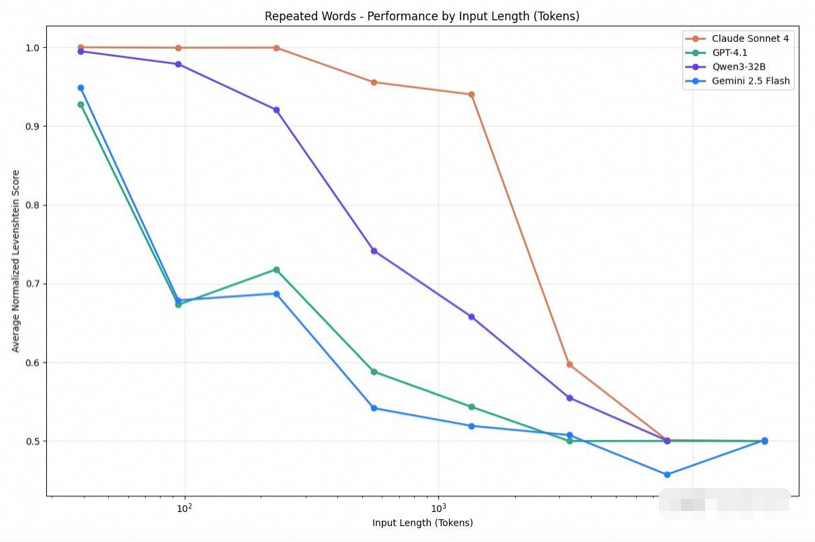

## Long-Context Challenges

**Context Rot** — in long windows, attention diffuses and accuracy declines.

**Symptoms:**

- A hallucination, once in the context, keeps biasing later output.

- Conflicting information confuses the model.

- Key details lose focus.

- “Action paralysis” when the context fills with repetitive text.

### Root Causes

- Inputs longer than the contexts seen in training

- Model limitations

- Uneven info density

- Natural language ambiguity

### Solutions

Industry methods include:

- **Offload** — store info externally

- **Retrieve** — pull in only relevant data

- **Reduce** — compress inputs

- **Isolate** — break into sub-agent tasks
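To make the first two methods concrete, here is a toy sketch of offloading and retrieving. The `Scratchpad` class and its word-overlap scoring are assumptions made for illustration; a production system would more likely use a file store or vector index with a real ranking function.

```python
class Scratchpad:
    """External store: the prompt carries short reference IDs, not raw text."""

    def __init__(self) -> None:
        self._items: dict[str, str] = {}

    def offload(self, text: str) -> str:
        """Store text outside the context and return a short reference."""
        ref = f"note-{len(self._items) + 1}"
        self._items[ref] = text
        return ref

    def retrieve(self, query: str, top_k: int = 1) -> list[tuple[str, str]]:
        """Rank notes by how many query words they share (toy scoring)."""
        words = set(query.lower().split())
        scored = []
        for ref, text in self._items.items():
            overlap = len(words & set(text.lower().split()))
            if overlap:
                scored.append((overlap, ref, text))
        # Highest overlap first; ties broken by reference ID for determinism.
        scored.sort(key=lambda item: (-item[0], item[1]))
        return [(ref, text) for _, ref, text in scored[:top_k]]


pad = Scratchpad()
ref_a = pad.offload("Build log: test suite failed in module auth")
ref_b = pad.offload("Meeting notes: ship date moved to Friday")
hits = pad.retrieve("why did the auth test fail")
```

Only `hits` would be placed in the model's context; the unrelated note stays outside the window until a query actually needs it.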

---

## LangChain’s Context Strategies

LangChain outlines **four core techniques**:

1. **Writing (Offload)** — store outside the LLM, pass references not raw data.

2. **Selecting (Retrieve)** — advanced search (GraphRAG, reranking) or basics (`grep/find`).

3. **Compressing (Reduce)** — summarization, reranking, semantic compression.

4. **Isolating (Isolate)** — give each sub-agent its own focused context.
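The last two techniques, compressing and isolating, can be sketched in a few lines. This is not LangChain's API: `compress_history` and `isolate` are hypothetical helpers, and plain truncation stands in for a real summarizer.

```python
def compress_history(turns: list[str], keep_last: int = 2, max_chars: int = 40) -> list[str]:
    """Keep recent turns verbatim; collapse older ones into one summary line.

    Truncation stands in for a real summarization step.
    """
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    if len(turns) <= keep_last:
        return list(turns)
    older, recent = turns[:-keep_last], turns[-keep_last:]
    digest = " | ".join(turn[:max_chars] for turn in older)
    return [f"[summary of {len(older)} earlier turns] {digest}"] + recent


def isolate(tasks: dict[str, list[str]], shared_brief: str) -> dict[str, list[str]]:
    """Give each sub-agent only the shared brief plus its own material."""
    return {name: [shared_brief] + docs for name, docs in tasks.items()}


history = ["turn 1", "turn 2", "turn 3", "turn 4"]
compact = compress_history(history)

contexts = isolate(
    {"researcher": ["paper.txt"], "coder": ["main.py"]},
    shared_brief="Goal: fix bug",
)
```

In the isolated setup, the `coder` context never sees `paper.txt`, which keeps each sub-agent's window small and focused.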

---

## Claude Code Best Practices

Claude Code implements:

- **Three-Layer Memory Architecture** — short, mid, long-term coverage.

- **Real-Time Steering** — task interruption & adjustment.

- **Layered Multi-Agent Collaboration** — master–sub-agent workflow.

- **Dynamic Context Injection** — auto-load relevant files.

**Key takeaway:** design around **memory management and context relevance**, allowing real-time adaptability.
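The sketch below illustrates the general idea of layered memory and dynamic context injection. It is not Claude Code's actual implementation, which the source does not describe in detail; `LayeredMemory`, `inject_files`, the overflow rule, and the path-matching heuristic are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredMemory:
    short_term: list[str] = field(default_factory=list)  # current conversation
    mid_term: list[str] = field(default_factory=list)    # compacted older turns
    long_term: list[str] = field(default_factory=list)   # persistent project notes
    short_limit: int = 3

    def remember(self, turn: str) -> None:
        self.short_term.append(turn)
        # When the short-term layer overflows, demote the oldest turn to a
        # truncated mid-term entry (truncation stands in for summarization).
        while len(self.short_term) > self.short_limit:
            oldest = self.short_term.pop(0)
            self.mid_term.append(oldest[:30])


def inject_files(message: str, project_files: dict[str, str]) -> dict[str, str]:
    """Select only the files whose path is mentioned in the message."""
    return {path: body for path, body in project_files.items() if path in message}


mem = LayeredMemory(long_term=["Project uses pytest."])
for turn in ["open main.py", "run tests", "fix import", "rerun tests"]:
    mem.remember(turn)

files = {"main.py": "print('hi')", "utils.py": "pass"}
injected = inject_files("Please fix main.py", files)
```

A real agent would use a stronger relevance signal than substring matching, but the shape is the same: memory is tiered by age, and files enter the context only when the task calls for them.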

---

## Manus Optimization Tactics

Highlights:

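- **KV cache optimization**: keep the prompt prefix stable and the context append-only so cached tokens are reused, which cuts cost and latency.

- **Tool masking**: restrict tool choice by masking token logits during decoding instead of removing tool definitions from the context.

- **Filesystem as context**: treat files as persistent, effectively unbounded external memory.

- **Attention manipulation**: restate the goals (for example, by rewriting a to-do list) so they stay in the model's recent attention.

- **Error retention**: keep failed steps in the context so the model can adapt and avoid repeating them.

- **Few-shot diversity**: vary examples and phrasing so the model does not lock onto one repeated pattern and drift.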
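Two of the tactics above, cache-friendly prefixes and tool masking, lend themselves to a small sketch. This is an illustration under stated assumptions, not Manus's code: the function names, the tool names, and the logit values are invented, and a real system would mask logits inside the decoder rather than on a Python dict.

```python
import hashlib
import json
import math


def serialize_stable(obj: dict) -> str:
    """Deterministic JSON: if key order varied between calls, the serialized
    prefix would change and a prefix cache could not be reused."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":"))


def prefix_fingerprint(system_prompt: str, tools: list[dict]) -> str:
    """Hash of the prompt prefix; an unchanged hash means a reusable prefix."""
    payload = system_prompt + "\n" + "\n".join(serialize_stable(t) for t in tools)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def mask_tool_logits(logits: dict[str, float], allowed: set[str]) -> dict[str, float]:
    """Tool definitions stay in the context; disallowed ones get -inf logits."""
    return {
        name: (score if name in allowed else -math.inf)
        for name, score in logits.items()
    }


def pick_tool(logits: dict[str, float]) -> str:
    return max(logits, key=logits.get)


# Same tool definition, different key order: the fingerprint must not change.
tools_a = [{"name": "browser_open", "args": {"url": "str"}}]
tools_b = [{"args": {"url": "str"}, "name": "browser_open"}]
same_prefix = prefix_fingerprint("You are an agent.", tools_a) == prefix_fingerprint(
    "You are an agent.", tools_b
)

logits = {"browser_open": 2.1, "shell_exec": 3.4, "file_write": 0.5}
masked = mask_tool_logits(logits, allowed={"browser_open", "file_write"})
choice = pick_tool(masked)
```

Masking rather than deleting `shell_exec` leaves the tool list, and therefore the cached prefix, untouched while still preventing the tool from being selected.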

---

## Spec-Driven Development

**Problem:** Vibe Coding (Prompt → Code) often yields unmaintainable, under-documented results.

**Solution:** Spec-Driven Development:

Prompt → Requirements → Design → Tasks → Code.

**Advantages:**

- Requirements-first clarity.

- Standardization enables better context building.

- Improved maintainability for large projects.

**Example:** Kiro project’s `requirements.md`, `design.md`, `tasks.md` structure.
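As a sketch of how such spec files could feed an agent's context, the example below picks the next open task and packages it with the requirements and design text. The file names come from the example above; the Markdown checkbox syntax for tasks and the helper names `next_open_task` and `build_spec_context` are assumptions for illustration, and Kiro's actual format may differ.

```python
import re
from typing import Optional

# Assumed task syntax: "- [ ] open task" / "- [x] finished task".
TASK_LINE = re.compile(r"^\s*- \[( |x|X)\] (.+)$")


def next_open_task(tasks_md: str) -> Optional[str]:
    """Return the first unchecked task, or None if all are done."""
    for line in tasks_md.splitlines():
        match = TASK_LINE.match(line)
        if match and match.group(1) == " ":
            return match.group(2).strip()
    return None


def build_spec_context(requirements: str, design: str, tasks_md: str) -> str:
    """Combine the spec documents with the single task to work on next."""
    task = next_open_task(tasks_md)
    if task is None:
        return ""
    return "\n\n".join(
        [
            "## Requirements\n" + requirements.strip(),
            "## Design\n" + design.strip(),
            "## Current task\n" + task,
        ]
    )


tasks_text = "- [x] Define data model\n- [ ] Implement login endpoint\n- [ ] Add tests\n"
context = build_spec_context(
    requirements="Users can log in with email and password.",
    design="REST API with a /login endpoint.",
    tasks_md=tasks_text,
)
```

Because the spec files have a fixed structure, the context for each coding step can be built mechanically instead of being re-described in every prompt.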

---

## Future: Toward Environment Engineering

**Stages:**

| Stage | Main Content | Limitations / Characteristics |
|-----------------------|--------------|-------------|
| Prompt Engineering | One-off prompt | Static |
| Context Engineering | Rich, dynamic inputs | Model-focused only |
| Environment Engineering | Full, evolving environment | AI perceives & acts |

**Goal:** shift from passive model input to active environment interaction.

---

## Summary

The LangChain, Claude Code, Manus, and Kiro case studies point to three conclusions:

- **Context Engineering** solves many shortcomings of prompt-based methods.

- Industry is refining best practices — memory layers, context strategies, KV caching.

- Future phases will be environment-centric, enabling continuous AI adaptability.
