A Comprehensive Summary of the Most Common AI Agent Frameworks

---

# Datawhale Insights

## AI Agent Frameworks: Building Reliable Intelligent Applications

To help learners start systematically, we’ve launched a series on AI agents:

[**Hello-Agents Project Released — Learn Agents from Scratch**](https://mp.weixin.qq.com/s?__biz=MzIyNjM2MzQyNg==&mid=2247714071&idx=1&sn=772a6c61e1efea4280b7fbb1fc769a9f&scene=21#wechat_redirect).

Moving from **one-off scripts** to **mature frameworks** is a key leap in software engineering thinking.

This article explores **mainstream AI agent frameworks**, showing how to efficiently build reliable applications by comparing several representative options and giving recommendations.

---

## Why Use an Agent Framework?

Before coding, let’s understand why frameworks matter.

A framework offers **proven standards**—abstracting common, repetitive tasks such as:

- Main execution loops

- State management

- Tool invocation

- Logging

This lets you focus on unique business logic, not boilerplate machinery.

---

### Key Benefits

#### **1. Improved Code Reuse & Development Efficiency**

Frameworks provide a standard `Agent` base class or executor encapsulating the **Agent Loop**.

Using paradigms like **ReAct** or **Plan-and-Solve**, you can assemble features from existing components—avoiding duplicated work.
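To make the Agent Loop concrete, here is a minimal ReAct-style sketch in plain Python. It is not taken from any framework: `run_agent`, `scripted_policy`, and the `call:<tool>:<arg>` / `final:` action format are all assumptions made for illustration, and the policy function stands in for a real LLM call.

```python
from typing import Callable, Dict, List

# Hypothetical types for illustration; not taken from any framework.
Tool = Callable[[str], str]
Policy = Callable[[List[str]], str]  # stands in for an LLM call


def run_agent(policy: Policy, tools: Dict[str, Tool], task: str, max_steps: int = 5) -> str:
    """Loop: ask the policy for a step, run a tool if requested, stop on a final answer."""
    history = [f"task: {task}"]
    for _ in range(max_steps):
        step = policy(history)
        if step.startswith("final:"):
            return step[len("final:"):].strip()
        # Assumed action format: "call:<tool_name>:<argument>"
        _, name, arg = step.split(":", 2)
        observation = tools[name](arg)
        history.append(f"observation: {observation}")
    return "stopped: step limit reached"


def scripted_policy(history: List[str]) -> str:
    """Toy policy: call the tool once, then answer with the observation."""
    if len(history) == 1:
        return "call:upper:hello"
    return "final: " + history[-1].removeprefix("observation: ")
```

Calling `run_agent(scripted_policy, {"upper": str.upper}, "shout")` walks one tool call and one final answer. A framework's executor packages this same loop, plus error handling and logging, behind a reusable class.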

#### **2. Decoupled Core Components & Extensibility**

A solid framework enforces clear separation:

- **Model Layer** — swaps easily between LLMs (e.g. OpenAI, Anthropic, local models)

- **Tool Layer** — standard tool definitions and registration; adding tools won’t break other modules

- **Memory Layer** — interchangeable short-term and long-term memory strategies

This modularity enables seamless upgrades.
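The separation above can be sketched with small interfaces. This is one possible layout rather than how any particular framework does it; every class name here (`ChatModel`, `EchoModel`, `ToolRegistry`, `ListMemory`, `Agent`) is hypothetical.

```python
from typing import Callable, Dict, List, Protocol


class ChatModel(Protocol):
    """Model layer: anything with a complete() method can be plugged in."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in model for local testing; a real client would call an LLM API."""
    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ToolRegistry:
    """Tool layer: tools register by name, independent of model and memory."""
    def __init__(self) -> None:
        self._tools: Dict[str, Callable[[str], str]] = {}

    def register(self, name: str, fn: Callable[[str], str]) -> None:
        self._tools[name] = fn

    def call(self, name: str, arg: str) -> str:
        return self._tools[name](arg)


class ListMemory:
    """Memory layer: the simplest possible short-term store."""
    def __init__(self) -> None:
        self.items: List[str] = []

    def add(self, item: str) -> None:
        self.items.append(item)


class Agent:
    """Composes the three layers; each can be swapped without touching the others."""
    def __init__(self, model: ChatModel, tools: ToolRegistry, memory: ListMemory) -> None:
        self.model, self.tools, self.memory = model, tools, memory

    def ask(self, prompt: str) -> str:
        reply = self.model.complete(prompt)
        self.memory.add(reply)
        return reply
```

Because `Agent` depends only on the `ChatModel` protocol, replacing `EchoModel` with a client for a hosted or local LLM would not require changes to the tool or memory code.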

#### **3. Standardized State Management**

In complex, long-running agents, **state** is hard to manage by hand.

Frameworks prevent developers from reinventing solutions for:

- Context window management

- Persistent history

- Multi-turn conversation tracking
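As a small illustration of context window management, the sketch below keeps the system message plus the most recent messages that fit a budget. It is a simplification under stated assumptions: `trim_history` is a hypothetical helper, and character counts stand in for token counts, which a real framework would measure with the model's tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def trim_history(messages: List[Message], max_chars: int) -> List[Message]:
    """Keep the system message (if first) plus the newest messages that fit the budget."""
    system = messages[:1] if messages and messages[0]["role"] == "system" else []
    rest = messages[len(system):]
    budget = max_chars - sum(len(m["content"]) for m in system)
    kept: List[Message] = []
    for msg in reversed(rest):  # walk from newest to oldest
        cost = len(msg["content"])
        if cost > budget:
            break
        kept.append(msg)
        budget -= cost
    return system + kept[::-1]  # restore chronological order
```

Dropping the oldest turns first is only one strategy; summarizing older turns or moving them to long-term memory are common alternatives.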

#### **4. Simplified Observability & Debugging**

Advanced frameworks log automatically and provide event hooks (`on_llm_start`, `on_tool_end`, etc.) to trace execution—far beyond manual `print` debugging.
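The hook idea can be sketched without any framework. `EventBus` and `run_tool` below are hypothetical names; only the event names echo the ones mentioned above, and real frameworks pass richer payloads.

```python
from typing import Any, Callable, Dict, List

Handler = Callable[..., None]


class EventBus:
    """Tiny hook dispatcher; handlers receive the event payload as keyword arguments."""
    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = {}

    def on(self, event: str, handler: Handler) -> None:
        self._handlers.setdefault(event, []).append(handler)

    def emit(self, event: str, **payload: Any) -> None:
        for handler in self._handlers.get(event, []):
            handler(**payload)


def run_tool(bus: EventBus, name: str, fn: Callable[[str], str], arg: str) -> str:
    """Wrap a tool call so observers see its start and end."""
    bus.emit("on_tool_start", tool=name, input=arg)
    result = fn(arg)
    bus.emit("on_tool_end", tool=name, output=result)
    return result
```

Registering a handler that appends to a list or writes to a logger gives a full execution trace without touching the tool code itself.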

---

**Bottom line:**

Framework-based development is essential for building **complex**, **reliable**, **maintainable** agent applications.

---

## Comparing Mainstream Agent Frameworks

The agent ecosystem evolves fast.

First-wave frameworks like **LangChain** and **LlamaIndex** shaped how general-purpose LLM applications are designed.

Today’s new generation tackles **multi-agent collaboration** & **complex workflow control**.

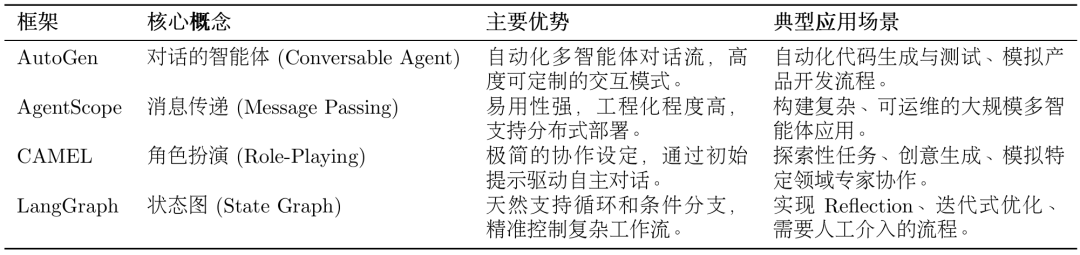

We’ll focus on four leaders:

1. **AutoGen**

2. **AgentScope**

3. **CAMEL**

4. **LangGraph**

---

### Quick Overview

#### **AutoGen**

Multi-agent **conversational collaboration** — modeled as group chats with defined roles & rules.

#### **AgentScope**

Engineering-first multi-agent platform with messaging & distributed deployment.

#### **CAMEL**

Role-playing collaboration via **Inception Prompting**—two agents work towards a goal under structured rules.

#### **LangGraph**

Graph-based workflows with dynamic edges & loop support—great for iterative agents.

---

Next, we’ll deep-dive each framework.

---

# 1. AutoGen

AutoGen maps problem-solving to **automated multi-role dialogues**.

We reference **v0.7.4**, which uses the redesigned architecture introduced in the 0.4 rewrite:

- **Layered design**: `autogen-core` (LLM interactions, messaging) + `autogen-agentchat` (conversation APIs)

- **Async-first execution**: Improves concurrency in LLM-heavy workflows
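Why async-first helps can be shown with the standard library alone. In this sketch `fake_llm_call` is a placeholder for a network-bound model request (an assumption, not an AutoGen API); the point is that several pending requests overlap instead of running back to back.

```python
import asyncio
from typing import List


async def fake_llm_call(prompt: str, delay: float = 0.05) -> str:
    """Placeholder for a network-bound LLM request."""
    await asyncio.sleep(delay)
    return f"answer to {prompt!r}"


async def ask_all(prompts: List[str]) -> List[str]:
    """Issue all requests concurrently; results come back in input order."""
    return await asyncio.gather(*(fake_llm_call(p) for p in prompts))
```

Running `asyncio.run(ask_all(["a", "b", "c"]))` takes roughly one delay rather than three, which is the concurrency gain the async design targets.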

---

## Core Components

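- **AssistantAgent**: the LLM-backed worker that reasons and responds; specialize its role through the system prompt

- **UserProxyAgent**: stands in for the human user. In the 0.4+ API it relays human input through an input function; code execution, which the legacy 0.2 `UserProxyAgent` also handled, is delegated to a separate `CodeExecutorAgent`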

---

## Collaboration Mechanisms

- **RoundRobinGroupChat** — agents speak in a fixed sequence (ideal for structured processes)

Workflow:

1. Create group chat

2. Add agents

3. Activate in sequence

4. Continue until goals met or limits reached
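The four workflow steps can be simulated in plain Python. This is a simplified stand-in, not AutoGen's implementation: `round_robin_chat`, `max_turns`, and `stop_word` are hypothetical, and each "agent" is just a function from the transcript to the next utterance.

```python
from itertools import cycle
from typing import Callable, List, Tuple

# Hypothetical agent type: (name, function from transcript to next utterance).
Agent = Tuple[str, Callable[[List[str]], str]]


def round_robin_chat(agents: List[Agent], task: str, max_turns: int = 6,
                     stop_word: str = "TERMINATE") -> List[str]:
    """Let agents speak in fixed order until one says stop_word or turns run out."""
    transcript = [f"user: {task}"]
    for turn, (name, speak) in enumerate(cycle(agents)):
        if turn >= max_turns:
            break
        utterance = speak(transcript)
        transcript.append(f"{name}: {utterance}")
        if stop_word in utterance:
            break
    return transcript
```

With a writer that returns a draft and a reviewer that replies with the stop word, the chat ends after one full round. AutoGen expresses the same stopping rules through configurable termination conditions rather than hard-coded parameters.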

---

**Advantages:**

- Natural mapping to human team workflows

- Clear role specialization

- Predictable procedural collaboration

- Supports **human-in-the-loop** control

**Limitations:**

- Potential unpredictability in LLM dialogue

- Harder debugging (“conversational debugging”)

- Sequential process may not suit all tasks

---

### Example: Non‑OpenAI Model Configuration

```python
import os

from autogen_ext.models.openai import OpenAIChatCompletionClient

# Point the OpenAI-compatible client at DeepSeek's endpoint.
model_client = OpenAIChatCompletionClient(
    model="deepseek-chat",
    api_key=os.getenv("DEEPSEEK_API_KEY"),
    base_url="https://api.deepseek.com/v1",
    # Capabilities are declared by hand for models the client does not recognize.
    model_info={
        "function_calling": True,
        "max_tokens": 4096,
        "context_length": 32768,
        "vision": False,
        "json_output": True,
        "family": "deepseek",
        "structured_output": True,
    },
)
```

---

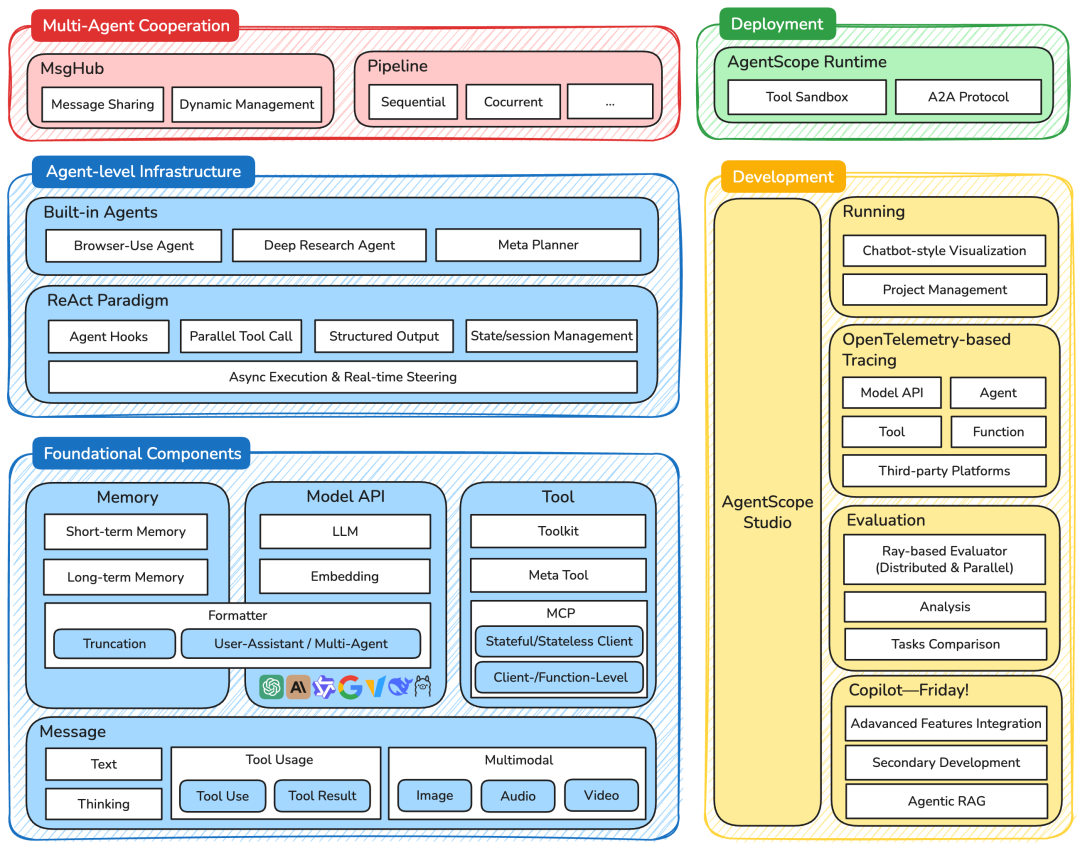

# 2. AgentScope

**Developed by Alibaba DAMO Academy**, AgentScope positions itself as an agent “operating system” with production-grade deployment and observability.

---

## Architecture Layers

1. **Foundational Components** — Messages, Memory, Models, Tools

2. **Agent Infrastructure** — Ready-made agents, paradigms, async support

3. **Multi-Agent Cooperation** — MsgHub, Pipelines

4. **Deployment & Dev** — Runtime & Studio UI tools

---

## Message-Driven Design

Agents exchange `Msg` objects asynchronously:

```python
from agentscope.message import Msg

message = Msg(
    name="Alice",
    content="Hello, Bob!",
    role="user",
    metadata={
        "timestamp": "2024-01-15T10:30:00Z",
        "message_type": "text",
        "priority": "normal",
    },
)
```

**Benefits:**

- Async decoupling

- Location transparency

- Observability

- Reliability
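The async decoupling benefit can be illustrated with `asyncio` queues. This sketch does not use AgentScope: `Envelope`, `worker`, and `demo` are hypothetical names, and a `None` sentinel is an assumed shutdown convention.

```python
import asyncio
from dataclasses import dataclass
from typing import List


@dataclass
class Envelope:
    """Hypothetical message type for this sketch (not AgentScope's Msg)."""
    sender: str
    content: str


async def worker(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Consume messages until a None sentinel arrives, replying to each one."""
    while True:
        msg = await inbox.get()
        if msg is None:
            break
        await outbox.put(Envelope("worker", f"ack: {msg.content}"))


async def demo() -> List[str]:
    inbox: asyncio.Queue = asyncio.Queue()
    outbox: asyncio.Queue = asyncio.Queue()
    task = asyncio.create_task(worker(inbox, outbox))
    for text in ("hello", "world"):
        await inbox.put(Envelope("alice", text))  # sender does not wait for a reply
    await inbox.put(None)  # signal shutdown
    await task
    return [outbox.get_nowait().content for _ in range(outbox.qsize())]
```

The sender only knows about the queue, not about who consumes it or where. That is the property that lets a message-driven framework move agents across processes or machines.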

---

## Lifecycle Management

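```python
# Import paths as in the original; they have changed between AgentScope releases.
from agentscope.agents import AgentBase
from agentscope.message import Msg


class CustomAgent(AgentBase):
    def __init__(self, name: str, **kwargs):
        super().__init__(name=name, **kwargs)

    def reply(self, x: Msg) -> Msg:
        # Assumes self.model was configured by the base class and returns text.
        response = self.model(x.content)
        return Msg(name=self.name, content=response, role="assistant")
```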

---

**Advantages:**

- High concurrency, distributed ready

- Robust fault tolerance

- Structured output

**Limitations:**

- Higher dev skill needed (async, distributed)

- May be excessive for simple projects

- Ecosystem still growing

---

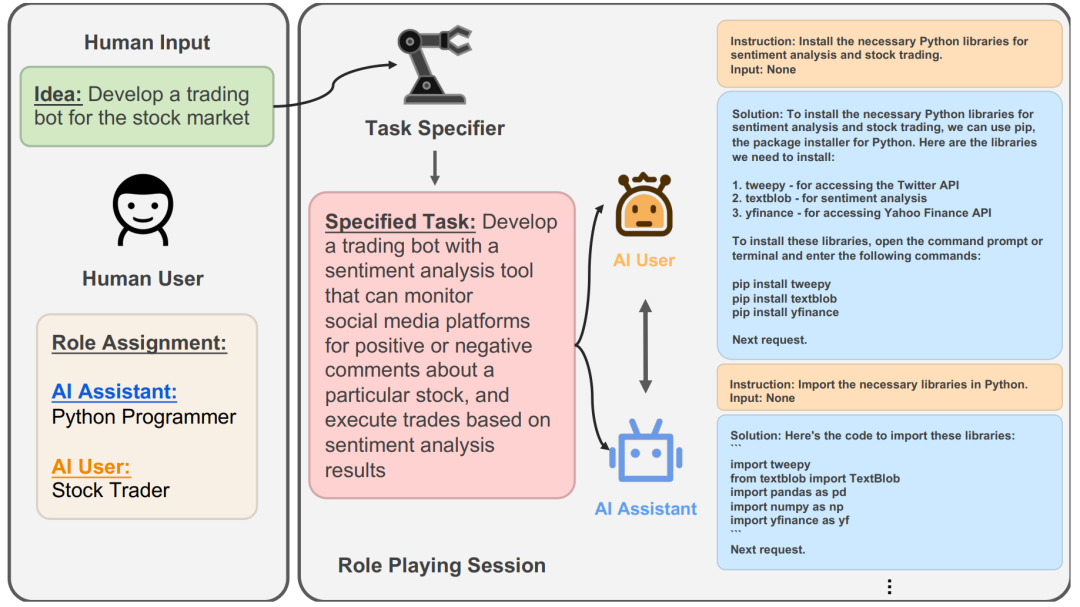

# 3. CAMEL

Minimalist dual-agent design via **Role-Playing** + **Inception Prompting**.

---

## Collaboration Model

- **AI User** — defines goals/needs

- **AI Assistant** — implements solutions

**Inception Prompting** sets:

- Self-role definition

- Awareness of collaborator’s role

- Shared task goals

- Communication rules
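A rough sketch of how the four elements above might be assembled into paired system prompts. The wording, the `inception_prompts` helper, and the `<TASK_DONE>` marker are all assumptions for illustration; CAMEL's actual prompt templates are longer and differ in detail.

```python
from typing import Tuple


def inception_prompts(task: str, user_role: str, assistant_role: str) -> Tuple[str, str]:
    """Build paired system prompts covering the four elements listed above."""
    rules = "Exchange one instruction and one solution per turn. Never swap roles."
    user_prompt = (
        f"You are the {user_role}. "                  # self-role definition
        f"You are working with a {assistant_role}. "  # collaborator's role
        f"Shared task: {task}. "                      # shared task goal
        f"{rules} Say <TASK_DONE> when the task is complete."  # communication rules
    )
    assistant_prompt = (
        f"You are the {assistant_role}. "
        f"You are working with a {user_role}. "
        f"Shared task: {task}. "
        f"{rules} Answer each instruction with a concrete solution."
    )
    return user_prompt, assistant_prompt
```

Both prompts state the same task and rules from opposite perspectives, which is what keeps the two agents from drifting out of role during a long exchange.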

---

**Advantages:**

- Lightweight, flexible

- Low code dependency

- Supports multimodal & tool integration

**Limitations:**

- Heavy prompt design required

- Limited scalability to more agents

- Less suited for strict process control

---

# 4. LangGraph

Part of the LangChain ecosystem, LangGraph models workflows as **State Graphs**.

---

## Workflow Elements

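1. **Global State**: a shared object (typically a `TypedDict`) that every node reads and updates

2. **Nodes**: functions that take the current state and return an update to it

3. **Edges**: define the flow between nodes (regular or conditional)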

Example conditional edge for looping:

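```python
from typing import List, TypedDict


class AgentState(TypedDict):
    messages: List[str]
    final_answer: str


def should_continue(state: AgentState) -> str:
    """Route back to the planner until three messages have accumulated."""
    if len(state["messages"]) < 3:
        return "continue_to_planner"
    # A routing function should only read state; in-place writes made here are
    # generally not persisted, so set final_answer inside a node instead.
    return "end_workflow"
```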
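To show how state, nodes, and a conditional edge fit together, here is a tiny graph runner in plain Python. It is a conceptual stand-in, not the LangGraph API: `run_graph`, `planner`, `route`, and the `"END"` marker are hypothetical names.

```python
from typing import Callable, Dict, List, TypedDict


class State(TypedDict):
    messages: List[str]


Node = Callable[[State], State]
Router = Callable[[State], str]


def planner(state: State) -> State:
    """Node: return a new state with one more message appended."""
    n = len(state["messages"]) + 1
    return {"messages": state["messages"] + [f"plan step {n}"]}


def route(state: State) -> str:
    """Conditional edge: loop back to the planner until three messages exist."""
    return "planner" if len(state["messages"]) < 3 else "END"


def run_graph(nodes: Dict[str, Node], routers: Dict[str, Router],
              entry: str, state: State, max_steps: int = 20) -> State:
    """Run a node, then ask that node's router where to go next."""
    current = entry
    for _ in range(max_steps):
        state = nodes[current](state)
        current = routers[current](state)
        if current == "END":
            break
    return state
```

Running the graph from an empty message list loops through the planner three times and then stops. Nodes return new state while the router only reads it, which matches the division of responsibilities in the conditional-edge example. The `max_steps` guard is a safety net against routers that never reach the end marker.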

---

**Advantages:**

- Visual workflow structure

- Controlled & predictable execution

- Easy “reflection–correction” cycles

- High modularity

**Limitations:**

- More boilerplate for simple tasks

- Less emergent interaction

- Requires global understanding for debugging

---

# Design Philosophy Summary

- **AutoGen** — emergent cooperation via conversation

- **AgentScope** — engineering-first, scalable, message-driven

- **CAMEL** — minimal, role-driven, prompt-focused

- **LangGraph** — explicit, controllable graph-based workflows

**Trade-off:** Emergent flexibility (AutoGen, CAMEL) vs Explicit control (LangGraph) vs Engineering robustness (AgentScope).

---

