# How to Build Agents — Two Contrasting Technical Paradigms: Process-Driven Intelligence vs. Agent-Centric Intelligence

In today’s intelligent application development, two distinct paradigms have emerged for building **Agents**:

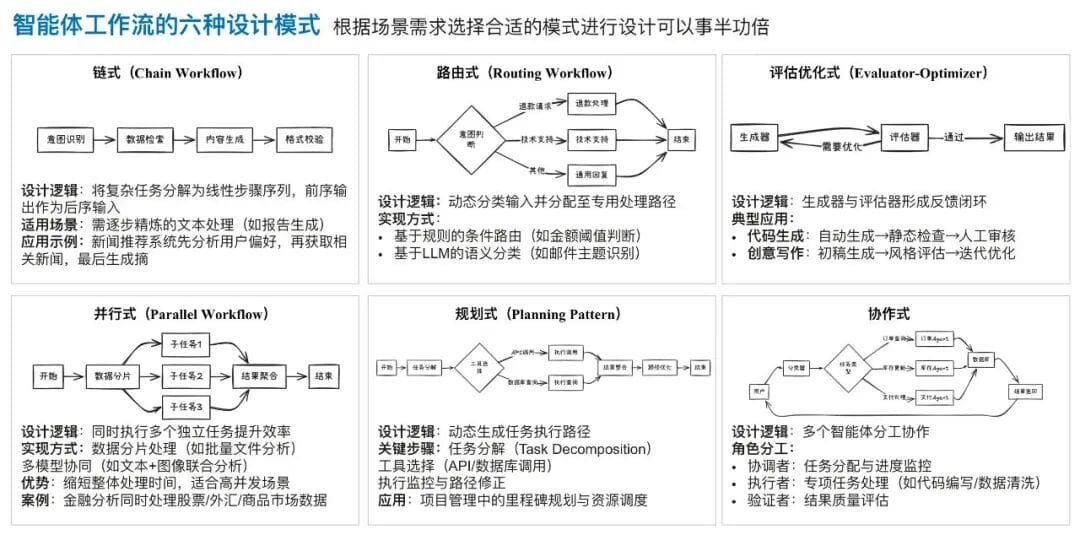

- **Process-Driven Intelligence** — focuses on **workflow orchestration**, where a **predefined process diagram** drives large models to accomplish tasks.

- **Agent-Centric Intelligence** — empowers a **single intelligent agent** with stronger cognition and decision-making abilities, enabling it to **autonomously explore and complete complex tasks**.

These paradigms sit at opposite ends of a spectrum that runs from **execution structure** to **perceptual capability**:

_a precisely controlled pipeline_ **vs.** _a cognition-driven expert._

Below, we compare the two approaches and explore how they can be integrated across **Plan & Reflection**, **Tool Use**, and **Memory**.

---

## Process-Driven Intelligence — The Art of Control

This approach carries traditional software engineering principles into the age of AI.

### How It Works

Before large pretrained models such as BERT and GPT, developers built applications from prototypes and flowcharts, implementing functionality step by step.

With large models, the flowchart remains: its steps become *nodes* (modules) in a process diagram, some of which now call an LLM, and the nodes are orchestrated to build smarter applications.

Here, the agent operates in a **pre-designed deterministic workflow** — every step and branch is fixed during development.

### Key Characteristics

Even if individual nodes produce unpredictable generative output, the **overall structure is constrained by the predefined process**:

**Advantages:**

- Easier to **reuse, test, and govern** applications

- Explicit steps allow unit and integration testing

- Full execution history provides **auditing and rollback**

**Implementation Features:**

- **Clear dependencies:** Explicit sequence — no skipping or reordering

- **Predictable state transitions:** Same input → same sequence of steps

- **Interrupt/resume support:** Pause on error, fix, resume

- **Execution history:** Full logs for auditing
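The implementation features above can be sketched as a small Python workflow runner. This is a minimal illustration under stated assumptions, not how Coze or any other platform works internally: `Workflow`, the step names, and the state-dict interface are all hypothetical. Steps run in a fixed order, each outcome is written to an execution log, and a failed step leaves a checkpoint so the run can resume at that step after a fix.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical node interface: each step takes the state dict and returns a new one.
Step = Callable[[dict], dict]


@dataclass
class Workflow:
    steps: list[tuple[str, Step]]                  # order is fixed at design time
    history: list = field(default_factory=list)    # execution log for auditing
    next_index: int = 0                            # resume point after an interruption
    checkpoint: dict = field(default_factory=dict)  # last successfully produced state

    def run(self, state: Optional[dict] = None) -> dict:
        if state is not None:
            self.checkpoint = dict(state)
        while self.next_index < len(self.steps):
            name, step = self.steps[self.next_index]
            try:
                self.checkpoint = step(self.checkpoint)
            except Exception as exc:
                # Pause on error: log it and keep next_index on the failed step.
                self.history.append(f"FAILED {name}: {exc}")
                raise
            self.history.append(f"OK {name}")
            self.next_index += 1
        return self.checkpoint


calls = {"classify": 0}


def parse(state):
    return {**state, "text": state["raw"].strip()}


def classify(state):
    # Stand-in for an LLM node; fails once to simulate a transient error.
    calls["classify"] += 1
    if calls["classify"] == 1:
        raise RuntimeError("timeout")
    return {**state, "label": "refund"}


def reply(state):
    return {**state, "reply": f"Routing to {state['label']}"}


wf = Workflow([("parse", parse), ("classify", classify), ("reply", reply)])
try:
    wf.run({"raw": "  I want a refund  "})
except RuntimeError:
    pass  # an operator fixes the problem, then resumes
result = wf.run()  # resumes at "classify"; "parse" is not re-executed
```

Because the resume point and checkpoint live on the workflow object, only the failed step is retried, and `wf.history` keeps the full record for auditing.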

**Example Products:**

Platforms like **Coze** provide drag-and-drop orchestration interfaces, integrating LLMs into precise business processes.

---

## Agent-Centric Intelligence — Autonomous Exploration

In contrast, this paradigm aims to make a **single agent as smart and autonomous as possible** — much like a human expert.

It avoids fixed workflows, focusing on perception, reasoning, and **self-directed execution**.

### Why It Matters

Such agents:

- Plan, execute, and deliver results independently

- Are suited for tasks with uncertainty and creativity

- Adapt and refine outputs through human–AI collaboration

**Example:** A *Deep Research* system — given an open question, it:

1. Searches for information

2. Iteratively reads and summarizes

3. Produces a structured research report
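The Deep Research loop can be sketched in a few lines of Python. The corpus, `search`, and `read_and_summarize` are hypothetical stubs standing in for a web search tool and an LLM; the point is the control flow, where what the agent reads decides which query it pursues next, rather than a predefined diagram.

```python
# Hypothetical in-memory corpus standing in for a web search tool.
CORPUS = {
    "agent paradigms": ["Workflows give control.", "See also: agent memory"],
    "agent memory": ["Memory enables long-term adaptation."],
}


def search(query: str) -> list:
    return CORPUS.get(query, [])


def read_and_summarize(docs: list) -> tuple:
    """Stand-in for an LLM call: keep facts and extract follow-up leads."""
    facts = [d for d in docs if not d.startswith("See also: ")]
    leads = [d.removeprefix("See also: ") for d in docs if d.startswith("See also: ")]
    return facts, leads


def deep_research(question: str, max_rounds: int = 5) -> str:
    queue, seen, notes = [question], set(), []
    for _ in range(max_rounds):
        if not queue:
            break  # nothing left to explore, so the agent stops on its own
        query = queue.pop(0)
        if query in seen:
            continue
        seen.add(query)
        facts, leads = read_and_summarize(search(query))  # 1. search, 2. read and summarize
        notes.extend(facts)
        queue.extend(leads)  # the plan grows from what was just read
    body = "\n".join(f"- {n}" for n in notes)
    return f"# Report: {question}\n{body}"  # 3. structured report


report = deep_research("agent paradigms")
print(report)
```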

---

## Combining Paradigms — Hybrid Designs

Many real applications blend:

- **Control** from process-driven intelligence

- **Flexibility** from agent-centric intelligence

The two paradigms can be integrated across:

- **Plan & Reflection**

- **Tool Use**

- **Memory**
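One common way to blend the two, sketched below under our own assumptions, is to keep the outer stages fixed and let an agent act freely inside one stage with a step budget. `hybrid_pipeline`, `bounded_agent`, and the toy policy are hypothetical; in practice the policy would be an LLM choosing actions.

```python
from typing import Callable, Optional

Policy = Callable[[str, list], Optional[str]]  # (goal, actions so far) -> next action or None


def bounded_agent(goal: str, propose: Policy, max_steps: int = 3) -> list:
    """Flexible part: the agent picks its own actions until it stops or hits the budget."""
    actions = []
    for _ in range(max_steps):
        action = propose(goal, actions)
        if action is None:
            break
        actions.append(action)
    return actions


def hybrid_pipeline(goal: str, propose: Policy) -> dict:
    """Controlled part: fixed stages that always run in the same order."""
    if not goal.strip():                      # stage 1: deterministic validation
        raise ValueError("empty goal")
    actions = bounded_agent(goal, propose)    # stage 2: autonomous exploration
    return {"goal": goal, "actions": actions, "steps_used": len(actions)}  # stage 3: fixed schema


def eager_policy(goal: str, done: list) -> Optional[str]:
    return f"search #{len(done) + 1}"  # toy policy that never stops on its own


result = hybrid_pipeline("compare agent paradigms", eager_policy)
```

The budget is enforced by the workflow, not by the agent, so even a policy that never stops yields a bounded, predictably shaped result.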

Platforms like [AiToEarn official website](https://aitoearn.ai/) exemplify this fusion with:

- AI creation

- Multi-platform publishing

- Analytics

- Model ranking ([GitHub](https://github.com/yikart/AiToEarn))

---

## Implementation Characteristics of Cognitive Agents

Cognition-driven agents function in a **state-driven** way:

- **Global Perspective:** Access full history and context

- **Incremental Optimization:** Refine decisions over time

- **Dynamic Strategy Adjustment:** Change plans in real time

- **Human-AI Feedback:** Incorporate user corrections
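A state-driven agent can be modeled as a loop that updates one shared state object. The sketch below is an illustrative assumption, not DeerFlow's design: `AgentState` and `step` are hypothetical names, and the plan and draft are plain strings where a real agent would call a model.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    goal: str
    plan: list                                   # remaining subtasks; may change at any step
    history: list = field(default_factory=list)  # global perspective: everything observed so far
    draft: str = ""                              # result refined incrementally


def step(state: AgentState, observation: str, feedback: str = "") -> AgentState:
    state.history.append(observation)
    if feedback:
        # Human-AI feedback: record the correction and adjust strategy by putting it first.
        state.history.append(f"user: {feedback}")
        state.plan.insert(0, f"address feedback: {feedback}")
    if state.plan:
        task = state.plan.pop(0)
        # Incremental optimization: extend the existing draft rather than restarting.
        state.draft += f"[{task}]"
    return state


s = AgentState(goal="write a report", plan=["outline", "write sections"])
step(s, "found 3 sources")
step(s, "outline looks thin", feedback="add citations")
```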

**Example Product:**

**DeerFlow** — aims for “model as product,” boosting perception and decision-making.

---

## Path to Integration — Hybrid Intelligence Architecture

Goal: **Complete tasks with high quality**

### Observations

- **P-class problems** (by analogy with complexity theory): controllable, with deterministic solution paths

- **NP-class problems:** vast, uncertain solution spaces

### Limitations of Linear Execution

- **Strength:** stable, reliable task completion

- **Weakness:** limited capability to explore alternatives

### Solution: Plan with a DAG (Directed Acyclic Graph)

- **Divergence:** Multiple directions in parallel

- **Convergence:** Merge and integrate results

**Example:** multi-channel retrieval in *RAG*: each retrieval channel is a branch, and the branches are merged in a convergence node.

**Challenge:** Multi-branch planning increases complexity, requiring strong decision-making.
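The divergence/convergence pattern can be sketched with Python's standard `graphlib.TopologicalSorter`, which releases every node whose predecessors are finished, so independent branches can run in parallel. The graph below is a hypothetical multi-channel RAG plan, and the retrievers are stubs returning fixed document IDs.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter

# Node -> set of predecessor nodes (hypothetical RAG plan).
RAG_DAG = {
    "rewrite": set(),
    "keyword": {"rewrite"},   # divergence: three independent retrieval channels
    "vector": {"rewrite"},
    "web": {"rewrite"},
    "merge": {"keyword", "vector", "web"},  # convergence node
}

# Stub results standing in for real retrievers.
STUB_HITS = {"keyword": ["doc1", "doc2"], "vector": ["doc2", "doc3"], "web": ["doc4"]}


def run_node(name: str, inputs: dict) -> list:
    if name == "rewrite":
        return ["rewritten query"]
    if name == "merge":
        merged = []
        for hits in inputs.values():  # deduplicate while keeping first-seen order
            for hit in hits:
                if hit not in merged:
                    merged.append(hit)
        return merged
    return STUB_HITS[name]


def execute(dag: dict) -> dict:
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    results = {}
    with ThreadPoolExecutor() as pool:
        while sorter.is_active():
            ready = sorted(sorter.get_ready())  # all ready nodes are independent
            futures = {
                node: pool.submit(run_node, node, {p: results[p] for p in sorted(dag[node])})
                for node in ready
            }
            for node, future in futures.items():
                results[node] = future.result()
                sorter.done(node)
    return results


results = execute(RAG_DAG)
print(results["merge"])
```

Each call to `get_ready()` returns one wave of the DAG: first `rewrite`, then the three retrieval channels together, then `merge`.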

---

**Baidu AI Search Paradigm:** uses four LLM-based agents (Master, Planner, Executor, Writer).

The Planner builds a task DAG from the user query ([Read paper](https://arxiv.org/pdf/2506.17188)).

---

## Integrated Blueprint — From Single Path to Dynamic DAG

### Plan & Reflection Core

- **Exec DAG (JSON):** Machine-executable node topology, with fallback paths and fault tolerance

- **Task Plan (Markdown/YAML):** Human-readable subtask breakdown
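The split between a machine-readable Exec DAG and a human-readable Task Plan can be sketched as follows. The JSON schema (`id`, `deps`, `fallback`, `retries`) is a hypothetical example, not a format defined by the source or by any framework; the code checks that dependencies exist and that the graph is acyclic, then renders the same plan as Markdown.

```python
import json
from graphlib import CycleError, TopologicalSorter

# Hypothetical Exec DAG schema: id, deps, fallback tool, retry budget for an executor.
EXEC_DAG_JSON = """
{"nodes": [
  {"id": "query_rewrite", "deps": [], "fallback": null, "retries": 0},
  {"id": "search", "deps": ["query_rewrite"], "fallback": "cached_search", "retries": 2},
  {"id": "summarize", "deps": ["search"], "fallback": null, "retries": 1}
]}
"""


def validate(dag: dict) -> list:
    """Check references and acyclicity; return a valid execution order."""
    nodes = {n["id"]: n for n in dag["nodes"]}
    for n in nodes.values():
        missing = [d for d in n["deps"] if d not in nodes]
        if missing:
            raise ValueError(f"{n['id']} depends on unknown nodes {missing}")
    graph = {nid: set(n["deps"]) for nid, n in nodes.items()}
    return list(TopologicalSorter(graph).static_order())  # raises CycleError on a cycle


def to_task_plan(dag: dict, order: list) -> str:
    """Render the machine-readable DAG as a human-readable Markdown Task Plan."""
    nodes = {n["id"]: n for n in dag["nodes"]}
    lines = ["## Task Plan"]
    for i, nid in enumerate(order, 1):
        fb = nodes[nid]["fallback"]
        lines.append(f"{i}. {nid}" + (f" (fallback: {fb})" if fb else ""))
    return "\n".join(lines)


dag = json.loads(EXEC_DAG_JSON)
order = validate(dag)
print(to_task_plan(dag, order))
```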

**Query Rewrite:**

- Clarify ambiguous input

- Structure queries for retrieval

- Often turns a generation problem for the LLM into a search problem
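A rule-based stand-in for query rewriting is sketched below. In a real system an LLM would perform the rewrite; `rewrite_query`, the stopword list, and the output fields are hypothetical. It shows both goals listed above: clarifying an ambiguous reference and turning free text into a structured query that a retriever can use.

```python
import re
from typing import Optional

STOPWORDS = {"the", "a", "an", "of", "in", "what", "is", "are", "how", "about", "me", "tell"}
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")


def rewrite_query(raw: str, last_topic: Optional[str] = None) -> dict:
    text = raw.lower().strip()
    # Clarify: resolve a dangling "it" using the topic of the previous turn.
    if last_topic:
        text = re.sub(r"\bit\b", last_topic.lower(), text)
    # Structure: move a year into a filter; keep content words as search keywords.
    match = YEAR.search(text)
    filters = {"year": int(match.group())} if match else {}
    words = re.findall(r"[a-z0-9-]+", YEAR.sub(" ", text))
    keywords = [w for w in words if w not in STOPWORDS]
    return {"keywords": keywords, "filters": filters}


print(rewrite_query("What is new about it in 2025?", last_topic="DeerFlow"))
```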

**Reflection:**

- When execution is blocked, revise the Exec DAG or Task Plan

- Prevent the agent from stalling at a single local node
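Reflection on blockage can be sketched as an executor that, on failure, rewrites the remaining plan instead of retrying the same node indefinitely. Here the "reflection" is a simple fallback table, an assumption standing in for an LLM that critiques the failure; `run_with_reflection` and the tool names are hypothetical.

```python
def run_with_reflection(plan: list, tools: dict, fallbacks: dict, max_revisions: int = 2):
    """Run plan steps in order; when a step is blocked, revise the plan instead of stalling."""
    plan, log, revisions, i = list(plan), [], 0, 0
    while i < len(plan):
        step = plan[i]
        try:
            log.append((step, tools[step]()))
            i += 1
        except Exception as exc:
            if revisions >= max_revisions or step not in fallbacks:
                raise RuntimeError(f"blocked at {step}: {exc}") from exc
            # Reflection (rule-based stand-in for an LLM critique): swap in an alternative.
            plan[i] = fallbacks[step]
            revisions += 1
            log.append((step, f"revised -> {plan[i]}"))
    return plan, log


def web_search():
    raise ConnectionError("search API unreachable")


tools = {"web_search": web_search, "local_search": lambda: "3 docs", "summarize": lambda: "summary"}
final_plan, log = run_with_reflection(["web_search", "summarize"], tools, {"web_search": "local_search"})
```

The revision budget keeps reflection itself from looping: once it is spent, the executor surfaces the blockage instead of hiding it.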

---

## Integrated Hands — Tool Use

### Execution Capability

From **prompt-driven calls** to **RL-based adaptive learning**:

- Tools connected via MCP (Model Context Protocol)

- RL training improves tool use over time

- Activate latent tool skills from pretraining
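To make the tool-calling side concrete, the sketch below dispatches a JSON-RPC 2.0 request shaped like MCP's `tools/call` message (a tool `name` plus `arguments`) to a local function. It is not the MCP SDK: the registry, `handle`, and `get_weather` are hypothetical, and a real server would also handle tool listing, transport, and input schemas.

```python
import json

TOOLS = {}


def tool(fn):
    """Register a function under its own name (hypothetical registry, not the MCP SDK)."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # stub; a real tool would call a weather service


def handle(message: str) -> str:
    """Dispatch a JSON-RPC 2.0 'tools/call' request to a registered tool."""
    req = json.loads(message)
    reply = {"jsonrpc": "2.0", "id": req.get("id")}
    if req.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    name = req["params"]["name"]
    args = req["params"].get("arguments", {})
    fn = TOOLS.get(name)
    if fn is None:
        text, is_error = f"Unknown tool: {name}", True  # reported as a tool-level error here
    else:
        text, is_error = fn(**args), False
    reply["result"] = {"content": [{"type": "text", "text": text}], "isError": is_error}
    return json.dumps(reply)


request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                      "params": {"name": "get_weather", "arguments": {"city": "Paris"}}})
print(handle(request))
```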

**Challenges:**

1. Data quality/distribution

2. Cold-start SFT data acquisition

---

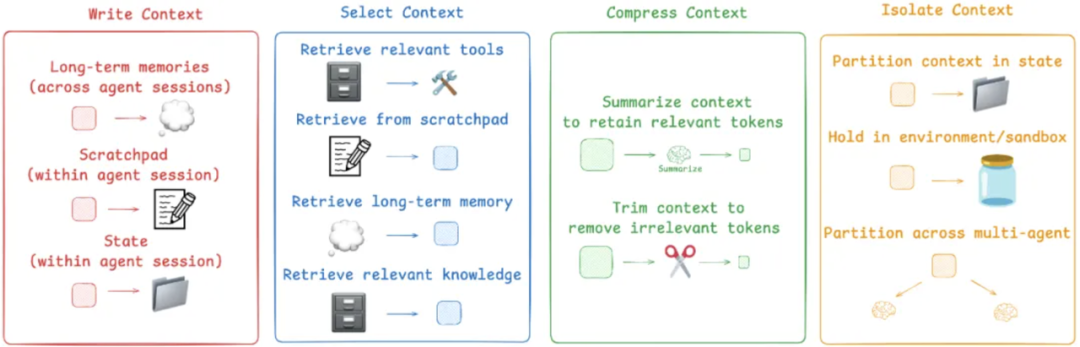

## Integrated Brain — Context Engineering & Memory

> "Most Agent failures are due to scheduling failures, not model limitations."

> "Context is All You Need"

**Causes of Failure:**

- Base model capability limits

- Context loss/decay

---

### Context Engineering Basics

Context engineering acts as the LLM's **memory manager**, much like an operating system's, through four operations:

- **Write**

- **Select**

- **Compress**

- **Isolate**

---

#### Write & Select

- Write context into a cache and memory system, then select only the relevant parts for each call

#### Compress

- Increase the information density of the context stream

- Avoid irreversible loss

#### Isolate

- Multiple sub-agents act as **intelligent filters**

- Reduce raw data into dense, usable summaries
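The four operations can be sketched as one small class plus a helper. This is a toy under stated assumptions: `select` uses word overlap in place of embedding search, `compress` truncates while keeping a `ref` pointer back to the full text so the loss is reversible, and `isolate` hands each raw document to a separate sub-agent (here any summarizing function). All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContextManager:
    store: list = field(default_factory=list)  # Write target: memory kept outside the prompt

    def write(self, item: str) -> None:
        self.store.append(item)

    def select(self, query: str, k: int = 2) -> list:
        """Return the k stored items sharing the most words with the query."""
        q = set(query.lower().split())
        # sorted() is stable even with reverse=True, so ties keep insertion order
        return sorted(self.store, key=lambda s: len(q & set(s.lower().split())), reverse=True)[:k]

    def compress(self, items: list, max_chars: int = 20) -> list:
        """Truncate long items but keep a reference to the full text in the store."""
        out = []
        for item in items:
            short = item if len(item) <= max_chars else item[: max_chars - 3] + "..."
            out.append(f"{short} [ref:{self.store.index(item)}]")
        return out


def isolate(raw_docs: list, sub_agent: Callable[[str], str]) -> list:
    """Each sub-agent sees only one raw document and returns a dense summary."""
    return [sub_agent(doc) for doc in raw_docs]


cm = ContextManager()
for note in ["user prefers Python examples", "meeting notes from Monday", "Python agent uses MCP tools"]:
    cm.write(note)
selected = cm.select("python agent tools")
context = cm.compress(selected)
summaries = isolate(["Long log A ...", "Long log B ..."], lambda doc: doc[:10])
```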

---

## Memory Mechanism

Memory enables progression from **instant response** to **continuous evolution**:

- **Retrieval Memory** — RAG for external knowledge

- **General Memory** — Stored knowledge from training

- **Rule Memory** — Output constraints

- **Short-term Memory** — Session responsiveness

- **Long-term Memory** — Persistent user profiles
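The memory types above can be sketched as fields of one container. General memory lives in the model's weights, so it has no field here; the other four are hypothetical stand-ins: a bounded deque for short-term memory, a dict for the long-term profile, predicate functions for rules, and a keyword lookup in place of RAG.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Memory:
    # General memory lives in the model weights and is not modeled here.
    short_term: deque = field(default_factory=lambda: deque(maxlen=3))  # recent turns of this session
    long_term: dict = field(default_factory=dict)    # persistent user profile
    rules: list[Callable[[str], bool]] = field(default_factory=list)  # output constraints
    knowledge: dict = field(default_factory=dict)    # retrieval memory: keyword -> fact

    def remember_turn(self, turn: str) -> None:
        self.short_term.append(turn)  # the oldest turn drops out once maxlen is reached

    def build_context(self, query: str) -> dict:
        facts = [fact for key, fact in self.knowledge.items() if key in query.lower()]
        return {"profile": dict(self.long_term), "recent": list(self.short_term), "facts": facts}

    def check(self, output: str) -> bool:
        return all(rule(output) for rule in self.rules)


mem = Memory(long_term={"language": "Python"}, knowledge={"mcp": "MCP connects models to external tools."})
mem.rules.append(lambda out: len(out) <= 80)
for turn in ["hi", "what is an agent?", "and memory?", "how does MCP work?"]:
    mem.remember_turn(turn)
ctx = mem.build_context("How does MCP work?")
```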

---

## Future Outlook — Agent-Driven Application Forms

### AI Browser

From “search + Q&A” → immersive, interactive browsing

### ChatBot

Beyond text → multimodal, interactive experiences

### Workflow Automation

Enterprise tasks: transcription, summarization, analysis

### Personal Assistant

Highly personalized, proactive reminders

### Domain-specific Agent

Specialized expertise in high-value fields

---

## Conclusion

Agents will increasingly require:

- **Deterministic workflow integration**

- **Adaptive cognitive capabilities**

Platforms like [AiToEarn official website](https://aitoearn.ai/) connect content creation, multi-platform delivery, and monetization, in line with agents evolving into proactive collaborators that can generate revenue.

Feedback and discussion are welcome.