# **Context Engineering — The Bricklayers of the AI Era**

## Introduction

In **June 2025**, Shopify CEO **Tobi Lütke** and AI researcher **Andrej Karpathy** popularized a new term on X: *Context Engineering*.

Karpathy described it as *"the delicate art and science of filling the context window with just the right information for the next step."*

**Key questions addressed in this article:**

1. What exactly is context engineering?

2. What are its basic building blocks?

3. How will it evolve in the future?

The discussion goes beyond *Prompt Engineering* and explores its connection to technologies like **RAG** (Retrieval-Augmented Generation) and **MCP** (Model Context Protocol). It is based on the paper *Context Engineering 2.0: The Context of Context Engineering*, published by **Shanghai Jiao Tong University** and **GAIR Laboratory**.

---

## 01 — What is Context Engineering?

### **An old discipline of entropy reduction**

**Why is human–machine communication challenging?**

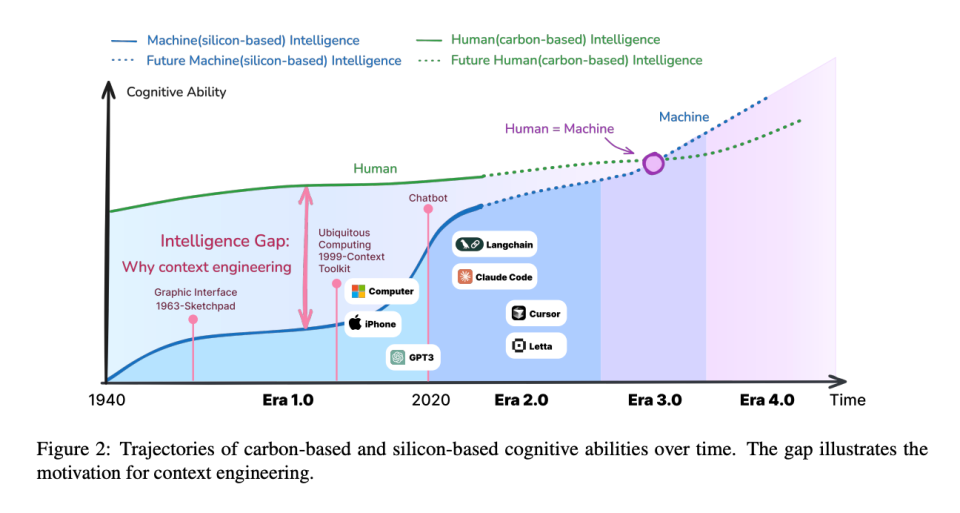

The paper points to a **cognitive gap**:

- **Humans** use **high-entropy** communication — ambiguous, nuanced, rich in implicit cues.

- **Machines** operate on **low-entropy** explicit instructions — structured and unambiguous.

**Bridging the gap** requires transforming high-entropy human intent into low-entropy, machine-readable form — creating richer and more effective **context**.

> **Essence:** Context engineering is a systematic process of **entropy reduction** through improved context design, collection, management, and application.
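One way to make the entropy intuition concrete is to treat a request as a probability distribution over possible interpretations and compute its Shannon entropy. The sketch below is only an illustration under that assumption; the two distributions are hypothetical numbers, not data from the paper.

```python
import math

def shannon_entropy(probs):
    """Shannon entropy, in bits, of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical: a vague request ("make it better") with four equally likely readings.
vague = [0.25, 0.25, 0.25, 0.25]

# Hypothetical: the same request once context makes one reading dominant.
with_context = [0.97, 0.01, 0.01, 0.01]

h_vague = shannon_entropy(vague)
h_context = shannon_entropy(with_context)

print(f"without context: {h_vague:.2f} bits")
print(f"with context:    {h_context:.2f} bits")
```

In this toy setting, added context concentrates probability on one interpretation, which is what "entropy reduction" means here.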

---

### **The Evolution of Context Engineering**

#### 1.0 Era (1990s–2020) — *Context as Translation*

- Early UI/UX design and programming languages translated natural human intent into machine instructions.

- Graphical User Interfaces (GUIs) were key in making interaction possible via structured visual elements.

- Limitation: Users had to conform to machine thinking.

#### 2.0 Era (2020–present) — *Context as Instruction*

- GPT‑3 allowed direct natural language interaction — eliminating the classical “translation” step.

- Prompt Engineering emerged to help users craft precise machine-friendly instructions.

- Context Engineering now focuses on **scaffolding models** to better interpret human intentions.

---


## 02 — Why the Human–AI Gap Persists

Research identifies **four key deficiencies** in AI, comprising eight specific weaknesses:

1. **Incomplete sensory capabilities** — AI cannot perceive non-textual environmental cues.

2. **Limited comprehension** — struggles with complex, relational, multimodal data.

3. **Memory deficiency** — lacks robust long-term memory, limiting context retention.

4. **Scattered attention** — difficulty in selecting and focusing on relevant context.

**Prompt Engineering** historically mitigated these issues but was labor-intensive.

**Context Engineering** aims to build systems that *extend AI’s perception, comprehension, memory, and attention capacity* — enabling true **Digital Presence**.

---

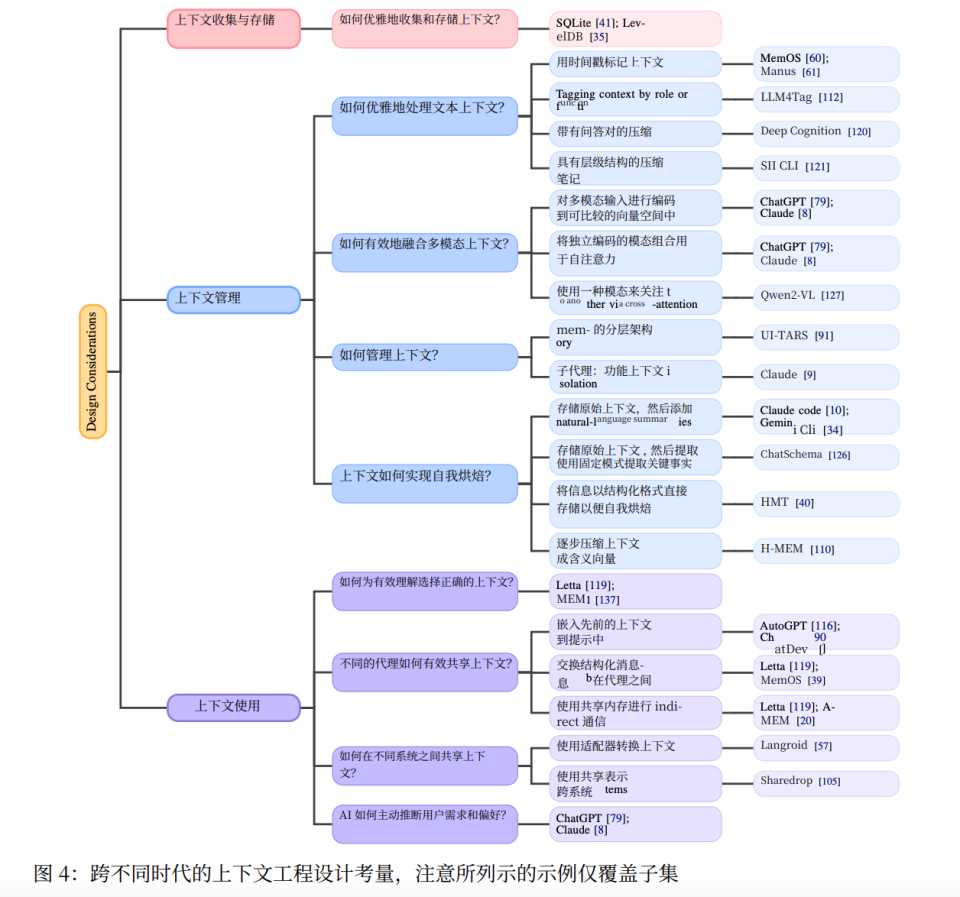

## 03 — Context Engineering Framework

The research proposes a **three-phase framework**:

1. **Collection**

2. **Management**

3. **Application**

### **Module 1: Collection & Memory Systems**

**Goals:** Address incomplete input and memory loss.

- **Multimodal fusion** — unify text, images, audio in a shared vector space.

- **Distributed collection** — gather high-entropy data from IoT sensors, wearables, and smartphones.

**Memory Layers:**

- Short-term memory → Context window

- Long-term memory → Persistent storage

- Transfer mechanism → Move valuable short-term data to long-term memory
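The memory layers above can be sketched as a small two-tier store. This is a minimal illustration, not the paper's design: the `LayeredMemory` class, the `importance` score, and the `promote_threshold` rule are all hypothetical choices made for the example.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class MemoryItem:
    text: str
    importance: float  # hypothetical relevance score in [0, 1]

@dataclass
class LayeredMemory:
    window_size: int = 3            # capacity of the short-term "context window"
    promote_threshold: float = 0.7  # assumed cutoff for keeping an item long-term
    short_term: deque = field(default_factory=deque)
    long_term: list = field(default_factory=list)

    def add(self, item: MemoryItem) -> None:
        """Append to short-term memory; on overflow, evict the oldest item
        and promote it to long-term memory only if it is important enough."""
        self.short_term.append(item)
        while len(self.short_term) > self.window_size:
            evicted = self.short_term.popleft()
            if evicted.importance >= self.promote_threshold:
                self.long_term.append(evicted)

memory = LayeredMemory(window_size=2)
memory.add(MemoryItem("User prefers metric units", 0.9))
memory.add(MemoryItem("Said hello", 0.1))
memory.add(MemoryItem("Asked about the weather", 0.4))
memory.add(MemoryItem("Deadline is Friday", 0.8))

print([item.text for item in memory.short_term])
print([item.text for item in memory.long_term])
```

Here the high-importance preference survives eviction by moving to long-term storage, while the greeting is simply dropped. A real system would likely persist `long_term` to a database or vector store rather than a list.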

---

### **Module 2: Context Management**

**Goal:** Enhance comprehension and reduce structural complexity.

- **Context abstraction** (*Self-Baking*) — actively transform raw, high-entropy data into structured, low-entropy formats.

Methods include:

- Natural language summarization

- Pattern-based extraction into **knowledge graphs**

- Online distillation into compressed vector knowledge
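As a rough sketch of the second method, pattern-based extraction can turn free text into subject-relation-object triples and group them into a small graph. The relation list, the regular expression, and the sample notes are assumptions chosen for illustration; production systems typically use far more robust extraction.

```python
import re
from collections import defaultdict

# Hypothetical pattern: "<subject> <relation> <object>" for a fixed set of relations.
TRIPLE_PATTERN = re.compile(
    r"(?P<subject>\w+) (?P<relation>works at|lives in|manages) (?P<object>\w+)"
)

def extract_triples(text):
    """Return (subject, relation, object) tuples found in the text."""
    return [
        (m["subject"], m["relation"], m["object"])
        for m in TRIPLE_PATTERN.finditer(text)
    ]

def build_graph(triples):
    """Group triples into an adjacency mapping: subject -> [(relation, object)]."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return dict(graph)

notes = "Alice works at Acme. Alice lives in Berlin. Bob manages Alice."
graph = build_graph(extract_triples(notes))
print(graph)
```

The unstructured notes become a structure that can be queried directly (for example, everything known about `Alice`), which is the sense in which abstraction lowers entropy.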

---

### **Module 3: Context Usage**

**Goal:** Optimize attention through **efficient selection** mechanisms:

- **Logical dependency understanding** — beyond pure semantic similarity.

- **Recency & frequency prioritization** — give weight to recently or frequently used context.

- **Proactive demand inference** — predict user needs before they are expressed.
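Recency and frequency prioritization can be sketched as a simple scoring function. The formula below (exponential recency decay multiplied by log-scaled use count) and the one-hour half-life are assumptions made for this example; the paper does not prescribe a specific weighting.

```python
import math
from dataclasses import dataclass

@dataclass
class ContextEntry:
    text: str
    last_used: float  # timestamp in seconds
    use_count: int

def priority(entry, now, half_life=3600.0):
    """Assumed score: recency halves every `half_life` seconds,
    and frequency grows logarithmically with use count."""
    age = max(0.0, now - entry.last_used)
    recency = 0.5 ** (age / half_life)
    frequency = math.log1p(entry.use_count)
    return recency * frequency

def select_context(entries, now, k=2):
    """Return the k highest-priority entries, best first."""
    return sorted(entries, key=lambda e: priority(e, now), reverse=True)[:k]

now = 1_000_000.0
entries = [
    ContextEntry("API key rotation policy", last_used=now - 60, use_count=1),
    ContextEntry("Project coding style", last_used=now - 7200, use_count=20),
    ContextEntry("Old meeting notes", last_used=now - 86400, use_count=3),
]

top = select_context(entries, now)
print([entry.text for entry in top])
```

In this toy data, a frequently used entry from two hours ago still outranks a barely used entry from a minute ago, while day-old notes fall out of the selection. Logical dependency and demand inference would need additional signals beyond this score.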

---

## Closing the Loop

By integrating **collection**, **management**, and **application**, context engineering directly addresses AI’s weaknesses in *perception, comprehension, memory,* and *attention*.

The work of supplying context shifts from the user, who hand-crafts prompts, to the system, which gathers and organizes context itself. This makes AI interaction richer and more natural.

---

## 04 — The Future: Context 3.0 & 4.0

### **Context Engineering 3.0** — *Human-level AI*

- AI natively understands emotions, hints, and multimodal context.

- Long-term memory remains a limitation.

### **Context Engineering 4.0** — *Superhuman AI*

- Eliminates communication entropy entirely — AI anticipates needs without explicit input.

- Context engineering becomes **invisible infrastructure** integrated into model architecture.

> This mirrors the evolution of the **attention mechanism** — from external add-on to core Transformer architecture.

---


**Further exploration:**

- [Open in WeChat](https://wechat2rss.bestblogs.dev/link-proxy/?k=f4195b27&r=1&u=https%3A%2F%2Fmp.weixin.qq.com%2Fs%3F__biz%3DMjc1NjM3MjY2MA%3D%3D%26mid%3D2691561823%26idx%3D1%26sn%3D40c47affb8b5b33c5f46a82086490172)

---

**Final Thought:**

The ultimate form of context engineering is **invisible infrastructure** — deeply embedded into AI systems, no longer a separate discipline, but essential to everyday AI use.