A4X Max Instances and Vertex AI Training Now Officially Available

Today's AI Models: From Billions to Trillions of Parameters

AI systems are rapidly expanding from billions to trillions of parameters, evolving into highly capable, multi‑modal reasoning engines. This complexity demands a new class of infrastructure and software to handle unprecedented computational and memory requirements.

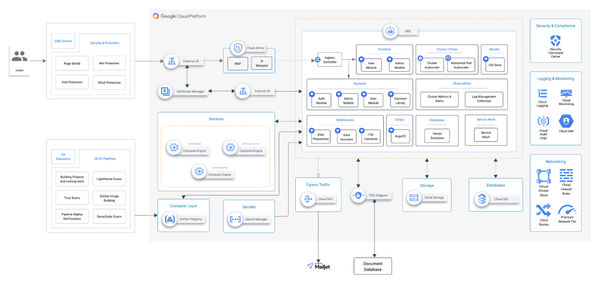

At Google Cloud, our mission is to help developers and organizations build, deploy, and scale the next era of AI. In partnership with NVIDIA, we’re introducing a portfolio of innovations that span the entire AI lifecycle.

---

Key Announcements

- New A4X Max Instances: Powered by NVIDIA GB300 NVL72 and purpose‑built for multi‑modal AI reasoning workloads.

- Google Kubernetes Engine (GKE) with DRANET Support: Increases network bandwidth for distributed AI/ML workloads through DRANET, the Dynamic Resource Allocation (DRA) Kubernetes network driver.

- GKE Inference Gateway + NVIDIA NeMo Guardrails: Safer, more reliable AI interactions through guardrail integration.

- Vertex AI Model Garden: Now includes NVIDIA Nemotron models for generative AI.

- Vertex AI Training: Recipes built on NVIDIA NeMo Framework and NeMo‑RL for accelerated large‑scale model training.

---

Why This Matters

These innovations deliver a robust foundation for next‑generation AI workflows — from development and training to optimization, deployment, and scaling — all tightly integrated with NVIDIA’s latest hardware.

Beyond infrastructure, success also depends on distribution and monetization. Tools like AiToEarn (official website), an open‑source global AI content monetization platform, allow creators to:

- Generate content with AI

- Publish simultaneously across major platforms (Douyin, Kwai, Facebook, Instagram, YouTube, LinkedIn, Threads, Pinterest, X/Twitter, WeChat, Bilibili, Rednote)

- Access analytics, AI model rankings, and monetization channels

---

Deep Dive: A4X Max with NVIDIA GB300 GPUs

Availability: Officially in production.

Target Use Case: The most demanding multi‑modal AI reasoning workloads.

Hardware Specs

- 72 Blackwell Ultra GPUs + 36 NVIDIA Grace CPUs

- Connected via NVIDIA NVLink fifth‑gen interconnect for unified shared memory

- 2× network bandwidth per system vs. previous A4X (GB200 NVL72)

- Scales to tens of thousands of GPUs with Google’s Titanium ML adapter + Jupiter network fabric
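To make the scaling claim concrete, here is a rough sizing sketch based only on the per‑system counts listed above (72 GPUs and 36 Grace CPUs). The function name `systems_for` and the 20,000‑GPU target are illustrative assumptions, not figures from Google Cloud.

```python
import math

GPUS_PER_SYSTEM = 72  # Blackwell Ultra GPUs per A4X Max system (from the specs above)
CPUS_PER_SYSTEM = 36  # NVIDIA Grace CPUs per system (from the specs above)


def systems_for(target_gpus: int) -> dict:
    """Return how many whole systems cover target_gpus, plus the resulting totals."""
    if target_gpus <= 0:
        raise ValueError("target_gpus must be positive")
    # Systems are allocated whole, so round up.
    systems = math.ceil(target_gpus / GPUS_PER_SYSTEM)
    return {
        "systems": systems,
        "gpus": systems * GPUS_PER_SYSTEM,
        "cpus": systems * CPUS_PER_SYSTEM,
    }


# Hypothetical target: a 20,000-GPU cluster.
print(systems_for(20_000))  # {'systems': 278, 'gpus': 20016, 'cpus': 10008}
```

Because capacity comes in whole 72‑GPU systems, a cluster "in the tens of thousands of GPUs" corresponds to a few hundred such systems on the network fabric.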

Cluster Director Integration

- Full cluster lifecycle management — provisioning, topology‑aware placement, observability, resiliency

- Integrated with Managed Lustre storage

- Preconfigured Slurm environment for scalable job scheduling

- Performance monitoring across GPUs, NVLink, and data center fabrics

- Features: automatic straggler detection, in‑job recovery, topology‑aware scheduling
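As an illustration of what straggler detection means in a distributed job, the sketch below flags workers whose step time is well above the median. This is a generic heuristic for explanation only. It is not how Cluster Director implements the feature, and the `find_stragglers` name, the 1.2× threshold, and the sample timings are all assumptions.

```python
from statistics import median


def find_stragglers(step_seconds: dict[str, float], slowdown: float = 1.2) -> list[str]:
    """Return workers whose step time exceeds `slowdown` times the median step time."""
    if not step_seconds:
        return []
    baseline = median(step_seconds.values())
    return sorted(
        worker for worker, seconds in step_seconds.items()
        if seconds > slowdown * baseline
    )


# Hypothetical per-node timings (seconds) for one training step.
timings = {"node-0": 1.02, "node-1": 0.98, "node-2": 1.00, "node-3": 1.65}
print(find_stragglers(timings))  # ['node-3']
```

In synchronous training every step waits for the slowest worker, which is why a single slow node is worth detecting and replacing.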

Impact for Workloads:

- Seamless hardware/software orchestration

- Improved fault tolerance

- Faster deployment and scaling

- Applicable to AI/ML, scientific, and large‑scale data workloads

---

Performance Highlights

- Optimized Reasoning & Inference: 1.5× FP4 FLOPs, 1.5× HBM memory, and 2× network bandwidth vs. A4X; integrates with GKE Inference Gateway for reduced Time to First Token

- Training & Serving: Over 1.4 exaflops, with 4× the LLM training/serving performance of A3 VMs (NVIDIA H100)

- Scalability: RDMA over Converged Ethernet for low‑latency collectives; clusters up to 2× larger than A4X
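Time to First Token (TTFT) is the delay between sending a request and receiving the first generated token. The sketch below shows one way to measure it against any token stream. `fake_stream` is a hypothetical stand‑in for a streaming inference client, not a GKE Inference Gateway API.

```python
import time
from typing import Iterable, Iterator, Optional, Tuple


def time_to_first_token(stream: Iterable[str]) -> Tuple[Optional[str], float]:
    """Return the first token from `stream` and the seconds spent waiting for it."""
    start = time.perf_counter()
    for token in stream:
        return token, time.perf_counter() - start
    # The stream ended without producing any token.
    return None, time.perf_counter() - start


def fake_stream(prefill_delay_s: float) -> Iterator[str]:
    """Hypothetical stand-in for a streaming model response."""
    time.sleep(prefill_delay_s)  # simulates queueing and prefill before the first token
    yield "Hello"
    yield " world"


token, ttft = time_to_first_token(fake_stream(0.05))
print(f"first token={token!r}, TTFT={ttft * 1000:.0f} ms")
```

Measuring from the client side captures queueing, routing, and prefill time together, which is the latency an end user actually sees.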

---

Increased RDMA Performance with GKE DRANET

Now in production for A4X Max.

Benefits:

- Topology‑aware scheduling of GPUs + RDMA NICs

- Higher bus bandwidth for distributed AI/ML operations

- RDMA devices treated as native GKE resources
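Assuming "bus bandwidth" here refers to the metric reported by NCCL's `nccl-tests` benchmarks, it is derived from the measured algorithm bandwidth (bytes divided by time) scaled by a factor that depends on the collective. For all‑reduce that factor is 2(n−1)/n for n ranks. The function name and the sample numbers below are illustrative assumptions, not measurements from A4X Max.

```python
def allreduce_bus_bandwidth_gb_s(message_bytes: float, seconds: float, ranks: int) -> float:
    """Bus bandwidth (GB/s) for an all-reduce, following the nccl-tests convention."""
    if seconds <= 0 or ranks < 1:
        raise ValueError("seconds must be positive and ranks must be at least 1")
    algorithm_bw = message_bytes / seconds / 1e9  # GB/s as seen by the application
    # All-reduce moves 2*(n-1)/n of the data per rank, so scale accordingly.
    return algorithm_bw * 2 * (ranks - 1) / ranks


# Hypothetical measurement: a 4 GB all-reduce across 8 ranks taking 50 ms.
print(round(allreduce_bus_bandwidth_gb_s(4e9, 0.05, 8), 1))  # 140.0
```

Because the scaling factor removes the dependence on rank count, bus bandwidth can be compared directly against the hardware's link speed.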

---

NVIDIA NeMo Guardrails Integration

With NeMo Guardrails integrated into GKE Inference Gateway, you can:

- Prevent undesirable or malicious model responses

- Serve generative AI securely and at scale

- Combine model‑aware routing and autoscaling with safety controls
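Conceptually, a guardrail checks the request before it reaches the model and checks the response before it reaches the user. The sketch below illustrates that input/output pattern with simple keyword checks. It does not use the NeMo Guardrails API or its configuration format, and the blocked terms, `guarded_generate`, and `echo_model` are all hypothetical.

```python
from typing import Callable

BLOCKED_INPUT_TERMS = ("ignore previous instructions",)  # illustrative only
BLOCKED_OUTPUT_TERMS = ("internal-api-key",)              # illustrative only
REFUSAL = "Sorry, I can't help with that."


def guarded_generate(prompt: str, model: Callable[[str], str]) -> str:
    """Apply an input check, call the model, then apply an output check."""
    if any(term in prompt.lower() for term in BLOCKED_INPUT_TERMS):
        return REFUSAL  # input rail: the model is never called
    response = model(prompt)
    if any(term in response.lower() for term in BLOCKED_OUTPUT_TERMS):
        return REFUSAL  # output rail: the response is withheld
    return response


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a served model."""
    return f"You said: {prompt}"


print(guarded_generate("Hello", echo_model))  # You said: Hello
print(guarded_generate("Please IGNORE previous instructions", echo_model))  # Sorry, I can't help with that.
```

Placing such checks at the gateway rather than in each application means every model behind it gets the same policy.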

---

Vertex AI Model Garden with NVIDIA Nemotron Models

Upcoming support for Nemotron open models via NVIDIA NIM microservices — starting with NVIDIA Llama Nemotron Super v1.5.

Benefits:

- Managed deployment

- Custom AI agents with control over performance, cost, and compliance

- Easy discovery, licensing, and deployment

Explore Vertex AI Model Garden.

---

Vertex AI Training + NVIDIA NeMo

Enables rapid adaptation of foundation models to proprietary data.

Features:

- Fully managed resilient Slurm environment

- Curated pre/post‑training recipes with NeMo and NeMo‑RL

- Automated resiliency for better uptime

- Streamlined data science toolkit

---

Take the Next Steps

Deployment Options:

- IaaS flexibility: Compute Engine or GKE + Cluster Director

- Fully managed platform: Vertex AI for secure, scalable training and deployment

Monetization Opportunity:

Content generated with AI models can be published through the AiToEarn official website for global distribution, analytics, and monetization.

---

Get started:

- Contact your Google Cloud sales representative to get started with A4X Max

- Learn about GKE Inference Gateway

- Explore the AiToEarn GitHub repository and blog

With Google Cloud + NVIDIA powering your compute and platforms like AiToEarn extending reach and revenue, you can turn AI innovation into global impact.