Absurd: A Billion-Dollar Unicorn That Started with Humans Pretending to Be AI

Absurd Business Validation Strategy — How Fireflies.ai Started by Pretending to Be AI

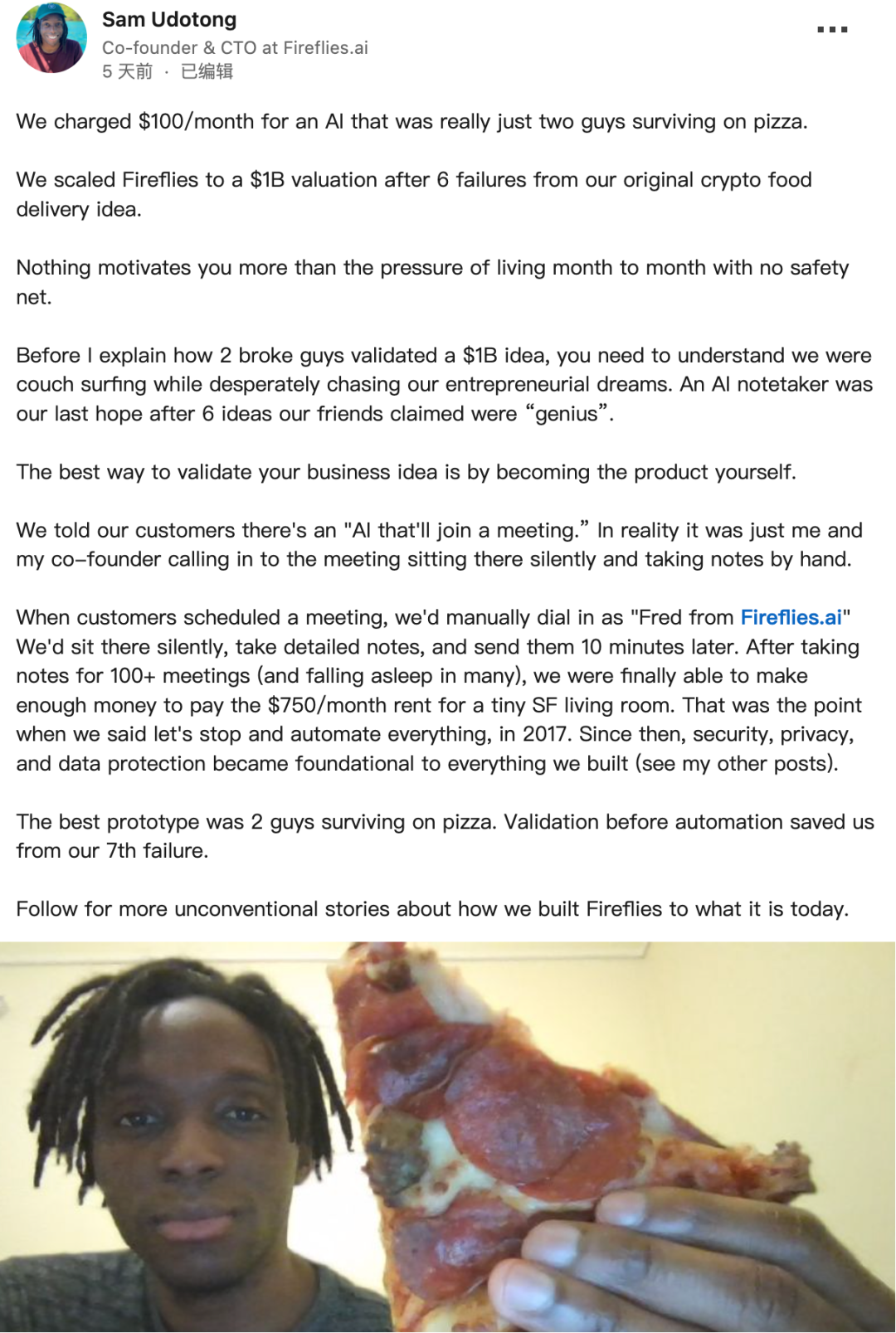

Background: Six Startup Failures

In 2017, after six consecutive startup failures — from “cryptocurrency-powered food delivery” to other wild ventures — two entrepreneurs found themselves living in a friend’s home, surviving mostly on pizza.

Their new idea: build an AI assistant that automatically takes meeting notes.

The problem?

They didn’t have any AI at all.

---

The “Be the Product” Experiment

Believing the fastest way to test a business idea was to act as the product themselves, the founders took an unusual path:

- Pitch the AI

  - Told clients: “We have an AI that automatically joins meetings and takes notes.”

- Human Impersonation of AI

  - When meetings were scheduled, they manually dialed in.

  - Pretended to be “Fred from Fireflies.ai.”

  - Stayed silent and listened carefully.

  - Took notes manually.

  - Sent transcripts within 10 minutes of the meeting ending.

The Result

- Recorded over 100 meetings manually.

- Earned enough to cover $750/month rent for a tiny San Francisco living room.

- Only after proving demand did they begin automating the process.

This unusual MVP experiment is the real founding story of Fireflies.ai, now a billion-dollar AI unicorn, as co‑founder Sam Udotong shared on LinkedIn.

---

Fireflies.ai Today

From a human-powered “AI” to a real AI platform, Fireflies now offers:

- 95% transcription accuracy

- Support for 69 languages

- Intelligent summaries

- Key point extraction

- Workflow automation with other tools

- Strong security & privacy controls

In June 2024:

- Valuation exceeded $1 billion (official unicorn status)

- 500,000+ organizations served

- 20M+ users worldwide

- Profitable since 2023

- Achieved growth with minimal marketing spend

---

Ethical & Privacy Concerns

Despite the company's commercial success, its early practice of passing off human labor as AI sparked controversy.

Key Points of Debate

- Misrepresentation

  - Claimed an “AI” would join meetings, but humans actually did the work.

  - This is deception, not informed consent.

- Privacy Violations

  - Real people listened in on private meetings without the attendees' knowledge.

  - Potentially illegal in some jurisdictions.

- Data Security Risks

  - Raises questions about how recorded data was handled.

  - Could undermine client trust long‑term.

- Cultural Signal

  - Normalizing deception could damage company culture.

Many online comments were strongly critical; one simply read: “See you in court!”

---

Lessons for Startups

Even though Fireflies.ai’s founders succeeded, this method carries ethical and legal risks and is not recommended.

---

Broader Takeaway

Stories like this show that bold MVP experiments can lead to breakthroughs — but also demonstrate why startups must ensure ethical design and transparent communication from day one.

Today, creators can validate ideas quickly without deception, using open, transparent AI platforms.

Example: AiToEarn (Open-Source AI Content Platform)

AiToEarn (official site) helps teams:

- Generate AI content

- Publish across Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter)

- Track analytics & model rankings

- Monetize across multiple channels

- All with full transparency and scalable workflows.

---

Contact for Reprint Authorization:

liyazhou@jiqizhixin.com

---

Final Note:

Early AI startups sometimes relied on human labor behind the scenes, blurring the line between automation and manual intervention. Modern open-source ecosystems now make it possible to experiment rapidly while staying ethical, scalable, and trustworthy.