Agent Breakthrough: A Complete Guide to the Core Agent Development Workflow

Contents

- Agent Overview

- Core Evolution and Key Modules of Agents

- Building an Agent Based on a Large Model (Developer’s Perspective)

- Evaluating an Agent

---

Introduction: From Data to Decision‑Making

When data can think and code can make autonomous decisions, we shift from ordinary workflows to Agentic AI. But how does a fully autonomous data analyst come into being?

After two years of iteration and research, refining the architecture and validating it in practice, we have distilled a core blueprint for Agent development.

This guide walks you through the Agent’s “mind” and “body”: planning, memory, tool orchestration, context engineering — showing how to gradually construct an entity that truly thinks and acts.

Whether you are simply curious, learning the field, or building Agents yourself, you'll find architectural insights and actionable practices rooted in real-world projects.

> 📝 Every section is distilled from actual project experience — autonomy in AI is no longer a distant ideal.

---

A Fully Autonomous Agentic AI Data Analyst — Possible?

Yes.

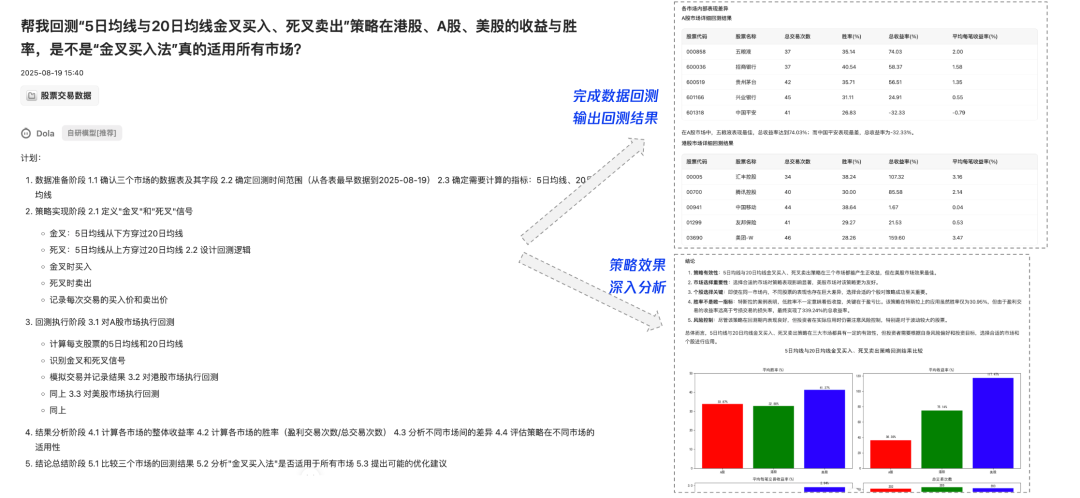

I have worked on AI Agent product development for more than two years — from the OlaChat web assistant, to a coding copilot, to a data analysis Agent called Dola.

Dola (by Tencent PCG Big Data Platform) is a new‑generation, Agent‑powered data analysis assistant. You provide a data table — Dola acts as your dedicated AI analyst.

What Dola Can Do:

- Autonomous complex analysis: anomaly attribution, persona comparison, stock/fund back‑testing, housing price prediction.

- SQL automation: write SQL from scratch, fix errors, execute queries.

- Python data processing and visualization.

- Generate complete reports — no coding needed; you interact in natural language.

This means Dola can autonomously take on real analyst and operations tasks.

---

01 — Agent Overview

1.1 What is an Agent?

An Agent is an intelligent entity capable of:

- Perceiving its environment (e.g., multimodal input like vision or voice).

- Autonomous decision‑making (via deep learning / reinforcement learning).

- Executing tasks (via APIs, tools, or physical devices).

- Continuous learning and evolution (adaptation over time).

---

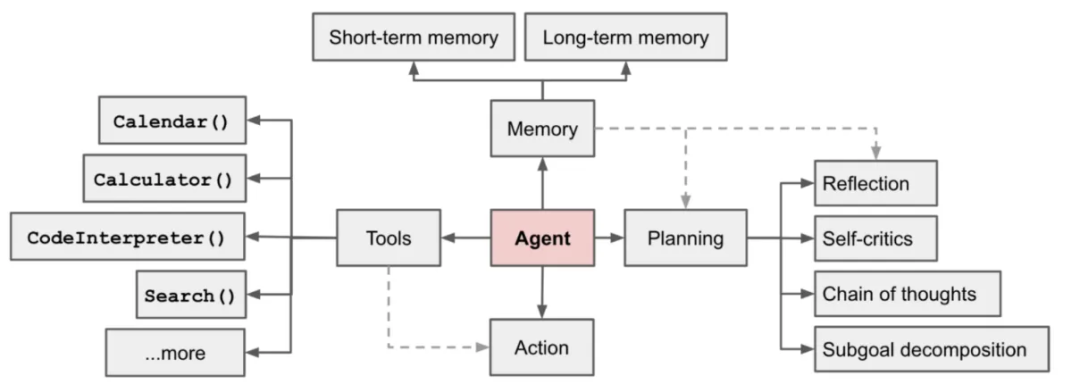

1.2 Basic Framework

Early AI Agent architectures, like the one in the figure above, have since evolved into modern Agentic AI designs, which add:

- Memory modules

- Advanced context management

- Autonomous tool use

---


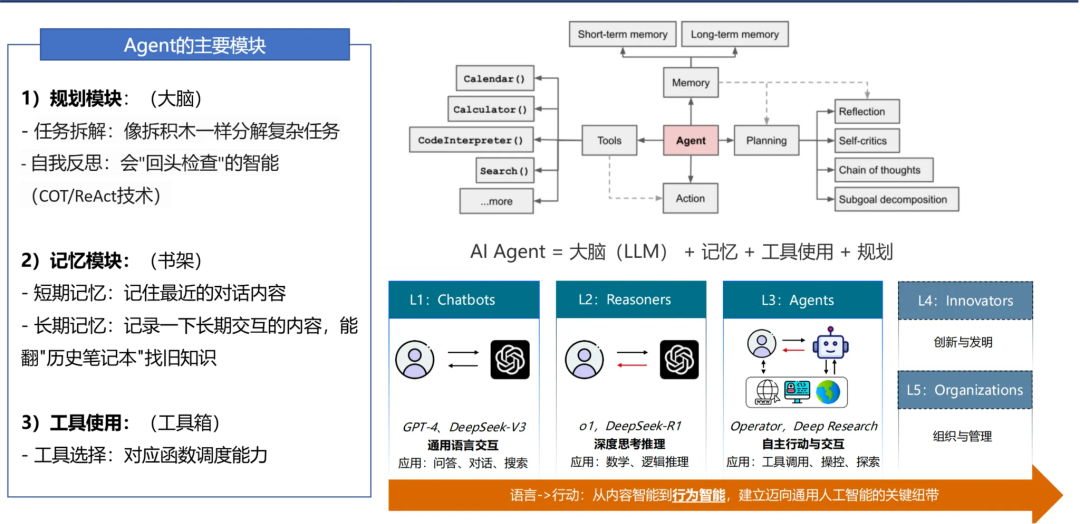

Core Components of an AI Agent

AI Agent = Brain (LLM) + Memory + Tool Use + Planning
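To make the formula concrete, here is a minimal sketch of how the four components might fit together in code. It assumes the LLM is any function from prompt string to reply string, and that the model writes each plan step as `tool_name: argument`; the `Agent` class, its methods, and that step format are hypothetical illustrations, not Dola's actual design.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Assumption: the "brain" is any callable mapping a prompt string to a reply string
LLM = Callable[[str], str]


@dataclass
class Agent:
    brain: LLM                                        # Brain: the large model
    tools: Dict[str, Callable[[str], str]]            # Tool Use: name -> callable
    memory: List[str] = field(default_factory=list)   # Memory: running log of outcomes

    def plan(self, goal: str) -> List[str]:
        # Planning: ask the brain to split the goal into one step per line
        reply = self.brain(f"Break this goal into steps, one per line: {goal}")
        return [line.strip() for line in reply.splitlines() if line.strip()]

    def act(self, step: str) -> str:
        # Hypothetical convention: "tool_name: argument" invokes that tool,
        # anything else is answered by the brain directly
        name, _, arg = step.partition(":")
        tool = self.tools.get(name.strip())
        result = tool(arg.strip()) if tool else self.brain(step)
        self.memory.append(f"{step} -> {result}")   # record every outcome
        return result

    def run(self, goal: str) -> List[str]:
        return [self.act(step) for step in self.plan(goal)]
```

With a stub brain that always plans `sum: 1,2,3` then `upper: done`, and tools `sum` and `upper`, `run()` returns `["6", "DONE"]` and leaves two entries in `memory`. A real Agent would replace the stub with a model call and a richer step format (for example, function-call JSON).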

Large-model development is shifting its focus from content intelligence (generating content) to behavioral intelligence (taking actions), moving toward AGI-level capabilities such as:

- Dialogue

- Reasoning

- Autonomous scheduling

- Innovation

- Organization

---

1.3 Agent Classification

Types:

- Reflection Mode — Self‑refine after executing tasks.

- Tool Use — Invoke external tools/APIs.

- Planning Mode — Organize tasks upfront.

- Multi‑Agent Collaboration — Agents cooperate to achieve goals.
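Reflection Mode is the easiest of these patterns to show in a few lines. The sketch below assumes two hypothetical callables: `generate` (an LLM call that drafts an answer) and `critique` (an LLM or rule-based checker that returns whether the draft is acceptable plus feedback). It is one common way to structure the loop, not the only one.

```python
from typing import Callable, Tuple


def reflect_and_refine(
    task: str,
    generate: Callable[[str], str],
    critique: Callable[[str, str], Tuple[bool, str]],
    max_rounds: int = 3,
) -> str:
    """Reflection pattern: draft, self-critique, revise until accepted or out of budget."""
    draft = generate(task)
    for _ in range(max_rounds):
        ok, feedback = critique(task, draft)
        if ok:
            break
        # Feed the critique back so the next draft can fix the flagged issue
        draft = generate(f"{task}\nPrevious answer: {draft}\nFeedback: {feedback}")
    # Returns the latest draft; if the budget ran out it may not have passed the critique
    return draft
```

For example, with a critic that rejects any query containing `SELECT *`, a first draft `SELECT * FROM t` gets revised once and the loop stops as soon as the revision passes. Capping the rounds keeps a stubborn critic from looping forever.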

---

1.4 Agent Development Stages

Multi‑agent collaboration has progressed through three stages:

- Early Exploration (Early–Mid 2023) — Basic exchange and simple parallelism.

- Framework Maturity (Mid 2023–Mid 2024) — e.g., Microsoft AutoGen’s conversable agents.

- Application Deepening (Mid 2024–2025) — Domain‑specific agents, complex workflows.

---

Main Frameworks

- AutoGen: highly customizable, conversation-based agents.

- LangGraph: precise, graph-based control over the execution flow.

- CrewAI: quickly assembling role-based teams of collaborating agents.

---

💡 Note on Framework Choice

LangChain and similar frameworks can involve a lot of boilerplate code; a self-built framework may give you more control and flexibility.

---

02 — Evolution & Core Modules

Early Agents resembled workflows: predefined steps executed in a fixed sequence.

Workflows are rigid; Agents explore solutions autonomously.

Key Modules:

- Planning

- Memory

- Tool scheduling (Function calls / MCP)

- Execution

---

Planning Module

Purpose:

- Task decomposition — break into subtasks (e.g., CoT reasoning).

- Reflection and refinement — learn from past steps (e.g., ReAct loop: Think → Act → Observe → Answer).
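The ReAct loop above can be sketched as follows. It assumes the model writes either `Action: tool[argument]` or `Answer: ...` in each turn, a text convention borrowed from the ReAct paper; the `react` function, the regexes, and the scripted stub model used in the example are hypothetical.

```python
import re
from typing import Callable, Dict, List

ACTION = re.compile(r"Action:\s*(\w+)\[(.*)\]")
ANSWER = re.compile(r"Answer:\s*(.*)")


def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Minimal ReAct loop: Think -> Act -> Observe, repeated until the model answers."""
    transcript: List[str] = [f"Question: {question}"]
    for _ in range(max_steps):
        # The model sees the whole transcript and emits a Thought plus an Action or an Answer
        step = llm("\n".join(transcript))
        transcript.append(step)
        if (m := ANSWER.search(step)):
            return m.group(1).strip()
        if (m := ACTION.search(step)):
            name, arg = m.group(1), m.group(2)
            tool = tools.get(name)
            obs = tool(arg) if tool else f"unknown tool {name}"
            transcript.append(f"Observation: {obs}")   # fed back on the next turn
    return "stopped: step budget exhausted"
```

With a scripted model that first emits `Action: count_rows[sales]` and, once it sees an `Observation:`, emits `Answer: 42 rows`, the call returns `"42 rows"` after one tool call. The step budget is what keeps a confused model from looping indefinitely.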

---

Memory System

Why: large models "forget" anything that falls outside their limited context window, so memory has to be managed explicitly.

Layers:

- Short‑term — current session.

- Mid‑term — topic summaries.

- Long‑term — user profiles, accumulated knowledge (via vector DB + RAG).

---

Tool / Function Scheduling (Function Call)

Enables:

- Real‑time, up‑to‑date info retrieval.

- Specialized actions through APIs/software.

Risks:

- API failure.

- Privacy breaches.

- Cascading failures across multistep tool chains.
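One common mitigation for API failures and multistep breakage is to wrap every tool call so that errors become structured observations the model can react to, instead of exceptions that crash the run. The sketch below is a hypothetical helper (`call_tool_safely` is not from any library) with simple retry and exponential backoff; privacy risks need separate controls such as argument filtering and approval gates.

```python
import time
from typing import Any, Callable, Dict


def call_tool_safely(fn: Callable[..., Any], args: Dict[str, Any],
                     retries: int = 2, backoff_s: float = 0.5) -> Dict[str, Any]:
    """Run a tool; on failure retry with backoff, then return the error as data."""
    last_error = ""
    for attempt in range(retries + 1):
        try:
            return {"ok": True, "result": fn(**args)}
        except Exception as exc:  # e.g. API timeout, bad arguments
            last_error = f"{type(exc).__name__}: {exc}"
            if attempt < retries:
                time.sleep(backoff_s * 2 ** attempt)   # exponential backoff
    # The Agent sees the error text and can re-plan rather than silently continuing
    return {"ok": False, "error": last_error, "attempts": retries + 1}
```

In practice you would retry only transient errors (timeouts, rate limits) and return argument errors immediately, since retrying a malformed call cannot succeed.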

---

MCP Protocol

MCP (Model Context Protocol) standardizes how tools and resources are integrated.

Architecture: Host ↔ MCP Client ↔ MCP Server. Servers expose Resources, Tools, and Prompts to the client.

Advantages:

- Rapid tool onboarding.

- Ecosystem compatibility.

Limitations:

- Log tracing difficulty.

- Possible performance bottlenecks.

- Output format inconsistency.
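MCP messages are JSON-RPC 2.0 requests and responses, and `tools/list` and `tools/call` are the protocol's methods for discovering and invoking tools. The toy below only mimics that message shape in-process so the exchange is visible; the `ToyMCPServer` class and its decorator are hypothetical, and it omits the transport, the `initialize` handshake, Resources, and Prompts. Use an official MCP SDK for real servers.

```python
import json
from typing import Any, Callable, Dict, Optional


class ToyMCPServer:
    """In-process toy mimicking the JSON-RPC shape of MCP tool methods (not the real SDK)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def tool(self, name: str, description: str, input_schema: Dict[str, Any]):
        """Decorator registering a function as a tool, with a JSON Schema for its inputs."""
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = {"fn": fn, "description": description, "inputSchema": input_schema}
            return fn
        return register

    @staticmethod
    def _reply(req_id: Optional[Any], key: str, body: Dict[str, Any]) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id, key: body})

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        method, params, req_id = req.get("method"), req.get("params", {}), req.get("id")
        if method == "tools/list":
            tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                     for n, t in self._tools.items()]
            return self._reply(req_id, "result", {"tools": tools})
        if method == "tools/call":
            entry = self._tools.get(params.get("name"))
            if entry is None:
                return self._reply(req_id, "error", {"code": -32602, "message": "unknown tool"})
            out = entry["fn"](**params.get("arguments", {}))
            content = [{"type": "text", "text": str(out)}]
            return self._reply(req_id, "result", {"content": content, "isError": False})
        # -32601 is JSON-RPC's standard "method not found" code
        return self._reply(req_id, "error", {"code": -32601, "message": "method not found"})
```

A client first sends `{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}` to discover tools, then `tools/call` with `{"name": ..., "arguments": {...}}`. Because every tool result comes back as generic text content, this shape also illustrates the "output format inconsistency" limitation above: callers must parse whatever text each server returns.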

---

03 — Building an Agent on a Large Model

An Agent's effectiveness depends mainly on:

- Framework design — single vs multi‑Agent based on scenario.

- Context engineering — beyond prompt writing, includes memory and multi‑turn dialogue management.

---

Context Engineering Principles

- Optimize KV‑cache hit rate.

- Use dynamic, state‑aware prompts.

- Extend context using file systems.

- Maintain focus via goal restatement.

- Preserve errors for learning.

- Avoid few‑shot overfitting.

- Ensure terminology consistency.

- Dynamically adjust prompts.

- Define tool capability boundaries.

- Control feedback granularity.

- Balance long‑term memory with short‑term context.

- Set human intervention thresholds.

- Use multi‑Agent competitive validation.
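Several of these principles can be seen together in a small context builder. This is a sketch under stated assumptions: the `ContextBuilder` class and `SYSTEM_PROMPT` are hypothetical, and the KV-cache benefit assumes a model provider that reuses cached computation for an identical prompt prefix. It keeps the system prompt byte-identical, treats history as append-only, keeps tool errors visible, and restates the goal at the end.

```python
from typing import Dict, List

# Hypothetical system prompt; kept byte-identical across turns so the prefix can be cached
SYSTEM_PROMPT = "You are a data analysis agent. Tools: run_sql, run_python."


class ContextBuilder:
    """Stable prefix, append-only history, preserved errors, goal restatement."""

    def __init__(self, goal: str) -> None:
        self.goal = goal
        self.history: List[Dict[str, str]] = []   # append-only: earlier entries never edited

    def record(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})

    def record_error(self, tool: str, error: str) -> None:
        # Keep failures in context so the model does not repeat the same mistake
        self.record("tool", f"[{tool} failed] {error}")

    def build(self) -> List[Dict[str, str]]:
        messages = [{"role": "system", "content": SYSTEM_PROMPT}]  # identical every call
        messages += self.history
        # Restate the goal at the end so it is never buried in the middle of a long context
        messages.append({"role": "user", "content": f"Reminder of the overall goal: {self.goal}"})
        return messages
```

Because the reminder is appended at build time and never stored in `history`, each new call shares its whole prefix (system prompt plus prior history) with the previous call; only the tail changes.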

---

Memory System Implementation

Store memory in layers: recent actions (short-term), thematic summaries (mid-term), and verified facts (long-term).
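A minimal sketch of this three-layer layout, assuming a bounded queue for recent actions, a list of summaries for the mid-term layer, and similarity search for long-term recall. The bag-of-words "embedding" is a stand-in for a real embedding model and vector database, and `LayeredMemory` and its methods are hypothetical names.

```python
import math
from collections import Counter, deque
from typing import Deque, List, Tuple


def _embed(text: str) -> Counter:
    # Stand-in for a real embedding model: bag-of-words term counts
    return Counter(text.lower().split())


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class LayeredMemory:
    def __init__(self, short_capacity: int = 5) -> None:
        self.short: Deque[str] = deque(maxlen=short_capacity)  # recent actions; oldest evicted
        self.mid: List[str] = []                                # thematic summaries
        self.long: List[Tuple[str, Counter]] = []               # verified facts + vectors

    def add_action(self, action: str) -> None:
        self.short.append(action)

    def summarize(self, topic: str) -> None:
        # Placeholder: a real system would ask the LLM to compress the recent actions
        self.mid.append(f"{topic}: " + "; ".join(self.short))
        self.short.clear()

    def remember_fact(self, fact: str) -> None:
        self.long.append((fact, _embed(fact)))

    def recall(self, query: str, k: int = 1) -> List[str]:
        # RAG-style retrieval: rank stored facts by similarity to the query
        q = _embed(query)
        ranked = sorted(self.long, key=lambda item: _cosine(q, item[1]), reverse=True)
        return [fact for fact, _ in ranked[:k]]
```

For example, after storing "fiscal year starts in april" and "revenue column is in cny", the query "which currency is revenue in" recalls the second fact, because it shares more terms with the query.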

---

Function Call Flow

- User request → App system.

- Prompt composition → LLM.

- Tool decision → Approval → Execution.

- Result integration → Natural language reply.
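The four steps above can be sketched end to end. The sketch assumes the model replies with JSON, either `{"tool": ..., "arguments": {...}}` or `{"reply": ...}`; that format, the `TOOLS` registry, the `sensitive` flag, and `handle_request` are all hypothetical. The approval callback is where a human-intervention threshold would plug in.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry; "sensitive" tools require approval before execution
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_row_count": {"fn": lambda table: {"sales": 1200}.get(table, 0), "sensitive": False},
    "drop_table": {"fn": lambda table: f"dropped {table}", "sensitive": True},
}


def handle_request(user_msg: str, llm: Callable[[str], str],
                   approve: Callable[[str, Dict[str, Any]], bool]) -> str:
    # Steps 1-2: compose the prompt; the model decides whether to call a tool
    decision = json.loads(llm(f"Tools: {list(TOOLS)}\nUser: {user_msg}"))
    if "tool" not in decision:
        return decision["reply"]                  # answered directly, no tool needed
    name, args = decision["tool"], decision.get("arguments", {})
    spec = TOOLS.get(name)
    if spec is None:
        return f"Sorry, the tool '{name}' is not available."
    # Step 3: approval gate, then execution
    if spec["sensitive"] and not approve(name, args):
        return f"Action '{name}' was not approved, so nothing was changed."
    result = spec["fn"](**args)
    # Step 4: hand the result back to the model for a natural-language reply
    return llm(f"User: {user_msg}\nTool {name} returned: {result}\nReply to the user.")
```

Read-only tools run without interruption, while destructive ones stop at the gate; with an approver that always says no, a request to delete a table returns the "not approved" message and no tool runs.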

---

MCP Flow

The flow is similar, but tools and resources are exposed by an MCP server, which removes the need to integrate each API by hand.

---

Multi‑Agent Applications

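Multiple Agents can improve problem-solving by dividing the context among themselves (each Agent sees only what its subtask needs) and coordinating through collaboration protocols.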
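One simple collaboration protocol is the "multi-Agent competitive validation" principle from the context-engineering list: several Agents solve the same task independently and an answer is accepted only if enough of them agree. The sketch below uses plain majority voting; `competitive_validation` and the agreement threshold are hypothetical, and real systems often use a judge Agent instead of exact-match voting.

```python
from collections import Counter
from typing import Callable, List, Optional


def competitive_validation(task: str, solvers: List[Callable[[str], str]],
                           min_agreement: int = 2) -> Optional[str]:
    """Independent Agents answer the same task; accept the majority answer only if
    at least `min_agreement` agree, otherwise return None to escalate (e.g. to a human)."""
    answers = [solve(task) for solve in solvers]
    best, votes = Counter(answers).most_common(1)[0]
    return best if votes >= min_agreement else None
```

With three Agents answering "42", "42", and "41", the result is "42"; with two Agents that disagree, the function returns `None`, which is a natural trigger for human intervention.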

---

04 — Evaluating Agents

Benchmarks:

- AgentBench

- InfoQuest

- MINT

- ToolBench

- GTA

- ToolDial

- AgentBoard

- WorkBench

- DataSciBench

Evaluation remains difficult, especially in multimodal and multi-turn scenarios.
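Before adopting a full benchmark, a small in-house harness can track the basics. This sketch assumes the Agent under test returns its final answer together with the number of steps it used; `Case` and `evaluate` are hypothetical names, and exact-match scoring is only a starting point (benchmarks like the ones listed above use richer, task-specific checks).

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Tuple


@dataclass
class Case:
    question: str
    expected: str


def evaluate(agent: Callable[[str], Tuple[str, int]], cases: List[Case]) -> Dict[str, float]:
    """Run every case; report exact-match success rate and average steps used."""
    outcomes = [agent(c.question) for c in cases]
    successes = [ans.strip() == c.expected for (ans, _), c in zip(outcomes, cases)]
    return {
        "success_rate": sum(successes) / len(cases),
        "avg_steps": mean(steps for _, steps in outcomes),
    }
```

Tracking steps alongside success matters for Agents: two versions with the same success rate can differ widely in cost and latency.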

---


Team & Community

Qiyu Team (360 Group)

Qiyu Team is the largest frontend team at 360 Group and offers career paths and training for:

- Engineers

- Lecturers

- Translators

- Leaders
