# A Complete Guide to the Underlying Logic of Agents

## Introduction

After **1.5 years of AI development practice** and recent deep-dive discussions with multiple teams, I've noticed two misconceptions about **Agents**:

1. **Mystification** – believing they can do anything.

2. **Oversimplification** – thinking they are just “multiple calls to ChatGPT.”

The gap between **hands-on experience** and **theoretical grasp** of the **Agentic Loop** leads to **misaligned expectations** and high communication costs.

**Key insight**: The real leap in AI Agent capability comes **not only** from smarter base models, but also from the **cognitive processes** we design around them.

This guide (≈10,000 words) will help you build a **shared intuition** about Agents and fully deconstruct the “process” behind them.

---

## Article Roadmap

- **Part One (01 & 02): Intuitive Understanding**
  - Use the **Five Growth Stages of a Top Student** analogy.
  - Analyze the classic *travel planning* scenario to compare **dynamic iteration vs. one-off answer generation**.

- **Part Two (03 & 05): Developer Core Concepts**
  - **Section 03**: The triple value of “process”:
    1. **Structure** as scaffolding for thought.
    2. **Iteration** as a compression algorithm for memory.
    3. **Interaction** to connect with the real world.
  - **Section 05**: Evolving roles, from *prompt engineer* to **Agent Process Architect**, covering performance engineering and system architecture.

- **Part Three (04): Theory Foundations**
  - Why **Think → Act → Observe** works, explained via:
    - **Cybernetics**
    - **Information Theory**

---


## Why Processes Are the True Competitive Edge

Agents’ real advantage lies in **architecture + learning/acting design**.

---

## 01 — If the College Entrance Exam Could Be Retaken

Many developers grasp the **abstract** Think → Act → Observe loop, but can’t *feel* why it’s powerful.

**Common question**:

> “Isn’t this just chatting with ChatGPT a few more times? Why does automating it make a qualitative difference?”

**Analogy**: If you retook an exam **the day after** sitting it, your score would likely improve because of better **strategy + process**, not because you learned anything new overnight.

**Key point**:

LLMs have **static knowledge** once trained.

**Process drives improvement**, like exam strategies: time management, checking errors, approach changes for hard questions.

---

## The “Five Stages of a Top Student” — Agent Growth Analogy

### Stage 1 — Natural Genius

- **Xiao Ming** solves problems quickly in his head, like an LLM answering in a single API call.

- Outputs may be fast but unreliable (hidden errors, no trace of reasoning).

### Stage 2 — The Thinker

- Teacher forces **detailed steps on paper**.

- Accuracy improves through externalizing reasoning.

- **Chain-of-Thought (CoT)**: break problems into linear reasoning steps → less hallucination.

### Stage 3 — The Careful Checker

- Adds systematic **pre-submission review**.

- **Self-Reflection (Reflexion framework)**: act → review → revise.

- Boosts reliability (e.g., the Reflexion paper reports 91% pass@1 on the HumanEval coding benchmark).
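The act → review → revise cycle can be sketched in a few lines. This is a minimal illustration, not the Reflexion paper's implementation: `toy_act` and `toy_review` are hypothetical stand-ins for model calls, stubbed deterministically so the example runs offline.

```python
from typing import Callable, List, Optional, Tuple

def reflect_loop(
    act: Callable[[List[str]], str],
    review: Callable[[str], Optional[str]],
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Run act -> review -> revise until the review passes or rounds run out."""
    lessons: List[str] = []          # verbal feedback carried into later attempts
    attempt = act(lessons)
    for _ in range(max_rounds):
        critique = review(attempt)
        if critique is None:         # the reviewer found nothing to fix
            break
        lessons.append(critique)
        attempt = act(lessons)       # revise with the accumulated lessons
    return attempt, lessons

# Hypothetical stand-ins for model calls (assumptions, not a real API).
def toy_act(lessons: List[str]) -> str:
    # The first attempt forgets the empty-list case; the revision handles it.
    if any("empty" in lesson for lesson in lessons):
        return "def mean(xs): return sum(xs) / len(xs) if xs else 0.0"
    return "def mean(xs): return sum(xs) / len(xs)"

def toy_review(code: str) -> Optional[str]:
    namespace: dict = {}
    exec(code, namespace)            # run the candidate, as a unit test would
    try:
        namespace["mean"]([])
    except ZeroDivisionError:
        return "mean([]) crashes: handle the empty list"
    return None

final_code, lessons = reflect_loop(toy_act, toy_review)
```

The point of the design is that the critique is stored as plain text and fed back into the next attempt, so the improvement comes from the loop rather than from any change to the model.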

### Stage 4 — The Strategist

- Scans test first, prioritizes questions, estimates time.

- **Planning**: break macro task into sub-tasks with logical sequence.
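One way to picture "sub-tasks with logical sequence" is as a dependency graph that gets sorted before execution. The sketch below uses Python's standard `graphlib`; the sub-task names are hypothetical and chosen only for illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical sub-tasks; each one maps to the set of tasks it depends on.
subtasks: Dict[str, Set[str]] = {
    "check_ticket_availability": set(),
    "pick_science_museum": set(),
    "look_up_prices": {"check_ticket_availability", "pick_science_museum"},
    "compute_budget": {"look_up_prices"},
    "write_itinerary": {"compute_budget"},
}

def plan_order(graph: Dict[str, Set[str]]) -> List[str]:
    """Return sub-tasks in an order that respects every dependency."""
    return list(TopologicalSorter(graph).static_order())

order = plan_order(subtasks)
```

In a real agent the graph would usually be produced by the model itself; the sort only guarantees that no step runs before its prerequisites.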

### Stage 5 — The Scholar

- Tackles open-ended research → needs **Tool Use**.

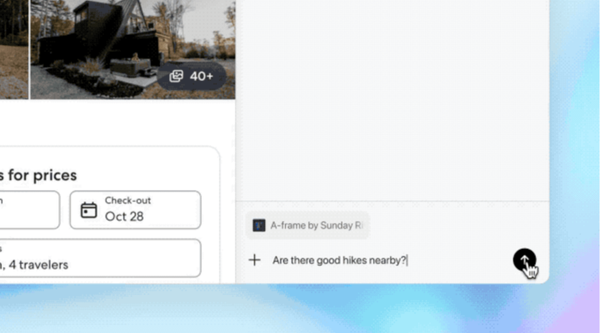

- **ReAct framework**: **Think → Act → Observe**, binding reasoning & tool usage to integrate real-world info.
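The Think → Act → Observe loop can be sketched as follows. This is a simplified illustration under stated assumptions, not the ReAct paper's code: `scripted_think` is a hypothetical stand-in for the LLM, and `multiply` is a toy tool.

```python
from typing import Callable, Dict, List, Tuple

def multiply(arg: str) -> str:
    """Toy tool: multiplies two integers given as 'a*b'."""
    a, b = arg.split("*")
    return str(int(a) * int(b))

# Hypothetical tool registry; a real agent would expose search, APIs, etc.
TOOLS: Dict[str, Callable[[str], str]] = {"multiply": multiply}

Step = Tuple[str, str, str]  # (thought, action, observation)

def scripted_think(goal: str, history: List[Step]) -> Tuple[str, str, str]:
    """Stand-in for the LLM: returns (thought, action, argument)."""
    if not history:
        return ("Mental arithmetic is unreliable, so use the tool.", "multiply", "17*23")
    last_observation = history[-1][2]
    return ("The tool result answers the goal.", "finish", f"17 * 23 = {last_observation}")

def react(goal: str, think=scripted_think, max_steps: int = 5) -> str:
    history: List[Step] = []
    for _ in range(max_steps):
        thought, action, arg = think(goal, history)                  # Think
        if action == "finish":
            return arg
        observation = TOOLS[action](arg)                             # Act
        history.append((thought, f"{action}({arg})", observation))   # Observe
    return "Stopped: step limit reached."

answer = react("What is 17 * 23?")
```

Each observation is appended to the history that the next Think step reads, which is what lets the agent base its final answer on a tool result instead of a guess.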

---

## 02 — Chatbot vs. Agent: Travel Planning Example

**Task**: Plan a weekend trip to Beijing for three people that includes the Forbidden City and a child-friendly science museum, and provide a budget.

### Chatbot's Response:

- Fluent, appears complete, but:
  - **Outdated info** (ticket rules).
  - **Fictional content** (non-existent museum).
  - **Guessed budget**.

### Agent’s Process:

- **Step-by-step Plan**:
  1. Verify ticket availability.
  2. Identify real museum.
  3. Check current prices/times.
  4. Calculate budget.
  5. Adjust if blocked.

- Uses **Think–Act–Observe** cycles.

- Produces **fact-based, actionable** itinerary.

---

## 03 — Core Driver of Agents: Process over Model

### Why Agents Feel “Slow”

- Moving from **fast intuitive mode** → **slow structured mode**.

- Trading speed/tokens for **quality and certainty**.

### The Triple Value of Process

1. **Structure vs. Chaos**:
   - Planning = macro blueprint.
   - CoT = micro construction manual.
   - **Tree of Thoughts** explores multiple reasoning paths.

2. **Iteration vs. Forgetting**:
   - LLMs have short attention spans (context window limits).
   - Reflexion/Summarization compress learnings into concise “experience memos”.

3. **Interaction vs. Nothingness**:
   - Without real-world feedback, reasoning is built on hallucinations.
   - **Tool integration** (ReAct) connects thought with action.

**Context engineering** = **designing processes** that compress, filter, and inject the *right info* at the *right time*.
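As a rough illustration of "filter and inject the right info", the sketch below ranks stored memos by keyword overlap with the current query and packs the best ones into a fixed word budget. The scoring rule, the budget unit, and the memo texts are all assumptions made for the example; production systems typically use embeddings and token counts instead.

```python
from typing import List

def build_context(query: str, memos: List[str], budget_words: int) -> List[str]:
    """Filter memos by relevance to the query, then pack them into a word budget."""
    query_terms = set(query.lower().split())

    def relevance(memo: str) -> int:
        # Crude relevance: number of query terms that appear in the memo.
        return len(query_terms & set(memo.lower().split()))

    ranked = sorted(memos, key=relevance, reverse=True)
    selected: List[str] = []
    used = 0
    for memo in ranked:
        cost = len(memo.split())
        if relevance(memo) == 0 or used + cost > budget_words:
            continue                  # irrelevant, or would overflow the budget
        selected.append(memo)
        used += cost
    return selected

# Hypothetical "experience memos" (made-up notes, not real facts).
memos = [
    "forbidden city tickets must be booked in advance",
    "user prefers vegetarian restaurants",
    "science museum is closed on mondays",
    "tickets for the science museum cost less for children",
]
context = build_context("science museum tickets", memos, budget_words=16)
```

With this input, the two museum memos fit the budget, the Forbidden City memo is dropped for space, and the restaurant memo is dropped as irrelevant.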

---

## 04 — Why Agents Are Effective

### Cybernetics: Closed-Loop Control

- Agents = software analog of a thermostat or refrigerator: they keep comparing the current state with a target and correcting the difference.

- **Goal** = prompt → **Sensor** = Observe → **Controller** = Think → **Actuator** = Act → feedback loop.
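The mapping above can be made concrete with a toy thermostat. This is a minimal sketch: the room model, the proportional gain of 0.5, and the tolerance are illustrative assumptions, not part of the original article.

```python
from typing import Callable, Optional

def control_loop(
    goal: float,
    sense: Callable[[], float],
    think: Callable[[float, float], Optional[float]],
    act: Callable[[float], None],
    max_steps: int = 20,
) -> int:
    """Generic feedback loop; returns how many corrections were applied."""
    for step in range(max_steps):
        reading = sense()                 # Sensor     = Observe
        command = think(goal, reading)    # Controller = Think
        if command is None:               # error is within tolerance: done
            return step
        act(command)                      # Actuator   = Act
    return max_steps

# Toy plant: a room whose temperature the controller can nudge up or down.
room = {"temp": 15.0}

def sense() -> float:
    return room["temp"]

def think(goal: float, reading: float, tolerance: float = 0.5) -> Optional[float]:
    error = goal - reading
    if abs(error) <= tolerance:
        return None
    return 0.5 * error                    # proportional correction

def act(delta: float) -> None:
    room["temp"] += delta

steps = control_loop(21.0, sense, think, act)
```

The loop never needs to know in advance how many corrections are required; it keeps measuring the gap to the goal and stops when the gap is small enough, which is the same shape as an agent iterating until its task is satisfied.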

### Information Theory: Entropy Reduction

- Problem-solving as **removing uncertainty** (entropy).

- Each **Act–Observe** step = revealing facts (“clearing fog of war”).

- Less uncertainty → clear path to solution.
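A small numeric example may help here. Assume, purely for illustration, that the agent starts with 8 equally likely candidate answers and that each Act–Observe step rules out half of the remaining ones. Shannon entropy, H = -Σ p·log2(p), then measures how much uncertainty is left.

```python
import math
from typing import List

def entropy_bits(probabilities: List[float]) -> float:
    """Shannon entropy H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

def uniform(n: int) -> List[float]:
    """Equal probability for each of n candidates."""
    return [1.0 / n] * n

# Hypothetical scenario: 8 equally likely candidates,
# and every observation eliminates half of those remaining.
remaining = 8
trace = [entropy_bits(uniform(remaining))]
while remaining > 1:
    remaining //= 2                       # one Act-Observe step
    trace.append(entropy_bits(uniform(remaining)))
```

Under these assumptions the uncertainty falls from 3 bits to 2, then 1, then 0: each observation buys exactly one bit, and after three steps only one candidate is left.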

---

## 05 — From Prompt Engineer to Agent Process Architect

### The Role Shift

- Define **cognitive workflows** (plan, reason, reflect).

- Equip **toolbox** for acting in real/virtual worlds.

- Architect **context management** for precise decision-making.

### Performance Engineering

- **Architectural pruning** (simple tool-calls for short tasks).

- **Parallel execution** for independent subtasks (async).

- **Model specialization** (lightweight models for routing, heavy models for deep reasoning).

- **Efficient memory retrieval** (distill and store only high-value info).
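Parallel execution of independent subtasks can be sketched with `asyncio`. The subtask names and the 0.2-second latency are hypothetical; `asyncio.sleep` stands in for an I/O-bound tool call such as an HTTP request.

```python
import asyncio
import time
from typing import List

async def run_subtask(name: str, seconds: float) -> str:
    """Stand-in for an I/O-bound tool call (hypothetical latency)."""
    await asyncio.sleep(seconds)
    return f"{name}: done"

async def run_parallel() -> List[str]:
    # Independent sub-tasks run concurrently; gather keeps the input order.
    return await asyncio.gather(
        run_subtask("weather", 0.2),
        run_subtask("tickets", 0.2),
        run_subtask("hotels", 0.2),
    )

start = time.perf_counter()
results = asyncio.run(run_parallel())
elapsed = time.perf_counter() - start
```

Run one after another, the three calls would take about 0.6 seconds; run concurrently, the total is close to the slowest single call. This only helps when the subtasks do not depend on each other's results.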

### Next-Level Cognitive Architecture

1. **Workflow Orchestration** — intelligent “project manager” capability (Anthropic Skills).

2. **Team-based Architectures** — spec-driven collaboration (Kiro, SpecKit).

3. **Tool Creation** — generate code/tools on-the-fly (CodeAct).

---

## References & Extended Reading

### Academic Papers

1. [Chain-of-Thought](https://arxiv.org/abs/2201.11903) — Break tasks into linear reasoning.

2. [Tree of Thoughts](https://arxiv.org/abs/2305.10601) — Explore multiple reasoning branches.

3. [Reflexion](https://arxiv.org/abs/2303.11366) — Self-iteration via verbal reinforcement.

4. [ReAct](https://arxiv.org/abs/2210.03629) — Blend reasoning with tool calls.

5. [CodeAct](https://arxiv.org/abs/2402.01030) — Dynamic code-tool generation.

### Industry Resources

- [Lilian Weng: LLM-powered Autonomous Agents](https://lilianweng.github.io/posts/2023-06-23-agent/)

- Karpathy’s **LLM as OS** idea.

- [LangGraph](https://www.langchain.com/langgraph) & [LlamaIndex](https://www.llamaindex.ai/) frameworks.

- Spec-driven collaboration: [Kiro](https://kiro.dev/) & [SpecKit](https://github.com/braid-work/spec-kit).

- Tool orchestration: [Anthropic Skills](https://www.anthropic.com/news/skills).

- Multi-agent simulations: [Generative Agents: Interactive Simulacra of Human Behavior](https://arxiv.org/abs/2304.03442), the “Westworld town” simulation.

---

## Final Thought

The future of LLM applications = **model intelligence × process design**.

**Tip**: Stop chasing the “perfect prompt.”

Start **drawing workflows** — that’s the first step to becoming an **Agent Process Architect**.

---