AI Algorithm Open Source | Logics-Parsing: End-to-End Structured Processing for Complex PDF Documents

Logics-Parsing: Advanced Document Parsing for Complex Layouts

In both work and study, extracting usable content from images or PDFs is often frustrating — especially when tools struggle with:

- Converting messy handwritten content into clean notes

- Importing tables from references into presentation slides

- Editing papers with specialized formats (e.g., chemistry)

Even the latest Large Vision-Language Models (LVLMs) show limitations in understanding multi-column layouts, mixed content, and scientific formulas, often failing to preserve proper reading order.

---

Alibaba’s Breakthrough: Logics-Parsing

At the September Apsara (Yunqi) Conference, Alibaba’s Data Technology and Product Department (iOrange Technology) officially released and open-sourced Logics-Parsing, a PDF parsing model for documents with complex layouts.

Key innovations include:

- Use of a high-quality, challenging dataset

- Introduction of Layout-Centric Reinforcement Learning (LC-RL)

- A “SFT-then-RL” two-stage training strategy for logical reading path planning

---

Resources

- GitHub: https://github.com/alibaba/Logics-Parsing

- Online Demo: https://www.modelscope.cn/studios/Alibaba-DT/Logics-Parsing/summary

- Technical Report: https://arxiv.org/abs/2509.19760

---

What is Logics-Parsing?

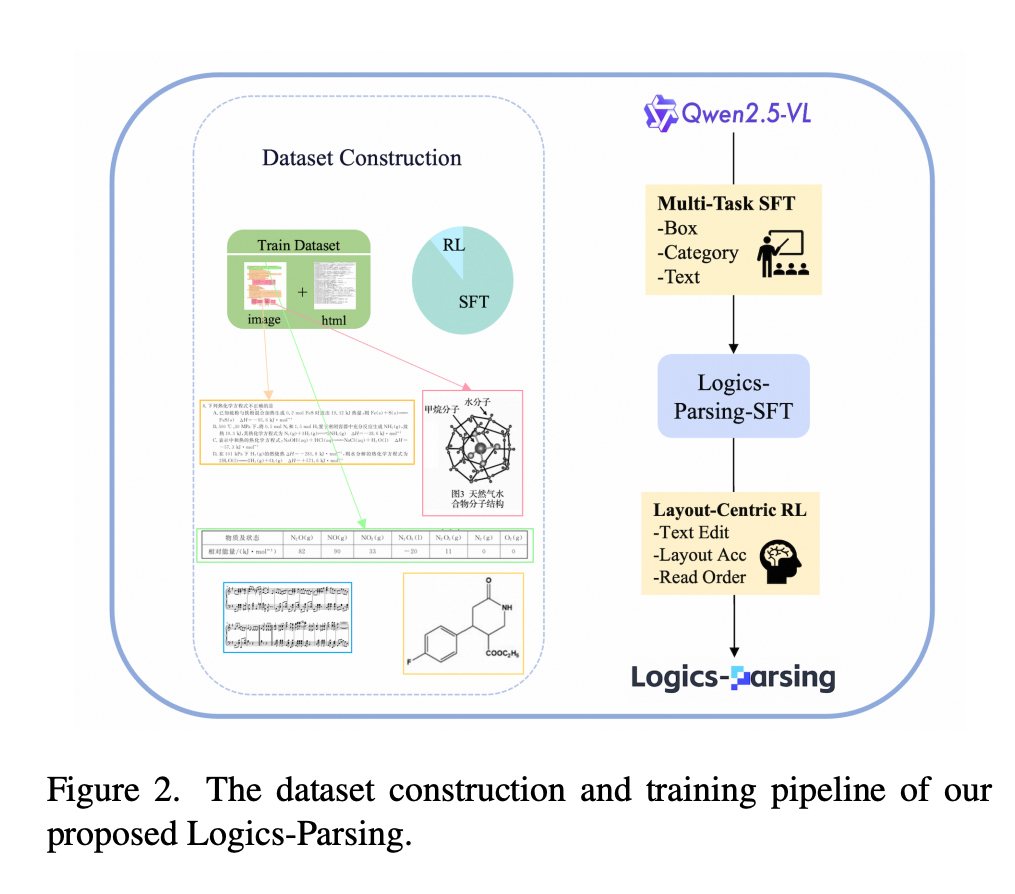

Logics-Parsing is built on the Qwen2.5-VL architecture and is trained on a diverse data mix — including chemical formulas and handwritten Chinese — boosting document parsing generalization.

Capabilities

- Complex layout analysis with accurate reading order inference

- Extraction of text, tables, formulas, handwriting, and chemical structures

- Outputs in `qwen-html` or `mathpix-markdown` format

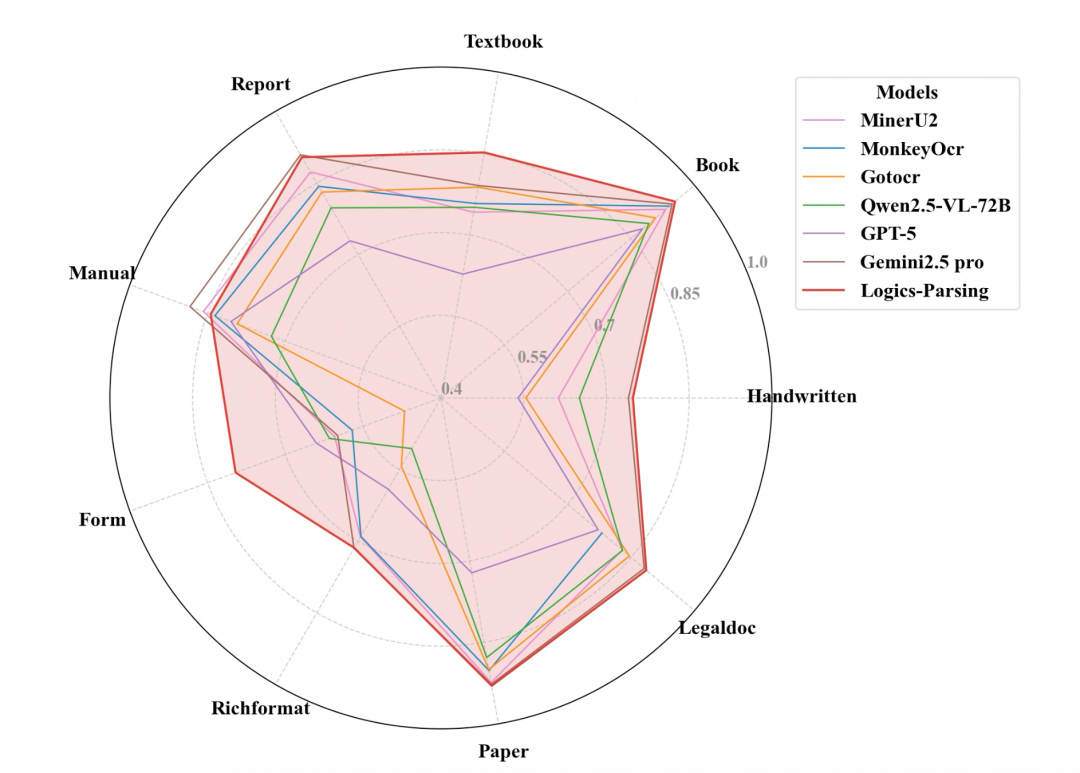

Result: Logics-Parsing targets the “last mile” of document analysis and reports state-of-the-art (SOTA) results across varied real-world scenarios.

---

How Layout-Centric Reinforcement Learning Works

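LC-RL is trained with Group Relative Policy Optimization (GRPO), which samples several candidate outputs for the same input and scores each one relative to the rest of its group, so no separate value model is needed. This suits structured outputs, where quality is easier to compare across candidates than to predict in absolute terms.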
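To make the group-relative idea concrete, here is a minimal sketch of how GRPO-style advantages are typically derived from a group of rewards. The function name, the use of the population standard deviation, and the `eps` constant are illustrative assumptions, not details taken from the Logics-Parsing code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled output's reward against its own group.

    Assumption: population standard deviation plus a small eps,
    which is one common convention rather than a confirmed detail.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four candidate parses of the same page.
rewards = [0.9, 0.6, 0.3, 0.6]
advantages = group_relative_advantages(rewards)
```

Candidates that beat the group average get a positive advantage and are reinforced, while below-average candidates are pushed down.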

Training Process:

- Parse predicted and ground-truth outputs to identify text and bounding boxes

- Compute three distinct rewards:

  - Text Accuracy: Character-level similarity via negative normalized Levenshtein distance

  - Localization Accuracy: Bounding box alignment quality

  - Reading Logic: Penalizes misordered content using inversion counts

These rewards are linearly combined into a comprehensive signal for policy optimization.
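The reward computation described above can be sketched roughly as follows. The text and reading-order terms follow the description directly. The localization term uses intersection-over-union (IoU) as an assumed stand-in for "alignment quality", and the equal weights are hypothetical, since the article does not give the actual values.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def text_reward(pred: str, truth: str) -> float:
    """Negative normalized Levenshtein distance, in [-1, 0]."""
    longest = max(len(pred), len(truth))
    return 0.0 if longest == 0 else -levenshtein(pred, truth) / longest


def iou(a, b) -> float:
    """IoU of two (x1, y1, x2, y2) boxes; an assumed localization measure."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def order_reward(pred_order) -> float:
    """Negative fraction of inverted pairs.

    pred_order[i] is the ground-truth rank of the i-th predicted block.
    """
    n = len(pred_order)
    if n < 2:
        return 0.0
    inversions = sum(
        1 for i in range(n) for j in range(i + 1, n) if pred_order[i] > pred_order[j]
    )
    return -inversions / (n * (n - 1) / 2)


def combined_reward(text_r, box_r, order_r, weights=(1.0, 1.0, 1.0)):
    """Linear combination of the three terms; the weights are hypothetical."""
    return weights[0] * text_r + weights[1] * box_r + weights[2] * order_r
```

For example, a prediction with perfect text and boxes but two swapped paragraphs out of three would lose reward only through `order_reward([0, 2, 1])`, which counts a single inverted pair.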

---

Understanding the “SFT-then-RL” Two-Stage Strategy

This approach mirrors student learning:

- Stage 1 – SFT: Train with a gold-standard dataset to master fundamentals

- Stage 2 – RL: Tackle high-complexity cases, guided by multi-dimensional rewards (text, localization, and reading order) that give credit for each part of the output the model gets right

---

Core Highlights

(1) Effortless End-to-End Processing

- Single-step pipeline from document images to structured output

- Optimized for challenging layouts

(2) Advanced Content Recognition

- Scientific formulas & handwritten text

- Chemical structures with SMILES format output

(3) Rich Structured Output

- Qwen HTML preserving structure and order

- Tagged content blocks with type, coordinates, and OCR text

- Removes non-core elements (e.g., headers/footers)
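As an illustration of consuming tagged content blocks, the sketch below pulls type, coordinates, and text out of an HTML string using only the Python standard library. The markup shape here (a `class` attribute for the block type and a `data-bbox` attribute holding four space-separated integers) is a hypothetical stand-in; check the GitHub repository for the actual output schema.

```python
from html.parser import HTMLParser


class BlockExtractor(HTMLParser):
    """Collects elements that carry a (hypothetical) data-bbox attribute."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._current = None  # block being read, if any
        self._tag = None      # tag name that opened the current block
        self._depth = 0       # nesting depth of that same tag name

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if self._current is None and "data-bbox" in attr_map:
            self._current = {
                "type": attr_map.get("class", tag),
                "bbox": [int(v) for v in attr_map["data-bbox"].split()],
                "text": "",
            }
            self._tag, self._depth = tag, 1
        elif self._current is not None and tag == self._tag:
            self._depth += 1

    def handle_data(self, data):
        if self._current is not None:
            self._current["text"] += data

    def handle_endtag(self, tag):
        if self._current is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:
                self._current["text"] = self._current["text"].strip()
                self.blocks.append(self._current)
                self._current = None


# Hypothetical sample output, not copied from the real model.
sample = (
    '<div class="title" data-bbox="40 30 560 70">Results</div>'
    '<div class="text" data-bbox="40 90 560 200">Accuracy improves with <b>RL</b>.</div>'
)
extractor = BlockExtractor()
extractor.feed(sample)
extractor.close()
blocks = extractor.blocks
```

Because the blocks arrive in document order, iterating over `blocks` follows the reading order the model predicted.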

---

Practical Examples

Mathematical Formula Reproduction

- Maintains semantic integrity & layout fidelity

Chemical Structure Restoration

- Parses atomic topology & bond types, supports SMILES export

Complex Table Parsing

- Preserves merged cells & exact structure
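Merged cells matter downstream because most consumers (spreadsheets, data frames) expect a dense grid. A minimal sketch, assuming the parsed table arrives as standard HTML with `rowspan` and `colspan` attributes, expands merged cells so every grid slot carries its value. The class name and the sample table are hypothetical.

```python
from html.parser import HTMLParser


class TableGrid(HTMLParser):
    """Expands rowspan/colspan cells of a single HTML table into a dense grid."""

    def __init__(self):
        super().__init__()
        self.grid = {}        # (row, col) -> cell text
        self._row = -1
        self._col = 0
        self._span = (1, 1)   # (rowspan, colspan) of the open cell
        self._text = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row += 1
            self._col = 0
        elif tag in ("td", "th"):
            a = dict(attrs)
            self._span = (int(a.get("rowspan", 1)), int(a.get("colspan", 1)))
            self._text = []
            self._in_cell = True

    def handle_data(self, data):
        if self._in_cell:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._in_cell:
            # Skip slots already filled by a rowspan from an earlier row.
            while (self._row, self._col) in self.grid:
                self._col += 1
            rows, cols = self._span
            text = "".join(self._text).strip()
            for r in range(rows):
                for c in range(cols):
                    self.grid[(self._row + r, self._col + c)] = text
            self._col += cols
            self._in_cell = False


def to_rows(grid):
    """Turn the sparse {(row, col): text} mapping into a list of rows."""
    if not grid:
        return []
    n_rows = max(r for r, _ in grid) + 1
    n_cols = max(c for _, c in grid) + 1
    return [[grid.get((r, c), "") for c in range(n_cols)] for r in range(n_rows)]


# Hypothetical table with one vertical and one horizontal merge.
sample_table = """
<table>
<tr><th rowspan="2">Item</th><th colspan="2">Size</th></tr>
<tr><th>W</th><th>H</th></tr>
<tr><td>Box</td><td>3</td><td>4</td></tr>
</table>
"""
table = TableGrid()
table.feed(sample_table)
table.close()
rows = to_rows(table.grid)
```

Repeating the merged value in every slot it covers is one design choice. Leaving the covered slots empty is equally valid if the consumer needs to tell original cells from filled ones.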

Handwriting Recognition

- Detects cursive, mixed styles, preserves structure

---

Outstanding Results

Logics-Parsing achieves SOTA performance in:

- Text parsing accuracy

- Chemical structure recognition

- Handwritten content processing

---


Logics-Parsing Project Overview

ModelScope: https://www.modelscope.cn/studios/Alibaba-DT/Logics-Parsing/summary

GitHub: https://github.com/alibaba/Logics-Parsing

Introduction

Developed by the Alibaba DT team, Logics-Parsing provides end-to-end document parsing for:

- Complex page layouts, with reading order inference

- Text, tables, and formulas

- Handwriting and chemical structures

Key Features

- Layout Understanding: Converts document images into structured blocks with type, coordinates, and text

- Single-Step Pipeline: Goes from document images to structured output in one step

- Structured Formats: Outputs `qwen-html` or `mathpix-markdown`

- ModelScope Access: Try the model in the online demo

Use Cases

- Note Digitization: Convert handwritten content into clean notes

- Table Reuse: Import tables from references into presentation slides

- Scientific Editing: Edit papers with specialized content such as chemical structures
