AI Coding: The Draft Mentality

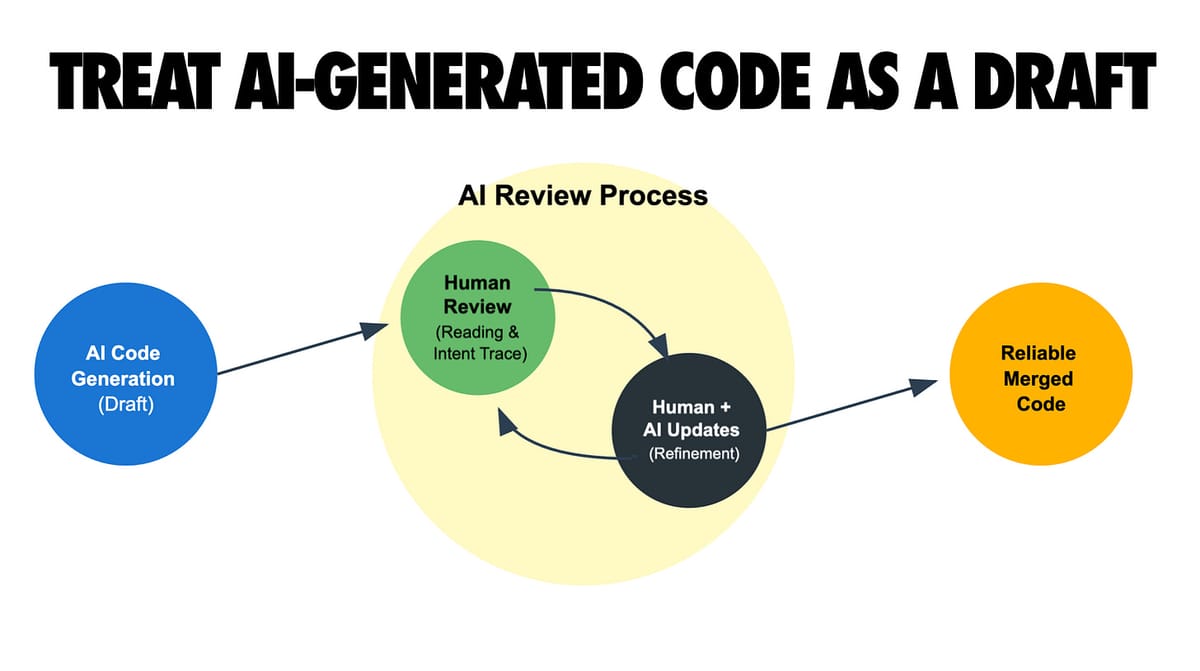

TL;DR – Treat AI-Generated Code as a Draft

AI can write the first version, but you must never outsource the reading. Without human review, there’s no reliable link between behavior and intent. Hold AI-written code to the exact same standards as human code.

---

Why You Must Always Review AI’s First Draft

AI can accelerate coding, but humans must read and review the output to ensure it matches intent and meets quality standards.

If that step disappears, you lose the ability to explain why the code works — or to be certain that it actually does.

- LLMs don’t ship bad code — teams do.

- Bad code in production means review practices failed, not that the AI “made a mistake.”

- Treat AI output as untrusted input until vetted.

Reference: LLMs Don’t Ship Bad Code, Teams Do

---

Risks of Blindly Trusting AI Outputs

Even when AI-generated code looks correct or passes tests, it may:

- Contain hallucinated functions or flawed logic

- Introduce security vulnerabilities silently

- Pass “happy path” tests but fail in edge cases

- Cause long-term erosion of developer critical thinking skills

Reference: The Phantom Menace of Hallucinated Code
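
One cheap guard against hallucinated functions is to check that every `module.attribute` reference in a draft actually exists. The sketch below is a minimal illustration using Python's standard `ast` and `importlib` modules. The helper name `find_missing_attributes` is hypothetical, and it only understands plain `import x` and `import x as y` statements. It imports the referenced modules, so run it only where importing them is safe.

```python
import ast
import importlib


def find_missing_attributes(source: str) -> list[str]:
    """Return 'module.attr' references whose attr is absent from the module."""
    tree = ast.parse(source)

    # Map each local alias to the real module name (plain imports only).
    aliases = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                aliases[alias.asname or alias.name] = alias.name

    missing = []
    for node in ast.walk(tree):
        is_module_attr = (
            isinstance(node, ast.Attribute)
            and isinstance(node.value, ast.Name)
            and node.value.id in aliases
        )
        if not is_module_attr:
            continue
        module_name = aliases[node.value.id]
        try:
            module = importlib.import_module(module_name)
        except ImportError:
            missing.append(f"{module_name} (module not importable)")
            continue
        if not hasattr(module, node.attr):
            missing.append(f"{module_name}.{node.attr}")
    return missing


draft = "import json\njson.loads('{}')\njson.parse_fast('{}')\n"
print(find_missing_attributes(draft))  # ['json.parse_fast']
```

A check like this catches only one narrow class of hallucination. It says nothing about whether the logic is right, so it supplements human reading rather than replacing it.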

---

Human-in-the-Loop Is Non-Negotiable

Always enforce a human-in-the-loop workflow:

- AI drafts.

- Humans review for logic, intent alignment, and security.

- Merge only code you fully understand.

---

Productivity vs. Skill Retention

Research suggests that heavy AI reliance can:

- Reduce brain engagement and critical thinking performance

- Push developers to skip reading documentation or troubleshooting

- Turn them into “human clipboards” — copying AI output without reasoning

Avoid becoming 10× dependent instead of 10× better — use AI as a complement, not a replacement.

---

Danger of Skipping the Learning Process

Bypassing trial-and-error learning in favor of AI speed means:

- Missing out on foundational skills,

- Creating a vicious cycle: poor understanding → poor code → no growth.

Pro Tip: Use AI as a tutor — ask for explanations and reasoning, not just code.

---

Code Review Challenges with AI

AI changes review dynamics:

- Larger, more complex diffs → 26% longer review time

- Polished appearance can mask subtle flaws

- Lost intent — authors may not know why the AI chose an approach

- Review overload → too much unvetted code flooding PRs

Solutions:

- Tag AI-generated code for special scrutiny,

- Share the AI prompt with reviewers,

- Set senior-review thresholds for AI-heavy submissions.
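
The solutions above could be encoded as a small routing rule. This is a sketch under assumptions: the `PullRequest` fields, the 50% threshold, and the requirement strings are all hypothetical and would come from your own team policy.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    total_lines: int     # lines changed in the PR
    ai_lines: int        # lines the author tagged as AI-generated
    prompt_shared: bool  # whether the AI prompt is attached for reviewers


# Hypothetical policy value: above this share of AI-written lines,
# a senior reviewer must sign off.
SENIOR_REVIEW_THRESHOLD = 0.5


def review_requirements(pr: PullRequest) -> list[str]:
    """Return the extra review steps this PR needs under the sketched policy."""
    requirements = []
    ai_share = pr.ai_lines / pr.total_lines if pr.total_lines else 0.0
    if pr.ai_lines > 0:
        requirements.append("tag: ai-generated")
        if not pr.prompt_shared:
            requirements.append("attach the AI prompt")
    if ai_share > SENIOR_REVIEW_THRESHOLD:
        requirements.append("senior review")
    return requirements


print(review_requirements(PullRequest(total_lines=200, ai_lines=150, prompt_shared=False)))
# ['tag: ai-generated', 'attach the AI prompt', 'senior review']
```

The rule relies on authors tagging AI-written lines honestly, which is why it works best alongside a culture where disclosure is expected.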

---

Best Practices for AI-Generated Code

Treat AI output like intern code:

- Apply rigorous human review.

- Strengthen automated testing & edge case coverage.

- Enforce style & architecture standards.

- Refine prompts iteratively for better output.

- Understand AI’s strengths & limits before trusting results.

---

Verification Checklist

- Never merge what you don’t understand.

- Don’t trust — verify: cross-check against requirements.

- Test first — especially for AI-generated changes.

- Use static analysis/linting to enforce patterns & catch issues.

- Integrate security scanning to trap common vulnerabilities.
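
"Test first" becomes concrete when the edge-case tests exist before the draft is accepted. The example below is illustrative only: `chunk` is a hypothetical helper standing in for an AI-drafted function, and the tests use Python's standard `unittest` module.

```python
import unittest


def chunk(items: list, size: int) -> list[list]:
    """Split items into consecutive lists of at most `size` elements."""
    if size <= 0:
        raise ValueError("size must be a positive integer")
    return [items[i:i + size] for i in range(0, len(items), size)]


class ChunkEdgeCases(unittest.TestCase):
    def test_happy_path(self):
        self.assertEqual(chunk([1, 2, 3, 4], 2), [[1, 2], [3, 4]])

    def test_empty_input(self):
        self.assertEqual(chunk([], 3), [])

    def test_size_larger_than_input(self):
        self.assertEqual(chunk([1, 2], 5), [[1, 2]])

    def test_uneven_split(self):
        self.assertEqual(chunk([1, 2, 3], 2), [[1, 2], [3]])

    def test_non_positive_size_is_rejected(self):
        # Without the explicit guard, range() would raise on size 0 and
        # negative sizes would silently return an empty list.
        with self.assertRaises(ValueError):
            chunk([1, 2], 0)
```

Run the tests with `python -m unittest`. Only the first test covers the happy path. The other four are the kind a quick glance at polished-looking output tends to skip.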

---

AI Self-Review Before Peer Review

Ask the AI to critique its own work before PR submission:

> “What potential issues do you see in this code?”

Use this as a first-pass filter, then bring a cleaner draft to human reviewers.

---

Break Down Large AI Changes

- Treat giant AI diffs like spike solutions: throwaway explorations that you then reimplement in smaller, clearer commits.

- Avoid flooding reviewers with unmanageable code.
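
As a planning aid, a large change can be grouped into review-sized batches before it is reimplemented. This is a rough sketch: `plan_commits` and the 400-line budget are assumptions, and the input is a hypothetical mapping of file path to changed-line count.

```python
def plan_commits(file_changes: dict[str, int], max_lines: int = 400) -> list[list[str]]:
    """Greedily group files into batches of at most `max_lines` changed lines.

    Paths are sorted so files in the same directory tend to land together.
    A single file larger than the budget still gets its own batch.
    """
    batches = []
    current = []
    current_size = 0
    for path, changed in sorted(file_changes.items()):
        if current and current_size + changed > max_lines:
            batches.append(current)
            current, current_size = [], 0
        current.append(path)
        current_size += changed
    if current:
        batches.append(current)
    return batches


print(plan_commits({"a.py": 300, "b.py": 200, "c.py": 100}))
# [['a.py'], ['b.py', 'c.py']]
```

Grouping by size is only a starting point. A human still decides which changes belong together logically.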

---

Transparency & Documentation

- Label AI contributions in PRs or comments.

- Share prompts used → helps reviewers assess intent alignment.

- Foster psychological safety — disclosure is a sign of diligence, not weakness.
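
Disclosure is easier to keep up when it has a fixed shape. The sketch below checks a PR description for two hypothetical fields, `AI-Assisted:` and `AI-Prompt:`. These field names are assumptions for illustration, not an established convention.

```python
import re

# Hypothetical PR-description fields; the names are assumptions, not a standard.
_FIELD = re.compile(r"^(AI-Assisted|AI-Prompt):[ \t]*(.*)$", re.MULTILINE)


def parse_ai_disclosure(description: str) -> dict[str, str]:
    """Extract the AI disclosure fields from a PR description, if present."""
    return {name: value.strip() for name, value in _FIELD.findall(description)}


def disclosure_problems(description: str) -> list[str]:
    """List what is missing from the disclosure under the sketched convention."""
    fields = parse_ai_disclosure(description)
    problems = []
    if "AI-Assisted" not in fields:
        problems.append("missing 'AI-Assisted: yes/no' line")
    elif fields["AI-Assisted"].lower() == "yes" and not fields.get("AI-Prompt"):
        problems.append("AI-assisted PR should include an 'AI-Prompt:' line")
    return problems


description = (
    "Add retry logic\n\n"
    "AI-Assisted: yes\n"
    "AI-Prompt: Write a retry wrapper with exponential backoff\n"
)
print(disclosure_problems(description))  # []
```

A check like this fits in a PR template or CI step, and it works best as a reminder rather than a penalty.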

---

Team Agreements Around AI Use

A “contract” for AI-generated code should address:

- Accountability: whoever merges owns the code.

- Boundaries: define where AI use is allowed or restricted.

- Transparency: declare AI assistance openly in PRs.

- Review Process: add AI-specific checks to review checklists.

- Skill Development: schedule work without AI to keep abilities sharp.
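
The "Boundaries" clause can be made checkable. This minimal sketch flags changed files that fall inside areas a team has declared off-limits for AI-generated code. The patterns and the function name are hypothetical, and matching uses `fnmatchcase` from Python's standard `fnmatch` module, where `*` also matches `/`.

```python
from fnmatch import fnmatchcase

# Hypothetical restricted areas; a real team would choose its own.
RESTRICTED_PATTERNS = ("src/auth/*", "src/payments/*", "*.sql")


def ai_restricted_files(changed_paths, patterns=RESTRICTED_PATTERNS):
    """Return the changed paths that match any restricted pattern."""
    return [
        path for path in changed_paths
        if any(fnmatchcase(path, pattern) for pattern in patterns)
    ]


changed = ["src/auth/login.py", "src/ui/button.py", "db/migrate.sql"]
print(ai_restricted_files(changed))
# ['src/auth/login.py', 'db/migrate.sql']
```

A non-empty result could block the merge, or simply route the PR to a reviewer who owns that area.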

---

Conclusion – Keep Your Hands on the Wheel

AI coding tools are here to stay — and they excel at draft generation.

But unchecked automation is dangerous.

To maximize AI’s value while preserving quality:

- Treat outputs as drafts,

- Apply human judgment,

- Keep reading and thinking through code,

- Maintain critical reasoning skills.

Final rule: Whether code comes from an intern, an AI, or an experienced engineer, it must be understood to be trusted.
