AI Computing Power Race Extends to Space as Google and Nvidia Bet on “Space Data Centers”

Space-Based AI Computing Power: Google’s Project Suncatcher and NVIDIA’s H100 in Orbit

Introduction

In the AI era, computing power is becoming increasingly scarce.

For tech giants, the shortage can be more severe than expected. Reports suggest, for example, that Microsoft ran so short of data center power that stacks of NVIDIA GPUs sat idle because there was no electricity to run them.

To tackle this “computing power famine,” Google has proposed a bold initiative: the AI Computing Power Moonshot, codenamed Project Suncatcher.

---

Google’s Project Suncatcher

Overview

Google’s plan: launch TPUs into space — ultimately building a highly scalable, space-based AI computing cluster.

In essence, this means placing data centers directly in orbit. The approach resembles SpaceX’s Starlink, except that the constellation delivers computing power rather than internet coverage.

Tech Leaders React:

- Elon Musk replied to Sundar Pichai’s post on X: “Great idea.”

- Pichai responded: “Thanks to SpaceX’s tremendous progress in launch technology!”

---

Why Move Computing to Space?

Travis Beals, Senior Director of Paradigms of Intelligence at Google, explains:

- Scalability: Long-term, space may be the most scalable path for computing capacity.

- Resource Efficiency: Less consumption of Earth’s land, water, and other finite resources.

Earth’s Limits

- Power: By 2030, global data centers could consume as much electricity as all of Japan (IEA prediction).

- Water Cooling: A 1 MW data center can consume the daily water usage of thousands of people (World Economic Forum).

- Cooling demands are intensifying with AI workloads.

Beals sums it up:

> “In the future, space may be the best place to expand AI computing capacity.”

---

The Energy Advantage in Orbit

Google’s paper, Towards a Future Space-Based, Highly Scalable AI Infrastructure System Design, outlines key benefits:

- Solar Energy Abundance:

  - The Sun emits 3.86 × 10²⁶ watts, more than 100 trillion times humanity’s total electricity production.

  - Near-continuous sunlight in optimal orbits.

  - Up to 8× the annual energy yield of ground-based solar panels at mid-latitude.

- Minimal Cooling Resources:

  - In vacuum, waste heat is radiated to deep space, so cooling requires virtually no water.

  - Zero direct CO₂ emissions.
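The solar-output comparison in the list above can be sanity-checked with a few lines of arithmetic. The sketch below is only an order-of-magnitude estimate: the figure of roughly 30,000 TWh of electricity generated worldwide per year is an assumption added for illustration, not a number from the article.

```python
# Order-of-magnitude check: solar output vs. human electricity production.
SUN_OUTPUT_W = 3.86e26  # total solar output in watts, as quoted in the text

# Assumption (not from the article): roughly 30,000 TWh generated per year.
WORLD_ELECTRICITY_TWH_PER_YEAR = 30_000
HOURS_PER_YEAR = 365.25 * 24


def average_power_watts(twh_per_year: float) -> float:
    """Convert annual energy in TWh into average power in watts."""
    return twh_per_year * 1e12 / HOURS_PER_YEAR


world_avg_w = average_power_watts(WORLD_ELECTRICITY_TWH_PER_YEAR)
ratio = SUN_OUTPUT_W / world_avg_w

print(f"Average world electrical power: {world_avg_w:.2e} W")
print(f"Sun output / world electricity: {ratio:.1e}")
```

Under that assumption the ratio comes out on the order of 10¹⁴, that is, about a hundred trillion.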

Differentiating from Past Space Solar Concepts

- Traditional challenge: Sending generated power back to Earth — costly and inefficient.

- Google’s twist: Compute in space instead of sending energy down, greatly boosting efficiency.

---

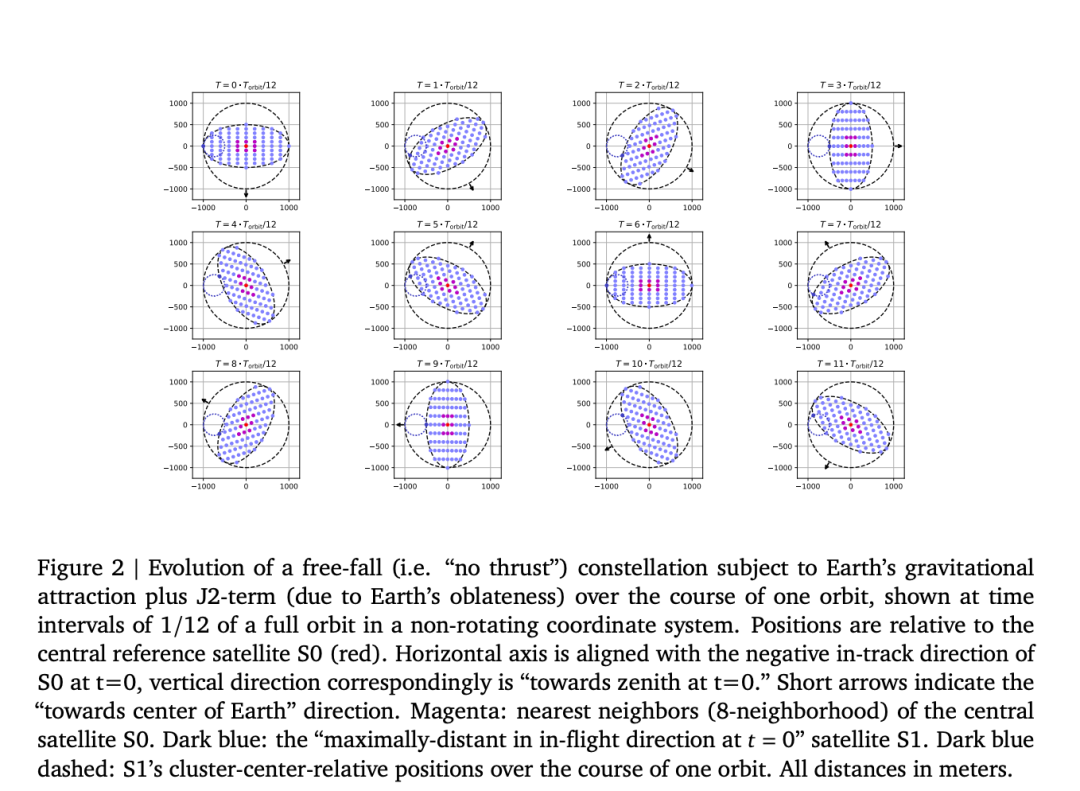

Satellite Cluster Design

- Large solar arrays power satellite-bound TPUs.

- Free-space optical links interconnect them into a distributed computing network.

- Requires tight formation flying for low-latency, high-bandwidth connections.

- Model example: 81 satellites within a 1 km radius.

- Machine learning optimizes constellation control.
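To get a feel for why tight formation flying matters, the sketch below estimates neighbour spacing and light-travel delay for the 81-satellite, 1 km radius example. The flat 9 × 9 square lattice is a hypothetical layout chosen purely for illustration. The article does not describe the actual geometry.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458
CLUSTER_RADIUS_M = 1_000  # from the text
NUM_SATELLITES = 81       # from the text


def grid_spacing_m(num_sats: int, radius_m: float) -> float:
    """Neighbour spacing for a square n x n lattice inscribed in a circle.

    Assumption: a flat square lattice whose corners touch the circle.
    """
    side_count = math.isqrt(num_sats)
    if side_count < 2 or side_count * side_count != num_sats:
        raise ValueError("num_sats must be a perfect square of at least 4")
    side_length = radius_m * math.sqrt(2)  # side of the inscribed square
    return side_length / (side_count - 1)


def one_way_delay_us(distance_m: float) -> float:
    """Light-travel time over a free-space link, in microseconds."""
    return distance_m / SPEED_OF_LIGHT_M_S * 1e6


spacing = grid_spacing_m(NUM_SATELLITES, CLUSTER_RADIUS_M)
neighbour_delay = one_way_delay_us(spacing)
diameter_delay = one_way_delay_us(2 * CLUSTER_RADIUS_M)

print(f"Neighbour spacing: {spacing:.1f} m")
print(f"Neighbour one-way delay: {neighbour_delay:.2f} us")
print(f"Across-cluster one-way delay: {diameter_delay:.2f} us")
```

In this toy layout neighbours sit a couple of hundred metres apart, so propagation delay between them stays well under a microsecond.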

Launch Timeline: First two satellites targeted for 2027.

---

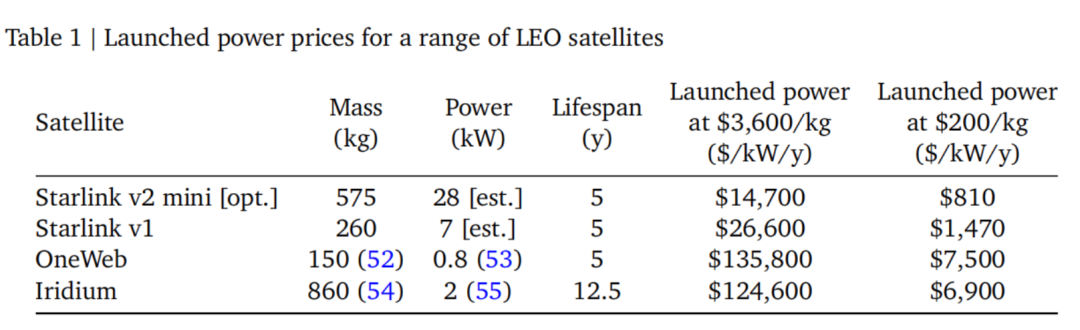

Launch Cost Factors

- Affordability is key to commercialization.

- Based on learning-curve models, Google projects that LEO launch costs could fall below $200/kg by the mid-2030s.
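A learning-curve projection of this kind can be sketched with Wright's law, under which cost falls by a fixed fraction each time cumulative output doubles. The sketch below is a generic illustration, not Google's actual model. The starting price of $1,500/kg and the 20% learning rate are hypothetical inputs.

```python
import math


def doublings_needed(start_cost: float, target_cost: float,
                     learning_rate: float) -> float:
    """Doublings of cumulative output needed to reach target_cost.

    Wright's law: each doubling multiplies cost by (1 - learning_rate).
    """
    if not 0 < learning_rate < 1:
        raise ValueError("learning_rate must be between 0 and 1")
    if target_cost >= start_cost:
        return 0.0
    return math.log(target_cost / start_cost) / math.log(1 - learning_rate)


def cost_after(start_cost: float, doublings: float,
               learning_rate: float) -> float:
    """Cost per kg after a given number of doublings."""
    return start_cost * (1 - learning_rate) ** doublings


# Hypothetical inputs, chosen only to illustrate the calculation.
START_COST_PER_KG = 1_500.0
TARGET_COST_PER_KG = 200.0  # threshold quoted in the text
LEARNING_RATE = 0.20

n = doublings_needed(START_COST_PER_KG, TARGET_COST_PER_KG, LEARNING_RATE)
print(f"Doublings of cumulative launched mass needed: {n:.1f}")
print(f"Cost after that many doublings: "
      f"${cost_after(START_COST_PER_KG, n, LEARNING_RATE):.0f}/kg")
```

With these illustrative inputs, cumulative launched mass would need to double about nine times. The result is sensitive to both the starting price and the assumed learning rate.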

---

NVIDIA’s H100 GPU Goes to Space

NVIDIA hardware is also moving into space-based computing.

SpaceX Falcon 9 Launch

On Nov 2, an NVIDIA H100 GPU was launched into very low Earth orbit (~350 km altitude) aboard the Starcloud-1 satellite on a SpaceX Falcon 9.

Mission duration: 3 years.

Significance

- First time a data center-class GPU has been placed in orbit.

- Marks the official beginning of “space computing power” in the AI era.

---

Why H100 in Space?

The H100:

- Performance: roughly 2–3× faster than the A100 on a single GPU, and up to 9× faster when deployed in clusters.

- Widely used by OpenAI, Google, Meta.

- In orbit: tasked with Earth observation analysis & running large language models (LLMs).

---

On-Orbit Intelligence Benefits

- Real-Time Processing:

  - SAR satellites can now process imagery directly in orbit, transmitting only analysis results instead of hundreds of GBs of raw data.

- Efficiency Gains:

  - Less bandwidth, lower energy usage, faster actionable insights.
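The bandwidth argument can be made concrete with a back-of-the-envelope downlink calculation. All three numbers below (a 300 GB raw scene, a 1 MB analysis result, and a 1 Gbit/s downlink) are hypothetical values picked to match the "hundreds of GBs" scale mentioned above. They are not measured figures from any mission.

```python
def downlink_seconds(payload_bytes: float, link_bits_per_second: float) -> float:
    """Time to transmit a payload over a link, ignoring protocol overhead."""
    if link_bits_per_second <= 0:
        raise ValueError("link rate must be positive")
    return payload_bytes * 8 / link_bits_per_second


# Hypothetical values for illustration only.
RAW_SCENE_BYTES = 300e9  # raw SAR data, "hundreds of GBs"
RESULT_BYTES = 1e6       # compact analysis result
LINK_BPS = 1e9           # 1 Gbit/s downlink

raw_s = downlink_seconds(RAW_SCENE_BYTES, LINK_BPS)
result_s = downlink_seconds(RESULT_BYTES, LINK_BPS)
reduction = RAW_SCENE_BYTES / RESULT_BYTES

print(f"Raw data downlink: {raw_s / 60:.0f} min")
print(f"Result downlink:   {result_s * 1000:.0f} ms")
print(f"Data volume reduced by a factor of {reduction:,.0f}")
```

Under these assumptions the raw scene would occupy the link for tens of minutes, while the processed result takes milliseconds.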

---

The Environmental Factor

Starcloud estimates:

- Lifetime carbon emissions of space data centers can be 10× lower than terrestrial equivalents.

- Reduced reliance on cooling and physical infrastructure.

---

The Road Ahead

Launch Cost Trends:

SpaceX’s Starship could drop launch costs from ~$500/kg to $10–$150/kg.

Starcloud’s Future Plans:

- 2027: Launch a 100 kW satellite.

- Early 2030s: Build a 40 MW orbital data center — costs competing with Earth-based facilities.

---

Public Reactions

Online discussion has split into three camps:

- Admiration Camp:

  - “AI goes to space”

  - The H100 as a “stellar GPU” milestone.

- Skeptic Camp:

  - “Launch costs & bandwidth bottlenecks”

  - Doubts about commercial feasibility.

- Engineering Camp:

  - “Tech demo stage”: real deployments are likely a decade away.

---


> “Space isn’t just for stars anymore.” — NVIDIA

---

References & Further Reading

- Google Paper: Suncatcher Project PDF

- Tweets:

  - Sundar Pichai

  - Elon Musk

- Article:

  - IEEE Spectrum: NVIDIA H100 in Space