AI in Action: 6 Agents to Handle Complex Commands and Tool Overload

Introduction

Clarifying "Intelligent Creation"

Here, “intelligent creation” refers specifically to test-data generation during system integration testing, in particular the integration joint-debugging scenario, where we adopted a multi-agent collaborative approach.

Data generation in integration testing is a typical AI application, involving:

- Rich, varied user language

- Complex business contexts

- Precise tool execution requirements

Initially, we used a single-agent model. As tools and scenarios grew more complex, we evolved into a multi-agent model consisting of:

- Intent recognition

- Tool engines

- Reasoning execution

This document compares the two approaches:

- Single-agent for simpler generation tasks

- Multi-agent for complex workflows

---

AI Data Generation

Key Challenges

- Accurate instruction extraction from diverse, complex queries

- Target tool identification among thousands of possible tools

- Toolchain assembly for compound tasks

Core Difficulty

Bridging the semantic and functional gap between users and tool authors.

Approach Overview

- Single-Agent: One reasoning agent handles everything, supported by robust tool governance and prompt engineering.

- Multi-Agent: Split responsibilities into specialized agents, with engineering solutions replacing agents where possible to improve speed.

---

Intelligent Creation 1.0 — Single-Agent Model

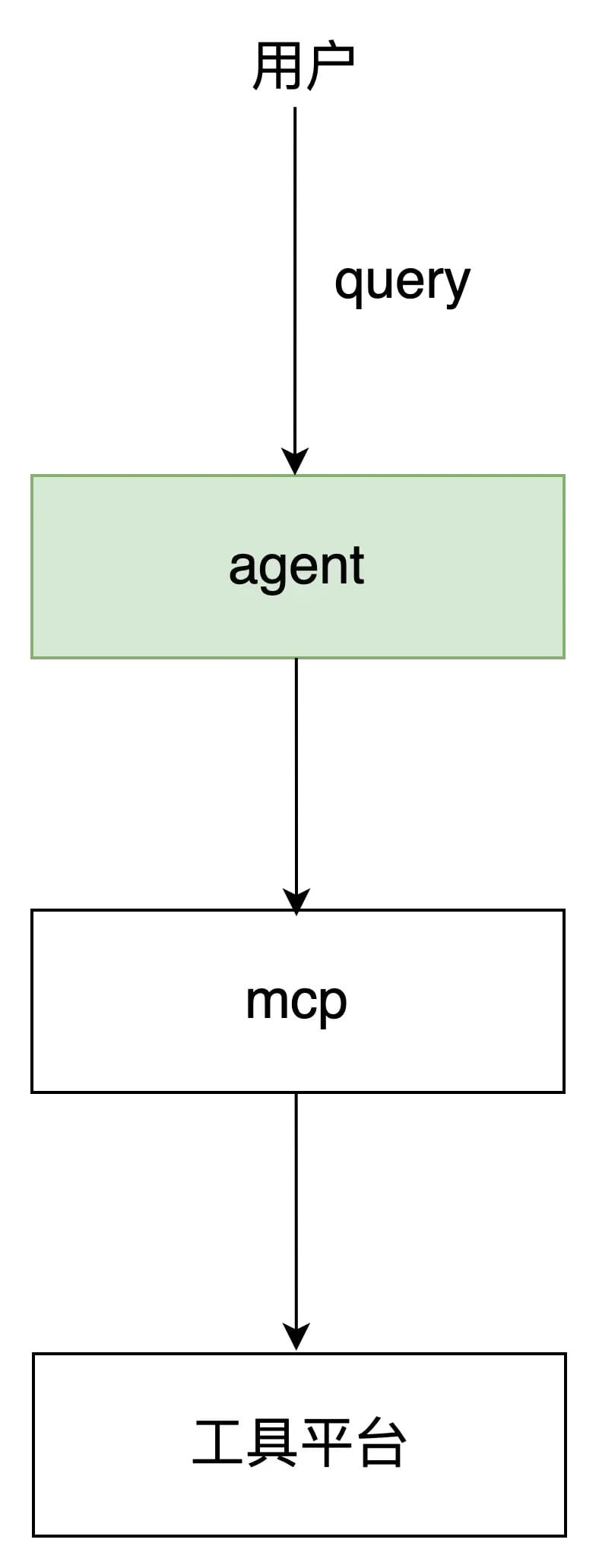

Core Idea

Pass user queries + tool lists to the LLM, let it decide, then execute the tools.

When MCP (Model Context Protocol) emerged, this became the natural path forward.
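The core idea can be sketched in a few lines. This is a minimal illustration, not the production agent: the `TOOLS` registry, the `stub_llm` stand-in for a real model call, and the `run_single_agent` function are all hypothetical names introduced here.

```python
from typing import Callable

# Hypothetical tool registry: name -> (description, implementation).
TOOLS: dict[str, tuple[str, Callable[..., str]]] = {
    "create_address": (
        "Create a delivery address for a test user",
        lambda user_id: f"address created for {user_id}",
    ),
    "create_product": (
        "Create a test product in a shop",
        lambda shop_id: f"product created in {shop_id}",
    ),
}


def stub_llm(query: str, catalog: dict[str, str]) -> dict:
    """Stand-in for a real LLM call; returns a tool choice plus arguments."""
    if "address" in query.lower() and "create_address" in catalog:
        return {"tool": "create_address", "arguments": {"user_id": "u1"}}
    return {"tool": None, "arguments": {}}


def run_single_agent(query: str, tools: dict, llm: Callable) -> str:
    # 1. Hand the model the query together with every tool description.
    catalog = {name: description for name, (description, _) in tools.items()}
    decision = llm(query, catalog)
    # 2. Refuse to improvise when the model names no known tool.
    name = decision.get("tool")
    if name not in tools:
        return "No suitable tool found."
    # 3. Execute the chosen tool with the model-supplied arguments.
    _, implementation = tools[name]
    return implementation(**decision.get("arguments", {}))
```

Because the whole catalog is passed on every call, the cost of this design grows with the number of tools, which is the pressure the later sections respond to.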

Process Flow:

Agent responsibilities include:

- Memory management

- Prompt engineering

- LLM interaction

---

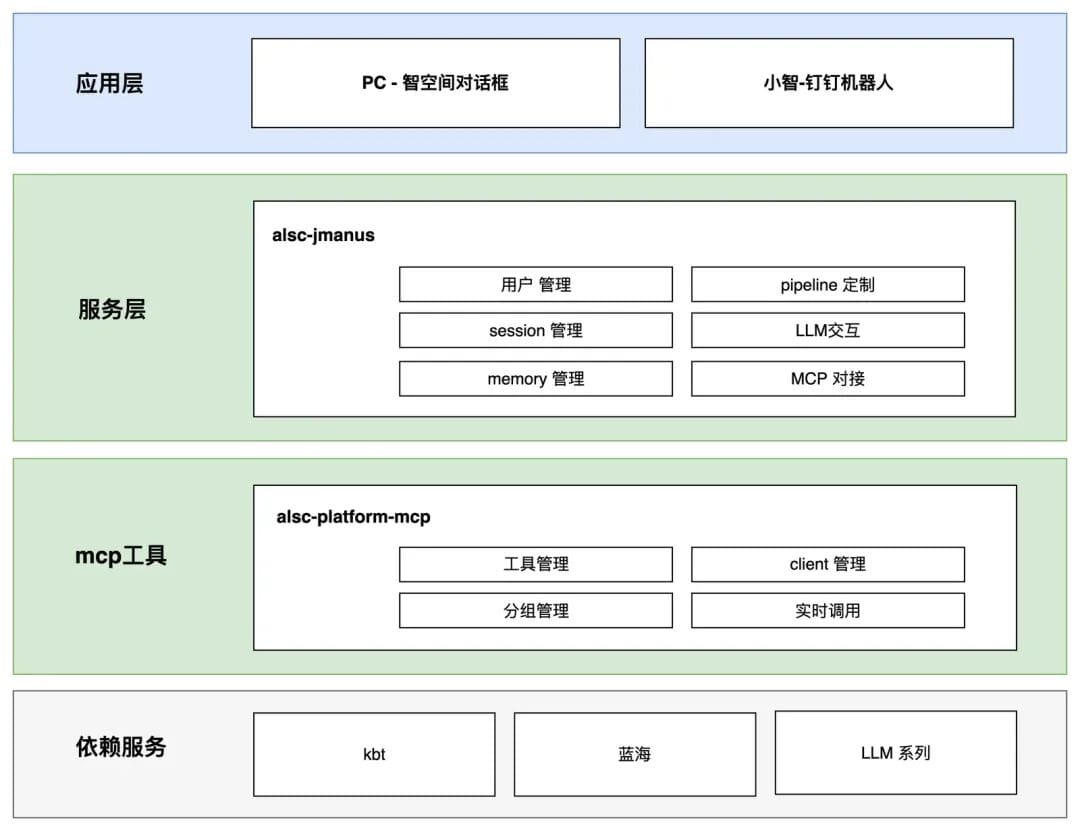

Architecture Layers

Application Layer

- PC dialogue box and XiaoZhi (DingTalk bot)

Service Layer

- alsc-jmanus: Hosts both agents and pipelines. Pipelines connect engineering modules with agents for specific scenarios — e.g., AI data generation.

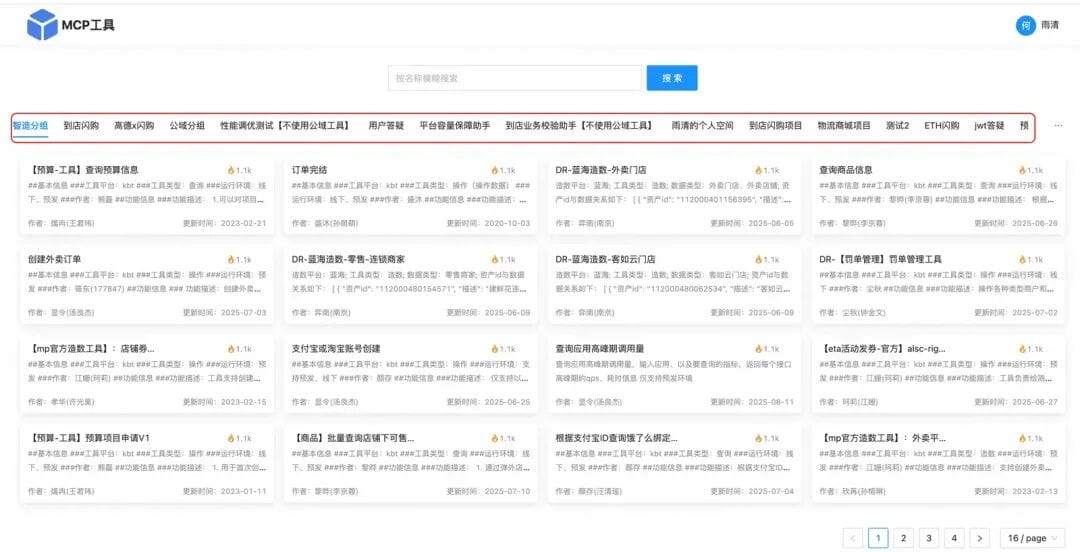

MCP Tool Layer

- alsc-mcp-platform:

  - Over 7,000 local tools, which makes preventing tool overload in LLM interactions essential.

  - Tools are segregated into:

    - Public domain (stable, controlled)

    - Private domain (flexible, project-specific)
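One way to picture the public/private split is as a visibility filter applied before any tool reaches the LLM. The sketch below is an assumption about how such a split could work, not a description of alsc-mcp-platform; `McpTool`, its fields, and `visible_tools` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class McpTool:
    name: str
    domain: str                    # "public" or "private"
    project: Optional[str] = None  # owning project, for private tools only


def visible_tools(pool: list[McpTool], project: str) -> list[McpTool]:
    """Keep public tools plus the private tools owned by the given project."""
    return [
        tool
        for tool in pool
        if tool.domain == "public"
        or (tool.domain == "private" and tool.project == project)
    ]


EXAMPLE_POOL = [
    McpTool("create_address", "public"),
    McpTool("seed_promo_data", "private", project="proj-a"),
    McpTool("reset_inventory", "private", project="proj-b"),
]
```

Calling `visible_tools(EXAMPLE_POOL, "proj-a")` would leave out `reset_inventory`, so a project only ever sees its own experimental tools alongside the shared, stable ones.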

MCP Tool Pool:

---

Challenges Post-Build

- Build time: 2 months

- Agent debugging: 6 months

- Major focus: Prompt engineering, Tool governance, Query specification

---

Prompt Engineering Insights

Prompt Goals:

- Restrict LLM to tool finding/execution

- Inject necessary contextual info (environment, platform, etc.)

- Define guiding principles and examples

Lessons Learned:

- Do not impose output styling on reasoning agents; it interferes with reasoning

- When principles alone fail, add examples; this resolves issues faster

- Examples carry hidden attributes that may bias the model

- Abstracting models and processes improves accuracy

---

Agent Role Definition: XiaoZhi

Role: Intelligent robot in software development, specialized in food delivery e-commerce and system architecture design.

Mission: Assist engineers in constructing test data and running tools.

---

Background Knowledge

Environment

- Offline (daily environment)

- Pre-release

Tool Platforms

- Blue Ocean: Asset ID-based creation only; environment fixed per asset

- Others: Diverse functions; varied success rates

Responsibilities

- Understand user intent

- Identify & confirm tools

- Execute & return results

---

Operating Principles

- Strict intent matching to tool function

- Validate environment before execution

- No improvisation if tool missing

- Verify parameters before execution
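In the original system these principles are prompt instructions, but the environment and parameter checks can also be expressed as a deterministic pre-flight step. The following is a sketch under assumptions: the tool dictionary layout, the environment labels, and the `preflight` function are hypothetical.

```python
# Environment labels assumed from the "Background Knowledge" section.
KNOWN_ENVIRONMENTS = {"daily", "pre-release"}

# Hypothetical tool description used only for illustration.
EXAMPLE_TOOL = {
    "name": "create_address",
    "environments": {"daily"},
    "required_params": ["user_id", "city"],
}


def preflight(tool: dict, environment: str, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the call may proceed."""
    problems = []
    # Validate the environment before execution.
    if environment not in KNOWN_ENVIRONMENTS:
        problems.append(f"unknown environment: {environment}")
    elif environment not in tool["environments"]:
        problems.append(f"{tool['name']} does not run in {environment}")
    # Verify that every required parameter has been supplied.
    missing = [p for p in tool["required_params"] if p not in arguments]
    if missing:
        problems.append("missing parameters: " + ", ".join(missing))
    return problems
```

Returning the problems rather than raising lets the agent report all of them to the user in one reply and ask for the missing values.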

---

Return Requirements

- Friendly, concise language

- List tools before execution

- Handle missing tools gracefully

- Never expose _mcp_ parameters
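Two of these requirements, listing tools before execution and hiding `_mcp_` parameters, lend themselves to a small formatting helper. This sketch assumes that internal parameters are the ones whose names start with `_mcp_`; that convention and both function names are assumptions made for illustration.

```python
INTERNAL_PREFIX = "_mcp_"  # assumed naming convention for internal parameters


def visible_arguments(arguments: dict) -> dict:
    """Drop internal parameters so they never reach the user-facing reply."""
    return {
        key: value
        for key, value in arguments.items()
        if not key.startswith(INTERNAL_PREFIX)
    }


def announce_plan(tool_calls: list[tuple[str, dict]]) -> str:
    """List the tools that are about to run, or explain that none was found."""
    if not tool_calls:
        return "I could not find a tool for this request."
    lines = ["I will run the following tools:"]
    for index, (name, arguments) in enumerate(tool_calls, start=1):
        shown = ", ".join(
            f"{key}={value}" for key, value in visible_arguments(arguments).items()
        )
        lines.append(f"{index}. {name}({shown})")
    return "\n".join(lines)
```

Doing the redaction in code rather than in the prompt means a model slip cannot leak an internal parameter.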

---

Example Dialogues

Example 1: Product data request

Example 2: Address creation workflow

Examples 3 & 4: Product creation capability check

Examples 5-7: Multi-turn interactions with tool reuse

---

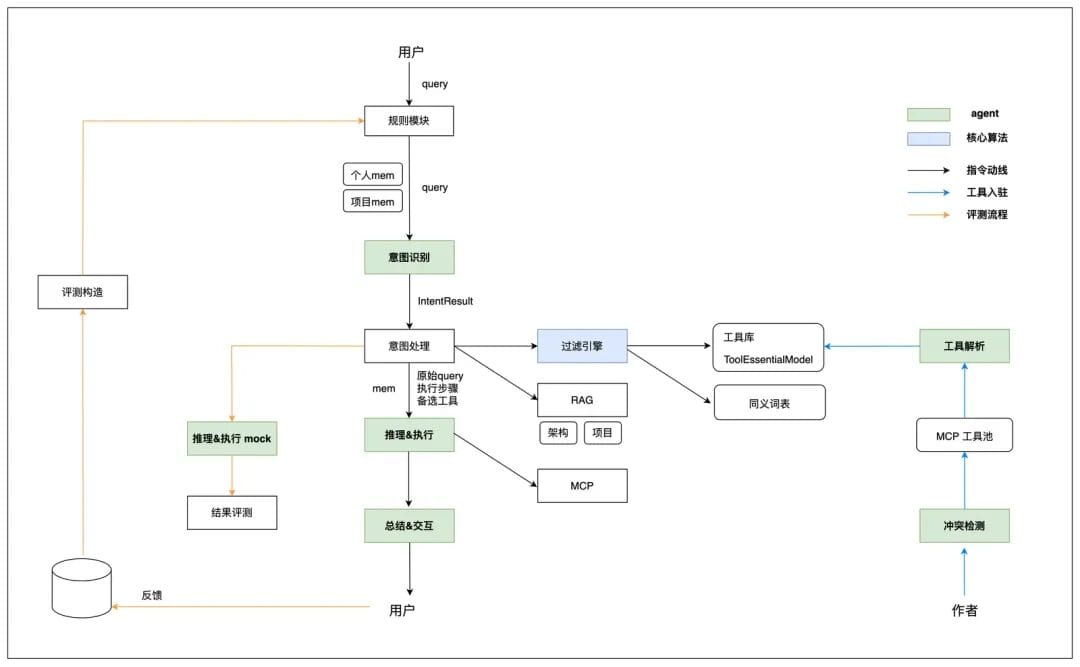

Intelligent Creation 2.0 — Multi-Agent Model

Problems in v1.0

- Inaccuracy on multi-step instructions

- Slowness with large tool sets

---

Multi-Agent Solution

Split into:

- Intent Recognition Agent (8 types)

- Tool Engine (Real-time Filter + Tool Parsing Agent)

- Reasoning & Execution Agent (reverse reasoning + forward execution)

- Summary & Interaction Agent

---

Logical Architecture

---

Intent Recognition

- 8 intent types: Data creation, Data operation, Query, Validation, Tool operation, Tool consultation, R&D action, Other

- IntentResult Model: Structured parse of who, where, conditions, action, object
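A structured intent of this kind might look like the sketch below. Only the eight intent types and the five slots (who, where, conditions, action, object) come from the text; the field types, the `target_object` name (chosen to avoid shadowing Python's built-in `object`), and the example values are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class IntentType(Enum):
    DATA_CREATION = "data creation"
    DATA_OPERATION = "data operation"
    QUERY = "query"
    VALIDATION = "validation"
    TOOL_OPERATION = "tool operation"
    TOOL_CONSULTATION = "tool consultation"
    RND_ACTION = "R&D action"
    OTHER = "other"


@dataclass
class IntentResult:
    intent_type: IntentType
    who: str            # the account or role the data is created for
    where: str          # the target environment
    action: str         # the verb of the request
    target_object: str  # the business object being acted on
    conditions: dict = field(default_factory=dict)  # extra constraints


# Hypothetical parse of: "Create a product with stock 10 for shop 123 in daily".
example = IntentResult(
    intent_type=IntentType.DATA_CREATION,
    who="shop 123",
    where="daily",
    action="create",
    target_object="product",
    conditions={"stock": 10},
)
```

Once a free-form query is reduced to these slots, downstream components can match on fields instead of re-reading the user's wording.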

---

Tool Engine

Aim: Reduce the candidate tool set to fewer than ten tools so the LLM can reason efficiently.

Components

- Tool Parsing → Build ToolEssentialModel

- Filtering Engine → Match against IntentResult
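The filtering step can be sketched as a field-by-field match between a parsed tool and a parsed intent. The source does not list the fields of `ToolEssentialModel`, so the ones below are assumptions, as are the trimmed-down `IntentResult`, the `filter_tools` function, and the default limit of nine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentResult:
    # Trimmed to the slots this sketch matches on.
    action: str
    target_object: str
    where: str


@dataclass(frozen=True)
class ToolEssentialModel:
    # Assumed fields: what the tool does, to what, and in which environments.
    name: str
    action: str
    target_object: str
    environments: frozenset


def filter_tools(
    intent: IntentResult, tools: list[ToolEssentialModel], limit: int = 9
) -> list[ToolEssentialModel]:
    """Keep tools matching the intent, capped at a single-digit count."""
    matches = [
        tool
        for tool in tools
        if tool.action == intent.action
        and tool.target_object == intent.target_object
        and intent.where in tool.environments
    ]
    return matches[:limit]


EXAMPLE_TOOLS = [
    ToolEssentialModel("create_product", "create", "product", frozenset({"daily"})),
    ToolEssentialModel("create_address", "create", "address", frozenset({"daily"})),
    ToolEssentialModel("query_product", "query", "product", frozenset({"daily", "pre-release"})),
]
```

Exact matching is the simplest possible rule; a real filter would likely need synonyms or similarity scoring to bridge the vocabulary gap between users and tool authors described earlier.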

---

Reasoning Execution

- Reverse Reasoning: Find final tool → trace dependencies

- Forward Execution: Execute in dependency order
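The two phases can be illustrated with a small dependency walk. This sketch assumes each tool declares the values it needs and the single value it produces; the `TOOLS` table, `plan`, and `execute` are hypothetical and stand in for what the reasoning agent does with an LLM.

```python
# Hypothetical tools: each needs some values and produces exactly one.
TOOLS = {
    "create_shop": {"needs": [], "produces": "shop_id",
                    "run": lambda: "shop-1"},
    "create_user": {"needs": [], "produces": "user_id",
                    "run": lambda: "user-1"},
    "create_product": {"needs": ["shop_id"], "produces": "product_id",
                       "run": lambda shop_id: f"product-of-{shop_id}"},
    "create_order": {"needs": ["product_id", "user_id"], "produces": "order_id",
                     "run": lambda product_id, user_id: f"order({product_id},{user_id})"},
}


def plan(goal_tool: str, tools: dict, known=frozenset()) -> list[str]:
    """Reverse reasoning: start at the final tool and trace its dependencies."""
    producers = {spec["produces"]: name for name, spec in tools.items()}
    order: list[str] = []
    visiting: set[str] = set()

    def visit(name: str) -> None:
        if name in order:
            return
        if name in visiting:
            raise ValueError(f"dependency cycle at {name}")
        visiting.add(name)
        for need in tools[name]["needs"]:
            if need in known:
                continue  # the user already supplied this value
            if need not in producers:
                raise LookupError(f"no tool produces {need}")
            visit(producers[need])
        visiting.discard(name)
        order.append(name)  # appended only after all its dependencies

    visit(goal_tool)
    return order


def execute(order: list[str], tools: dict, context=None) -> dict:
    """Forward execution: run tools in dependency order, passing results on."""
    context = dict(context or {})
    for name in order:
        spec = tools[name]
        inputs = {need: context[need] for need in spec["needs"]}
        context[spec["produces"]] = spec["run"](**inputs)
    return context
```

For example, `plan("create_order", TOOLS)` orders the shop, product, and user tools ahead of `create_order`, while passing `known={"user_id"}` drops `create_user` from the plan because that value is already available.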

---

Final Recommendations

Single-Agent:

Best when:

- Tool set is small and stable

- Users and tool authors describe things in closely matching language

Multi-Agent:

Best when:

- Large, diverse tool pool

- Multiple user groups

- Caveat: complex to build and requires careful management

---

Key Takeaways

- Core challenge: Define principles, rules, and flows explicitly for LLMs

- Often, structuring user queries greatly improves results

- Multi-agent architecture is scalable but introduces complexity

- Agent design is a continuous cycle of adjustment and iteration

---
