Airbnb’s Mussel V2: Next-Generation Key-Value Store Unifying Stream and Batch Processing

Airbnb’s engineering team has launched Mussel v2, a complete overhaul of its internal key-value engine. This new system is designed to:

- Unify streaming and bulk ingestion

- Simplify operations

- Scale to much larger workloads

Reportedly, Mussel v2 can:

- Sustain 100,000+ streaming writes per second

- Support tables beyond 100TB

- Keep p99 read latency under 25ms

- Bulk ingest tens of terabytes of data

All of this allows product teams to focus on innovation rather than on pipeline management.

---

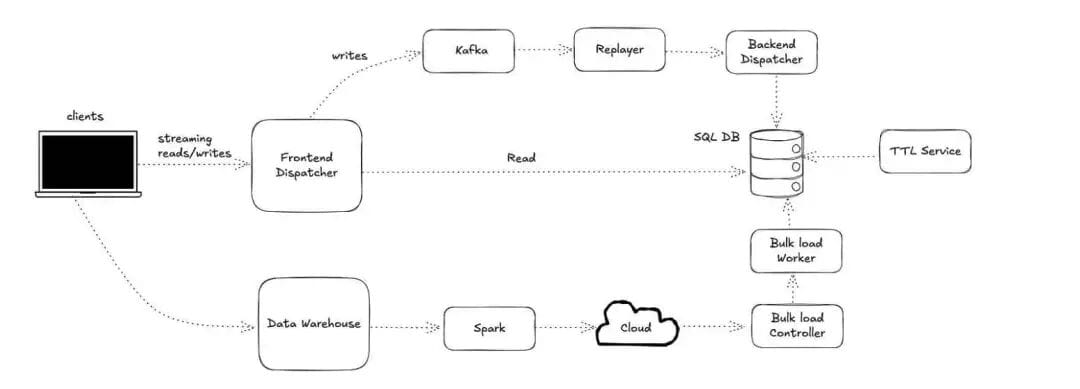

Limitations of Mussel v1

The earlier Mussel v1 powered internal Airbnb data services but had key limitations:

- Static hash partition design on Amazon EC2

- Managed via Chef scripts

- Separate batch and streaming paths → higher overhead, harder consistency

- Increasing difficulty as data volume and integrations grew

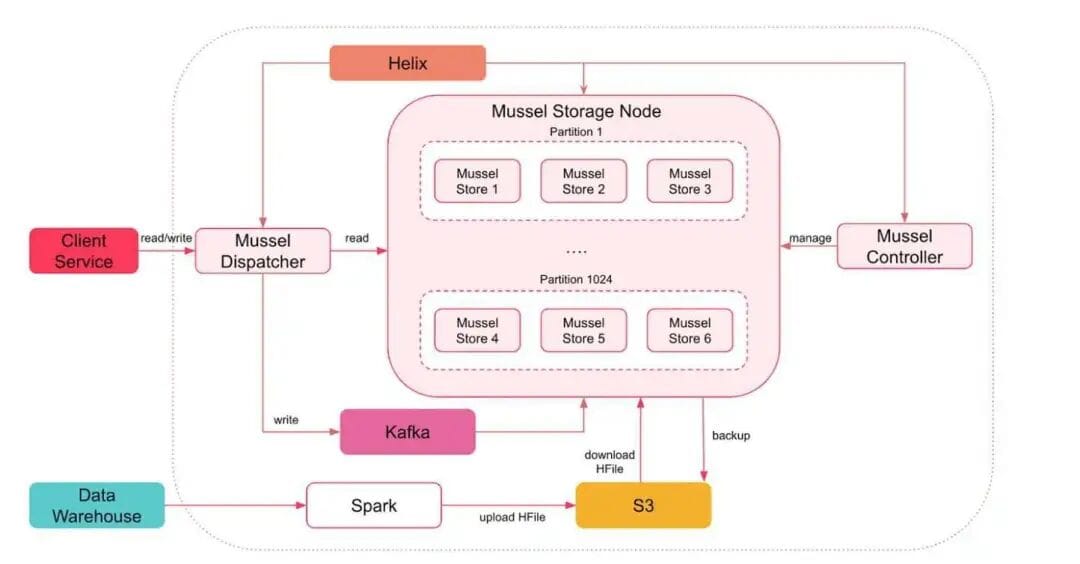

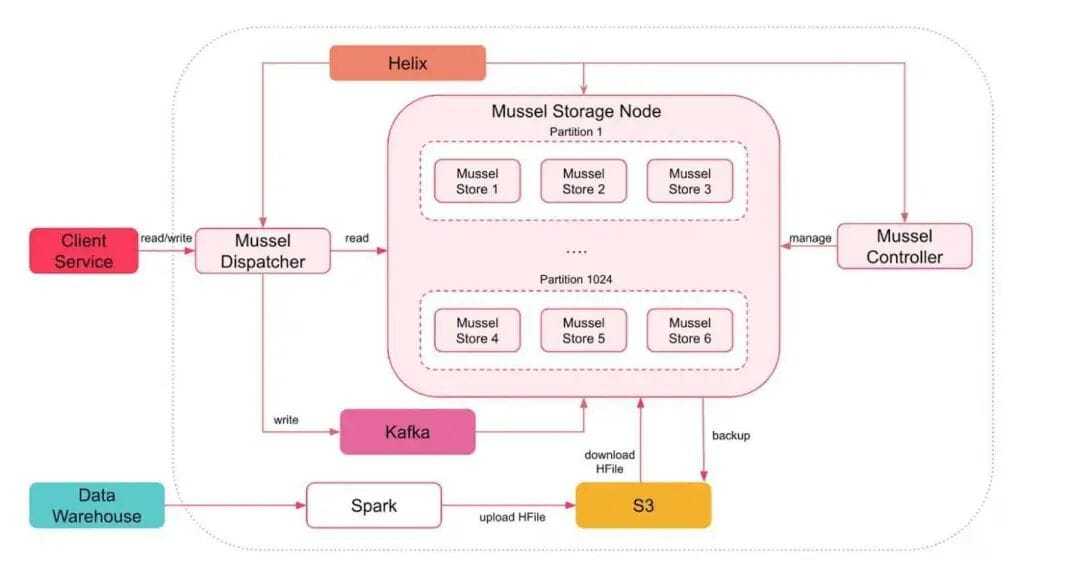

Mussel V1 architecture (Image source: Airbnb Engineering Blog)

---

Design Innovations in Mussel v2

Mussel v2 integrates a NewSQL backend with a Kubernetes-native control plane, offering:

- Elasticity of object storage

- Responsiveness of a low-latency cache

- Operability of a service mesh

Key Features

- Dynamic range sharding with pre-splitting to reduce hotspots

- Namespace-level quotas and dashboards for cost transparency

- Stateless Dispatcher layer that scales horizontally:

  - Routes API calls

  - Handles retries

  - Supports dual-write & shadow-read for migrations
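
To make the pre-splitting idea concrete, here is a minimal sketch of range sharding in which split points are chosen up front from a sample of keys, so that each shard starts with a similar share of the data. The function names (`presplit_points`, `shard_for`) and the quantile-based choice of split keys are illustrative assumptions, not details from Airbnb's implementation.

```python
import bisect

def presplit_points(sample_keys, num_shards):
    """Pick num_shards - 1 split keys at evenly spaced quantiles of a key sample.

    Hypothetical helper: the real system's split policy is not described
    in the article.
    """
    keys = sorted(set(sample_keys))
    if num_shards < 2 or len(keys) < num_shards:
        return []  # too little data to split; keep a single range
    step = len(keys) / num_shards
    return [keys[int(i * step)] for i in range(1, num_shards)]

def shard_for(key, split_points):
    """Return the index of the range shard that owns `key`.

    Shard i owns keys in [split_points[i-1], split_points[i]).
    """
    return bisect.bisect_right(split_points, key)

sample = [f"user:{i:04d}" for i in range(1000)]
splits = presplit_points(sample, 4)
owner = shard_for("user:0600", splits)
```

Because the ranges are defined by split keys rather than a fixed hash, a shard that becomes hot can later be split again without rehashing the whole table.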

---

Write and Bulk Ingestion Workflows

- Writes

  - Persisted to Kafka for durability

  - Applied to the backend in order by the Replayer and Write Dispatcher

- Bulk loads

  - Loaded from Airbnb’s data warehouse by Airflow jobs

  - Staged in S3

  - Preserve merge-or-replace semantics
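
The article does not spell out what merge and replace mean for a bulk load. The sketch below assumes the common interpretation: merge upserts the staged rows and leaves other keys alone, while replace swaps the table contents for the staged rows. The in-memory dict and the `bulk_load` function are hypothetical stand-ins for the real table and loader.

```python
def bulk_load(table, staged_rows, mode):
    """Return the table contents after applying a staged bulk load.

    table:       dict of current key -> value (left unmodified)
    staged_rows: dict of key -> value read from the staging area
    mode:        "merge" (upsert) or "replace" (swap all contents)
    """
    if mode == "replace":
        return dict(staged_rows)
    if mode == "merge":
        merged = dict(table)
        merged.update(staged_rows)  # staged values win on key conflicts
        return merged
    raise ValueError(f"unknown mode: {mode}")

current = {"listing:1": "old", "listing:2": "keep"}
staged = {"listing:1": "new", "listing:3": "added"}
after_merge = bulk_load(current, staged, "merge")
after_replace = bulk_load(current, staged, "replace")
```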

---

Efficient Data Expiration

- Topology-aware expiration service: shards namespaces into range-based tasks

- Parallel deletion by multiple workers

- Minimizes impact on real-time queries

- For write-heavy tables: max version limits & targeted deletion
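
The expiration points above can be sketched as one cleanup task per range shard, run by a small worker pool. This is an illustration under stated assumptions: each shard is modeled as its own dict mapping a key to a list of `(write_ts, expires_at, value)` versions, and the names `expire_shard` and `expire_namespace` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def expire_shard(shard, now, max_versions):
    """Drop expired versions, then keep only the newest max_versions per key.

    Returns the number of versions deleted from this shard.
    """
    deleted = 0
    for key in list(shard):  # copy the keys so we can delete while looping
        versions = shard[key]
        live = [v for v in versions if v[1] > now]
        live = sorted(live, key=lambda v: v[0], reverse=True)[:max_versions]
        deleted += len(versions) - len(live)
        if live:
            shard[key] = live
        else:
            del shard[key]
    return deleted

def expire_namespace(shards, now, max_versions=3, workers=4):
    """Run one expiration task per shard in parallel and total the deletions.

    Each task touches only its own shard, so workers never contend on
    the same key range.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(lambda s: expire_shard(s, now, max_versions), shards))
```

Aligning tasks with shard boundaries is what keeps the deletes local to one range at a time, which is one plausible way to limit interference with live reads.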

Mussel V2 architecture (Image source: Airbnb Engineering Blog)

---

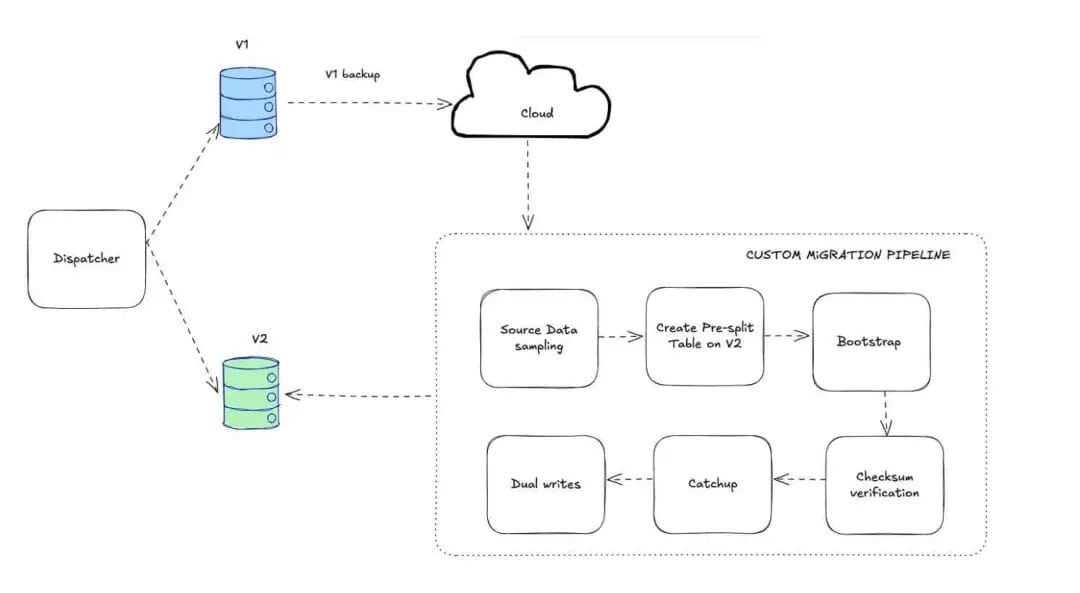

Migration from v1 to v2

Migrating was challenging but achieved with zero downtime:

Approach

- Table-granularity blue-green deployment

- Continuous validation & rollback

- Bootstrapping from backups & sampled data

- Planned pre-splits

- Checksum validation after bootstrap ingestion

- Apply lagging Kafka events

- Enable dual writes before cutover
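
For the checksum step, one simple approach is an order-independent digest, so the source and target can be scanned in different orders and still compared. The sketch below is an assumption about how such a check could work, not the method Airbnb used: it XORs a SHA-256 hash of every row and also returns the row count. `table_checksum` is a hypothetical name.

```python
import hashlib

def table_checksum(rows):
    """Return (row_count, digest) for an iterable of (key, value) byte pairs.

    The digest is the XOR of per-row SHA-256 hashes, so it does not depend
    on scan order. The key length is hashed first so that (b"ab", b"c") and
    (b"a", b"bc") produce different row hashes.
    """
    acc = 0
    count = 0
    for key, value in rows:
        h = hashlib.sha256()
        h.update(len(key).to_bytes(4, "big"))
        h.update(key)
        h.update(value)
        acc ^= int.from_bytes(h.digest(), "big")
        count += 1
    return count, acc

v1_rows = [(b"k1", b"alpha"), (b"k2", b"beta")]
v2_rows = [(b"k2", b"beta"), (b"k1", b"alpha")]  # same data, different order
tables_match = table_checksum(v1_rows) == table_checksum(v2_rows)
```

XOR lets a pair of identical rows cancel out, which is why the row count is compared alongside the digest.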

---

Cutover Process

- Gradually shift reads to v2

- Use shadow traffic to monitor consistency

- Roll back to v1 if error rates spike

- Kafka remained the common log throughout
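
The cutover steps can be sketched as a read router that serves a configurable fraction of reads from v2, issues a shadow read to the other store for comparison, and falls back to v1 when too many results disagree. Everything here is illustrative: the `ReadRouter` class, its thresholds, and the use of mismatch rate as a stand-in for the error rate mentioned above are assumptions, and the two stores are plain dicts.

```python
import random

class ReadRouter:
    """Shift reads to v2 gradually, shadow-read the other store, auto-rollback."""

    def __init__(self, v1, v2, v2_fraction=0.0,
                 max_mismatch_rate=0.01, min_samples=100, rng=None):
        self.v1, self.v2 = v1, v2
        self.v2_fraction = v2_fraction          # share of reads served by v2
        self.max_mismatch_rate = max_mismatch_rate
        self.min_samples = min_samples          # wait for this many reads first
        self.rng = rng or random.Random()
        self.samples = 0
        self.mismatches = 0

    def get(self, key):
        serve_from_v2 = self.rng.random() < self.v2_fraction
        primary, shadow = (self.v2, self.v1) if serve_from_v2 else (self.v1, self.v2)
        value = primary.get(key)
        # Shadow read: compare against the other store, never return its value.
        self.samples += 1
        if shadow.get(key) != value:
            self.mismatches += 1
        if (self.samples >= self.min_samples
                and self.mismatches / self.samples > self.max_mismatch_rate):
            self.v2_fraction = 0.0              # roll back: all reads go to v1
        return value
```

In a real deployment the shadow read would typically run asynchronously so it adds no latency to the caller; it is done inline here to keep the sketch short.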

---

Data migration pipeline from Mussel V1 to V2 (Image source: Airbnb Engineering Blog)

---

Extra Operational Complexity

Migrating from eventual consistency to strong consistency required:

- Write deduplication

- Retry control

- Query tuning & workload distribution

- Automated rollback and monitoring

- Per-table staging sequences
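
Write deduplication matters because retried or replayed events must not be applied twice once the backend is strongly consistent. A minimal sketch, assuming each event carries a per-key sequence number (the article does not say which identifier the real system uses), is to remember the last applied sequence and skip anything at or below it. `DedupingWriter` is a hypothetical name.

```python
class DedupingWriter:
    """Apply each (key, seq) write at most once, ignoring stale replays."""

    def __init__(self):
        self.store = {}     # key -> latest value
        self.last_seq = {}  # key -> highest sequence number applied

    def apply(self, key, seq, value):
        """Apply the write if seq is newer than the last one seen for key.

        Returns True if the write was applied, False if it was a duplicate
        or an out-of-date replay.
        """
        if seq <= self.last_seq.get(key, -1):
            return False
        self.store[key] = value
        self.last_seq[key] = seq
        return True

writer = DedupingWriter()
writer.apply("listing:1", 1, "draft")
writer.apply("listing:1", 1, "draft")      # retry of the same event: skipped
writer.apply("listing:1", 2, "published")
```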

Result: 1PB+ migrated with no downtime.

---

Full article:

Airbnb’s Mussel V2: Next-Gen Key Value Storage to Unify Streaming and Bulk Ingestion

---

