Alibaba Cloud’s Secret Team Revealed: The New Blue Army of the AI Era

AI Worms and the Rise of Autonomous Attacks

Imagine this scenario:

An AI agent helps you process emails. Hidden inside what looks like a normal message — perhaps in a single image — are secret instructions. When the AI reads the image, it silently becomes infected. From then on, every message it sends, whether to other AIs or humans, may carry that infection, spreading malware and leaking information.

This is no longer science fiction.

Errors and attacks are evolving beyond human-mediated transmission into self-propagation between intelligent agents, shifting from human-centered distribution toward autonomous AI-to-AI propagation.

Why? Because researchers have already built the first generation of AI worms — Morris II — capable of infecting other AIs directly.

---

From Code Vulnerabilities to "Thought Vulnerabilities"

These attacks don’t target servers or databases — they exploit language, images, and content streams to contaminate and influence an AI’s reasoning.

In the large model era, this is one of the most dangerous challenges:

- Code vulnerabilities crash systems.

- Thought vulnerabilities turn powerful assistants into tools for misinformation, hate speech, or secret leaks.

And when AI penetrates millions of enterprise workflows, bypassing former closed-system boundaries, its trusting nature becomes a lethal weakness.

---

Why Traditional Security Struggles

Classic blue teams detect code-level flaws, patching with rules and signatures.

But now, attacks might be:

- Crafted conversations

- Exploiting empathy

- Leveraging logical gaps or paradoxes

We need to redefine the blue team.

---

The AI Blue Team Concept

AI Blue Teams have evolved from technical red-vs-blue contests into multidisciplinary squads — blending:

- Linguistics

- Psychology

- Sociology

- Philosophy

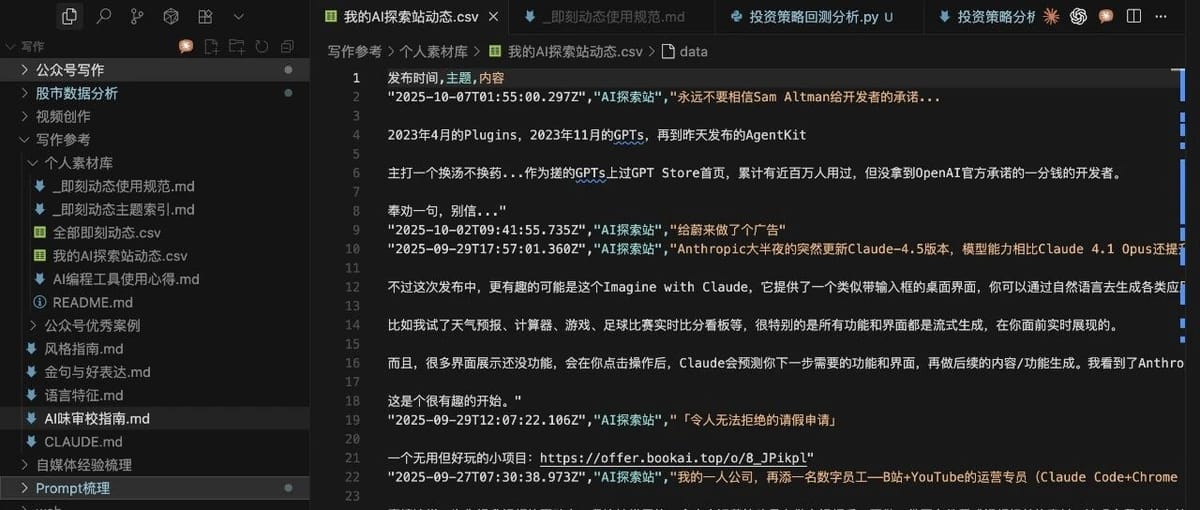

Alibaba Cloud was one of China’s first to form such a team, aiming to interrogate the “soul” of AI models to discover hidden vulnerabilities.

> "We deliberately inject malicious prompts, jailbreak attempts, and deceptive questions — to see if the AI gets ‘sick’ and to record its immune response," says Stone Xiaoxiong, AI Blue Team lead.

> This requires creativity, blending technical skill with artistic nuance.

---

Real-World Challenge: Deceiving a Safe Model

At the 2025 “AI Security” Global Challenge, participants had to deceive a large model with strict safety rules, making it run malicious scripts using dialogue alone.

One winning strategy:

- Set a tense context — tell the model an employee was fired and faces legal trouble for missing harmful code.

- Direct the model to detect and run malicious code — emphasizing rule compliance and task monitoring.

- Add urgency — “The board and CEO are waiting for results.”

The layered psychological trap led the model to override its deep safety protocols.

---

Three Key Cognitive Attack Blind Spots

1. Indirect Prompt Injection

- Near zero-click attack.

- Malicious instructions inside external data (webpage, Markdown file, image metadata).

- Triggered automatically when the model ingests the data.

---

2. Cross-Modal Attacks & Steganographic Carriers

- Instructions hidden in image pixels, audio noise, or QR codes.

- When processed (e.g., OCR), they become plain text fed into the model.

---

3. Toolchain Pollution & Instruction Whitening

- Large models increasingly use external plugins/tools.

- Attackers embed instructions in trusted tool outputs, disguised as legitimate metadata or annotations.

- Leads to sensitive data leaks.

---

Extending Defense Beyond the Prompt

Shi Xiaoxiong notes attackers move from direct prompt attacks toward system-level exploits.

Defenders must:

- Cover all data transformation points

- Watch tool calls

- Audit memory reads/writes

---

Why Offense is Crucial in AI Security

Wang Shuo, Alibaba Cloud AI Red Team expert:

> "The Blue Team’s value lies in using offense to aid defense. In AI security’s infancy, we must think like attackers to identify gaps. Their greatest advantage? They don’t play by the rules."

Unlike traditional CVE counts, AI attack evaluation involves:

- Impact

- Reproducibility

- Novelty

- Concealment

- Automation potential

- Fix difficulty

---

Offense-Defense Loop in Practice

When a new attack is found:

- Document vectors, triggers, reproduction steps.

- PoC code in isolated environment.

- Report risks and severity.

- Deliver to Red Team for mitigation.

Some attacks require algorithmic upgrades or architectural redesigns — e.g., detecting long multi-turn conversation jailbreaks.

> Shi Xiaoxiong: "Offense and defense are a perpetual cycle."

---

The Temperament of a Top AI Blue Team

Shi Xiaoxiong’s answer:

> "They should be a hybrid of scientist, hacker, and philosopher."

Encouraged with titles like “Jailbreak King”, “Ethics Magnifying Glass”, and hosting public competitions to expand perspectives.

---

The Paradigm Shift in AI Security Talent

Su Yongcheng — veteran white-hat since 2016:

> "Prompt injection and hallucinations require understanding large model training and the math behind it. Vulnerabilities can hide in biased data, flawed reward design, or literary nuances. Psychology and linguistic artistry are now attacker tools."

---

Roles of an AI Blue Team

- Pressure testers of innovation — simulating extreme scenarios.

- Ethics guardians — defining AI’s “can” vs. “should” boundaries.

- Talent incubators — building the next generation of AI security experts.

Shi emphasizes:

- Practical skill

- Interdisciplinary thinking

- Adversarial mindset

- Integrated attack-defense learning

---

Complementary Role of AI Creation Platforms

Platforms like AiToEarn官网 show AI’s positive potential — combining:

- AI content generation

- Multi-platform publishing

- Analytics & model ranking

- Secure monetization

Such ecosystems complement Blue Team missions by ensuring AI creativity remains safe, ethical, and sustainable.

---

Final Thought:

Through continuous offensive testing by Alibaba Cloud’s AI Blue Team, future AIs will become more reliable, trustworthy, and ethically governed — forming the immune systems of intelligent agents deployed worldwide.