Amazon Web Services Launches M8a General Purpose EC2 Instances with 5th Gen AMD EPYC “Turin” Processors

AWS EC2 M8a Instances — General Availability Overview

AWS has announced the general availability of its general-purpose M8a instances, powered by 5th Generation AMD EPYC ("Turin") processors with a maximum frequency of 4.5 GHz.

These instances are designed for high-performance, throughput-intensive applications, including:

- Gaming

- Financial applications

- Machine learning inferencing

- Video encoding

- Application servers

- Simulation modeling

- Mid-size data stores

- Application development environments

- Caching fleets

🔗 More details: AWS M8a Instances | AMD EPYC 9005 Series

---

Expanding the AWS EC2 Portfolio

The M8a joins recent additions to the EC2 family, including:

- Memory-optimized: R8i and R8i-flex

- Compute-optimized: C8i and C8i-flex

Compared with the M7a series introduced two years ago, AWS claims the M8a delivers:

- Up to 30% higher performance

- Up to 19% better price-to-performance
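As a rough illustration of how the two headline numbers relate, the sketch below derives the price change they would imply if both applied to the same workload at the same time. That is an assumption: the figures are vendor-quoted upper bounds and may come from different benchmarks. The function name and the definition of price-performance as performance divided by price are illustrative choices, not taken from AWS.

```python
# Headline figures quoted by AWS for M8a versus M7a. Both are upper
# bounds and may not hold for the same workload, so the result is only
# a rough indication, not a pricing statement.
PERF_GAIN = 0.30        # higher performance
PRICE_PERF_GAIN = 0.19  # better price-performance


def implied_price_ratio(perf_gain: float, price_perf_gain: float) -> float:
    """Return new_price / old_price implied by the two gains.

    Assumes price-performance means performance / price, so
    (1 + price_perf_gain) == (1 + perf_gain) / price_ratio.
    """
    return (1 + perf_gain) / (1 + price_perf_gain)


ratio = implied_price_ratio(PERF_GAIN, PRICE_PERF_GAIN)
print(f"Implied price ratio (M8a / M7a): {ratio:.3f}")
```

Under these assumptions the ratio comes out slightly above 1, which would mean the performance gain outpaces a modest price increase. Actual pricing should be checked per region and instance size.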

---

Performance Enhancements

> Betty Zheng, Senior Developer Advocate at AWS:

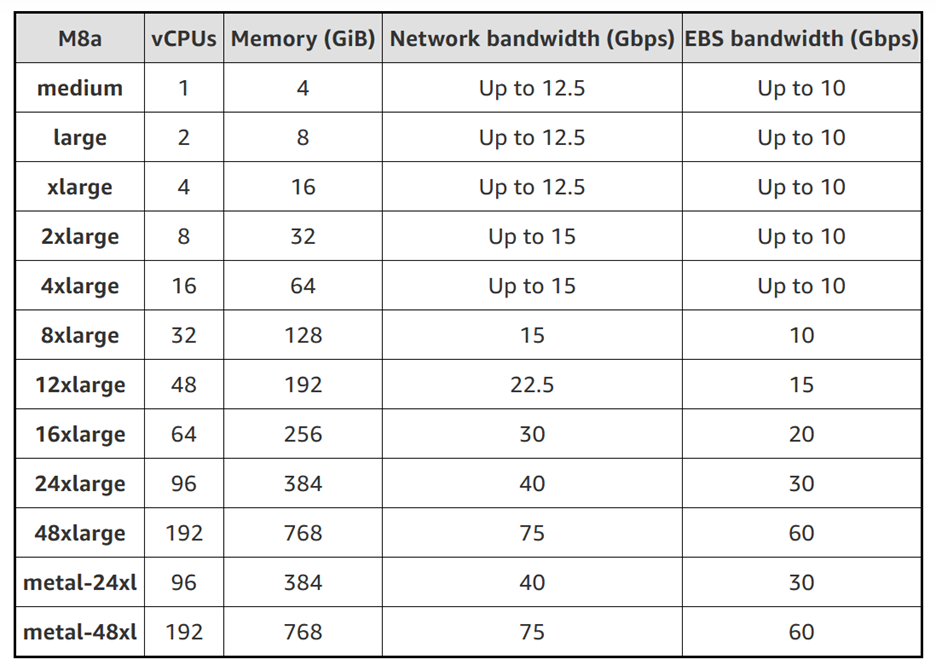

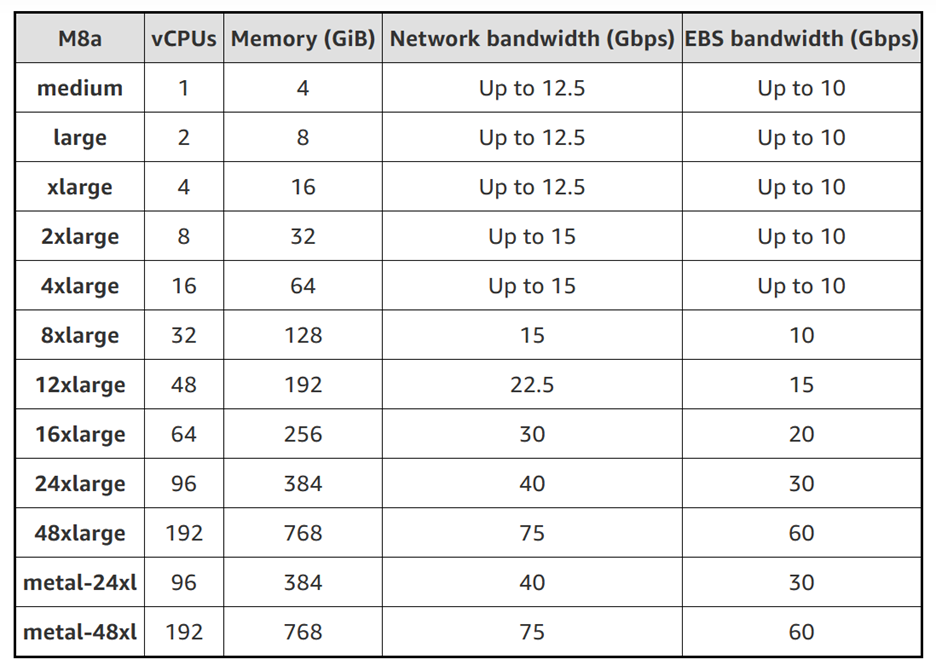

> For workloads with high I/O requirements, M8a instances provide up to 75 Gbps networking bandwidth and 60 Gbps Amazon EBS bandwidth — a 50% improvement over the previous generation.

Key Advantages:

- Networking bandwidth: Up to 75 Gbps

- Amazon EBS bandwidth: Up to 60 Gbps

- Built on AWS Nitro System — low overhead, consistent performance, enhanced security

- 6th-gen AWS Nitro Cards — I/O acceleration support

- Max configuration: Up to 192 vCPUs and 768 GiB RAM
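The figures above lend themselves to a couple of quick sanity checks. The following minimal sketch records the quoted limits and derives the memory-to-vCPU ratio of the largest size, plus the previous-generation bandwidth implied by the "50% improvement" claim. The class and function names are hypothetical, and the derived baselines are arithmetic on the quoted numbers rather than figures stated in the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceLimits:
    """Top-end figures for an instance family, as quoted in the article."""
    max_vcpus: int
    max_memory_gib: int
    network_gbps: float
    ebs_gbps: float


M8A = InstanceLimits(max_vcpus=192, max_memory_gib=768,
                     network_gbps=75, ebs_gbps=60)


def memory_per_vcpu(limits: InstanceLimits) -> float:
    """GiB of RAM per vCPU at the largest configuration."""
    return limits.max_memory_gib / limits.max_vcpus


def implied_baseline(value: float, improvement: float) -> float:
    """Previous-generation value implied by a fractional improvement."""
    return value / (1 + improvement)


print(f"Memory per vCPU: {memory_per_vcpu(M8A):.1f} GiB")
print(f"Implied previous network: {implied_baseline(M8A.network_gbps, 0.5):.0f} Gbps")
print(f"Implied previous EBS: {implied_baseline(M8A.ebs_gbps, 0.5):.0f} Gbps")
```

The 4 GiB per vCPU ratio is what one would expect from a general-purpose family, sitting between compute-optimized and memory-optimized instances.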

---

AiToEarn Integration for Cloud Creators

For content creators building high-performance AI workflows, platforms like AiToEarn (official site) leverage AWS scalability.

AiToEarn Highlights:

- Open-source global AI content monetization

- Cross-platform publishing to Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter)

- Integrated tools: AI content generation, analytics, and model ranking

- Backend scalability: Works seamlessly with M8a-powered infrastructure

🔗 Resources: AiToEarn Blog | GitHub Repo | AI Model Rankings

---

Configurations Available

M8a instances include:

- 10 virtualized instance sizes

- 2 bare-metal configurations: `metal-24xl` and `metal-48xl`

- Deployment flexibility for small to massive workloads

(Source: AWS News Blog)

---

Industry Feedback

Ryan Sagare — LinkedIn post:

> 3rd-party benchmarks show AMD EPYC M8a winning 133 out of 145 tests against M8i and M8g — a 91% win rate.

Niall Mullen, Netflix — AMD blog post:

> 5th Gen EPYC is now core to Netflix infrastructure — enabling scalability for live events and extending performance features like AVX-512 across encoding, personalization, and ML workloads globally.

---

Not All Praise

The Snark bot from Last Week in AWS humorously noted:

> AWS announces M8a instances: 30% faster than M7a! Because apparently M7g, M7i, M7a-large-with-extra-cheese weren’t confusing enough. Now with 45% more marketing percentages and 100% more pricing calculator headaches!

---

Availability and Pricing Options

Regions:

- US East (Ohio)

- US West (Oregon)

- Europe (Spain)
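One way to confirm which M8a sizes a region actually offers is to query the EC2 `DescribeInstanceTypeOfferings` API. The sketch below uses boto3 for this. The region codes (`us-east-2`, `us-west-2`, `eu-south-2`) are the standard AWS identifiers for the regions listed above and do not appear in the original announcement. The helper names are hypothetical, and the live call assumes boto3 is installed and AWS credentials are configured.

```python
# Region names from the article mapped to standard AWS region codes.
LAUNCH_REGIONS = {
    "US East (Ohio)": "us-east-2",
    "US West (Oregon)": "us-west-2",
    "Europe (Spain)": "eu-south-2",
}


def offering_request(pattern: str = "m8a.*") -> dict:
    """Build keyword arguments for EC2 DescribeInstanceTypeOfferings."""
    return {
        "LocationType": "region",
        "Filters": [{"Name": "instance-type", "Values": [pattern]}],
    }


def offered_types(region_code: str, pattern: str = "m8a.*") -> list[str]:
    """Return the sorted instance types matching `pattern` in a region.

    Needs the third-party boto3 package and valid AWS credentials.
    """
    import boto3  # imported lazily so the helpers above work without it

    ec2 = boto3.client("ec2", region_name=region_code)
    paginator = ec2.get_paginator("describe_instance_type_offerings")
    found = set()
    for page in paginator.paginate(**offering_request(pattern)):
        for offering in page["InstanceTypeOfferings"]:
            found.add(offering["InstanceType"])
    return sorted(found)
```

Calling `offered_types("us-east-2")` should list the M8a sizes offered in Ohio. An empty list would suggest the family is not yet available in that region or that the account cannot see it.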

Purchase Models:

- On-Demand Instances

- Savings Plans

- Spot Instances

---

Final Thoughts

Managing AWS's wide instance portfolio can be challenging, especially across multi-region deployments.

Platforms like AiToEarn demonstrate how AI-powered automation can help developers and media creators operate more efficiently — whether deploying compute resources or pushing content worldwide.
