Andrew Ng Releases Automated Paper Reviewer, Achieves Near-Human Performance at ICLR

AI's Role in Academic Paper Review: Opportunities and Challenges

At present, even within the AI research community, there is no unified standard governing the use of AI in paper review.

Among leading global conferences:

- ICLR requires disclosure when large models are used during review writing.

- CVPR explicitly prohibits using large models at any stage of writing review comments.

---

Rising Pressure: Increasing Submissions, Limited Human Capacity

As submission numbers surge, human reviewers alone cannot keep up.

Even under ICLR 2026's strictest rules on AI use, one analysis found:

> Up to one-fifth of review comments were still generated by large models with a single click.

Yet despite this adoption, review timelines remain excessively long.

Case in Point: Slow Feedback Loops

Renowned AI scholar Andrew Ng has criticized the ever-lengthening review cycles.

One of his students faced an especially difficult path:

- Rejected six times over three years

- Roughly six months of waiting for each review decision

These lengthy cycles:

- Delay publication

- Reduce research agility

- Clash with the pace of modern technological development

---

Idea: AI-Powered "Paper Feedback Workflow"

If review timelines cannot be significantly shortened, perhaps AI can help in a different way:

- Provide high-quality feedback before official submission

- Enable directional revisions early

- Reduce the cost and time lost to repeated rejections by major conferences and journals

Goal: Build an efficient AI-assisted pre-review pipeline for researchers.

---

The "Agentic Reviewer" Project by Andrew Ng

To address these issues, Professor Andrew Ng launched the Agentic Reviewer system for research papers.

Project origins:

- Started as a weekend hobby

- Enhanced with support from Ph.D. student Yixing Jiang

Training:

ICLR 2025 review data was used to develop the model.

Evaluation (Spearman correlation – higher is better):

- Human vs. Human: 0.41

- AI vs. Human: 0.42

In other words, the AI reviewer's scores agree with a human reviewer's about as closely as two human reviewers' scores agree with each other.
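For readers unfamiliar with the metric, the sketch below shows one way to compute a Spearman correlation between two sets of review scores: rank each list (tied scores share an average rank), then take the Pearson correlation of the ranks. The function names and the sample scores are hypothetical and for illustration only. This is not the project's evaluation code, and the numbers are not ICLR data.

```python
from math import sqrt


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores for five papers (illustrative values only).
human_scores = [3, 5, 6, 8, 6]
ai_scores = [4, 5, 5, 8, 7]
print(round(spearman(human_scores, ai_scores), 3))
```

A value of 1 means the two reviewers rank the papers identically, 0 means no monotonic relationship, and -1 means the rankings are reversed. Because only ranks matter, two reviewers who use the scoring scale differently can still correlate strongly.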

---

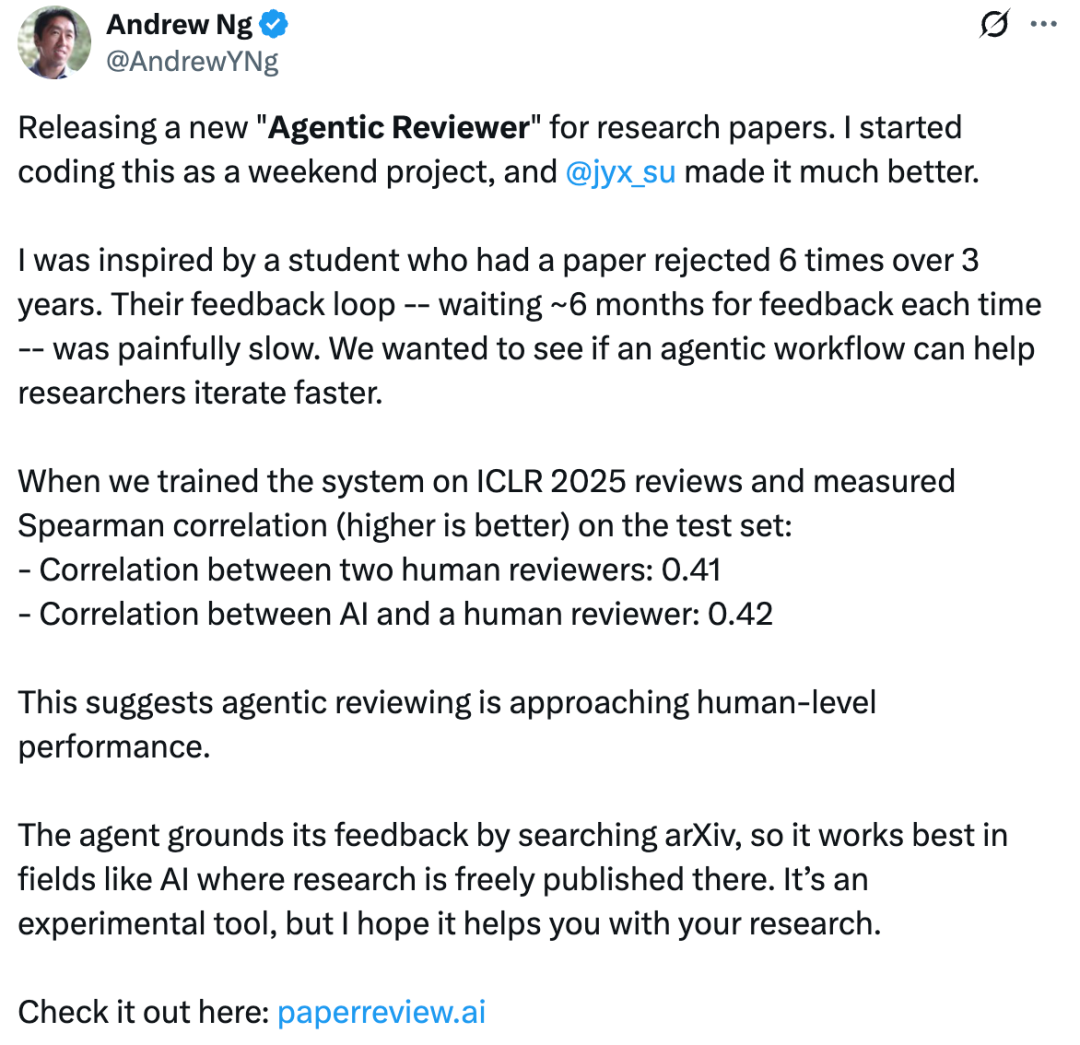

How It Works

- Retrieves evidence from arXiv to support its feedback

- Most effective in fields like AI where papers are openly available on arXiv

- Demo link: https://paperreview.ai/
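The article does not say how the system retrieves papers, so the following is only a sketch of one possible building block: constructing a search request for the public arXiv API and extracting titles from its Atom response. The helper names `build_query_url` and `parse_titles` are hypothetical, and nothing here is taken from the Agentic Reviewer implementation.

```python
from urllib.parse import urlencode
import xml.etree.ElementTree as ET

# Public arXiv API endpoint; responses are Atom XML feeds.
ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"


def build_query_url(terms, max_results=5):
    """Build a search URL that requires every single-word term to match."""
    search_query = " AND ".join(f"all:{term}" for term in terms)
    params = {
        "search_query": search_query,
        "start": 0,
        "max_results": max_results,
    }
    return f"{ARXIV_API}?{urlencode(params)}"


def parse_titles(atom_xml):
    """Extract entry titles from an Atom feed, collapsing internal whitespace."""
    root = ET.fromstring(atom_xml)
    return [
        " ".join(entry.findtext(f"{ATOM}title", default="").split())
        for entry in root.iter(f"{ATOM}entry")
    ]


# Usage: build the URL, fetch it with any HTTP client, then parse the body.
print(build_query_url(["peer", "review"], max_results=3))
```

A real pipeline would also need to fetch the URL, respect the API's rate limits, and decide which retrieved papers are actually relevant to a given claim. Those steps are omitted here.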

---

Public Response

- Feedback is largely positive

- Users suggest customizing for specific conferences/journals

- Potential to provide estimated review scores

---

Potential Impact: Accelerating Science

AI agents could:

- Speed up research cycles

- Shorten the time it takes to train new researchers

- Act as an engine for academic progress

---

Remaining Concerns

- If researchers pre-check their work with AI, could this reduce academic diversity?

- How will AI tools shape long-term reviewer behavior?

---

Looking Ahead: A Transforming Review System

With both researchers and reviewers using AI for feedback, the academic review process may be approaching a major structural transformation.

While the exact role AI will play is still evolving, its influence in academic workflows is expanding rapidly.

---

Related Productivity Platforms

In this ecosystem of faster, AI-assisted workflows, platforms like AiToEarn (official site) offer a parallel approach:

- Integrate AI content generation

- Publish across multiple platforms simultaneously

- Provide analytics and model rankings

For academics, similar integrations could:

- Merge AI reviewers (like Agentic Reviewer) with publication and dissemination tools

- Streamline feedback, revision, and sharing into one seamless process

Example: AiToEarn enables creators to multi-post to:

- Douyin

- Kwai

- Bilibili

- Rednote (Xiaohongshu)

- Threads

- YouTube

- X (Twitter)

The aim is to turn AI-powered work into measurable, wide-reaching impact.