# The Open-Source Landscape for Large Models: New Shifts and Strategies

---

## Overview

During the recent National Day holiday, while many industries paused for a break, the **large model sector** continued evolving rapidly.

In the past two weeks alone, leading teams in China and abroad have launched **over ten major products**, each with distinct priorities.

Highlights include:

- **Late September:** Alibaba unveiled a full-spectrum lineup centered on **Qwen3-Max**, a show of technical breadth.

- **End of September:** DeepSeek and Anthropic targeted real-world programming scenarios, releasing **DeepSeek V3.2-Exp** and **Claude Sonnet 4.5**.

- **Zhipu AI:** Introduced **GLM-4.6**, positioning it as China's strongest coding model.

With some companies pursuing full-spectrum coverage and others focusing on niche excellence, **competition strategies are diverging**.

---

## 1 — Ant's Bold Entry into the Trillion-Parameter Era

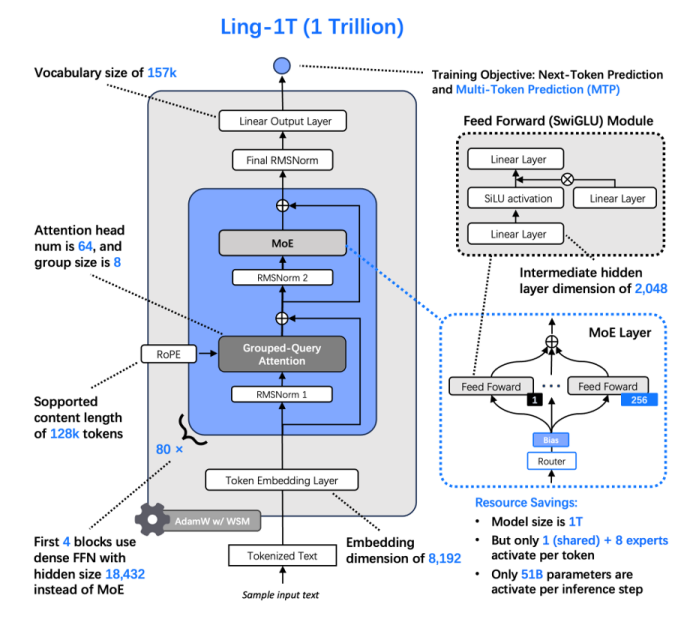

At midnight on **October 9**, Ant Group’s “Bailing” large model team announced **Ling-1T**, a natural language model boasting **1 trillion parameters (1000B)**.

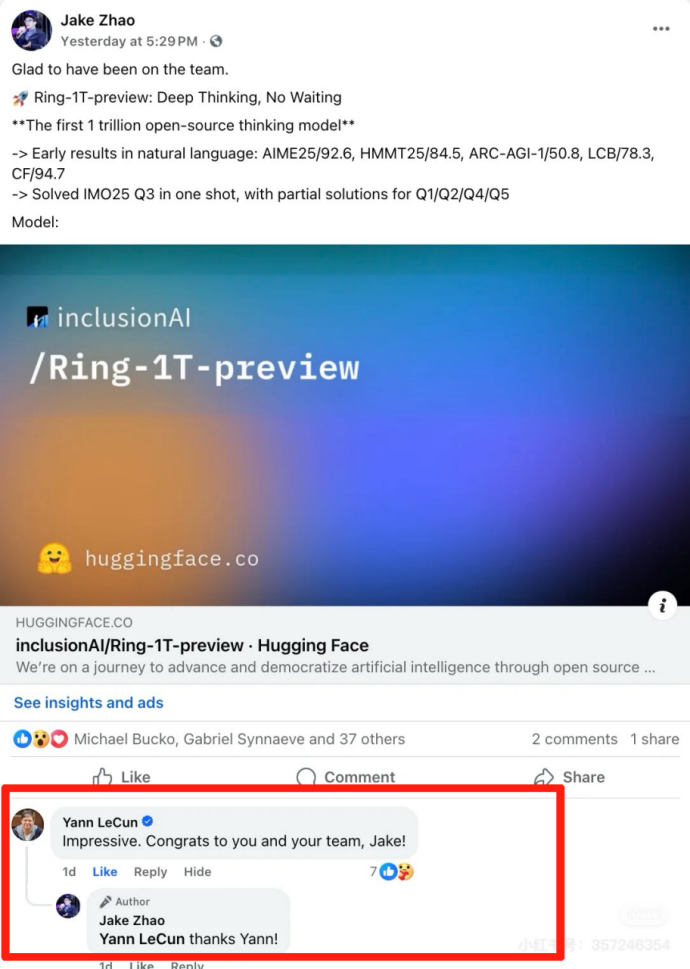

Only ten days earlier, the team had open-sourced **Ring-1T-preview** — its self-developed trillion-parameter reasoning model.

**Two trillion-parameter models in ten days**

This aggressive pace has sparked intense industry discussions about Ant’s intentions.

---

## 2 — Pushing the Limits of Intelligence

### The Bailing Philosophy

Ant Group named its foundational models “Bailing” (百灵) — meaning *success after a hundred tries*, symbolizing inclusive tech.

Development is handled by an independent team, similar to Alibaba’s approach.

**Series distinction:**

- **Ling-Series:** Non-reasoning models (MoE architecture)

- **Ring-Series:** Reasoning models

- **Ming-Series:** Native multimodal models

**Key milestone:** Both Ling and Ring models have now reached the **trillion-parameter scale**.

---

### Why Trillion Parameters Matter

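- Often loosely compared to the scale of the human brain, although the analogy is rough: the brain has roughly 86 billion neurons and on the order of 100 trillion synapses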

- Very few domestic models reach this scale

- Other Chinese trillion-parameter models: **Kimi K2**, **Qwen3-Max** (Alibaba), **Hunyuan** (Tencent)

Despite theories like *“data wall”* or *“end of pre-training”*, consensus is forming:

**More data + more parameters ⇒ sustained performance gains**
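One commonly cited way to formalize this claim is the parametric loss form from the compute-optimal scaling-law literature. It is shown here only as an illustration, under the assumption that it is a fair stand-in for what the teams mean by "scaling law"; none of the companies mentioned in this article are confirmed to use this exact form. Here $N$ is the parameter count, $D$ is the number of training tokens, and $E$, $A$, $B$, $\alpha$, $\beta$ are constants fitted to experiments:

$$
L(N, D) = E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}}
$$

With $\alpha, \beta > 0$, increasing either $N$ or $D$ shrinks its term, so the predicted loss keeps falling toward the floor $E$. The gains shrink as scale grows, though, which is why "sustained gains" and "diminishing returns" can both hold.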

---

## 3 — Industry Perspectives

- **Alibaba:** Algorithm chief Lin Junyang says the scaling law “has yet to hit a ceiling”.

- **Kimi Team:** Unveiled flagship K2 after two months of work despite difficulties.

- **Tongyi Qianwen:** Adheres to “Just Scale It”.

**Conclusion:**

Leaders are still exploring the **upper limits of intelligence**.

---

## 4 — Bailing's Rapid Model Rise

Ant’s dual releases signal:

1. Faster iteration cycles

2. Wider open-source adoption

3. Positioning in global high-performance model competition

---

### Open Platforms Matter

Example: **[AiToEarn official site](https://aitoearn.ai)**

- Open-source *global AI content monetization* platform

- Supports multi-platform publishing: Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter)

- Offers analytics + AI model rankings

- Bridges innovation with global audiences

---

## 5 — Balancing Scale and Efficiency

### The Pareto Challenge

- Large models must balance **parameter size** and **inference speed**

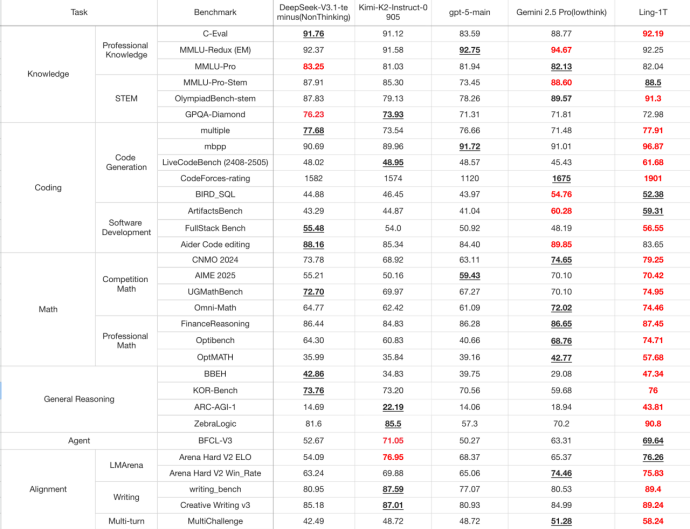

- Ant’s Ling-1T shows strong benchmark performance — topping coding & math metrics

---

### Reasoning Accuracy: AIME 25

On AIME 25, Ling-1T is reported to outperform **DeepSeek-V3.1-Terminus**, **Kimi-K2-Instruct-0905**, **GPT-5**, and **Gemini-2.5-Pro**, with:

- Shorter reasoning paths

- Higher accuracy

Model specifications:

- 128K-token long-context window

- ~50B parameters activated per token

Training involved:

- **20 trillion** high-quality tokens

- *Ling Scaling Laws* for hyperparameter optimization

- **LPO policy optimization** + hybrid reward mechanisms
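To put the quoted figures in perspective, here is a back-of-the-envelope sketch. The parameter and token counts come from the article. The `6 * N * D` training-compute rule of thumb is an assumption borrowed from the general scaling-law literature and is not a figure published by Ant. The function names are hypothetical.

```python
# Figures quoted in the article.
TOTAL_PARAMS = 1_000e9   # 1T total parameters
ACTIVE_PARAMS = 50e9     # ~50B parameters activated per token (MoE)
TRAIN_TOKENS = 20e12     # 20 trillion training tokens


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active / total


def approx_training_flops(active: float, tokens: float) -> float:
    """Rough training compute.

    Assumption: the common ~6 * N * D rule of thumb, with N taken as the
    *active* parameter count because only those weights do work per token.
    """
    return 6 * active * tokens


frac = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
flops = approx_training_flops(ACTIVE_PARAMS, TRAIN_TOKENS)

print(f"Active share per token: {frac:.1%}")
print(f"Approx. training compute: {flops:.1e} FLOPs")
```

Under these assumptions, each token touches about 5% of the weights. This is the basic mechanism by which a sparse MoE model can grow its total parameter count without a proportional increase in per-token compute.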

---

## 6 — Ling Model Family Overview

- **Scale:** 16B → 1T parameters

- **Multimodal:** Vision, audio, speech, graphics

- **Deployment modes:**

  - *Ling-mini*: Mobile devices

  - *Ling-flash*: SME server

  - *Ling-1T*: Cloud
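The split between device, server, and cloud tiers largely follows from how much memory the weights need. The sketch below estimates weight storage for the smallest and largest sizes quoted above (16B and 1T) at a few precisions. The precision table and function name are illustrative assumptions, and the estimate ignores activations, KV cache, and runtime overhead.

```python
# Assumed storage cost per parameter at common precisions (illustrative).
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Sizes taken from the "16B -> 1T" range quoted in the article.
MODEL_SIZES = {"16B": 16e9, "1T": 1e12}

for name, params in MODEL_SIZES.items():
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(params, precision)
        print(f"{name:>3} @ {precision}: ~{gb:,.0f} GB")
```

Even though an MoE model activates only a fraction of its parameters per token, all expert weights typically still need to be resident or quickly reachable. That is why total size, not active size, tends to determine the deployment tier.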

---

**Community Impact**

- *Ming-lite-omni v1.5*: #1 on Hugging Face’s any-to-any chart

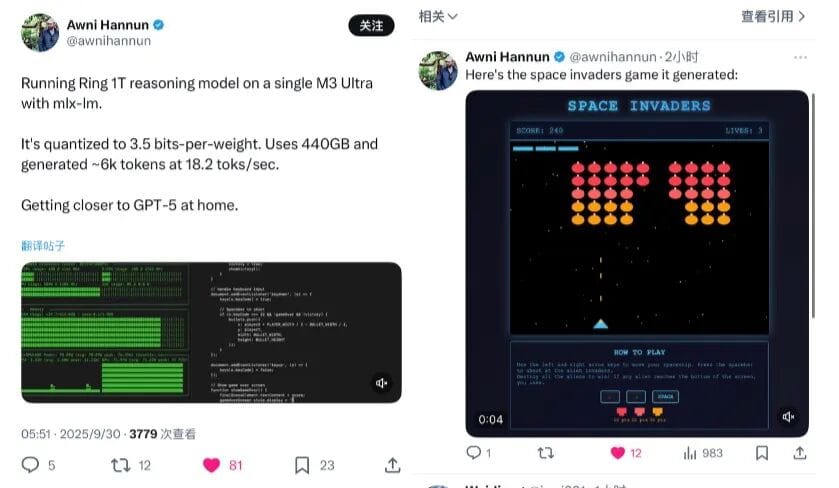

- *Ring-1T-preview*: #3 on Hugging Face’s text generation toplist

- **Yann LeCun:** Commented “Impressive”

---

### Developer Feedback

An Apple engineer, after running a locally quantized version, commented: *“Getting closer to GPT-5 at home”*.

---

## 7 — Co-Building AGI Through Open Source

Ant’s **AI First** strategy aligns with:

- “Alipay’s dual flywheels”

- “Accelerating globalization”

Open source intention:

> Build a truly open AGI ecosystem via transparency and iteration.

According to the article, the only fully open-source trillion-parameter models are:

- Ling-1T

- Ring-1T-preview

- Kimi K2

---

### Benefits of Open Source

- Ready-to-use starting points for complex applications

- Feedback loops accelerate intelligence emergence

- Democratizes technology access

**InclusionAI**: Ant's open-source org

- Full-stack large-model tech

- Reinforcement learning framework for reasoning models: **AReaL**

- Multi-agent framework **AWorld**

---

## 8 — Extending Impact to Creative Economies

Platforms like **[AiToEarn official site](https://aitoearn.ai)** can:

- Connect AI content generation → cross-platform publishing

- Integrate analytics & ranking

- Monetize outputs globally

These complement open-source ecosystems by turning model power into **sustainable creative economies**.

---

## 9 — Flywheel Reversal in Robotics and AI

[Related reading](https://mp.weixin.qq.com/s?__biz=MzA5ODEzMjIyMA==&mid=2247726783&idx=1&sn=22fb998547c97e203038b1b3ef3066f3&scene=21#wechat_redirect)

---

### Challenge

Agile component makers face:

- Demand gaps

- Rising R&D costs

- Pricing pressure from integrated AI robotics firms

**Opportunity:** Integrate high-performance computing to make components “AI-native”.

---

### Cross-Ecosystem Thinking

The logic behind platforms like **AiToEarn** carries over:

- Connect outputs to global audiences

- Use analytics to identify niches

- Apply in robotics beyond traditional verticals

---

## Terms of Use

Reproduction without permission from **AI Technology Review** is prohibited.

Reprints on WeChat Official Accounts require prior authorization and source citation.

---

**More Resources:**

[Original Article](https://www.leiphone.com/)
