Ant releases and open-sources trillion-parameter reasoning model Ring-1T, approaching GPT-5 capabilities

Ring-1T: Ant Group’s Trillion-Parameter Open-Source Reasoning Model

In the early hours of October 14, Ant Group officially released Ring-1T, a trillion-parameter reasoning model with fully open-sourced model weights and training recipes.

This release builds on the Ring-1T-preview version from September 30, scaling up Reinforcement Learning from Verifiable Rewards (RLVR) to strengthen natural language reasoning. The team also refined general model performance through RLHF, achieving balanced results across multiple benchmarks.

---

Breaking Ground in Mathematical Reasoning

To probe the limits of Ring-1T’s mathematical and complex reasoning, the Bailing team tested it on problems from IMO 2025 (International Mathematical Olympiad):

- Framework: Integrated into AWorld, a multi-agent reasoning framework.

- Approach: Solutions generated purely via natural language reasoning.

- Results:

  - Solved Problems 1, 3, 4, and 5 in a single attempt, equivalent to an IMO silver medal

  - On Problem 2, produced a nearly full-score geometry proof on the third attempt

  - On Problem 6, the hardest, converged on the same answer as Gemini 2.5 Pro (4048); the correct answer is 2112

---

General Capability Benchmarks

Ring-1T also demonstrated strong general abilities:

- Arena-Hard V2:

  - Human preference alignment score: 81.59%

  - Ranked first among open-source models, close to GPT-5-Thinking (High) at 82.91%

- HealthBench:

  - Top score among open-source models on this healthcare QA benchmark

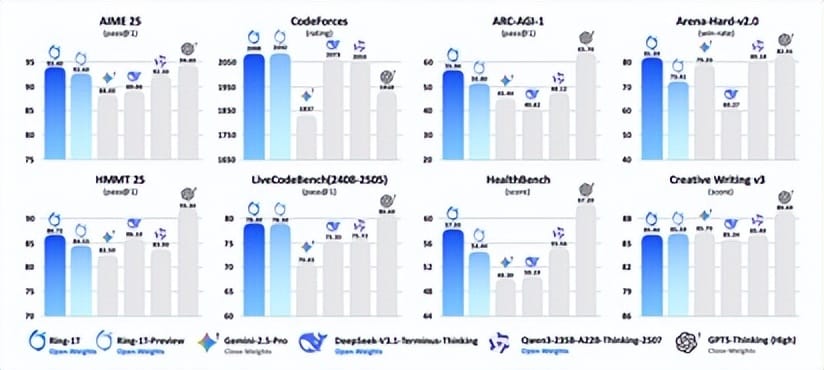

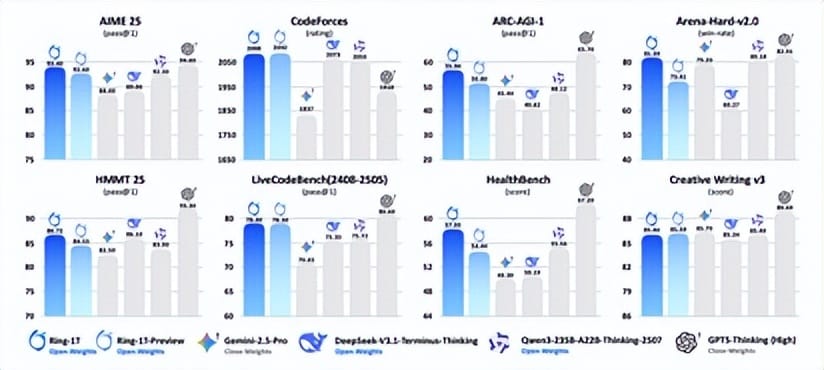

Performance comparison: Ring-1T vs. other reasoning models

---

Overcoming the Precision Divergence Challenge

Training-Inference Precision Mismatch

One of the largest hurdles in trillion-parameter model training is precision divergence — small implementation differences between training and inference that cause accuracy drops or even training collapse.

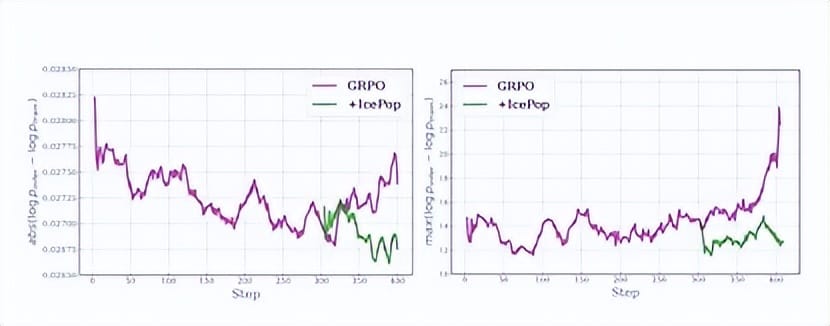

Ant Group’s “Icepop” Algorithm

Icepop uses masked bidirectional truncation to “freeze” distribution differences at a low level, ensuring stable long-sequence, long-duration training.
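The article does not spell out Icepop's formula, so the following is only a minimal sketch of one plausible reading of "masked bidirectional truncation": for each token, compare the probability assigned by the training engine with the one assigned by the inference engine, and drop (mask) the token from the update when their ratio falls outside a two-sided band. The function names and the band limits `ALPHA` and `BETA` are hypothetical, not values taken from Ant Group's implementation.

```python
import math

# Hypothetical band limits; the article does not give Icepop's actual thresholds.
ALPHA = 0.5  # assumed lower bound on the train/inference probability ratio
BETA = 5.0   # assumed upper bound on the same ratio


def masked_ratio_weights(train_logprobs, infer_logprobs, alpha=ALPHA, beta=BETA):
    """Return one weight per token: the ratio if it lies in [alpha, beta], else 0."""
    weights = []
    for lp_train, lp_infer in zip(train_logprobs, infer_logprobs):
        ratio = math.exp(lp_train - lp_infer)  # p_train / p_infer
        # Two-sided ("bidirectional") check: mask tokens that drift too far
        # in either direction instead of clipping them to the boundary.
        weights.append(ratio if alpha <= ratio <= beta else 0.0)
    return weights


def masked_mean_loss(token_losses, weights):
    """Average the weighted per-token losses over the tokens that were kept."""
    kept = sum(1 for w in weights if w > 0.0)
    if kept == 0:
        return 0.0
    return sum(loss * w for loss, w in zip(token_losses, weights)) / kept


# Toy example: token 0 agrees exactly, token 1 has a ratio of 0.05 (too low),
# and token 2 has a ratio of 9 (too high), so only token 0 contributes.
train_lp = [math.log(0.5), math.log(0.01), math.log(0.9)]
infer_lp = [math.log(0.5), math.log(0.2), math.log(0.1)]
weights = masked_ratio_weights(train_lp, infer_lp)
loss = masked_mean_loss([2.0, 3.0, 4.0], weights)
```

In this reading, masking rather than clipping means badly mismatched tokens contribute no gradient at all, which is one way the distribution gap could stay "frozen" at a low level over long runs.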

---

Scaling Reinforcement Learning with ASystem & AReaL

For large-scale RL on trillion-parameter models, Ant Group built ASystem, a high-performance RL system that includes the open-source AReaL framework. Key optimizations include:

- Instant GPU memory fragment recovery

- Zero-redundancy weight swapping

- Stable large-scale RL training, reliable enough to run as a routine daily operation

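(Caption for the training-inference divergence figure in the Icepop section above. Left: under GRPO the divergence grows exponentially, while under Icepop it remains stable. Right: GRPO's peak divergence rises sharply, while Icepop's stays low.)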

---

Leveraging the Ling 2.0 Architecture

Ring-1T builds on the Ling 2.0 trillion-parameter base model, featuring:

- Highly sparse MoE architecture (1/32 expert activation)

- FP8 mixed precision

- MTP and other efficiency features
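To make the 1/32 activation ratio concrete, here is a small sketch of top-k expert routing, the usual mechanism behind sparse MoE layers. The expert counts are illustrative assumptions chosen only so that 8 of 256 experts gives 1/32; the article does not state Ling 2.0's actual expert configuration, and `top_k_route` is a hypothetical helper, not part of any released code.

```python
import math
import random

NUM_EXPERTS = 256    # assumed total number of routed experts (illustrative)
ACTIVE_EXPERTS = 8   # assumed experts used per token; 8 / 256 = 1/32


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their gates."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)  # subtract max for stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]


# Route one token using random router scores (seeded for repeatability).
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
experts, gates = top_k_route(logits, ACTIVE_EXPERTS)

# Fraction of experts that actually run for this token.
activation_ratio = len(experts) / NUM_EXPERTS
```

Only the selected experts run for a given token, so per-token compute scales with the active experts rather than with the full parameter count.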

Post-training process:

- LongCoT-SFT

- RLVR

- RLHF

This process enhanced complex reasoning, instruction-following, and creative writing.

Users can download the model via HuggingFace or ModelScope, and test it online through Ant’s Baibaoxiang platform.

---

Expanding the Trillion-Parameter Model Family

The Ant Bailing model family now includes 18 models, ranging from 16B to 1T parameters, two of which are trillion-parameter models:

- Ling-1T — General language model

- Ring-1T — Reasoning-focused model

This marks the program’s official entry into the 2.0 phase.
