Ant Ring-1T Debuts: Trillion-Parameter Reasoning Model Matches IMO Silver-Level Math Skills

Ring-1T — The First Open-Source Trillion-Parameter Reasoning Model

> Ring-1T is now a serious contender capable of standing shoulder-to-shoulder with closed-source AI giants — another proof that open source can match closed source performance.

---

Rapid-Fire Releases from Ant Group

In roughly two weeks, Ant Group has released three major AI models back-to-back:

- Sept 30 — Ring-1T-preview

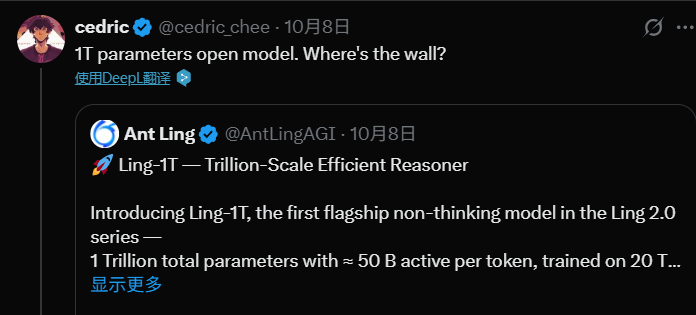

- Oct 9 (midnight) — Ling-1T, their largest general-purpose language model so far

- Oct 14 (midnight) — Ring-1T official reasoning model release

Ling-1T downloads on HuggingFace surpassed 1,000 in just four days.

Social media and Reddit lit up with discussion of its architecture choices, including its active-parameter count and fully dense early layers, both aimed at stronger reasoning.

Five days after Ling-1T, Ring-1T debuted as the world’s first open-source trillion-parameter reasoning model. Its preview version had already topped multiple leaderboards.

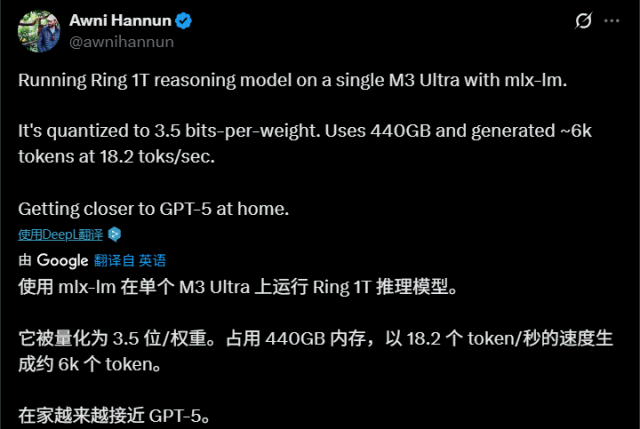

> An Apple engineer even ran Ring-1T-preview locally on an M3 Ultra chip.

---

Full Training Pipeline & Reinforcement Learning

With the official release, Ring-1T completed:

- Large-scale RLVR — reinforcement learning with verifiable rewards for reasoning boost

- RLHF — reinforcement learning from human feedback for general capability balance
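To make "verifiable rewards" concrete, here is a minimal sketch of a rule-based reward for a math task. The `\boxed{}` answer convention, the function names, and the 0/1 reward scale are assumptions for illustration; the article does not describe Ring-1T's actual reward functions.

```python
import re
from fractions import Fraction
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the content of the last \\boxed{...} in the completion, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Hypothetical 0/1 reward: 1.0 only if the final answer matches the reference."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no parseable final answer, so nothing to verify
    try:
        # Compare numerically when both sides parse as rationals ("1/2" vs "0.5").
        return float(Fraction(answer) == Fraction(reference))
    except (ValueError, ZeroDivisionError):
        # Fall back to an exact string match for non-numeric answers.
        return float(answer == reference.strip())
```

Because the reward comes from a programmatic check rather than a learned judge, it can be computed automatically at scale, which is what makes this style of RL attractive for math and code.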

Results:

- More balanced skill set — especially in mathematics, programming, and logical reasoning

- IMO silver-medal level on actual Olympiad problems when run inside the multi-agent framework AWorld


---

Open-Source SOTA — How Strong is the Official Ring-1T?

Benchmarks Tested

To measure elite-level cognitive performance, the team selected eight advanced benchmarks:

- Math: AIME25, HMMT25

- Coding: LiveCodeBench, CodeForces-Elo

- Reasoning: ARC-AGI-v1

- Composite: Arena-Hard-v2

- Medical: HealthBench

- Creative: CreativeWriting-v3

Compared against:

- Ring-1T-preview

- Gemini-2.5-Pro

- DeepSeek-V3.1-Terminus-Thinking

- Qwen3-235B-A22B-Thinking-2507

- GPT-5-Thinking (High)

---

Gains over Preview

- Almost all dimensions improved

- High stability in ARC-AGI-v1, Arena-Hard-v2, HealthBench

- Stays strong even on CodeForces and LiveCodeBench, where it scores slightly below the preview

- Closes the gap with GPT-5-Thinking (High), often to within a single point

Ring-1T now leads open-source models on many of these benchmarks, sometimes matching or beating closed-source leaders like Gemini-2.5-Pro in reasoning.

---

Competition-Level Results

IMO 2025:

- Solved problems 1, 3, 4, 5 → Silver-medal performance

- Near-perfect geometry proof on problem 2

- Problem 6 answer aligned with Gemini-2.5-Pro

ICPC World Finals 2025:

- Solved 5 problems (D, F, J, K, L) within 3 attempts

- Beat Gemini-2.5-Pro (3 problems)

- Just shy of GPT-5-Thinking (6 problems)

---

Hands-On Testing

1. Coding Ability

Prompt: Generate a simple playable Flappy Bird game.

Result: Fully functional code, slightly abstract art, smooth interaction.

Prompt: Generate a Snake game with smooth animation, scoring, collision detection.

Result: Clean UI, distinct head/body, seamless start/pause function.

---

2. Physics Simulation

Prompt: p5.js script with 25 particles inside a rotating cylindrical container, trails, collision detection.
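The core of a simulation like this is the wall-collision step. The sketch below shows that step in Python rather than p5.js; it is not the model's output. It treats the container as a 2D circle centered at the origin and leaves out the container's rotation, particle trails, and particle-to-particle collisions. All names and default values are illustrative assumptions.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float


def step(p: Particle, radius: float, dt: float) -> None:
    """Advance one particle and bounce it off a circular wall centered at the origin."""
    p.x += p.vx * dt
    p.y += p.vy * dt
    dist = math.hypot(p.x, p.y)
    if dist > radius:
        # Unit normal pointing outward from the center at the contact point.
        nx, ny = p.x / dist, p.y / dist
        v_dot_n = p.vx * nx + p.vy * ny
        if v_dot_n > 0:
            # Reflect the velocity about the wall normal: v' = v - 2 (v . n) n.
            p.vx -= 2 * v_dot_n * nx
            p.vy -= 2 * v_dot_n * ny
        # Put the particle back on the wall so it cannot escape.
        p.x, p.y = nx * radius, ny * radius


def simulate(n_particles: int = 25, radius: float = 1.0, steps: int = 1000,
             dt: float = 0.01, seed: int = 0) -> list[Particle]:
    """Run a seeded simulation and return the final particle states."""
    rng = random.Random(seed)
    particles = []
    for _ in range(n_particles):
        # Start each particle inside the container with a random velocity.
        angle = rng.uniform(0, 2 * math.pi)
        r = 0.9 * radius * math.sqrt(rng.random())
        particles.append(Particle(r * math.cos(angle), r * math.sin(angle),
                                  rng.uniform(-1, 1), rng.uniform(-1, 1)))
    for _ in range(steps):
        for p in particles:
            step(p, radius, dt)
    return particles
```

In p5.js the same update would run once per frame inside `draw()`, with the trail effect typically produced by painting a translucent background instead of clearing the canvas.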

---

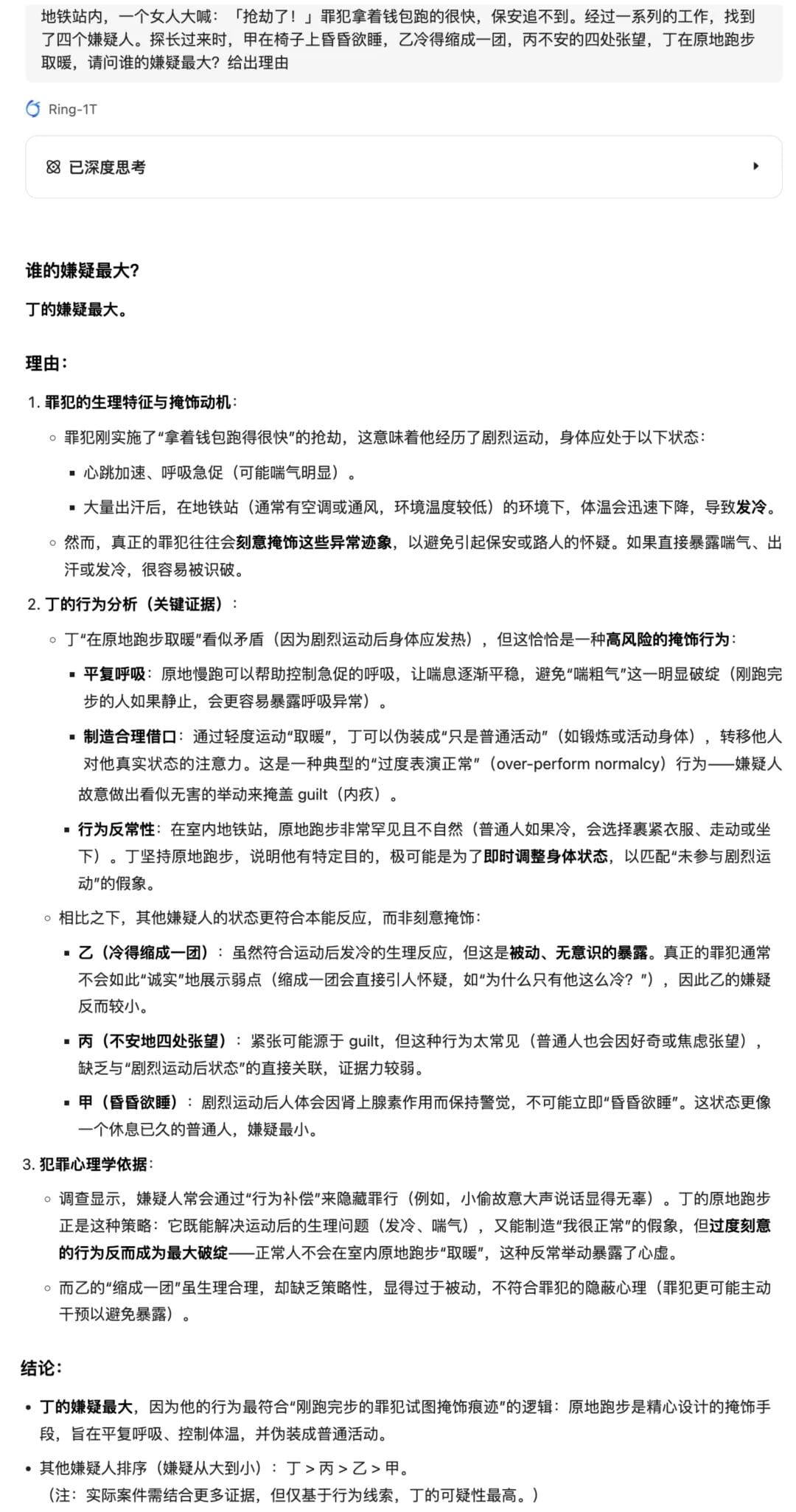

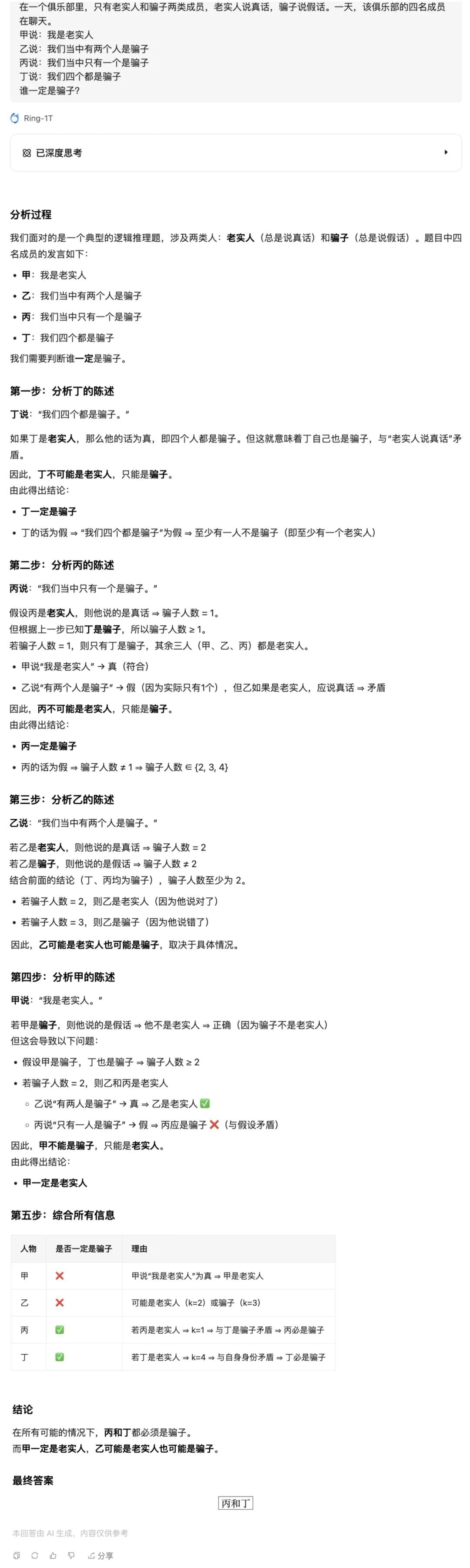

3. Logical Reasoning Tests

- Rabbit race puzzle — Correct fastest & slowest identification

- Subway robbery suspect puzzle — Correct identification based on behavioral clues

- Truth vs lie logic puzzle — Produced reasoning table and correct deduction

---

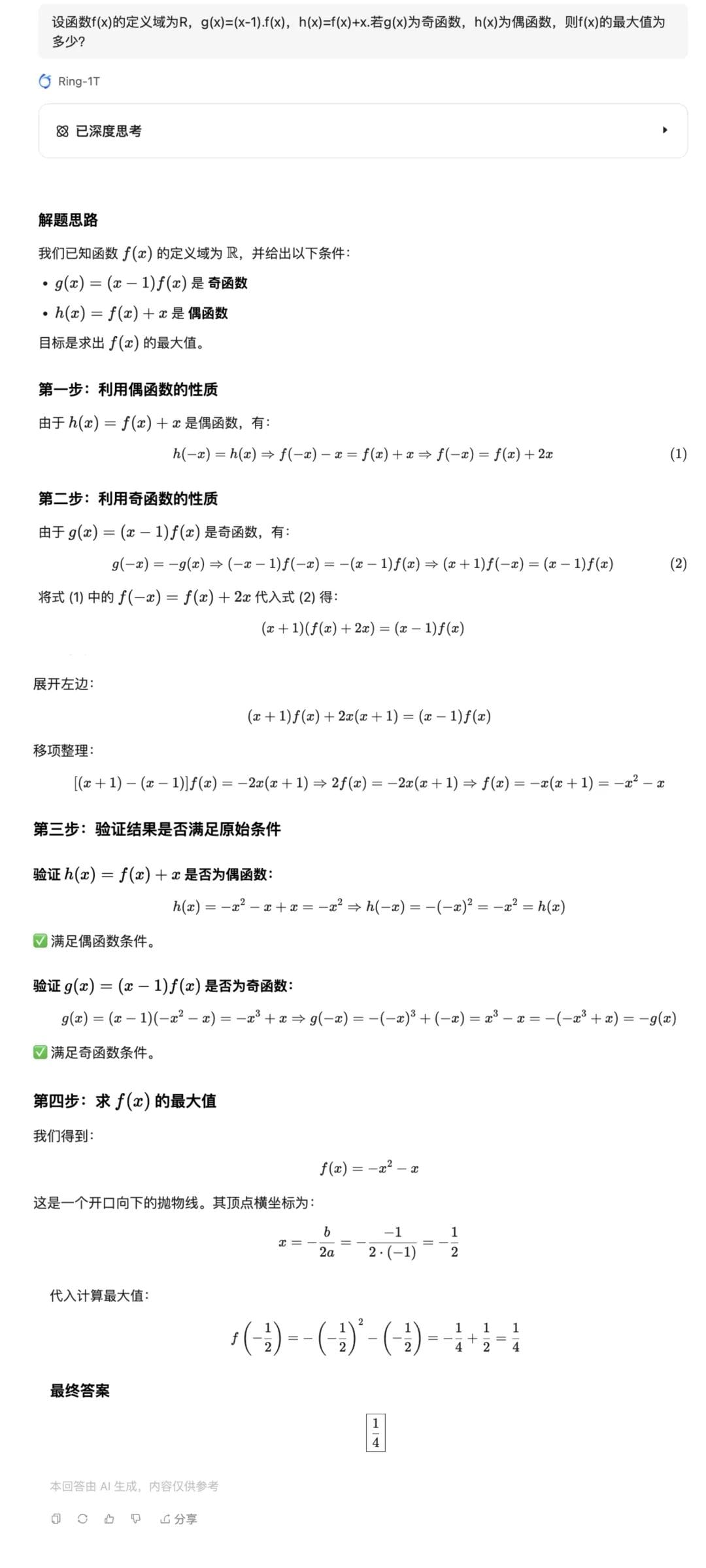

4. Math Olympiad Problem

Prompt: 2025 National High School Math Olympiad preliminary problem

Result: Clear chain-of-thought → Solve equations → Find exact maximum value.

---

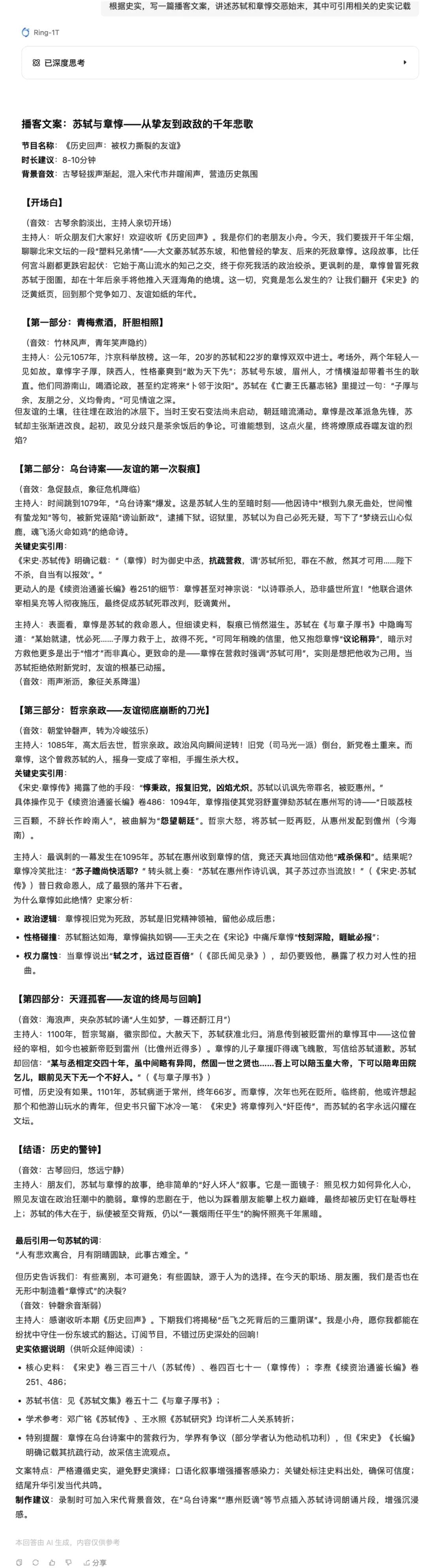

5. Creative Writing Performance

Prompt: Podcast script about Su Shi and Zhang Dun, with historical citations

Result: Vivid narrative, conversational style, annotated audio effects.

---

Overall Evaluation

Strengths:

- Code Generation: Quick, logical, complete games

- Reasoning: Accurate contextual understanding, efficient solving

- Creative Writing: Style adaptation, engaging narratives

Weaknesses:

- Identity consistency issues

- Occasional mixed Chinese-English output

- Repetition in text

---

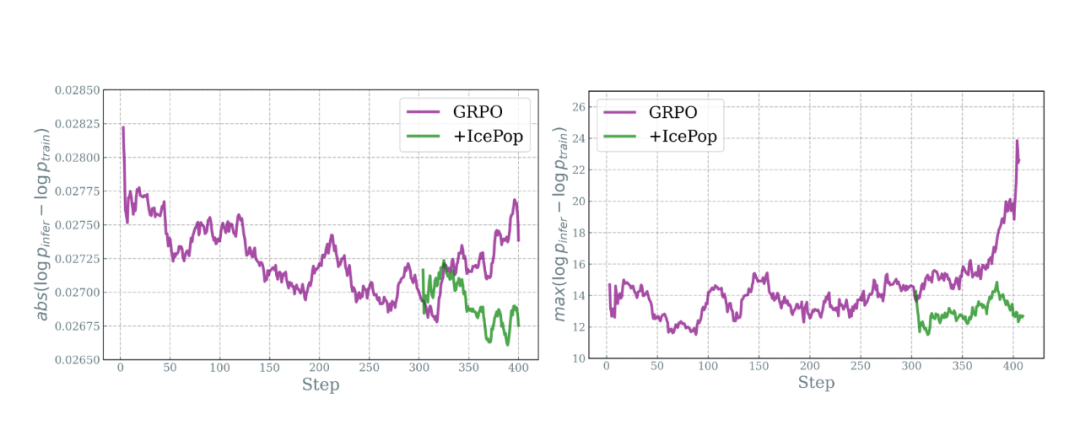

MoE RL Training Challenges & the “IcePop” Fix

Problem: Train–Infer Mismatch

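Training and inference engines can differ in numerical precision and settings, so they may assign slightly different probabilities to the same tokens. Over long-cycle RL training, this mismatch can destabilize or crash the run.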

Solution: IcePop Algorithm

- Bi-directional truncation + masked clipping

- Filters unstable gradient signals by monitoring token probability differences

- Principle: better to skip an update than to learn from a wrong signal

Results: more stable curves in long-cycle training than the GRPO baseline, with fewer crashes and healthier output diversity.
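One way to read the masking idea above is as a per-token filter on the ratio between the training engine's and the inference engine's probability for each sampled token. The sketch below is an interpretation, not the published algorithm: the function names and the thresholds `low=0.5` and `high=5.0` are assumptions chosen for illustration.

```python
import math
from typing import List


def icepop_style_weights(train_logprobs: List[float], infer_logprobs: List[float],
                         low: float = 0.5, high: float = 5.0) -> List[float]:
    """Keep the train/infer probability ratio when it lies in [low, high], else 0."""
    weights = []
    for lp_train, lp_infer in zip(train_logprobs, infer_logprobs):
        ratio = math.exp(lp_train - lp_infer)
        # Two-sided check: tokens whose ratio is too small or too large are masked out.
        weights.append(ratio if low <= ratio <= high else 0.0)
    return weights


def apply_mask(advantages: List[float], weights: List[float]) -> List[float]:
    """Scale per-token advantages; masked tokens contribute no gradient signal."""
    return [a * w for a, w in zip(advantages, weights)]
```

For example, a token with training probability 0.5 and inference probability 0.01 has a ratio of 50, falls outside the assumed band, and is dropped from the update, while a token on which the two engines agree passes through with weight 1.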

---

ASystem RL Framework for Trillion-Scale

- SingleController + SPMD architecture for massive parallel training

- Transparent GPU memory offloading & cross-node pooling → avoids OOM

- GPU P2P direct links for weight exchange within seconds → no CPU bottleneck

- Built-in serverless sandbox for reward evaluation at 10K/s throughput
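The "SingleController + SPMD" pattern in the first bullet can be illustrated with a toy: one controller launches the same program on every worker, and each worker uses its rank to pick its own slice of the data. This is a sketch of the general pattern using Python threads, not ASystem's implementation; `SingleController` and `spmd_program` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def spmd_program(rank: int, world_size: int, data: Sequence[float]) -> float:
    """The single program every worker runs; the rank selects its data shard."""
    shard = data[rank::world_size]
    return sum(x * x for x in shard)


class SingleController:
    """Hypothetical controller: launches one program on all workers, gathers results."""

    def __init__(self, world_size: int) -> None:
        self.world_size = world_size

    def run(self, program: Callable[[int, int, Sequence[float]], float],
            data: Sequence[float]) -> List[float]:
        with ThreadPoolExecutor(max_workers=self.world_size) as pool:
            futures = [pool.submit(program, rank, self.world_size, data)
                       for rank in range(self.world_size)]
            # Results are gathered in rank order, regardless of completion order.
            return [f.result() for f in futures]


controller = SingleController(world_size=4)
partials = controller.run(spmd_program, list(range(10)))
total = sum(partials)  # controller-side reduction of per-worker partial results
```

The appeal of the split is that orchestration logic lives in one place while the heavy computation stays identical across workers, which keeps the parallel part simple to scale.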

---

Ant Group’s Open-Source Strategy

Beyond releasing models, Ant Group open-sources foundational RL frameworks — e.g., AReaL — enabling the community to replicate trillion-scale RL engineering.

The goal: Make AI as accessible and omnipresent as electricity or payments.

---

Creator Ecosystem Integration

AiToEarn (official site) bridges open-source AI with content monetization:

- Multi-platform publishing (Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, YouTube, Instagram, Pinterest, X/Twitter)

- AI content generation tools

- Cross-platform analytics and AI model rankings

- Designed to help creators earn from AI workflows built on models like Ring-1T

---

Bottom Line:

Ring-1T isn’t just a milestone for open-source reasoning — it’s a platform enabler for next-gen AI creators.

With technical innovation in reinforcement learning (IcePop, ASystem) and real-world publishing infrastructure (AiToEarn), the gap between experimental AI and creator monetization is shrinking fast.