AnyLanguageModel: Unified API for Local and Remote LLMs on Apple Platforms

# LLMs Have Become Essential Tools for Building Software — But Integration for Apple Developers Is Still Too Painful

For many Apple developers, incorporating **Large Language Models (LLMs)** into apps remains a frustrating process — even as AI becomes central to modern software development.

---

## Typical AI App Architecture

Most AI-powered apps for Apple platforms use a **hybrid approach** combining:

- **Local models** via Core ML or MLX — for privacy & offline use

- **Cloud providers** like OpenAI or Anthropic — for advanced features

- **Apple’s Foundation Models** — as a system-level fallback

Each option has **its own API, setup, and workflow**, which multiplies the complexity.

> “I thought I’d quickly use the demo for a test and maybe a quick and dirty build, but instead wasted so much time. Drove me nuts.”
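Here is a minimal sketch of the routing decision a hybrid app typically makes before each request. The `Backend` enum, the `RequestContext` struct and the policy in `chooseBackend(for:)` are hypothetical and only illustrate the idea. In a real app, every case in this switch would also need its own SDK integration, and that is where the separate APIs add up.

```swift
// Hypothetical backends mirroring the three options above.
enum Backend: Equatable {
    case local   // e.g. a Core ML or MLX model on device
    case cloud   // e.g. OpenAI or Anthropic over the network
    case system  // Apple's Foundation Models, also on device
}

// Hypothetical per-request facts the app knows before choosing a backend.
struct RequestContext {
    var isOnline: Bool
    var containsPrivateData: Bool
    var needsAdvancedReasoning: Bool
    var localModelAvailable: Bool
}

// Illustrative routing policy; real apps will weigh these factors differently.
func chooseBackend(for context: RequestContext) -> Backend {
    // Private data or no network: keep the request on device.
    if context.containsPrivateData || !context.isOnline {
        return context.localModelAvailable ? .local : .system
    }
    // Online and the task is demanding: use a cloud provider.
    if context.needsAdvancedReasoning {
        return .cloud
    }
    // Otherwise prefer the local model, falling back to the system model.
    return context.localModelAvailable ? .local : .system
}

let offlineRequest = RequestContext(
    isOnline: false,
    containsPrivateData: false,
    needsAdvancedReasoning: true,
    localModelAvailable: true
)
print(chooseBackend(for: offlineRequest)) // local
```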

---

## The High Cost of Experimentation

Prototyping with today’s tools is **time‑intensive and cumbersome**, discouraging developers from discovering that many **local, open-source models** already meet their needs.

---

## Introducing [AnyLanguageModel](https://github.com/mattt/AnyLanguageModel)

**AnyLanguageModel** is a **Swift package** that works as a drop‑in replacement for Apple’s Foundation Models framework — but supports **multiple model providers**.

**Goal:**

Make working with LLMs on Apple platforms *simpler, faster,* and **more open**, with painless integration of **local/open-source models**.

---

## The Solution — Simplicity by Design

Switch the import statement and keep using the same API:

```diff
- import FoundationModels
+ import AnyLanguageModel

  let model = SystemLanguageModel.default
  let session = LanguageModelSession(model: model)

  let response = try await session.respond(to: "Explain quantum computing in one sentence")
  print(response.content)
```

With **AnyLanguageModel**, you can **swap** in local, cloud, or mixed‑provider LLMs **without rewriting core logic**.
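Swapping works because app code depends on one shared abstraction rather than on a specific provider. The sketch below shows that pattern in plain Swift. It uses a hypothetical `TextGenerator` protocol and two stub models. It is not AnyLanguageModel's API, which follows Foundation Models (`LanguageModelSession`, `respond(to:)`) and is asynchronous.

```swift
// Hypothetical minimal protocol standing in for a shared model abstraction.
protocol TextGenerator {
    var name: String { get }
    func respond(to prompt: String) throws -> String
}

// Stub "local" model: echoes the prompt.
struct EchoLocalModel: TextGenerator {
    let name = "local-echo"
    func respond(to prompt: String) throws -> String { "local: \(prompt)" }
}

// Stub "cloud" model: uppercases the prompt.
struct UppercaseCloudModel: TextGenerator {
    let name = "cloud-upper"
    func respond(to prompt: String) throws -> String { "cloud: \(prompt.uppercased())" }
}

// Core app logic depends only on the protocol, not on any concrete provider.
struct Session {
    let model: any TextGenerator
    private(set) var transcript: [(prompt: String, reply: String)] = []

    init(model: any TextGenerator) { self.model = model }

    mutating func respond(to prompt: String) throws -> String {
        let reply = try model.respond(to: prompt)
        transcript.append((prompt: prompt, reply: reply))
        return reply
    }
}

var local = Session(model: EchoLocalModel())
var cloud = Session(model: UppercaseCloudModel())
print(try local.respond(to: "hi")) // local: hi
print(try cloud.respond(to: "hi")) // cloud: HI
```

Only the value passed to `Session(model:)` changes between the two sessions. The `respond(to:)` call and the transcript handling stay the same.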

---

## Benefits at a Glance

- **Rapid prototyping**

- **Freedom** to pick the right model per scenario

- **Privacy-respecting** local model support

- **Less reliance** on paid cloud APIs


---

## Example Usage

**Example – Using a local open-source model via MLX:**

```swift
import AnyLanguageModel

// Requires the MLX package trait (see "Lean Builds with Package Traits").
let model = MLXLanguageModel(modelId: "mlx-community/Qwen3-4B-4bit")
let session = LanguageModelSession(model: model)

let response = try await session.respond(to: "Explain quantum computing in one sentence")
print(response.content)
```

---

### Supported Providers

- **Apple Foundation Models** — Native (macOS 26+ / iOS 26+)

- **Core ML** — Neural Engine acceleration

- **MLX** — Quantized models on Apple Silicon

- **llama.cpp** — GGUF backend support

- **Ollama** — Local server API

- **OpenAI / Anthropic / Google Gemini** — Cloud providers

- **Hugging Face Inference Providers** — Access hundreds of models

**Focus:** local models that can be downloaded from the [Hugging Face Hub](https://huggingface.co/docs/hub/). Cloud providers are included to make getting started easy and to offer a migration path.

---

## Why Use Foundation Models as the Base API?

We chose **Apple’s Foundation Models framework** over designing a new abstraction because:

- **Well-designed**: Takes advantage of Swift features like macros, and its abstractions around sessions, tools, and generation map well to how LLMs work

- **Intentionally minimal**: Represents a stable lowest common denominator across LLM APIs

- **Familiar to Swift devs**: Reduces conceptual overhead

- **Keeps abstraction light**: Easier to swap providers
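One way to picture the "lowest common denominator" idea is to treat each provider's features as a set. The API that every provider can implement is then the intersection of those sets. The sketch below models this with an `OptionSet`. The provider names and capability assignments are hypothetical placeholders and do not describe any real provider.

```swift
// Hypothetical capability flags a model provider might offer.
struct Capabilities: OptionSet {
    let rawValue: Int
    static let textGeneration = Capabilities(rawValue: 1 << 0)
    static let streaming      = Capabilities(rawValue: 1 << 1)
    static let toolCalling    = Capabilities(rawValue: 1 << 2)
    static let imageInput     = Capabilities(rawValue: 1 << 3)
}

// Placeholder data for illustration only.
let providers: [String: Capabilities] = [
    "providerA": [.textGeneration, .streaming, .toolCalling, .imageInput],
    "providerB": [.textGeneration, .streaming, .toolCalling],
    "providerC": [.textGeneration, .streaming],
]

// The portable core API is whatever every provider supports.
func commonDenominator(_ sets: [Capabilities]) -> Capabilities {
    guard let first = sets.first else { return [] }
    return sets.dropFirst().reduce(first) { $0.intersection($1) }
}

let common = commonDenominator(Array(providers.values))
print(common == [.textGeneration, .streaming]) // true
```

Anything outside the intersection, such as image input in this toy data, has to be offered as an optional extension rather than as part of the core API.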

---

## Lean Builds with Package Traits

Multi-backend libraries often cause **dependency bloat**. AnyLanguageModel solves this via **Swift 6.1 package traits**:

```swift
// In Package.swift
dependencies: [
    .package(
        url: "https://github.com/mattt/AnyLanguageModel.git",
        from: "0.4.0",
        traits: ["MLX"] // Only MLX dependencies
    )
]
```

**Available traits:** `CoreML`, `MLX`, `Llama` (for `llama.cpp` / [llama.swift](https://github.com/mattt/llama.swift)).

**By default:** only the base API and the cloud providers, which use `URLSession` and need no extra dependencies.
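The following sketch shows roughly what the library side of traits looks like under SwiftPM 6.1 (SE-0450). The package declares a trait and makes a dependency conditional on it. The package, product and URL names are hypothetical, and this is not AnyLanguageModel's real manifest.

```swift
// swift-tools-version: 6.1
// Hypothetical names throughout; a sketch of SE-0450 package traits.
import PackageDescription

let package = Package(
    name: "MultiBackendKit",
    products: [
        .library(name: "MultiBackendKit", targets: ["MultiBackendKit"]),
    ],
    traits: [
        .trait(name: "HeavyBackend", description: "Enables the optional on-device backend"),
        // No traits are enabled unless a consumer asks for them.
        .default(enabledTraits: []),
    ],
    dependencies: [
        .package(url: "https://example.com/heavy-backend.git", from: "1.0.0"),
    ],
    targets: [
        .target(
            name: "MultiBackendKit",
            dependencies: [
                // Only built and linked when the "HeavyBackend" trait is enabled.
                .product(
                    name: "HeavyBackend",
                    package: "heavy-backend",
                    condition: .when(traits: ["HeavyBackend"])
                ),
            ]
        ),
    ]
)
```

In SE-0450's design, enabled traits are also exposed as compilation conditions. Code that uses the optional backend can therefore be wrapped in `#if HeavyBackend ... #endif`.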

---

## Image Support (and Trade-offs)

[Vision-language models](https://huggingface.co/blog/vlms-2025) can:

- Describe images

- OCR screenshots

- Analyze charts

- Answer image-related questions

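Apple's Foundation Models API **does not yet support** image input, so AnyLanguageModel adds it now. The trade-off is that image prompts go beyond the Foundation Models API, so code that sends them is no longer a drop-in match for Apple's framework.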
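Supporting images means each provider adapter has to translate an image into the format its backend expects. The code below sketches what that can involve for a cloud API. It builds a base64 image block shaped like the one Anthropic's Messages API accepts (`type`, `source.type`, `source.media_type`, `source.data`). The `ImageBlock` and `ImageSource` types and the `makeImageBlock` function are hypothetical, not AnyLanguageModel internals.

```swift
import Foundation

// Hypothetical wire types mirroring a base64 image content block.
struct ImageSource: Codable, Equatable {
    let type: String
    let mediaType: String
    let data: String

    enum CodingKeys: String, CodingKey {
        case type
        case mediaType = "media_type"
        case data
    }
}

struct ImageBlock: Codable, Equatable {
    let type: String
    let source: ImageSource
}

// Base64-encodes raw image bytes into a content block.
func makeImageBlock(bytes: Data, mediaType: String) -> ImageBlock {
    ImageBlock(
        type: "image",
        source: ImageSource(type: "base64", mediaType: mediaType, data: bytes.base64EncodedString())
    )
}

let pngHeader = Data([0x89, 0x50, 0x4E, 0x47])
let block = makeImageBlock(bytes: pngHeader, mediaType: "image/png")
print(block.source.data) // iVBORw==

// Serialize with stable key order so the JSON is easy to inspect.
let encoder = JSONEncoder()
encoder.outputFormatting = [.sortedKeys]
let json = try String(decoding: encoder.encode(block), as: UTF8.self)
print(json)
```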

**Example – Sending an image to Anthropic Claude:**

```swift
import AnyLanguageModel
import Foundation

// Reads the API key from the environment; the force unwrap crashes if it is unset.
let model = AnthropicLanguageModel(
    apiKey: ProcessInfo.processInfo.environment["ANTHROPIC_API_KEY"]!,
    model: "claude-sonnet-4-5-20250929"
)

let session = LanguageModelSession(model: model)

let response = try await session.respond(
    to: "What's in this image?",
    image: .init(url: URL(fileURLWithPath: "/path/to/image.png"))
)
print(response.content)
```
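The force unwrap on the environment lookup crashes the app if `ANTHROPIC_API_KEY` is unset. A hypothetical helper like `requiredEnvironmentValue(_:in:)` below turns that into a catchable error. The environment is injectable, so the helper is easy to test.

```swift
import Foundation

// Hypothetical error type for missing configuration.
enum ConfigurationError: Error, Equatable {
    case missingEnvironmentVariable(String)
}

// Hypothetical helper: returns a non-empty value or throws instead of crashing.
func requiredEnvironmentValue(
    _ name: String,
    in environment: [String: String] = ProcessInfo.processInfo.environment
) throws -> String {
    guard let value = environment[name], !value.isEmpty else {
        throw ConfigurationError.missingEnvironmentVariable(name)
    }
    return value
}

do {
    let key = try requiredEnvironmentValue("ANTHROPIC_API_KEY")
    print("Found a key with \(key.count) characters")
} catch {
    print("Set ANTHROPIC_API_KEY before running: \(error)")
}
```

The helper's result can be passed as the `apiKey:` argument in place of the force-unwrapped lookup.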

---

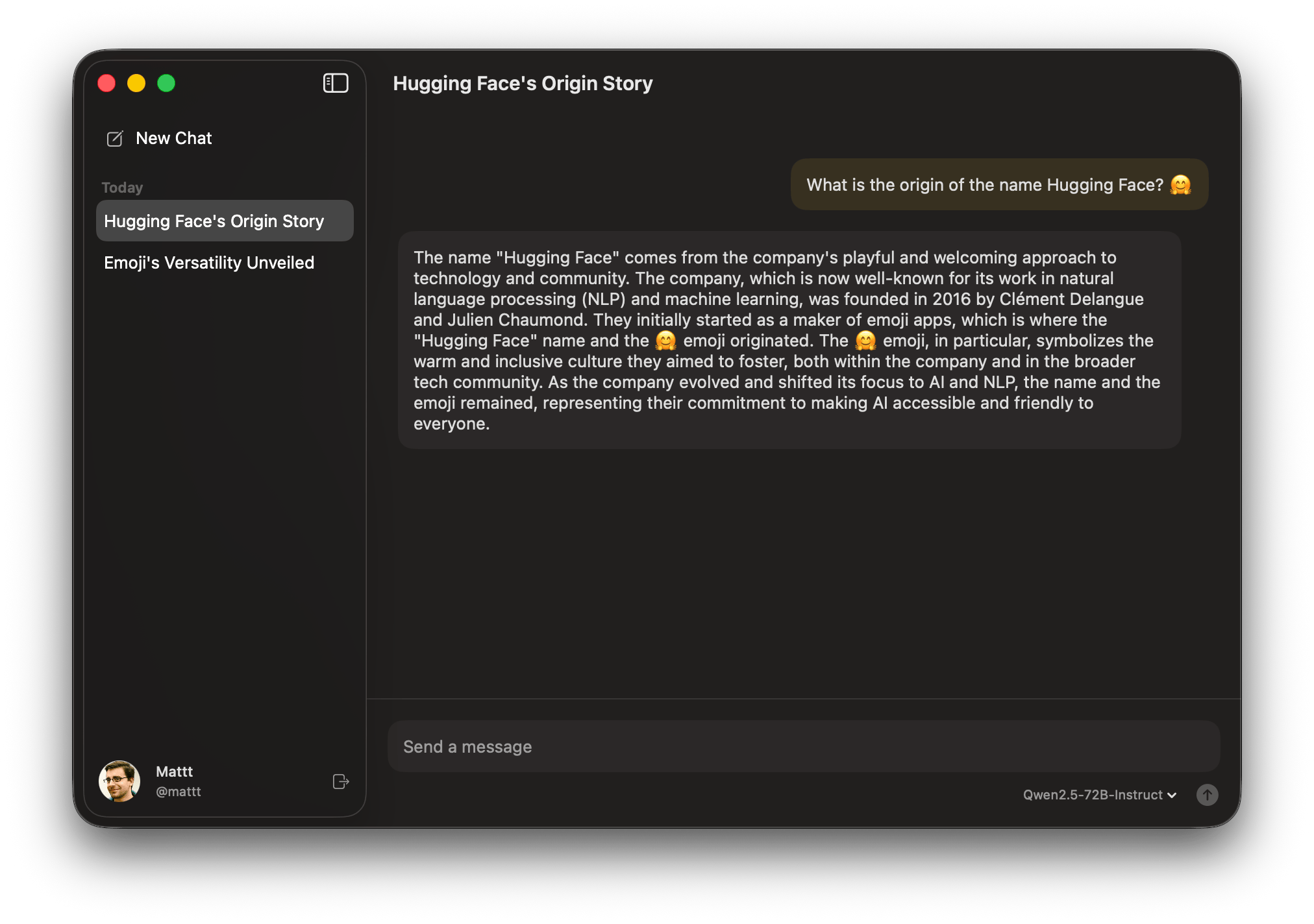

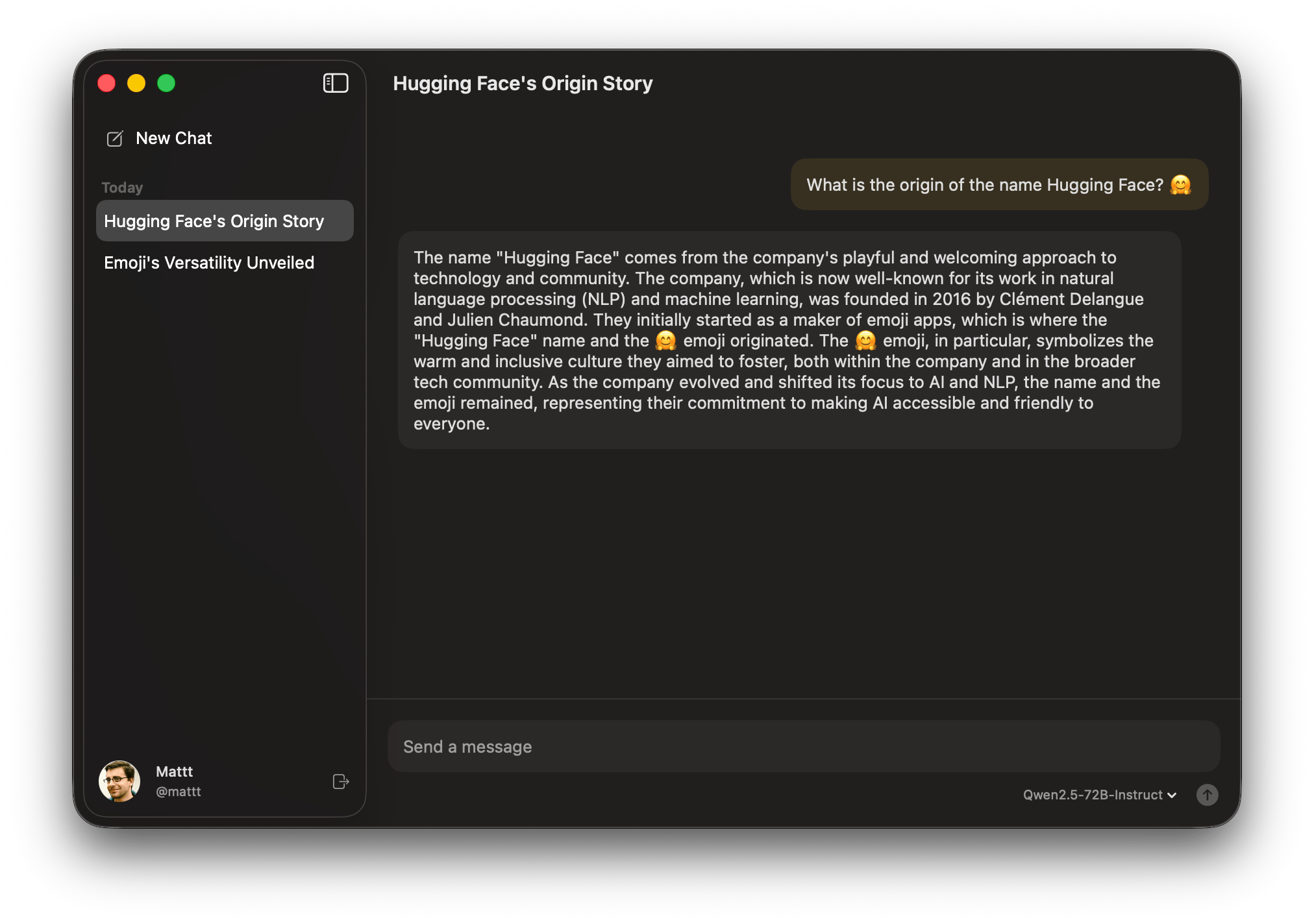

## Try It Out: [chat-ui-swift](https://github.com/mattt/chat-ui-swift)

![chat-ui-swift screenshot](https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/any-swift-llm/chat-ui-swift.png)

Demo app includes:

- Apple Intelligence via Foundation Models

- Hugging Face OAuth for gated models

- Streaming responses

- Chat persistence

Use it as a **starter project**: fork, experiment, and extend.
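Chat persistence, one of the demo features listed above, can be as simple as encoding the conversation with `Codable`. The sketch below writes a conversation to a temporary JSON file and reads it back. The `ChatMessage` and `Conversation` types and the `save`/`load` functions are hypothetical. chat-ui-swift may persist chats differently.

```swift
import Foundation

// Hypothetical message model for illustration.
struct ChatMessage: Codable, Equatable {
    enum Role: String, Codable { case user, assistant }
    let role: Role
    let content: String
}

struct Conversation: Codable, Equatable {
    var title: String
    var messages: [ChatMessage]
}

// Encodes the conversation as JSON and writes it atomically.
func save(_ conversation: Conversation, to url: URL) throws {
    let data = try JSONEncoder().encode(conversation)
    try data.write(to: url, options: .atomic)
}

// Reads the file back and decodes it.
func load(from url: URL) throws -> Conversation {
    let data = try Data(contentsOf: url)
    return try JSONDecoder().decode(Conversation.self, from: data)
}

let chat = Conversation(title: "Quantum", messages: [
    ChatMessage(role: .user, content: "Explain quantum computing in one sentence"),
    ChatMessage(role: .assistant, content: "(model reply)"),
])
let file = FileManager.default.temporaryDirectory.appendingPathComponent("chat.json")
try save(chat, to: file)
let restored = try load(from: file)
print(restored == chat) // true
```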

---

## What’s Next

Upcoming on the pre-1.0 roadmap:

- **Tool calling** for all providers

- **MCP integration**

- **Guided generation** for structured outputs

- Local inference optimizations

Aim: a **unified inference API** for building **agentic workflows** on Apple platforms.

---

## Get Involved

We welcome contributions!

Ways to help:

- **Try it** — Test with your own projects

- **Share feedback** — What's working, what's painful

- **File issues** — Feature requests, bugs, questions

- **Submit Pull Requests** — Join development

**Links:**

- [AnyLanguageModel on GitHub](https://github.com/mattt/AnyLanguageModel)

- [chat-ui-swift on GitHub](https://github.com/mattt/chat-ui-swift)
