Apple AI Chooses Mamba: Better Than Transformers for Agent Tasks

Apple’s AI: A Leap Beyond Transformers with Mamba + Tools

Everyone says Apple’s AI tends to lag “half a step” behind, but this time a new study goes straight for the heart of the Transformer architecture — with promising results.

Key finding: Mamba + Tools shows superior performance in agent-like scenarios.

---

Study Overview

In the recent paper To Infinity and Beyond, researchers conclude:

> In long-horizon and multi-interaction, agent-style tasks, models based on the SSM (State Space Model) architecture — such as Mamba — show potential to surpass Transformers in both efficiency and generalization.

Albert Gu, lead author of Mamba, also commented on the result in a post on X (linked under References).

---

Understanding Mamba’s Edge

The Problem with Transformers

Transformers rely on self-attention, which lets every token attend to every other token in the sequence and so capture context across the whole input.

However, this comes with major drawbacks:

- Quadratic computation cost: attention compares every token with every other token, so the work grows with sequence length × sequence length.

  - 1,000 tokens → 1 million pairwise computations

  - Tens of thousands of tokens → hundreds of millions of pairwise computations, a heavy load for GPUs
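The arithmetic behind these figures can be checked with a small sketch. The function names below are illustrative only and do not come from the paper; the second function simply counts one state update per token, which is the linear alternative discussed later.

```python
def attention_pairs(n_tokens: int) -> int:
    """Token-to-token comparisons made by full self-attention."""
    return n_tokens * n_tokens


def recurrent_updates(n_tokens: int) -> int:
    """One state update per token for a recurrent (state-based) model."""
    return n_tokens


for n in (1_000, 10_000, 30_000):
    pairs = attention_pairs(n)
    updates = recurrent_updates(n)
    print(f"{n:>6,} tokens: {pairs:>13,} pairs vs {updates:>6,} state updates")
```

Going from 1,000 to 10,000 tokens multiplies the number of pairs by 100 but the number of state updates by only 10.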

Consequences:

- High latency in long-sequence tasks.

- Inefficiency in agent-like tasks that need iterative decision-making and adaptability.

---

Mamba’s Lightweight State Space Model

Mamba, an SSM variant, avoids global attention. Instead, it stores and updates an internal state — like keeping a diary of recent events without rereading old entries.

Advantages:

- Linear computation growth with sequence length.

- Streaming processing — handle data as it arrives.

- Stable memory footprint regardless of length.
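A minimal sketch of the idea, assuming a toy scalar recurrence with fixed coefficients `a`, `b`, and `c` (all hypothetical values chosen for illustration). Mamba itself uses a much larger state and input-dependent parameters, so this is not its actual layer, only the general shape of a streaming state update.

```python
from typing import Iterable, Iterator


def stream_ssm(xs: Iterable[float], a: float = 0.9,
               b: float = 0.1, c: float = 1.0) -> Iterator[float]:
    """Toy linear recurrence: h_t = a * h_{t-1} + b * x_t, y_t = c * h_t.

    Only the single number h is kept between steps, so memory use
    does not depend on how many inputs have been seen.
    """
    h = 0.0
    for x in xs:            # inputs are consumed one at a time as they arrive
        h = a * h + b * x   # constant work per token
        yield c * h


# An impulse followed by zeros: the first input's trace shrinks every step.
outputs = list(stream_ssm([1.0, 0.0, 0.0]))
print(outputs)
```

Each step costs the same amount of work, and the contribution of an early input shrinks by a factor of `a` with every later step, which is the price of keeping a fixed-size state.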

---

Limitation — and Apple’s Improvement

Weakness: Limited Internal Memory

Because the internal state has a fixed capacity, early context in ultra-long sequences can be overwritten by later input.

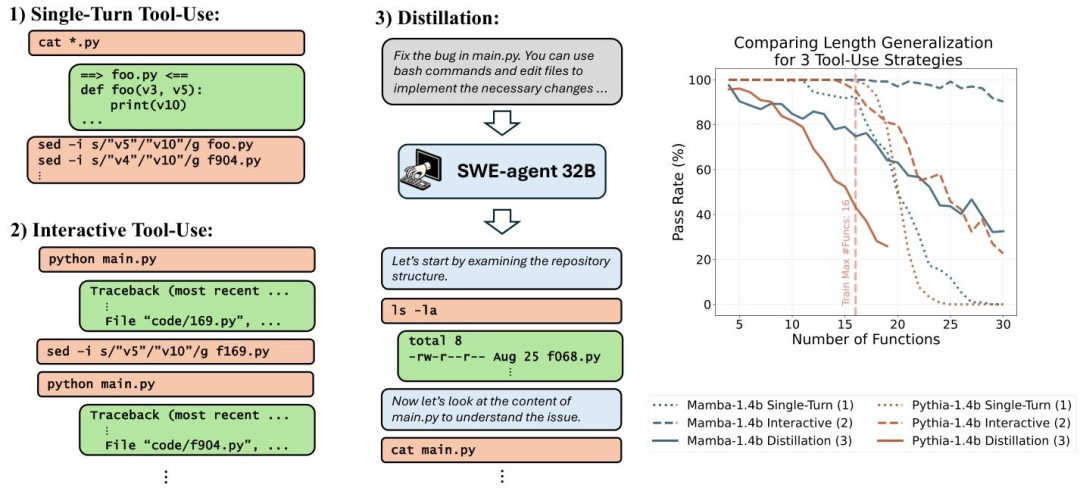

Apple’s Remedy: External Tool Integration

By adding specialized tools, Mamba can extend its capabilities:

- Math pointer tools: Record numbers, track operations.

- Code debugging tools: View files repeatedly; run and verify code.

Result: Tools act as external memory + interactive interfaces.
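To make the "external memory" idea concrete, here is a hedged sketch of pointer-style addition. The `PointerTool` class and its methods are hypothetical and are not the paper's tool interface; a scripted loop stands in for the model. The point is that the loop only ever remembers a single carry digit, while the tool holds the operands and the growing result.

```python
from typing import List, Tuple


class PointerTool:
    """Hypothetical external memory: stores operands, a pointer, and the result."""

    def __init__(self, a: str, b: str) -> None:
        self.a = a[::-1]          # least significant digit first
        self.b = b[::-1]
        self.pos = 0              # pointer into both operands
        self.out: List[str] = []  # result digits, least significant first

    def read(self) -> Tuple[int, int]:
        """Return the digits under the pointer (0 past the end of an operand)."""
        da = int(self.a[self.pos]) if self.pos < len(self.a) else 0
        db = int(self.b[self.pos]) if self.pos < len(self.b) else 0
        return da, db

    def write_and_advance(self, digit: int) -> None:
        self.out.append(str(digit))
        self.pos += 1

    def done(self) -> bool:
        return self.pos >= max(len(self.a), len(self.b))

    def result(self) -> str:
        return "".join(reversed(self.out))


def add_with_pointer(a: str, b: str) -> str:
    tool = PointerTool(a, b)
    carry = 0  # the only state kept between steps
    while not tool.done():
        da, db = tool.read()
        carry, digit = divmod(da + db + carry, 10)
        tool.write_and_advance(digit)
    if carry:
        tool.write_and_advance(carry)
    return tool.result()


print(add_with_pointer("999", "1"))
```

Because the per-step state is constant, the same loop works unchanged whether the operands have 5 digits or 1,000.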

---

Performance Gains

With tools, Mamba achieves:

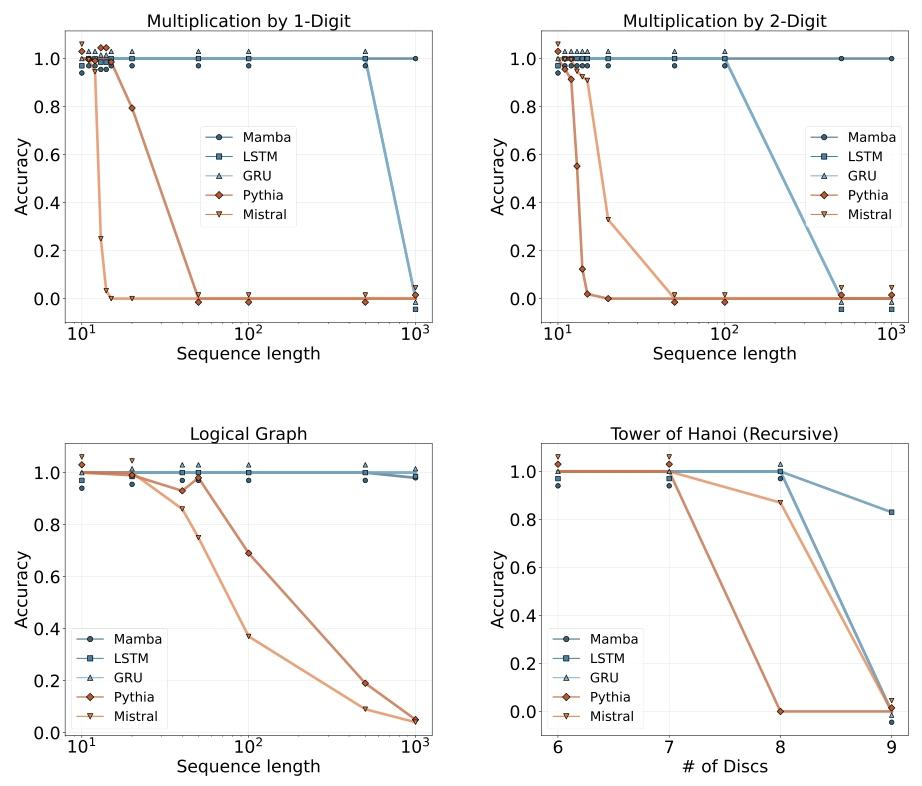

- Multi-digit addition:

  - With the pointer tool, a model trained on 5-digit sums scales to 1,000-digit sums with ~100% accuracy.

  - Transformers show errors beyond 20-digit sums.

- Code debugging:

  - Simulated workflows (view → edit → run → verify) yield better accuracy than Transformers, even on more complex codebases.
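The view → edit → run → verify cycle can be sketched as follows. Everything here is an assumption made for illustration: the in-memory `files` dictionary, the four helper functions, and the scripted list of candidate edits that stands in for the model's decisions. It is not the paper's benchmark setup.

```python
from typing import Callable, Dict, List, Tuple


def view(files: Dict[str, str], name: str) -> str:
    return files[name]


def edit(files: Dict[str, str], name: str, old: str, new: str) -> None:
    files[name] = files[name].replace(old, new)


def run(files: Dict[str, str], name: str) -> dict:
    namespace: dict = {}
    exec(files[name], namespace)  # execute the file's source in a fresh namespace
    return namespace


def debug_loop(files: Dict[str, str], name: str,
               candidate_edits: List[Tuple[str, str]],
               check: Callable[[dict], bool]) -> List[str]:
    """Try candidate edits one by one, re-viewing the file each time."""
    log: List[str] = []
    for old, new in candidate_edits:
        source = view(files, name)
        log.append("view")
        if old not in source:
            continue
        edit(files, name, old, new)
        log.append("edit")
        namespace = run(files, name)
        log.append("run")
        if check(namespace):
            log.append("verify:pass")
            return log
        log.append("verify:fail")
        files[name] = source  # restore the file before the next attempt
    return log


files = {"calc.py": "def add(a, b):\n    return a - b\n"}
edits = [("a - b", "a * b"), ("a - b", "a + b")]
print(debug_loop(files, "calc.py", edits, lambda ns: ns["add"](2, 3) == 5))
```

The file contents live in the tool rather than in the model's state, so the file can be viewed again at any step without having been memorized.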

---

Connecting to Broader AI Trends

Tool-augmented AI aligns with wider developments in:

- Structured tool ecosystems

- Multi-modal input handling

- Collaborative agents

---

Another Example: Tower of Hanoi

In step-by-step logic puzzles:

- Transformers: Capable but slow, and prone to stalling when a plan needs repeated adjustment.

- Mamba without tools: Fast to react but weak on memory.

- Mamba + tools: Combines quick reactions with strong recall, so it handles more complex scenarios effectively.
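As a rough illustration of splitting the work between a small-state solver and an external tool, the sketch below keeps the peg contents in a hypothetical `HanoiBoard` class, while the solver tracks only a step counter. The move rule is the well-known bitwise formula for iterative Tower of Hanoi, used here as a stand-in for a model's policy; none of this is taken from the paper.

```python
from typing import List


class HanoiBoard:
    """Hypothetical tool: stores the pegs and rejects illegal moves."""

    def __init__(self, n_disks: int) -> None:
        self.n_disks = n_disks
        self.pegs: List[List[int]] = [list(range(n_disks, 0, -1)), [], []]
        self.moves = 0

    def move(self, src: int, dst: int) -> None:
        if not self.pegs[src]:
            raise ValueError(f"peg {src} is empty")
        disk = self.pegs[src][-1]
        if self.pegs[dst] and self.pegs[dst][-1] < disk:
            raise ValueError(f"cannot place disk {disk} on a smaller disk")
        self.pegs[dst].append(self.pegs[src].pop())
        self.moves += 1

    def solved(self) -> bool:
        full = list(range(self.n_disks, 0, -1))
        return full in (self.pegs[1], self.pegs[2])


def solve(n_disks: int) -> HanoiBoard:
    board = HanoiBoard(n_disks)
    for step in range(1, 2 ** n_disks):  # the solver's only state is `step`
        src = (step & (step - 1)) % 3
        dst = ((step | (step - 1)) + 1) % 3
        board.move(src, dst)             # the tool tracks where every disk is
    return board


board = solve(3)
print(board.pegs, board.moves)
```

With this rule the tower ends on peg 2 for an odd number of disks and on peg 1 for an even number, which is why `solved` accepts either target peg.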

---

Final Takeaway

Transformers excel in global context but struggle with efficiency in adaptive, interactive tasks.

Mamba + Tools combines fast state-based processing with external memory, resulting in superior agent-like performance and greater scalability.

References:

- Paper: https://arxiv.org/pdf/2510.14826

- Author comment: https://x.com/_albertgu/status/1980287154883358864

---

For Developers & Creators

As AI agent architectures evolve, pairing reactive models like Mamba with specialized tools could redefine efficiency benchmarks.
