Apple's AI Paper Backfires: GPT-Written Ground Truth Forces a Beijing Programmer to Pull an All-Nighter

"Speechless" Moment in AI Research: Apple Paper Retracted After Serious Benchmark Flaws

Jaw-dropping "speechless" moments happen in tech every day, but this one is truly remarkable.

Lei Yang, a researcher at the AI large-model company StepFun, was badly burned by a paper Apple had posted on arXiv.

When Yang reported the problems on GitHub, Apple's team replied briefly and closed the issue. Only after he posted a public follow-up comment did the authors withdraw the paper, deleting the code repository as well.

---

How It Started

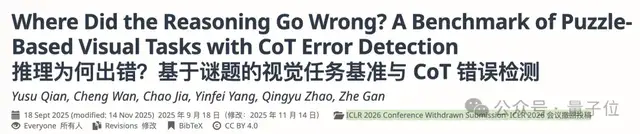

Earlier this month, Lei Yang was tipped off by a colleague about Apple’s paper on arXiv (also submitted to ICLR 2026).

The benchmark it proposed was a perfect fit for Yang's current research, and he was excited enough to drop all other projects to start adapting his work.

The claim was striking: “Small models comprehensively surpass GPT‑5, with data carefully curated by humans.”

But reality hit hard.

- The official code contained absurd bugs.

- The Ground Truth (GT) error rate was estimated at ~30%.

---

Step‑by‑Step Escalation

1. Initial Adaptation

Yang pulled an all-nighter adapting his research to the benchmark, only to get extremely low scores.

> “I was extremely frustrated,” he later said.

2. Discovering the Bug

- The official code passed only the image's file path, as a plain string, to the vision-language model (VLM), never the image content itself.

- After fixing the bug, scores dropped even further.
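To make the bug concrete, here is a minimal Python sketch of the difference between sending a path string and sending the image. It assumes an OpenAI-style chat message format with a base64 data URL; the function names `buggy_message` and `fixed_message` are hypothetical and are not taken from Apple's (now deleted) repository.

```python
import base64
import os
import tempfile


def buggy_message(image_path: str, question: str) -> dict:
    # Bug pattern described above: the path is sent as text,
    # so the model never receives any pixels.
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "text", "text": image_path},
        ],
    }


def fixed_message(image_path: str, question: str, mime: str = "image/png") -> dict:
    # Read the actual file bytes and embed them as a base64 data URL
    # (assumed OpenAI-style "image_url" content part).
    with open(image_path, "rb") as f:
        encoded = base64.b64encode(f.read()).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file.
    with tempfile.NamedTemporaryFile(suffix=".png", delete=False) as tmp:
        tmp.write(b"\x89PNG\r\n\x1a\n")
        path = tmp.name
    try:
        print(buggy_message(path, "Which step is wrong?")["content"][1])
        print(fixed_message(path, "Which step is wrong?")["content"][1]["image_url"]["url"][:40])
    finally:
        os.remove(path)
```

In the buggy version, every "visual" question reaches the model as text plus a file name, so the model can only guess at what the image shows.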

3. Manual Review

He then manually checked 20 questions his model had answered incorrectly and found:

- 6 with clearly wrong GT.

- GT that was likely auto-generated by a model with poor quality control, leading to hallucinations.

- An estimated GT error rate of ~30% (6 of 20).
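The ~30% figure rests on a small sample (6 of 20). As a rough illustration of how uncertain such an estimate is, the sketch below computes a 95% Wilson score interval. It assumes, which the source does not state, that the 20 checked items behave like a simple random sample; since they were drawn only from wrong answers, the interval describes that subset rather than the whole benchmark.

```python
import math


def wilson_interval(errors: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    p_hat = errors / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - margin, center + margin


# 6 clear GT errors among 20 manually checked wrong answers
errors, checked = 6, 20
low, high = wilson_interval(errors, checked)
print(f"point estimate: {errors / checked:.2f}")  # 0.30
print(f"95% interval: {low:.3f} to {high:.3f}")   # 0.145 to 0.519
```

Under that assumption, the sample is consistent with an error rate anywhere from roughly 15% to 52%, so "~30%" is best read as an order-of-magnitude warning rather than a precise measurement.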

4. Reporting the Problem

Yang filed a GitHub issue. Six days later, the authors replied briefly and closed the issue without resolution.

---

Public Call‑Out and Reaction

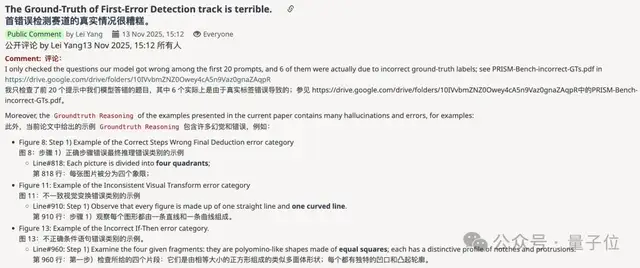

Frustrated, Yang posted a detailed public comment on OpenReview:

- Listed GT problems and misleading examples.

- Warned reviewers and researchers to avoid wasting time.

- Direct OpenReview link: https://openreview.net/forum?id=pS9jc2zxQz

> "...prevent interested researchers from repeating the cycle I experienced — initial excitement, shock, disappointment, and frustration — saving everyone time and effort."

The community response was supportive.

---

Paper Retraction

The day after his public comment:

- Authors retracted the paper.

- Deleted the GitHub repo.

Yang shared his experience across platforms, warning against blindly trusting even polished work from large companies.

---

Authors’ Official Response

On Xiaohongshu (RedNote), the authors stated:

Communication

- They had communicated with Yang in detail.

- Expressed respect for his work.

Data Quality

- Admitted that their review was inadequate.

- They had manually checked the samples with injected errors but did not audit other critical parts of the data.

- When GPT automatically converted the GT solutions into chain-of-thought (CoT) form, it hallucinated, which produced incorrect step labels.

Example Inference

- The inference code in the repo was a dummy demo sample, not the formal evaluation code.

- They updated it after Yang flagged the problem.

Issue Handling

- Regretted closing the GitHub issue prematurely.

- Promised to keep issues open until resolved.

> "We will seriously reflect on this experience and keep pushing ahead."

---

Lessons for the AI Community

This incident underscores:

- Rigorous dataset verification is critical.

- Open discussions help maintain research integrity.

- Even high‑profile corporate research can have serious flaws.

---

References

- https://x.com/diyerxx/status/1994042370376032701

- https://www.reddit.com/r/MachineLearning/comments/1p82cto/d_got_burned_by_an_apple_iclr_paper_it_was/

- https://www.xiaohongshu.com/explore/6928aaf8000000001b022d64?app_platform=ios&app_version=9.10&share_from_user_hidden=true&xsec_source=app_share&type=normal&xsec_token=CBLEH7cvuVDNN78gtS-RUB8YQp0_GXstBHlQAk14v6t8I=&author_share=1&xhsshare=WeixinSession&shareRedId=NzxHOEQ6OTw6Pjw3Sj81SD1HQUk5R0lK&apptime=1764289526&share_id=c73caa18d27a408898ea99622f8e0360

- https://openreview.net/forum?id=pS9jc2zxQz

- https://openreview.net/pdf/e5917f72a8373c7f56b3cb9c0ac881d991294ee2.pdf

---

Takeaway:

Clear credit attribution, compliance with submission guidelines, and strong community oversight are vital. Open platforms and transparent workflows can help keep AI research outputs accurate, ethical, and impactful.