# Large–Small Model Collaboration in Intelligent Investment Advisory

By combining **the precision and performance of traditional quantitative small models** with **the flexibility of large-model Agents**, the system works in four stages:

- **Problem identification**

- **Task expansion**

- **API calls to small models**

- **Answer fusion**

---

## Industry Context

The rise of large models has opened **new technical possibilities** in every domain that involves human interaction. In **investment advisory**, however, adoption has been cautious because of **hallucination risks** and **limited depth of domain reasoning**.

This article is based on a June presentation at **AICon 2025 Beijing** by a senior algorithm expert from **Beiyin Fintech**, titled *“Application of Large–Small Model Collaboration in Intelligent Investment Advisory”*.

The proposed architecture:

- **Extends general large models into industry-specific applications**

- Combines **precision small models** with **large-model Agents**

- Uses **problem identification → task expansion → small-model API calls → answer fusion**

- **Mitigates hallucination** and **improves depth** in responses

---

### AICon Beijing 2025

📅 **December 19–20**

🎯 Theme: **"Exploring the Boundaries of AI Applications"**

Focus areas:

- Enterprise-level Agent deployment

- Context engineering

- AI product innovation

Industry leaders will share **real-world experience** and **case studies** on how AI can enhance R&D and operations.

**Agenda:** [https://aicon.infoq.cn/202512/beijing/schedule](https://aicon.infoq.cn/202512/beijing/schedule)

---

## Historical Perspective on Intelligent Investment Advisory

After the **2008 subprime crisis**, human advisors faced scrutiny. This led to the emergence of **Wealthfront** and **Betterment**, shifting the landscape from **Wall Street to Silicon Valley**.

**Past characteristics:**

- Relied on rule-based small models (**Black-Litterman**, **mean–variance**, **risk parity**, etc.)

- Models were **opaque** and controlled by select institutions

- Advisors earned commissions while investors bore the risks

**Breakthrough:**

These startups **published their algorithms**, along with backtesting results, bringing transparency to the field.

---

### Development in China

Timeline:

- **2014**: Prototypes such as *Latte Zhituo* and *Koala Zhituo* appear, and *JD Zhituo* is designed

- **2017**: China Merchants Bank launches *Mocha Zhituo*, the first product to gain wide public exposure

- Despite these innovations, market traction remained limited, largely because of a *lack of interaction*

**Key insight:**

Investment advisory is “**three parts investing, seven parts advising**.”

Personalized, ongoing interaction was missing — something only **large models** can now enable.

---


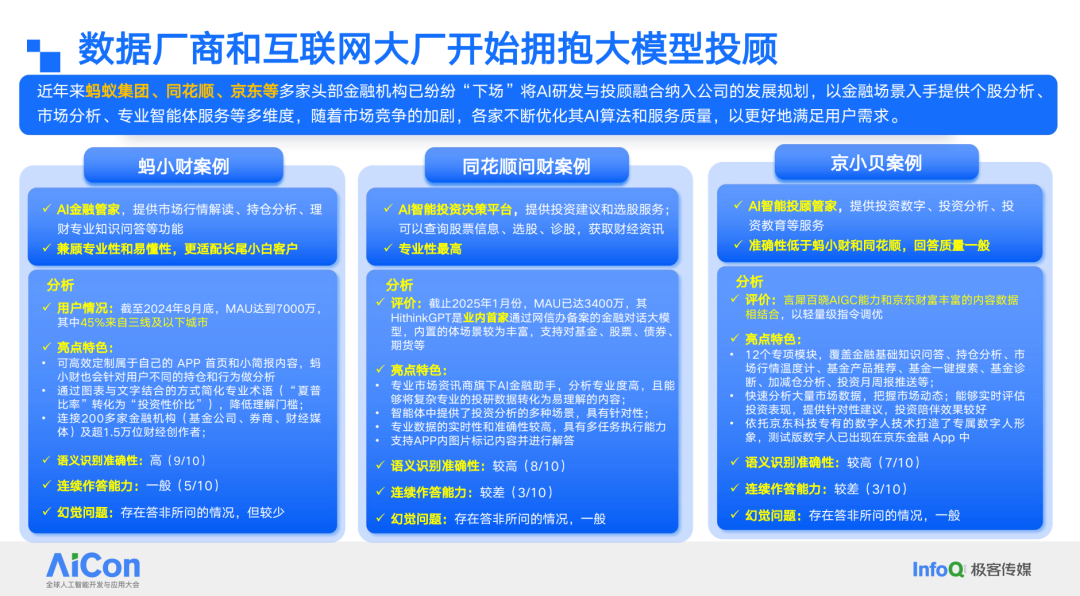

## Key Market Examples

- **Ma Xiao Cai (Ant Group)** — Focus on **compliance** and **knowledge boundaries**

- **Wen Cai (Tonghuashun)** — Integrates **data + large models**; excels at technical queries but is weaker at semantic understanding

- **Jing Xiao Bei (JD)** — Primarily an **enhanced customer service bot**

---

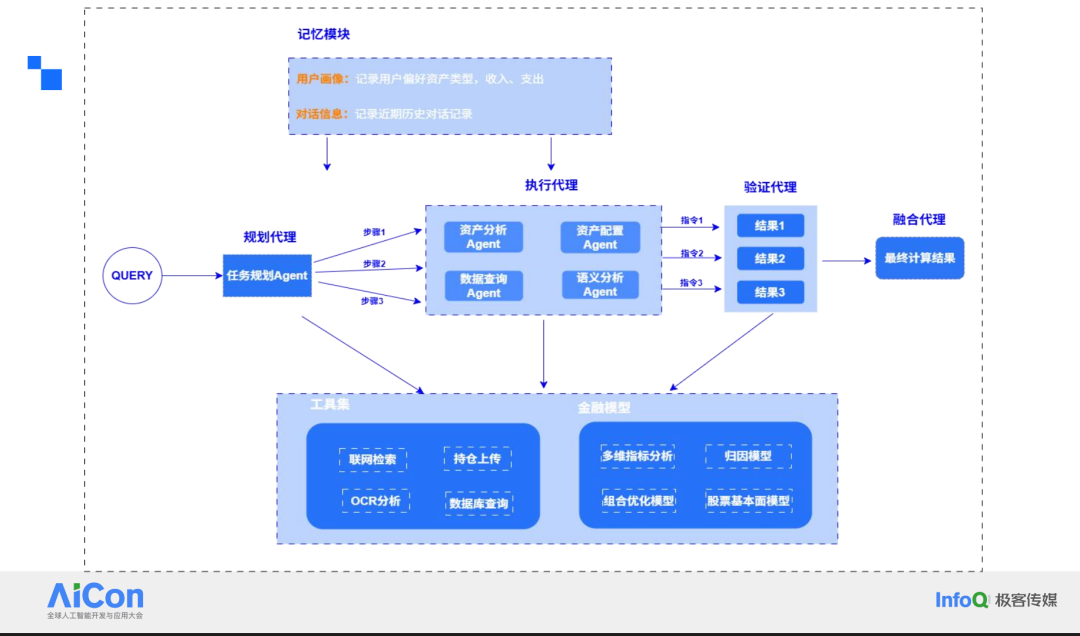

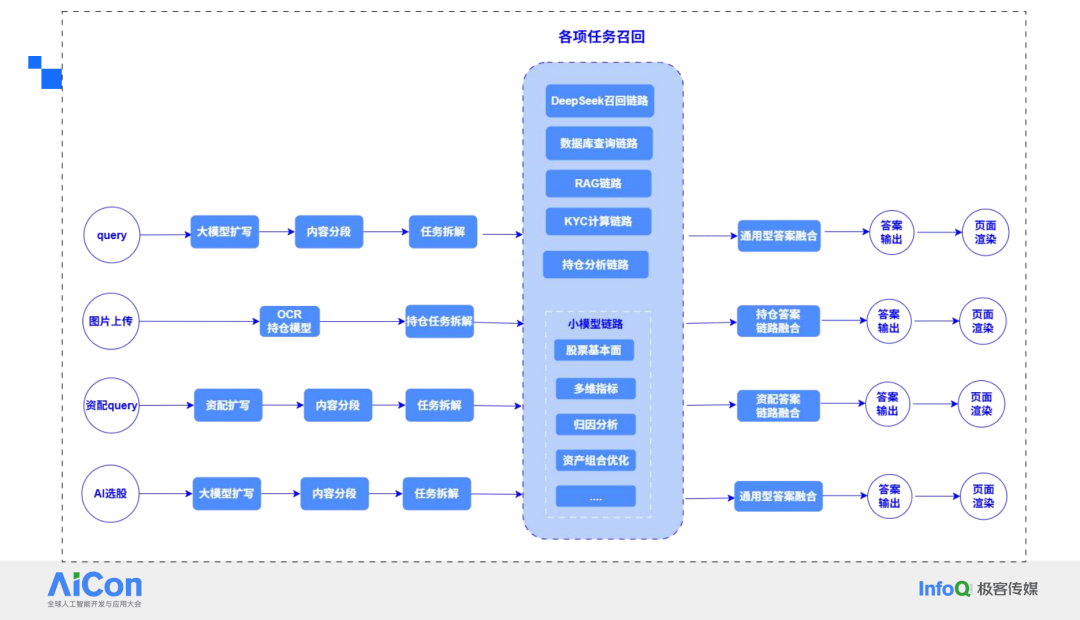

## Functional Architecture Design

**Design principle:**

**Large model = dialogue + planning**

**Small models = precise computation**

### Components:

1. **Data layer** — Connect *all* available data

2. **Large model (DeepSeek)** — Query rewriting, task expansion, and task planning

3. **Small model cluster** — Quantitative computations

4. **Answer synthesis** — Return results in user-friendly form
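
The four components can be wired together roughly as follows. This is a minimal sketch, assuming a keyword rule in place of the DeepSeek planner and a toy in-memory data layer; names such as `plan`, `mean_return`, `SMALL_MODELS`, and `FUND_A` are hypothetical and not taken from the presented system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# Hypothetical in-memory data layer; a real one would connect market and account data.
DATA_LAYER = {
    "fund_returns": {
        "FUND_A": [0.01, -0.02, 0.015],
        "FUND_B": [0.005, 0.004, 0.006],
    }
}


@dataclass
class Task:
    model: str  # name of the small model to invoke
    args: dict  # arguments extracted from the query


def plan(query: str) -> List[Task]:
    """Stand-in for the large-model planner (rewriting, expansion, planning).
    A keyword match replaces the LLM call purely for illustration."""
    return [
        Task("mean_return", {"fund": fund})
        for fund in DATA_LAYER["fund_returns"]
        if fund in query
    ]


def mean_return(fund: str) -> float:
    """Toy small model: a deterministic calculation over data-layer values."""
    series = DATA_LAYER["fund_returns"][fund]
    return sum(series) / len(series)


# Small-model cluster: model name -> callable.
SMALL_MODELS: Dict[str, Callable[..., float]] = {"mean_return": mean_return}


def synthesize(results: List[Tuple[Task, float]]) -> str:
    """Stand-in for answer synthesis; an LLM would phrase this conversationally."""
    if not results:
        return "No supported calculation was found for this question."
    return "\n".join(
        f"{task.args['fund']}: average period return {value:.2%}"
        for task, value in results
    )


def answer(query: str) -> str:
    tasks = plan(query)
    results = [(t, SMALL_MODELS[t.model](**t.args)) for t in tasks]
    return synthesize(results)


print(answer("How has FUND_B performed?"))
```

Keeping every number inside `SMALL_MODELS` means the language model only decides *which* calculation to run, never the value itself.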

**ToC vs. ToB difference:**

- **ToC**: Short, divergent queries

- **ToB**: Long, procedural queries with *zero hallucination tolerance*

---

### Categories of Small Models

1. **Basic Models** — Provide raw indicators

2. **Asset Recommendation Models** — Split by asset class with traceable rationale

3. **Attribution Models** — OCR-based portfolio aggregation and analysis

4. **Asset Allocation Models** — Real-time invocation of core allocation engines

5. **Categorical Prediction Models** — Predict trends for broad asset classes

Each has a **feature vector** (algorithm, target audience, input/output format, etc.) for **interpretability and reproducibility**.
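
One way to record such a descriptor is a small registry that a planner can query by category. The sketch below assumes a hypothetical `ModelCard` schema and registers one example allocation model, an inverse-volatility weighting (a naive form of risk parity that ignores correlations); neither the schema nor the algorithm choice is claimed to be the presenter's.

```python
import statistics
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ModelCard:
    """Hypothetical descriptor mirroring the per-model 'feature vector'."""
    name: str
    category: str        # e.g. "asset_allocation"
    algorithm: str
    audience: str
    input_format: str
    output_format: str
    fn: Callable = field(compare=False, repr=False)


REGISTRY: Dict[str, ModelCard] = {}


def register(card: ModelCard) -> None:
    REGISTRY[card.name] = card


def find(category: str) -> List[ModelCard]:
    """Let a planner discover candidate models by category."""
    return [card for card in REGISTRY.values() if card.category == category]


def inverse_volatility_weights(returns: Dict[str, List[float]]) -> Dict[str, float]:
    """Naive risk parity: weight each asset by 1 / volatility and normalize.
    Correlations are ignored; full risk parity would use the covariance matrix.
    Each series needs at least two observations for statistics.stdev."""
    inv_vol = {asset: 1.0 / statistics.stdev(series) for asset, series in returns.items()}
    total = sum(inv_vol.values())
    return {asset: w / total for asset, w in inv_vol.items()}


register(ModelCard(
    name="inv_vol_allocation",
    category="asset_allocation",
    algorithm="inverse volatility (naive risk parity)",
    audience="ToB advisors",
    input_format="{asset: [periodic returns]}",
    output_format="{asset: weight}, weights sum to 1",
    fn=inverse_volatility_weights,
))

card = find("asset_allocation")[0]
weights = card.fn({
    "bond": [0.010, 0.012, 0.008, 0.011],
    "equity": [0.05, -0.03, 0.04, -0.02],
})
print(card.algorithm, {k: round(v, 3) for k, v in weights.items()})
```

Because the descriptor travels with the callable, an answer can cite which algorithm produced each number, which supports the traceability goal above.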

---

### Embedding Intent Recognition

- When a query calls for a **deeper operation**, switch from chat to an **interactive card**

- The card triggers **API-based functional modules**

- Promotes **reuse of legacy systems** through AI orchestration
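
The routing step can be sketched as similarity matching against intent prototypes. This minimal version assumes word-count vectors as a stand-in for a real sentence-embedding model; the intent names, prototype phrases, threshold, and endpoint paths are all hypothetical.

```python
import math
from collections import Counter
from typing import Dict

# Hypothetical intent prototypes. Word counts stand in for a real
# sentence-embedding model so the sketch runs without dependencies.
INTENT_EXAMPLES = {
    "rebalance_portfolio": "rebalance adjust my portfolio allocation weights",
    "open_account": "open a new account sign up register",
    "chat": "what do you think about the market outlook today",
}

# Intents needing a deeper operation map to a card backed by an existing
# functional module's API (endpoint paths are made up).
CARD_ACTIONS = {
    "rebalance_portfolio": "/api/portfolio/rebalance",
    "open_account": "/api/account/open",
}


def vectorize(text: str) -> Counter:
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


PROTOTYPES = {intent: vectorize(text) for intent, text in INTENT_EXAMPLES.items()}


def route(utterance: str, threshold: float = 0.3) -> Dict[str, str]:
    """Return a card spec for operational intents, otherwise stay in chat."""
    vec = vectorize(utterance)
    intent, score = max(
        ((name, cosine(vec, proto)) for name, proto in PROTOTYPES.items()),
        key=lambda pair: pair[1],
    )
    if intent in CARD_ACTIONS and score >= threshold:
        return {"type": "card", "intent": intent, "action": CARD_ACTIONS[intent]}
    return {"type": "chat", "intent": "chat"}


print(route("please rebalance my portfolio"))
print(route("how do you see the market"))
```

The threshold keeps low-confidence matches in chat, so a legacy module is only invoked when the intent is reasonably clear.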

---

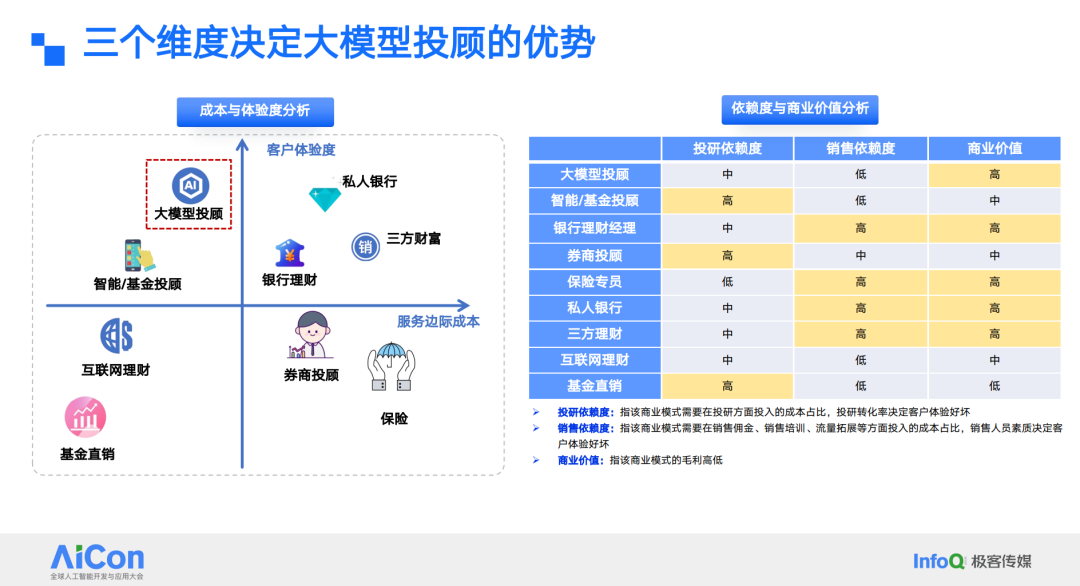

## Why Large–Small Collaboration?

**Three main drivers:**

1. **Hallucination control** — Accuracy must approach *100%* in ToB (business-facing) scenarios

2. **Professional depth** — Small models outperform large models in quantitative calculations

3. **Lower cost & latency** — Compute time drops from **40–50 s to under 20 s**

---

## Engineering Implementation

**Two-step pipeline:**

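1. **Colloquial → structured intent**: DeepSeek chain-of-thought reasoning converts colloquial queries into structured intent

2. **Agent-based task planning**:

   - Factual content → large model

   - Numerical calculations → small models

   - Missing information → RAG (retrieval-augmented generation)

   - Visualizations → templates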
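
The two steps can be sketched as "validate the model's structured output, then dispatch by task kind". This assumes the LLM is prompted to emit JSON with hypothetical `goal`, `entities`, and `needs` fields; the handlers are stubs standing in for the large model, small-model APIs, a RAG retriever, and a chart template engine.

```python
import json
from dataclasses import dataclass
from typing import Callable, Dict, List

TASK_KINDS = ("fact", "number", "missing_info", "visual")


@dataclass
class Intent:
    goal: str
    entities: List[str]
    needs: List[str]  # subset of TASK_KINDS


def parse_intent(llm_output: str) -> Intent:
    """Step 1: validate the structured intent the LLM is prompted to emit as JSON.
    The schema (goal / entities / needs) is a hypothetical example."""
    data = json.loads(llm_output)
    needs = [n for n in data.get("needs", []) if n in TASK_KINDS]
    if not needs:
        raise ValueError("no recognized task kinds in intent")
    return Intent(goal=str(data["goal"]), entities=list(data.get("entities", [])), needs=needs)


# Step 2: hypothetical handlers, one per routing rule.
def ask_large_model(intent: Intent) -> str:
    return f"LLM explains: {intent.goal}"


def call_small_model(intent: Intent) -> str:
    return "small model computes: " + ", ".join(intent.entities)


def retrieve(intent: Intent) -> str:
    return f"RAG retrieves context for: {intent.goal}"


def render_template(intent: Intent) -> str:
    return "chart template for: " + ", ".join(intent.entities)


ROUTES: Dict[str, Callable[[Intent], str]] = {
    "fact": ask_large_model,
    "number": call_small_model,
    "missing_info": retrieve,
    "visual": render_template,
}


def plan_and_run(llm_output: str) -> Dict[str, str]:
    intent = parse_intent(llm_output)
    return {need: ROUTES[need](intent) for need in intent.needs}


raw = ('{"goal": "compare fund volatility", "entities": ["FUND_A", "FUND_B"], '
       '"needs": ["number", "visual"]}')
print(plan_and_run(raw))
```

Rejecting unknown task kinds at parse time keeps a malformed or hallucinated plan from reaching any downstream system.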

---

## Agent Flow Orchestration

- Use **different prompt strategies** for different expansion needs

- Match each step to a **model of appropriate size** (even 0.5B-parameter models work for narrow tasks)

- Integrate small models as **API services behind Agents**

- Add an **expert-fusion stage** to refine final outputs
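
One possible guard inside a fusion stage is a numeric-consistency check: the large model may phrase the answer, but every figure it states must come from a small model. This is a hypothetical technique illustrated under two assumptions: small-model results are already in display units (percentage points here), and any other number in the draft, such as a year, should also trigger the fallback.

```python
import re
from typing import Dict

NUMBER = re.compile(r"-?\d+(?:\.\d+)?")


def fuse(draft: str, results: Dict[str, float], precision: int = 2) -> str:
    """Hypothetical fusion guard: accept the large model's draft only if every
    number in it matches a small-model result after rounding; otherwise fall
    back to a plain answer built solely from the small-model figures."""
    allowed = {round(v, precision) for v in results.values()}
    found = {round(float(tok), precision) for tok in NUMBER.findall(draft)}
    if found <= allowed:
        return draft
    return "; ".join(f"{name}: {value:.{precision}f}" for name, value in results.items())


results = {"annualized_volatility_pct": 12.5, "max_drawdown_pct": -8.3}
print(fuse("Volatility is 12.5% and the maximum drawdown is -8.3%.", results))
print(fuse("Volatility is 15.2%, which is low.", results))
```

The check is deliberately strict; a production system would likely need to whitelist harmless numbers (dates, counts) explicitly.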

---

## Handling Queries: SQL vs API

- **Text-to-SQL** is currently used for indicator queries

- In **well-defined scenarios**, SQL yields precise results

- An **API-based** approach is under consideration for greater flexibility
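
The trade-off can be illustrated with Python's built-in `sqlite3`. In the Text-to-SQL path the model writes the query, so it needs a guard; in the API path the model only fills parameters of a fixed, parameterized query. The table, columns, and values below are hypothetical, and the regex check is a simple illustration rather than a complete SQL validator.

```python
import re
import sqlite3
from typing import List, Optional

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE fund_indicators (fund TEXT, indicator TEXT, value REAL)")
conn.executemany(
    "INSERT INTO fund_indicators VALUES (?, ?, ?)",
    [("FUND_A", "sharpe", 1.2), ("FUND_A", "volatility", 0.15), ("FUND_B", "sharpe", 0.8)],
)

ALLOWED_TABLES = {"fund_indicators"}
TABLE_REF = re.compile(r"\b(?:from|join)\s+(\w+)", re.IGNORECASE)


def run_generated_sql(sql: str) -> List[tuple]:
    """Text-to-SQL path: run model-generated SQL only if it is a single SELECT
    over whitelisted tables. A regex guard, not a full SQL parser."""
    stmt = sql.strip().rstrip(";")
    if ";" in stmt or not stmt.lower().startswith("select"):
        raise ValueError("only a single SELECT statement is allowed")
    tables = {t.lower() for t in TABLE_REF.findall(stmt)}
    if not tables or not tables <= ALLOWED_TABLES:
        raise ValueError("query references tables outside the whitelist")
    return conn.execute(stmt).fetchall()


def get_indicator(fund: str, indicator: str) -> Optional[float]:
    """API path: the model only supplies parameters; the SQL is fixed and parameterized."""
    row = conn.execute(
        "SELECT value FROM fund_indicators WHERE fund = ? AND indicator = ?",
        (fund, indicator),
    ).fetchone()
    return row[0] if row else None


print(run_generated_sql(
    "SELECT fund, value FROM fund_indicators WHERE indicator = 'sharpe' ORDER BY value DESC"
))
print(get_indicator("FUND_A", "volatility"))
```

The SQL path answers arbitrary well-formed questions over known tables; the API path answers fewer question shapes but cannot be steered into unexpected queries.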

---

## The Value of Collaboration

Advantages over traditional systems:

- **Higher interactivity**

- **Better user experience**

- **Lower labor costs**, despite higher fixed costs

---

### "Depth-to-Compute" Ratio

Concept: As with **autonomous driving**, getting from 90% to 99% performance requires exponentially more compute.

Solution: Use **small models for deep, narrow tasks** instead of embedding all domain knowledge into a large model.

---

## AI in ToB Systems

- Integrated a **chatbot interface** that connects multiple systems

- Two-stage interaction: persona-based advisory first, then an execution flow

- Supports embedding into existing pages and features
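
The two-stage interaction can be modeled as a small state machine. This is a minimal sketch, assuming hypothetical profile fields (`risk_level`, `horizon_years`) and a stubbed hand-off to the execution module; requiring explicit confirmation before leaving the advisory stage is an illustrative design choice, not the presenter's documented flow.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Dict, List, Tuple

REQUIRED_PROFILE = ("risk_level", "horizon_years")  # hypothetical persona fields


class Stage(Enum):
    ADVISORY = auto()   # persona-based conversation collects the client profile
    EXECUTION = auto()  # fixed flow that hands off to an existing system
    DONE = auto()


@dataclass
class Session:
    stage: Stage = Stage.ADVISORY
    profile: Dict[str, object] = field(default_factory=dict)
    log: List[Tuple[str, Dict[str, object]]] = field(default_factory=list)


def handle(session: Session, event: Dict[str, object]) -> str:
    if session.stage is Stage.ADVISORY:
        session.profile.update(event.get("profile", {}))
        missing = [k for k in REQUIRED_PROFILE if k not in session.profile]
        if missing:
            return f"Advisor: could you tell me your {missing[0]}?"
        session.stage = Stage.EXECUTION
        return "Advisor: profile complete. Proceed? (confirm/cancel)"
    if session.stage is Stage.EXECUTION:
        if event.get("confirm") is True:
            # Stub: a real system would call the existing execution module here.
            session.log.append(("execute", dict(session.profile)))
            session.stage = Stage.DONE
            return "System: request passed to the execution module."
        if event.get("confirm") is False:
            session.stage = Stage.ADVISORY
            return "Advisor: cancelled. What would you like to change?"
        return "System: please confirm or cancel."
    return "System: session finished."


s = Session()
print(handle(s, {"profile": {"risk_level": "moderate"}}))
print(handle(s, {"profile": {"horizon_years": 5}}))
print(handle(s, {"confirm": True}))
```

Separating the free-form advisory stage from a fixed execution stage keeps the conversational model out of the step that actually changes accounts.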

---


## Final Note

The **large–small model hybrid** is key for:

- **Mission-critical sectors** like finance

- **Compute efficiency**

- **Domain-specific depth**


[Open in WeChat](https://wechat2rss.bestblogs.dev/link-proxy/?k=4c3ae5d1&r=1&u=https%3A%2F%2Fmp.weixin.qq.com%2Fs%3F__biz%3DMjM5MDE0Mjc4MA%3D%3D%26mid%3D2651262812%26idx%3D2%26sn%3D12801567c35d3adadc23746706f9966b)