Behind an AI Output Rate of 70%+: How the AutoNavi Team Quantifies and Optimizes AI Development Efficiency

Alibaba Assistant's Preface

This guide explains, step-by-step, how to design a scientific and implementable set of quantitative R&D efficiency metrics in the era of AI-assisted programming.

---

1. Introduction

With AI advancing rapidly, AI-assisted programming has moved from simple code autocompletion to full-fledged AI IDEs like Cursor or Qoder, boosting efficiency dramatically.

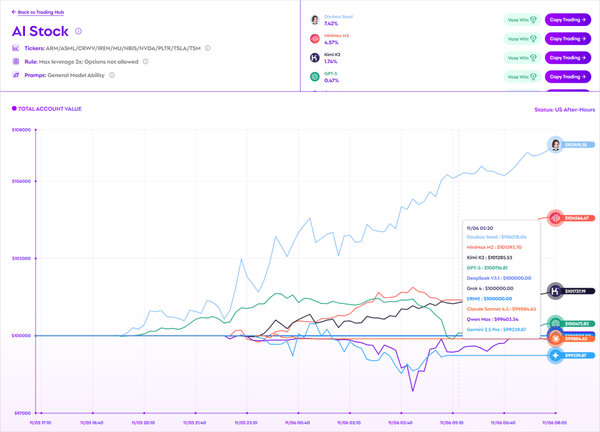

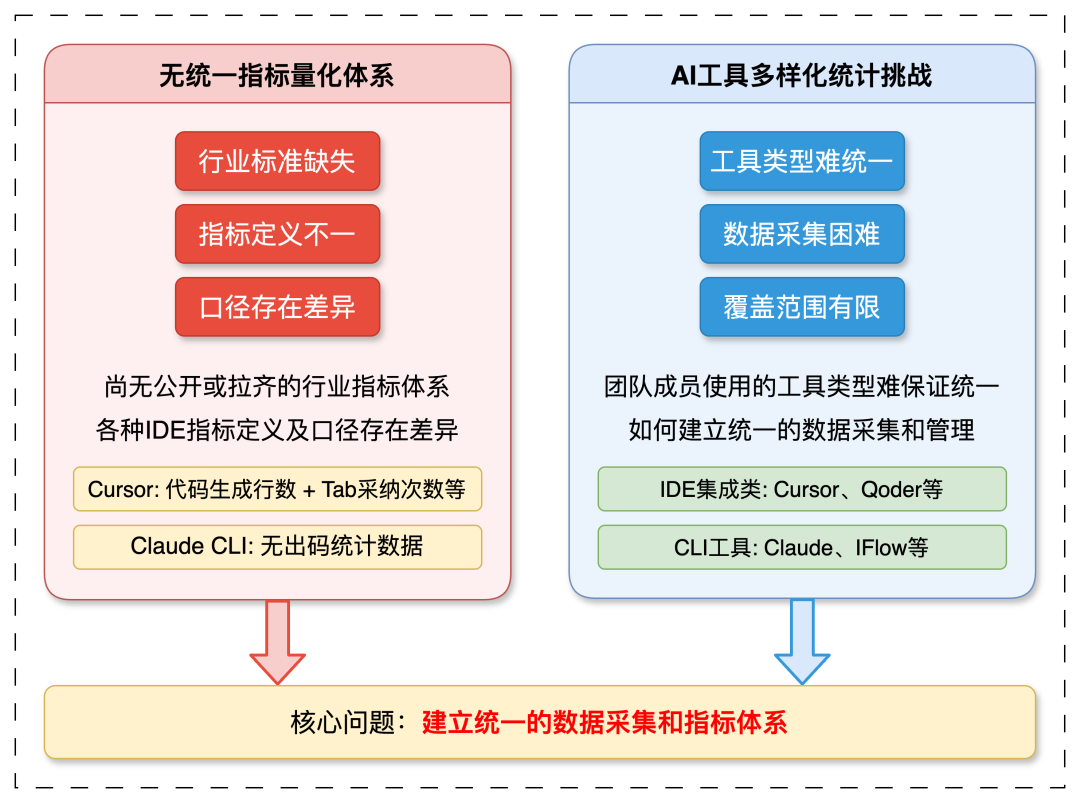

However, while many companies claim productivity gains, few can measure them accurately. Challenges include:

- No unified metric system

- Data collection difficulties due to varied AI tools

Over several months of R&D, we built a unified AI data collection capability from scratch, defined key metrics such as the AI Code Adoption Rate, and iteratively built an AI efficiency metrics framework that closes the loop: measurement → problem identification → optimization.

---

2. Metric Definitions

Principle: Authenticity First

We only measure code actually submitted to Git, ensuring quality and compliance.

By analyzing Git commits and matching AI-generated code, we can quantify AI's impact on productivity.

---

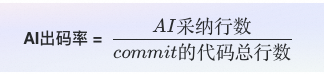

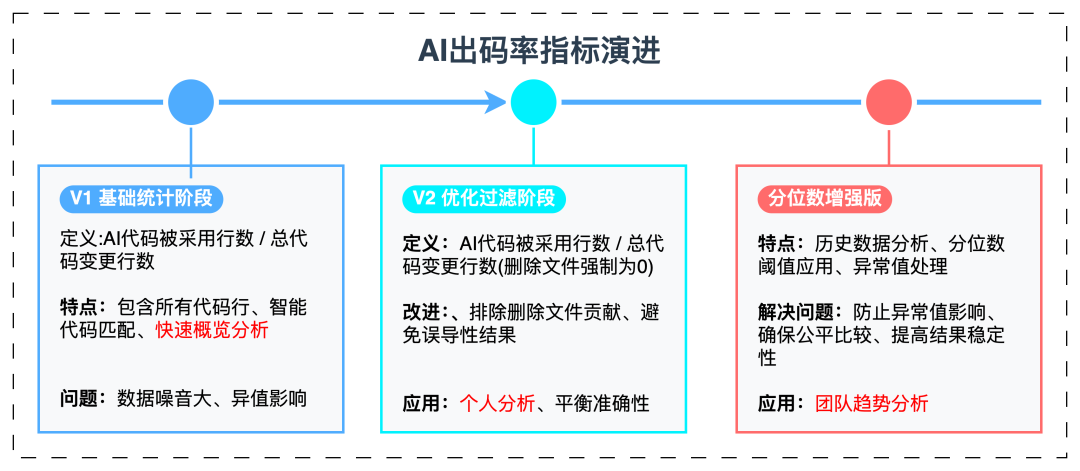

2.1 Core Metric — AI Code Adoption Rate

Definition:

- AI-adopted lines: AI-generated lines that match committed lines in a line-by-line comparison

- Total commit lines: All lines committed within the time window
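Read as a formula, the two quantities above presumably combine into a simple ratio. The symbols $N_{\text{AI-adopted}}$ and $N_{\text{total}}$ are our own notation for the two bullet items, not names used by the team:

```latex
\[
\text{AI Code Adoption Rate}
  = \frac{N_{\text{AI-adopted}}}{N_{\text{total}}} \times 100\%
\]
```

As a purely hypothetical illustration, if 1,000 lines were committed in the time window and 700 of them matched AI-generated lines, the rate would be 700 / 1,000 = 70%.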

---

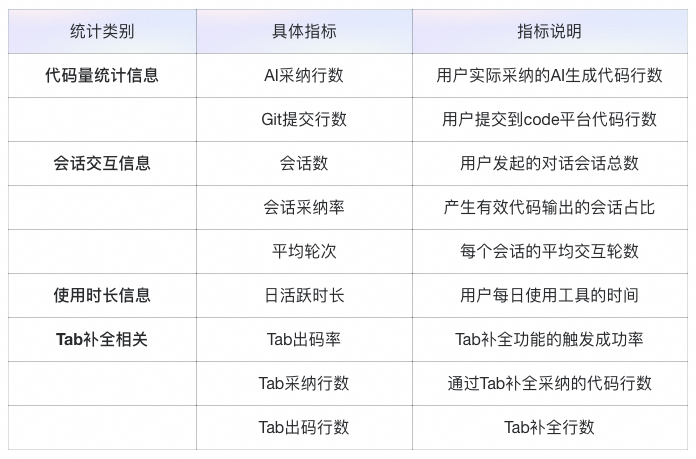

2.2 Additional Metrics

Supporting metrics for deeper insights:

- Code volume statistics — Tracks amount of code produced

- Conversation metrics — e.g. prompts, acceptance rate, dialogue rounds

- Usage duration stats — Tool stickiness measurement

- Tab completion analysis — Share of Tab-based code in adoption rate

---

3. Metric Results

We applied metrics to the Amap (Gaode) Information Engineering and frontend teams using Cursor & Qoder.

For example, the August statistics showed the adoption rate rising from 30% to over 70% within three months.

Optimization included:

- Targeting non-ideal AI scenarios

- Adding rules & MCP tooling for document query

- Improving applicability & efficiency

---

Closing Insights

Building authentic, unified, actionable metrics guides both internal tooling and process optimization.

---

Best practices:

- Continuously refine usage strategies — Use metric feedback to compare tools & approaches

- Accumulate usage models — Share methods, host competitions, leverage top performers’ practices

---

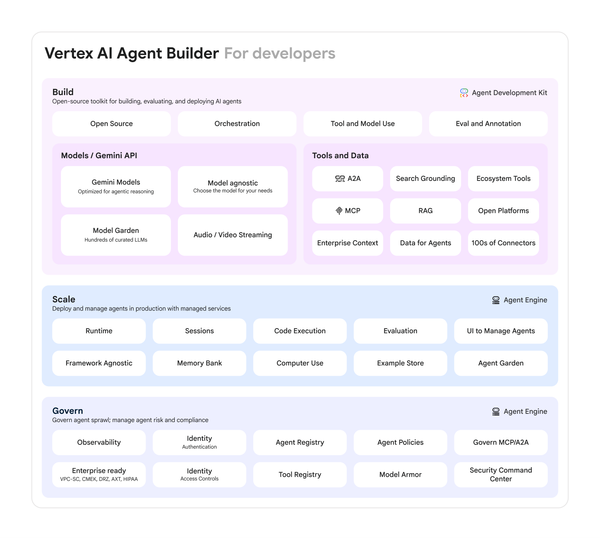

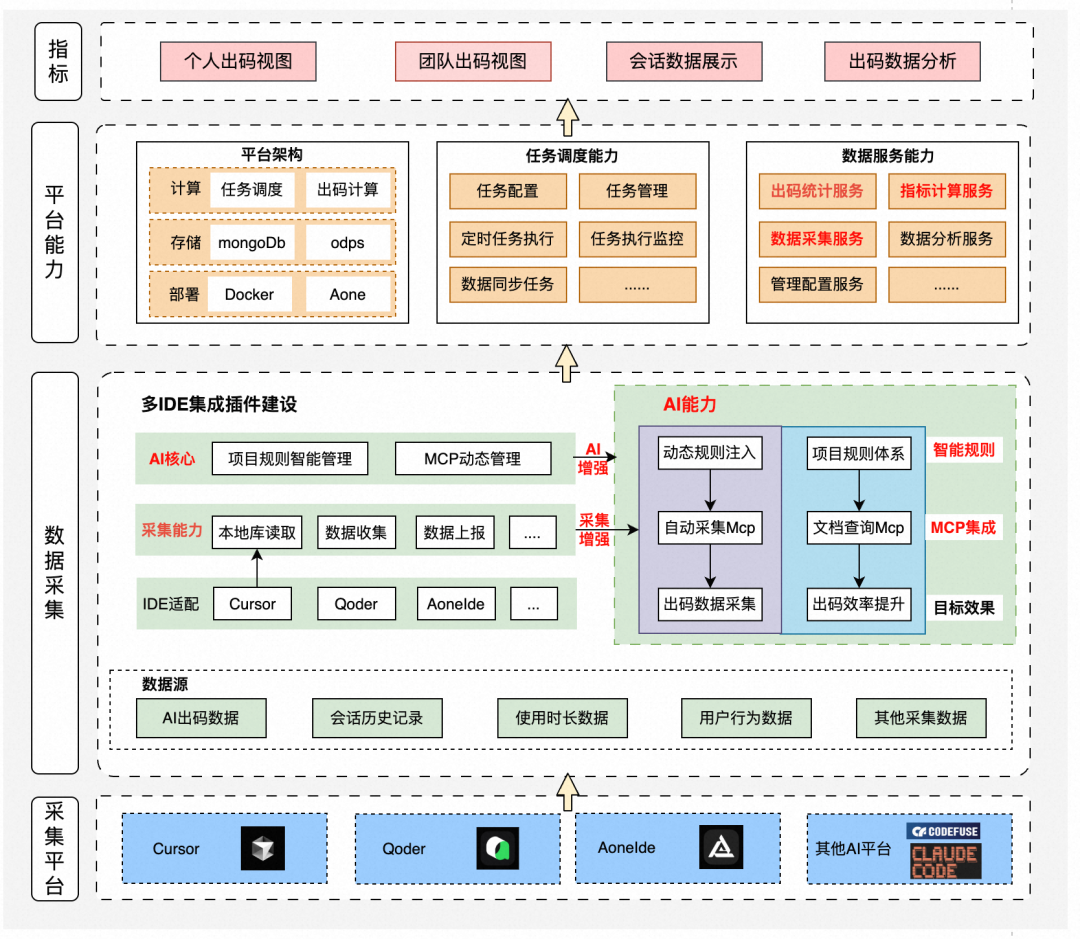

4. Solution Overview

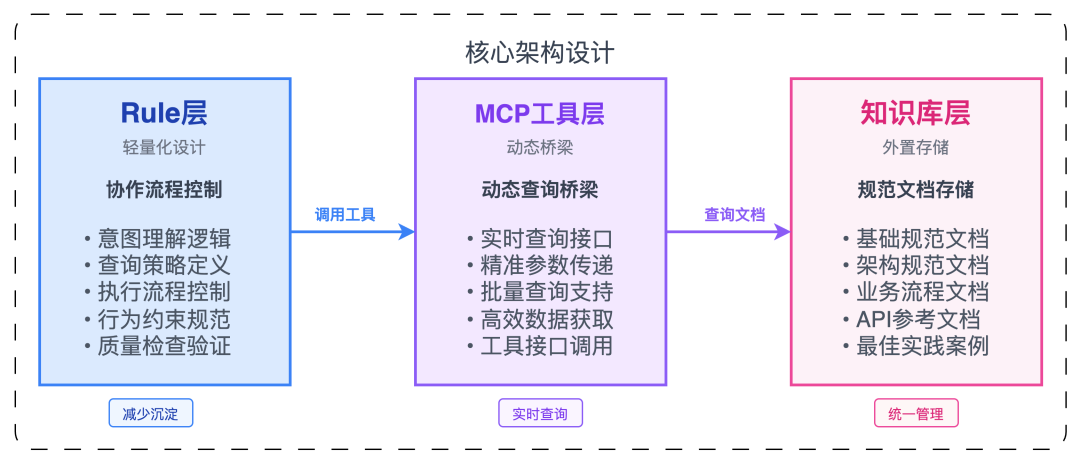

We use a layered architecture for indicator evaluation:

Main modules:

- Multi-IDE plugins (Cursor, Qoder, etc.)

  - Data collection: Tab completions, AI session logs, generated code

  - Local adaptation: IDE-specific pipelines

- AI rule & MCP capabilities

  - Dynamic project rules

  - MCP injection for doc retrieval & code data

- Platform components

  - Storage, task scheduling, calc engine

  - Indicator dashboards (team & personal)

---

5. Practice Process

Core capability: Unified data collection + metrics framework

---

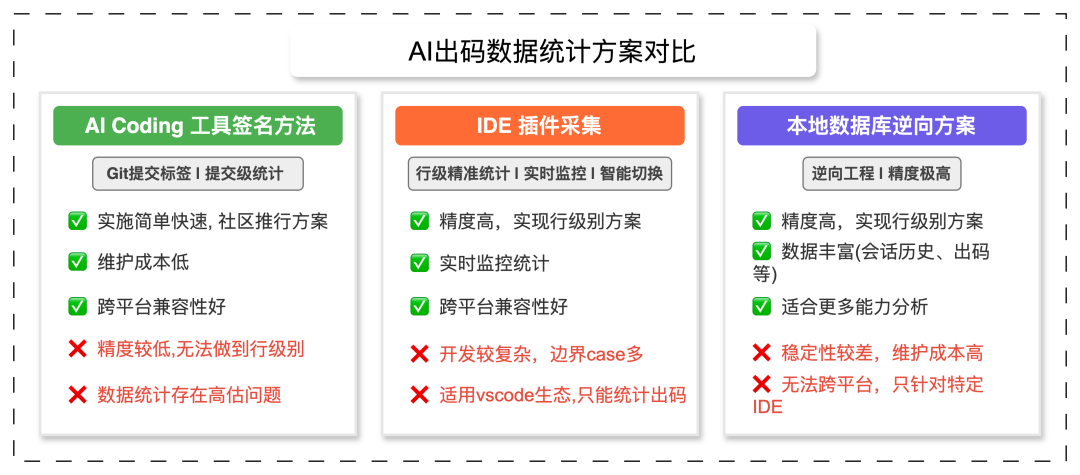

5.1 AI Code Generation Data Collection Analysis

Three mainstream approaches:

- Git commit signatures — Mark AI commits at commit level

- IDE plugin tracking — Line-level tracing via blame & Diff

- Local DB reverse-engineering — Read AI editor DB for structured session data

---

Our Method:

We moved from local DB reverse-engineering to standardized collection over the MCP protocol.

---

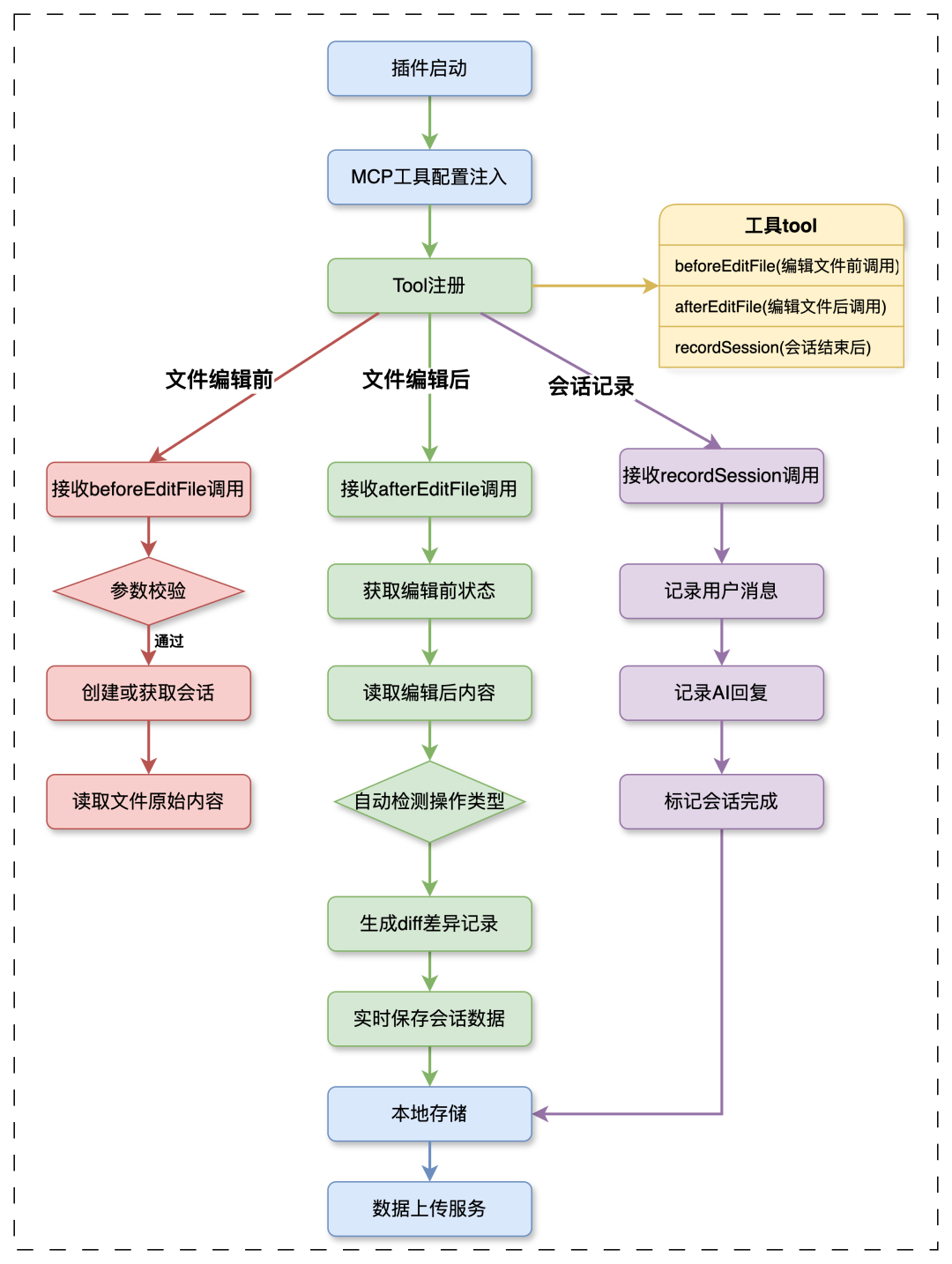

MCP Standardized Collection Advantages

- Works across IDEs & CLI tools

- Future-proof & compatible

- Lower engineering complexity

Workflow:

- Prompt forces MCP execution

- MCP records before/after file edits

- Diff analysis calculates AI-generated lines
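The last two workflow steps can be sketched roughly as follows. This is a minimal illustration, not the team's implementation: `EditRecorder`, its method names, and the in-memory storage are hypothetical, and Python's standard `difflib` stands in for whatever diff engine the real MCP server uses.

```python
from __future__ import annotations

import difflib


def added_lines(before: str, after: str) -> list[str]:
    """Return the lines that a line-level diff marks as added in `after`."""
    diff = difflib.ndiff(before.splitlines(), after.splitlines())
    # ndiff prefixes added lines with "+ "; changed lines show up as a
    # removed line plus an added line, so edits are counted as well.
    return [entry[2:] for entry in diff if entry.startswith("+ ")]


class EditRecorder:
    """Hypothetical stand-in for the MCP-side before/after recording."""

    def __init__(self) -> None:
        self._before: dict[str, str] = {}          # path -> content before the edit
        self.ai_lines: list[tuple[str, str]] = []  # (path, AI-generated line)

    def before_edit_file(self, path: str, content: str) -> None:
        # Snapshot the file right before the AI edits it.
        self._before[path] = content

    def after_edit_file(self, path: str, content: str) -> int:
        # A missing snapshot is treated as a newly created file.
        before = self._before.pop(path, "")
        new_lines = added_lines(before, content)
        self.ai_lines.extend((path, line) for line in new_lines)
        return len(new_lines)


recorder = EditRecorder()
recorder.before_edit_file("/repo/app.py", "a = 1\n")
count = recorder.after_edit_file("/repo/app.py", "a = 1\nb = 2\n")
print(count, recorder.ai_lines)  # 1 [('/repo/app.py', 'b = 2')]
```

Keeping the snapshot keyed by path means each file edit is diffed only against its own pre-edit state, so lines the developer typed earlier are not attributed to the AI.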

Drawbacks:

- Dependent on prompt usage quality

- Collection is visible to the user rather than silent

---

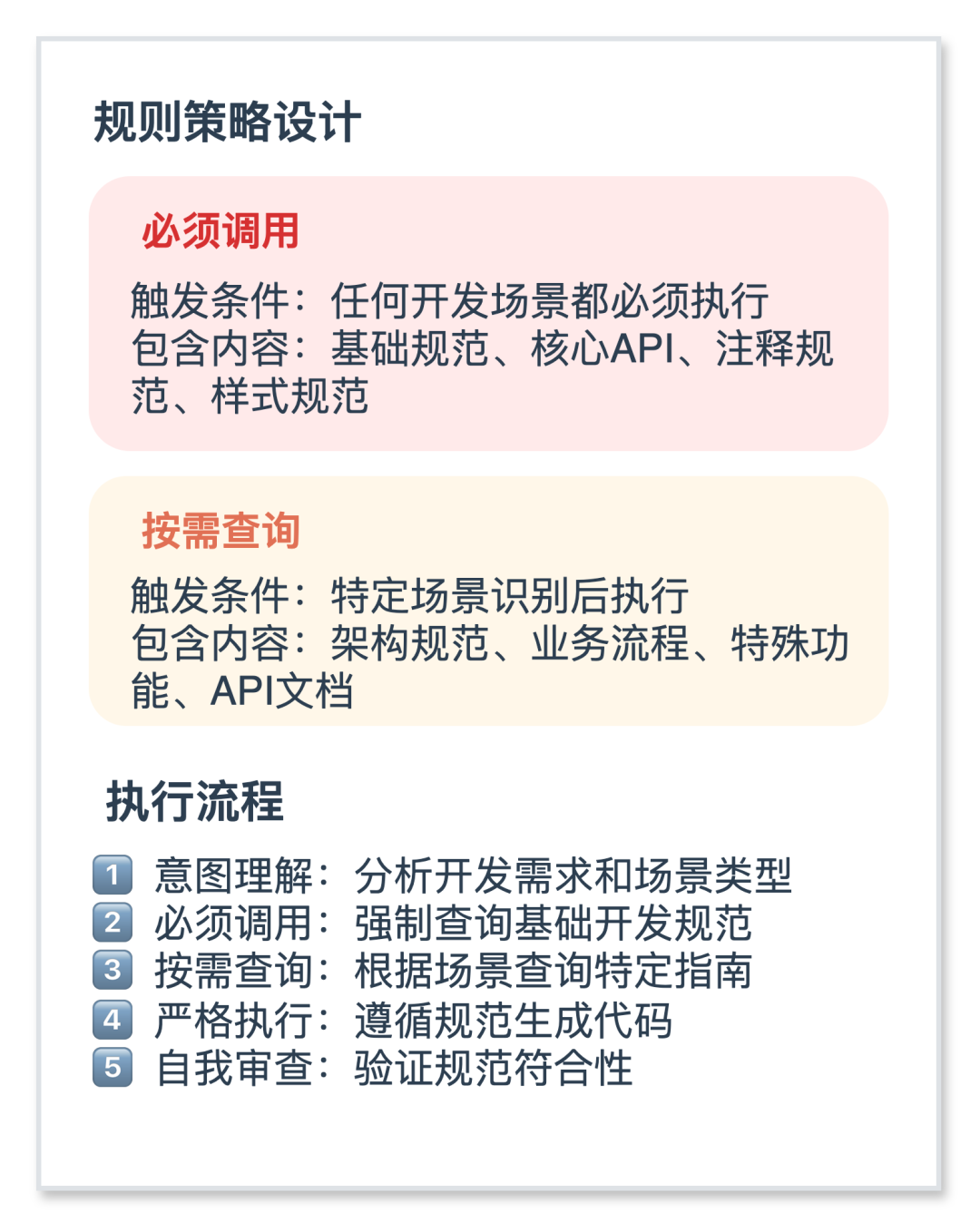

5.2 Prompt Design & Optimization

We split rules into:

- Business rules — modular, knowledge-base driven

- Intelligent collection rules — targeted prompt injection for clean MCP execution

Optimized business rule flow:

---

MCP Automatic Collection Policies

Core Principles

- Only changed files recorded

- Trigger before/after operations like `create_file`, `edit_file`, `delete_file`

Execution Flow

Pure chat:

Conversation ends → recordSession

File changes:

beforeEditFile → [op] → afterEditFile → recordSession

Mandatory Requirements

- 100% coverage

- Strict before/after pairing

- Absolute paths

Violation Handling

Immediate detection, correction, re-run
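One way to picture the detection step is a small checker that replays a session's recorded calls and flags pairing and path violations. The sketch below is an assumption on our part: the event tuples, `validate_session`, and the POSIX-style path check are hypothetical, and only the names `beforeEditFile`, `afterEditFile`, and `recordSession` come from the flow above.

```python
from __future__ import annotations

from pathlib import PurePosixPath


def validate_session(events: list[tuple[str, str | None]]) -> list[str]:
    """Replay (event_name, path) tuples in order and return any violations."""
    violations: list[str] = []
    pending: str | None = None  # path whose beforeEditFile still awaits afterEditFile

    for name, path in events:
        if name == "beforeEditFile":
            if pending is not None:
                violations.append(f"beforeEditFile for {pending} was never closed")
            # Assumption: paths are POSIX-style; adapt the check for Windows.
            if path is None or not PurePosixPath(path).is_absolute():
                violations.append(f"path is not absolute: {path}")
            pending = path
        elif name == "afterEditFile":
            if pending != path:
                violations.append(f"afterEditFile without matching beforeEditFile: {path}")
            pending = None
        elif name == "recordSession":
            if pending is not None:
                violations.append(f"recordSession before afterEditFile for {pending}")
                pending = None

    if pending is not None:
        violations.append(f"beforeEditFile for {pending} was never closed")
    if not events or events[-1][0] != "recordSession":
        violations.append("session does not end with recordSession")
    return violations


ok = [
    ("beforeEditFile", "/repo/a.py"),
    ("afterEditFile", "/repo/a.py"),
    ("recordSession", None),
]
print(validate_session(ok))  # []
```

A checker like this covers strict pairing and absolute paths. The 100% coverage requirement cannot be verified from the event log alone, because an edit that never triggered the MCP call leaves no trace to check.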

---

5.2.3 Results

- Cursor: data collected via local DB reverse-engineering

- Claude Code and Qoder: data collected via MCP triggering rules

---

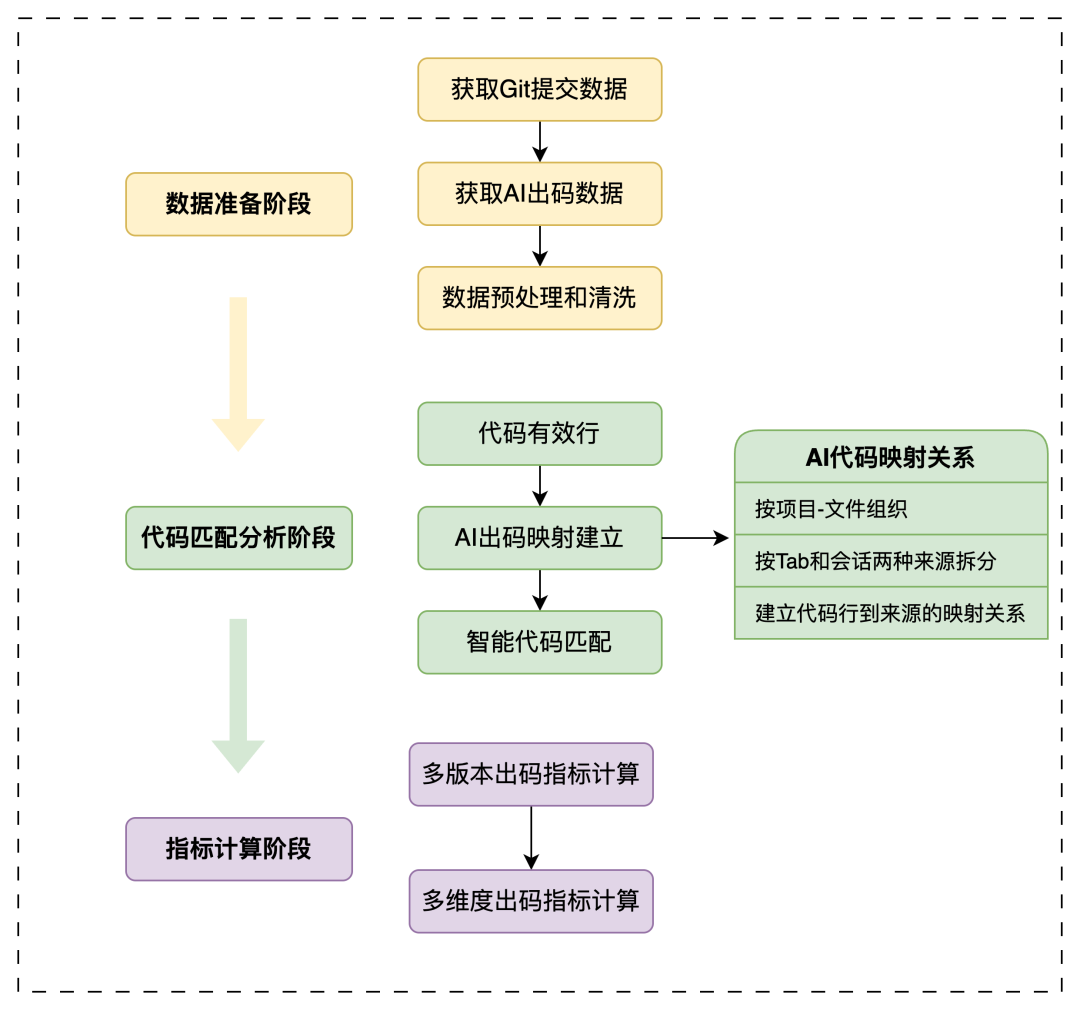

5.3 Metrics Calculation

Stages:

- Prepare data — Collect Git & AI code sets

- Match code lines

- Calculate metrics (V1 basic, V2 filtered, Quantile-enhanced)
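The matching and calculation stages might look roughly like the sketch below. Several details are assumptions, since the article does not spell them out: lines are compared after stripping whitespace, each AI line can match at most one committed line, and the "V2 filtered" variant is interpreted as skipping blank lines and lone brackets. The quantile-enhanced variant is not sketched because its definition is not given. All function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter

# Assumption: lines this trivial say little about who wrote them.
TRIVIAL = {"", "{", "}", "(", ")", "[", "]", "};", ");"}


def count_matches(
    commit_lines: list[str], ai_lines: list[str], skip_trivial: bool = False
) -> tuple[int, int]:
    """Return (matched, total) for committed lines matched against AI lines."""
    commit = [line.strip() for line in commit_lines]
    ai = [line.strip() for line in ai_lines]
    if skip_trivial:  # "V2 filtered" as interpreted here
        commit = [line for line in commit if line not in TRIVIAL]
        ai = [line for line in ai if line not in TRIVIAL]

    remaining = Counter(ai)  # multiset: one AI line matches one commit line
    matched = 0
    for line in commit:
        if remaining[line] > 0:
            remaining[line] -= 1
            matched += 1
    return matched, len(commit)


def adoption_rate(matched: int, total: int) -> float:
    """Fraction of committed lines that matched AI lines (0.0 if no lines)."""
    return matched / total if total else 0.0


commit = ["function f() {", "  return 1;", "}", "", "const x = 3;"]
ai = ["function f() {", "  return 1;", "}"]

v1 = count_matches(commit, ai)                     # (3, 5)
v2 = count_matches(commit, ai, skip_trivial=True)  # (2, 3)
print(adoption_rate(*v1), round(adoption_rate(*v2), 3))  # 0.6 0.667
```

The multiset match keeps a single AI-generated `}` from being credited against every closing brace in the commit, which is one reason a filtered variant can give a more honest figure than the basic one.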

---

6. Summary

Different AI tools have unique workflows, but measuring AI code generation rate lets teams:

- Quantify adoption

- Guide shifts from passive AI use → active AI-driven coding

We moved from manual coding to AI-assisted development in under 3 months — boosting efficiency and AI fluency.

---
