# UniWorld-V2: Next-Level Precision Image Editing with Reinforcement Learning

Meet **UniWorld-V2**, a **new model** that handles **fine-detail image editing** better than Google’s Nano Banana and shows **stronger understanding of Chinese**.

---

## 📌 Quick Comparison Example

**Task:**

“Change the hand gesture of the girl in the middle wearing a white shirt and mask to an OK sign.”

**Original image:**

**UniWorld-V2 result (instruction executed correctly):**

**Nano Banana result (instruction misinterpreted):**

---

## 🏗 Technical Background

UniWorld-V2 comes from the **UniWorld team** at **Rabbitpre AI and Peking University** and is built on a **new post-training framework** called **UniWorld-R1**.

**Key innovation:**

- The **first application of RL policy optimization** to image editing with a unified architecture, which the team presents as the **first visual reinforcement learning framework** of its kind.

- On top of this framework the team released UniWorld-V2, which achieves **state-of-the-art performance**.

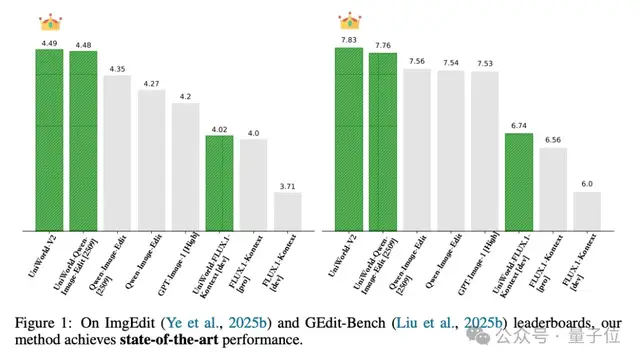

Benchmarks like **GEdit-Bench** and **ImgEdit** show UniWorld-V2 outperforming even **OpenAI’s GPT-Image-1**.

---

## 🌏 Real-World Capabilities Beyond Supervised Fine-Tuning (SFT)

### **1. Chinese Font Mastery**

- Precise interpretation of instructions.

- Renders **complex artistic Chinese fonts**, such as “月满中秋” (“full moon at Mid-Autumn”) and “月圆人圆事事圆” (“full moon, family reunited, everything complete”), with **clear strokes** and **accurate meaning**.

Examples:

Modify any character via prompt:

---

### **2. Fine-Grained Spatial Control**

- Handles “red-box control” tasks, in which the region to edit is marked with a red box drawn on the input image.

- Executes edits **only within the marked region**, even for difficult instructions such as “move the bird out of the red box.”
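UniWorld-V2 interprets the red box itself; the sketch below only illustrates, under our own assumptions, how one might locate a drawn red box and check whether an edit leaked outside it. The function names (`find_red_box`, `outside_change_ratio`), the color thresholds, and the assumption that the box is the only saturated-red content in the image are all hypothetical, not part of UniWorld-V2.

```python
import numpy as np


def find_red_box(img, r_min=200, gb_max=60):
    """Return (top, left, bottom, right) of saturated-red pixels, or None.

    Assumption: the box is drawn in pure red on an RGB uint8 image and
    nothing else in the image is that red.
    """
    r = img[..., 0].astype(int)
    g = img[..., 1].astype(int)
    b = img[..., 2].astype(int)
    red = (r >= r_min) & (g <= gb_max) & (b <= gb_max)
    rows, cols = np.nonzero(red)
    if rows.size == 0:
        return None
    return int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max())


def outside_change_ratio(before, after, box, tol=8):
    """Fraction of pixels outside the box whose max channel change exceeds tol."""
    top, left, bottom, right = box
    outside = np.ones(before.shape[:2], dtype=bool)
    outside[top:bottom + 1, left:right + 1] = False
    diff = np.abs(before.astype(int) - after.astype(int)).max(axis=-1)
    changed = (diff > tol) & outside
    return float(changed.sum() / outside.sum())


# Synthetic example: a 32x32 black image with a red box outline.
img = np.zeros((32, 32, 3), dtype=np.uint8)
red = np.array([255, 0, 0], dtype=np.uint8)
img[8, 8:20] = red
img[19, 8:20] = red
img[8:20, 8] = red
img[8:20, 19] = red

box = find_red_box(img)
edited = img.copy()
edited[10:18, 10:18] = 200          # edit confined to the box interior
leaky = edited.copy()
leaky[0, 0] = 255                   # one pixel changed outside the box

print(box, outside_change_ratio(img, edited, box), outside_change_ratio(img, leaky, box))
```

A ratio of zero means the edit stayed inside the marked region; a stricter check would also compare the interior against the instruction, which this sketch does not attempt.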

Example:

---

### **3. Global Lighting Integration**

- Understands commands like “relight the scene.”

- Adjusts **lighting cohesively across the environment**.

Example:

---

## 🧠 UniWorld-R1: Core Framework

### 🚫 Problems with Traditional Methods

- SFT models tend to **overfit training data**, reducing generalization.

- Lack of a **general reward model** for diverse editing tasks.

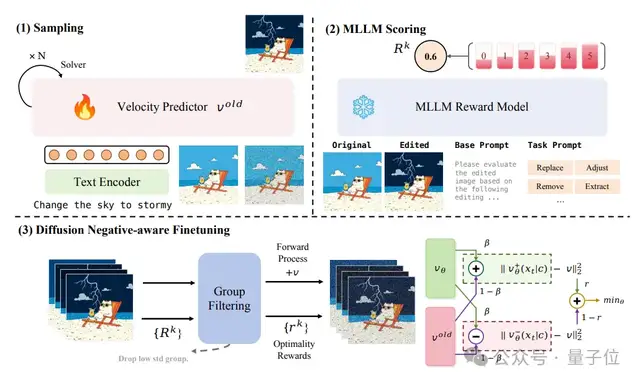

### 💡 UniWorld-R1 Innovations

1. **RL-based unified architecture**

   - The first image-editing post-training framework to use RL policy optimization.

   - Employs **Diffusion Negative-aware Finetuning (DiffusionNFT)**, a likelihood-free method that optimizes on the forward (noising) process and therefore trains efficiently.

2. **MLLM as a training-free reward model**

   - Uses multimodal LLMs (e.g., GPT-4V) for **task-agnostic evaluation** without training a separate reward model.

   - The MLLM's output logits, not just a single sampled answer, provide **fine-grained feedback**.
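The article says only that MLLM output logits provide fine-grained feedback. One common way to achieve that, sketched here as an assumption rather than UniWorld-R1's exact recipe, is to ask the MLLM for an integer score, read the logits of the digit tokens at the answer position, and take the probability-weighted mean instead of the single most likely digit. The 0 to 5 scale and the name `score_from_logits` are hypothetical.

```python
import math


def score_from_logits(digit_logits):
    """Turn logits over score tokens "0".."K" into a continuous score in [0, 1].

    digit_logits[k] is assumed to be the MLLM's logit for the token "k"
    at the position where it writes its score.
    """
    m = max(digit_logits)
    exps = [math.exp(x - m) for x in digit_logits]   # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    expected = sum(k * p for k, p in enumerate(probs))
    return expected / (len(digit_logits) - 1)        # rescale to [0, 1]


# Both answers would be decoded as "4", but the logits reveal different confidence.
confident = score_from_logits([-5, -5, -5, -5, 5, -5])
hesitant = score_from_logits([-5, -5, -5, 4.9, 5, -5])
print(round(confident, 3), round(hesitant, 3))
```

The weighted mean gives a smooth reward that can separate two edits a plain argmax would score identically, which is what makes logit-based feedback "fine-grained".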

**Pipeline:** Sampling (the policy generates candidate edits) → MLLM scoring → DiffusionNFT update
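The three pipeline stages can be sketched for a single prompt. The objective follows our reading of the DiffusionNFT paper: implicit positive and negative velocities are built from the frozen sampling policy and the current one, and each sample is weighted by its reward mapped to [0, 1]. Everything else is an assumption for illustration: the group reward normalization, the toy linear "velocity model", the rectified-flow noising convention, the hard-coded scores, and the names `group_optimality` and `nft_loss`. This is not UniWorld-R1's code.

```python
import numpy as np


def group_optimality(raw_rewards, eps=1e-8):
    """Map one prompt's group of raw rewards to optimality probabilities r in [0, 1].

    Assumption: normalize within the group, clip to [-1, 1], then shift into [0, 1].
    """
    raw = np.asarray(raw_rewards, dtype=float)
    z = (raw - raw.mean()) / (raw.std() + eps)
    return 0.5 + 0.5 * np.clip(z, -1.0, 1.0)


def nft_loss(v_theta, v_old, v_target, r, beta=1.0):
    """Negative-aware flow-matching loss (our reading of DiffusionNFT).

    High-r samples pull the implicit positive velocity v_pos toward the target;
    low-r samples pull the implicit negative velocity v_neg toward it, which moves
    v_theta away from the target relative to v_old.
    """
    v_pos = (1.0 - beta) * v_old + beta * v_theta
    v_neg = (1.0 + beta) * v_old - beta * v_theta
    err_pos = ((v_pos - v_target) ** 2).sum(axis=-1)
    err_neg = ((v_neg - v_target) ** 2).sum(axis=-1)
    return float(np.mean(r * err_pos + (1.0 - r) * err_neg))


rng = np.random.default_rng(0)
group, dim = 4, 8

# 1) Sampling: the old policy produced `group` edits for one instruction (toy vectors).
x0 = rng.normal(size=(group, dim))

# 2) MLLM scoring: hypothetical scores, one per sampled edit.
r = group_optimality([0.9, 0.7, 0.4, 0.1])

# 3) DiffusionNFT step: re-noise the samples on the forward process
#    (x_t = (1 - t) x0 + t * noise, velocity target noise - x0, both assumed).
t = rng.uniform(size=(group, 1))
noise = rng.normal(size=(group, dim))
x_t = (1.0 - t) * x0 + t * noise
v_target = noise - x0

W_old = 0.5 * np.eye(dim)                          # frozen sampling policy (toy)
W = W_old + 0.01 * rng.normal(size=(dim, dim))     # current policy being trained
v_old = x_t @ W_old.T
v_theta = x_t @ W.T
print("NFT loss:", nft_loss(v_theta, v_old, v_target, r))
```

Because the loss only needs noised samples and velocity predictions, no likelihood of the sampling trajectory is computed, which is the sense in which the method is likelihood-free.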

---

## 📚 Dataset Overview

- Compiled **27,572 instruction-based image-editing samples**.

- Sources: **LAION**, **LexArt**, and **UniWorld-V1** data.

- Added **text editing** and **red-box control** tasks, bringing the total to **9 task types**.

---

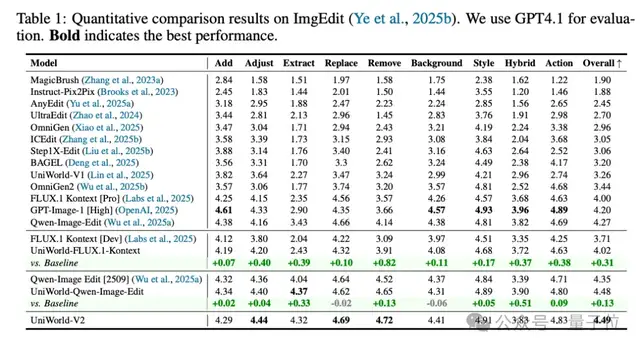

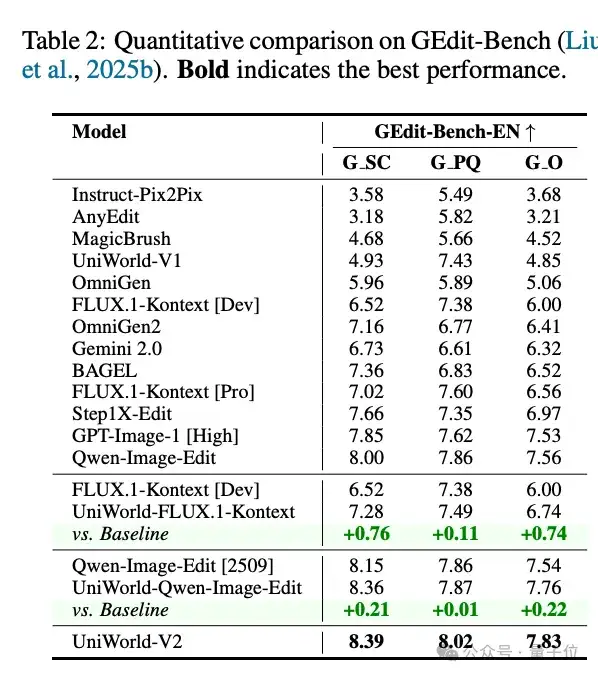

## 🏆 Benchmark Results

### GEdit-Bench

- **UniWorld-V2**: **7.83**

- Outperforms GPT-Image-1 [High]: 7.53

- Outperforms Gemini 2.0: 6.32

### ImgEdit

- **UniWorld-V2**: **4.49**, the highest score among both open-source and closed-source models.

---

**Framework generality:**

When applied to **Qwen-Image-Edit** and **FLUX.1-Kontext**, UniWorld-R1 raised both models’ benchmark scores substantially.

---

### Category Gains

- UniWorld-FLUX.1-Kontext: Big gains in **Adjust**, **Extract**, **Remove**.

- UniWorld-Qwen-Image-Edit: Excelled in **Extract**, **Blend**.

---

### Out-of-Domain GEdit-Bench Performance

- FLUX.1-Kontext: 6.00 → 6.74, surpassing FLUX.1-Kontext [Pro] (6.56)

- Qwen-Image-Edit: 7.54 → 7.76

- UniWorld-V2: new state of the art.
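Putting the GEdit-Bench gains in relative terms makes them easier to compare. The snippet only does arithmetic on the scores reported above; nothing is newly measured, and the helper name `gain` is ours.

```python
# GEdit-Bench overall scores reported above: (before, after) UniWorld-R1 post-training.
scores = {
    "FLUX.1-Kontext": (6.00, 6.74),
    "Qwen-Image-Edit": (7.54, 7.76),
}
flux_pro = 6.56  # FLUX.1-Kontext [Pro]


def gain(before, after):
    """Absolute gain (2 decimals) and relative gain in percent (1 decimal)."""
    return round(after - before, 2), round(100 * (after - before) / before, 1)


for name, (before, after) in scores.items():
    abs_gain, rel_gain = gain(before, after)
    print(f"{name}: +{abs_gain:.2f} ({rel_gain:+.1f}%)")
# FLUX.1-Kontext: +0.74 (+12.3%)
# Qwen-Image-Edit: +0.22 (+2.9%)

margin = scores["FLUX.1-Kontext"][1] - flux_pro
print(f"Post-trained FLUX.1-Kontext vs [Pro]: +{margin:.2f}")
# Post-trained FLUX.1-Kontext vs [Pro]: +0.18
```

The relative gain is much larger for FLUX.1-Kontext, which started from a lower baseline.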

---

### 👥 Human Preference Study

- Participants preferred **UniWorld-FLUX.1-Kontext** over FLUX.1-Kontext [Pro] for **instruction alignment**.

- On image quality the official Pro model was slightly preferred, but UniWorld-R1 delivered the stronger editing capability.

---

## 📜 Model Evolution

- UniWorld-V1: presented as the industry’s **first unified understanding-and-generation model**, released **3 months before Nano Banana**.

- The UniWorld-R1 **paper, code, and models** are **open source**:

  - GitHub: https://github.com/PKU-YuanGroup/UniWorld

  - Paper: https://arxiv.org/abs/2510.16888
