Better Late Than Never: A Look at How SpringAI Is Doing Now

# Introduction

As a seasoned Java professional, I’ve closely followed the language's role in the AI space.

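However, due to **inherent limitations** (for example, an ecosystem of model-training and numerical-computing libraries that is far thinner than Python's), **Java is unlikely to become a mainstream AI development language**.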

That said, Java **does** have a place in the AI ecosystem — especially as a **backend request-forwarding layer** for B2B and B2C applications.

In this article, we’ll focus on **SpringAI**, a key framework in this niche.

Initially, I found early releases disappointing and unsuitable for production use.

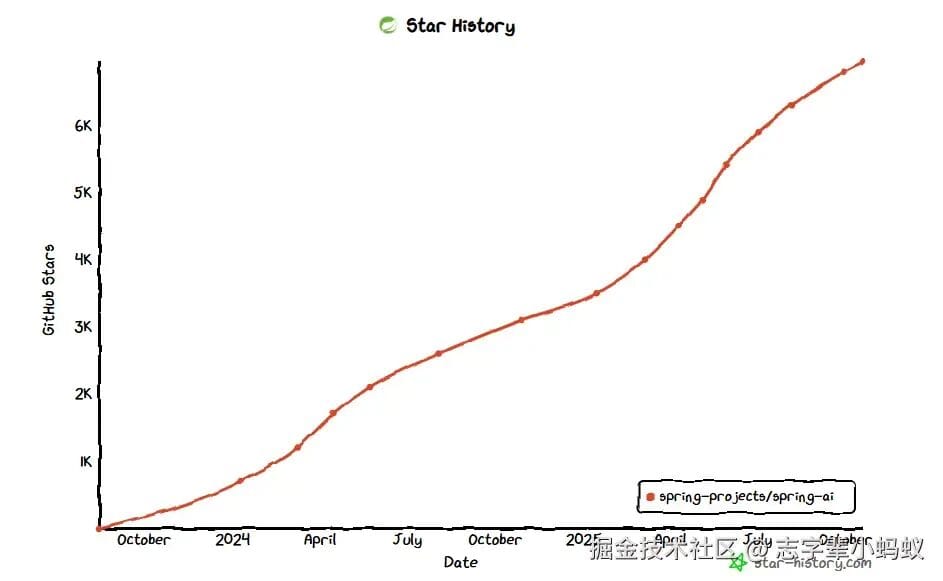

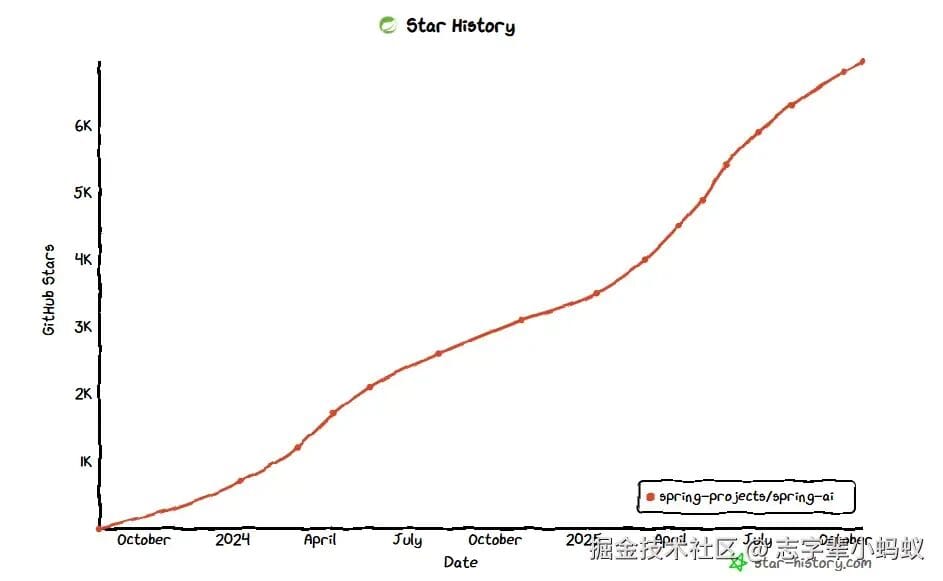

But after **two years of rapid iteration**, is SpringAI now worth learning?

---

## Evolution of SpringAI

| Version | Release Date | Core Changes |
| --- | --- | --- |
| **0.8.1** | Mar 2024 | First public release with basic AI model integration |
| **1.0.0-M1** | May 2024 | `ChatClient` API, structured output, conversation memory |
| **1.0.0-M2** | Aug 2024 | Expanded provider support, tool calling |
| **1.0.0-M6** | Feb 2025 | `@Tool` annotation, MCP protocol integration |
| **1.0.0-M7** | Apr 2025 | Independent RAG module, modular architecture |
| **1.0.0-RC1** | May 13, 2025 | API freeze, production readiness |
| **1.0.0 GA** | May 20, 2025 | First production-grade release |
| **1.0.3** | Oct 2025 | GraalVM native image support |
| **1.1.0-M3** | Oct 15, 2025 | MCP SDK upgrade, multi-document support |

**Key takeaway:** SpringAI focuses on **AI service orchestration**, not model computation (training or inference): it leverages Java's server-side strengths to unify AI integration in the backend.

---

## Layered Architecture Overview

```
┌─────────────────────────────────────────────────────────┐
│ Application Layer                                       │
│ ChatClient API | Prompt Templates | Structured Output   │
└─────────────────────────────────────────────────────────┘
                             ↓
┌─────────────────────────────────────────────────────────┐
│ Integration Layer                                       │
│ Spring Boot Auto-Config | Dependency Injection          │
└─────────────────────────────────────────────────────────┘
                             ↓
┌─────────────────────────────────────────────────────────┐
│ Core Layer                                              │
│ Model Abstraction | Embedding | Vector Store | Memory   │
└─────────────────────────────────────────────────────────┘
                             ↓
┌─────────────────────────────────────────────────────────┐
│ Extension Layer                                         │
│ RAG | Agent | MCP | Tool Calling | Observability        │
└─────────────────────────────────────────────────────────┘
```

---

## Key Features

### 1. Unified API Interfaces

SpringAI abstracts away provider-specific differences behind the **`ChatModel`** and **`EmbeddingModel`** interfaces, with the fluent **`ChatClient`** API layered on top (`EmbeddingModel` replaced the pre-1.0 `EmbeddingClient`).

This enables switching between **OpenAI**, **Claude**, **Google Gemini**, **AWS Bedrock**, **Alibaba Tongyi Qianwen**, **DeepSeek**, **Zhipu AI**, **Ollama**, and **20+ other providers** **without touching business code**.

**Example: Basic prompt call**

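```java
import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.openai.OpenAiChatOptions;

// Summarize text with a low temperature and a capped output length
public String summarize(ChatClient chatClient, String text) {
    return chatClient.prompt()
            .system("You are a professional text summarization assistant...")
            .user("Please generate a summary for the following text:\n" + text)
            .options(OpenAiChatOptions.builder()
                    .temperature(0.3)   // lower temperature: more focused, less varied output
                    .maxTokens(300)     // upper bound on the number of generated tokens
                    .build())
            .call()
            .content();
}
```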

⚠ **Tip:** Providers differ in *context-window limits* and in how they handle parameters such as `maxTokens`, and these differences can noticeably change output quality.

So even with **one-click model switching**, expect to tune the configuration for each provider.
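The provider independence described in this section comes from business code depending on an interface rather than on a vendor SDK. The following is a minimal plain-Java sketch of that idea, not SpringAI's API: `SimpleChatModel`, `FakeProvider`, and `Summarizer` are hypothetical names, and the token limits are made-up values. It also illustrates the tip above, since the same `maxTokens` request is honored differently by each provider.

```java
// Hypothetical provider-neutral interface (a simplified stand-in, not SpringAI's ChatModel)
interface SimpleChatModel {
    String call(String prompt, int maxTokens);
}

// Fake provider that clamps the requested maxTokens to its own (made-up) output limit
record FakeProvider(String name, int maxOutputTokens) implements SimpleChatModel {
    @Override
    public String call(String prompt, int maxTokens) {
        int effective = Math.min(maxTokens, maxOutputTokens);
        return name + "[" + effective + "]: " + prompt;
    }
}

// Business code depends only on the interface; swapping providers
// means injecting a different implementation, not editing this class
final class Summarizer {
    private final SimpleChatModel model;

    Summarizer(SimpleChatModel model) {
        this.model = model;
    }

    String summarize(String text) {
        return model.call("Summarize: " + text, 300);
    }
}
```

In a Spring application the injected implementation would typically be chosen by dependencies and configuration, so a class like `Summarizer` never changes when the provider does.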

---

### 2. Prompt Engineering & Templating

Supports defining structured prompts for **System/User/Assistant/Tool** roles and rendering with variables:

```java
// Render the system prompt from a template with a {role} placeholder
SystemPromptTemplate sysTpl = new SystemPromptTemplate("Respond in the style of {role}");
Message sysMsg = sysTpl.createMessage(Map.of("role", "Technical Expert"));

UserMessage userMsg = new UserMessage("Explain Spring Boot auto-configuration");

// Combine the role-tagged messages into a single prompt and call the model
Prompt prompt = new Prompt(List.of(sysMsg, userMsg));
String result = chatClient.prompt(prompt).call().content();
```
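Template rendering boils down to substituting named placeholders and tagging each message with a role. Below is a toy plain-Java sketch of that mechanism, not SpringAI's template engine: `MiniTemplate`, `Role`, and `Msg` are hypothetical, the sketch only handles simple `{name}` placeholders, and it chooses to fail fast on a missing variable.

```java
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical roles mirroring System/User/Assistant/Tool
enum Role { SYSTEM, USER, ASSISTANT, TOOL }

// Hypothetical role-tagged message
record Msg(Role role, String text) {}

final class MiniTemplate {
    // Matches placeholders such as {role}
    private static final Pattern VAR = Pattern.compile("\\{(\\w+)\\}");

    // Replace every {name} with its value; throw if a variable is missing
    static String render(String template, Map<String, Object> vars) {
        Matcher m = VAR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            Object value = vars.get(m.group(1));
            if (value == null) {
                throw new IllegalArgumentException("Missing variable: " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value.toString()));
        }
        m.appendTail(out);
        return out.toString();
    }

    // Build a two-message prompt: a rendered system message plus the user's text
    static List<Msg> prompt(String systemTemplate, Map<String, Object> vars, String userText) {
        return List.of(new Msg(Role.SYSTEM, render(systemTemplate, vars)), new Msg(Role.USER, userText));
    }
}
```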

---

### 3. Streaming Responses & Async Processing

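Streaming is built on **Reactor**, and three invocation styles are supported:

- **Synchronous** calls via `call()`

- **Streaming** via `stream()`, which returns a `Flux` that can be served as SSE

- **Asynchronous** (reactive) flows by composing that `Flux` with other Reactor operators

**Example: SSE streaming endpoint**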

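```java
// Stream the model's output to the client as Server-Sent Events
@GetMapping(value = "/stream", produces = MediaType.TEXT_EVENT_STREAM_VALUE)
public Flux<String> stream(@RequestParam String msg) {
    // .content() emits text chunks; .chatResponse() would emit Flux<ChatResponse> with metadata
    return chatClient.prompt().user(msg).stream().content();
}
```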
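On the wire, a `text/event-stream` response is a sequence of events, each made of `data:` lines and terminated by a blank line. This minimal sketch frames streamed chunks that way, following the SSE event-stream format in the HTML Living Standard; `SseFraming` is a hypothetical helper, and Spring's own encoder may differ in details such as whether a space follows `data:`.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

final class SseFraming {
    // Frame one chunk as an SSE event: each line of the chunk becomes its own
    // "data:" field, and a blank line terminates the event
    static String toEvent(String chunk) {
        return Arrays.stream(chunk.split("\n", -1))
                .map(line -> "data: " + line)
                .collect(Collectors.joining("\n", "", "\n\n"));
    }

    // Concatenate the events for a sequence of streamed chunks
    static String toStream(List<String> chunks) {
        return chunks.stream()
                .map(SseFraming::toEvent)
                .collect(Collectors.joining());
    }
}
```

A browser's SSE parser removes at most one space after `data:`, so a token chunk such as `" there"` keeps its own leading space when it reaches the client.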

---

### 4. RAG (Retrieval-Augmented Generation)

Built-in vector store abstraction with support for **20+ databases** (PGVector, Milvus, Pinecone, Redis, Chroma, etc.).

**Core RAG flow:**

1. **Retrieve** relevant data

2. **Augment** prompt with context

3. **Generate** answer

**Example query:**

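```java
import java.util.List;
import java.util.stream.Collectors;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.ai.document.Document;
import org.springframework.ai.vectorstore.VectorStore;
import org.springframework.stereotype.Service;

@Service
public class RagService {

    private final VectorStore vectorStore;
    private final ChatClient chatClient;

    // Spring Boot auto-configures a ChatClient.Builder, so build the client here
    public RagService(VectorStore vectorStore, ChatClient.Builder builder) {
        this.vectorStore = vectorStore;
        this.chatClient = builder.build();
    }

    public String query(String question) {
        // 1. Retrieve: documents most similar to the question
        List<Document> docs = vectorStore.similaritySearch(question);
        // 2. Augment: join their text into a context block
        String context = docs.stream()
                .map(Document::getText)
                .collect(Collectors.joining("\n"));
        // 3. Generate: answer using that context
        return chatClient.prompt()
                .user("Based on...\n" + context + "\nQuestion: " + question)
                .call()
                .content();
    }
}
```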
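The retrieve and augment steps can be shown without any vector database. This plain-Java sketch assumes the embeddings are already computed (the `Doc` record and `MiniRag` class are hypothetical); it ranks documents by cosine similarity, keeps the top *k*, and builds the augmented prompt that would then be sent to the model for the generate step.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical document with a precomputed embedding vector
record Doc(String text, double[] embedding) {}

final class MiniRag {
    // Cosine similarity: dot(a, b) / (|a| * |b|); returns 0 for zero vectors
    static double cosine(double[] a, double[] b) {
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return (na == 0 || nb == 0) ? 0 : dot / (Math.sqrt(na) * Math.sqrt(nb));
    }

    // Retrieve: the k documents most similar to the query embedding
    static List<Doc> topK(List<Doc> docs, double[] query, int k) {
        return docs.stream()
                .sorted(Comparator.comparingDouble((Doc d) -> cosine(d.embedding(), query)).reversed())
                .limit(k)
                .collect(Collectors.toList());
    }

    // Augment: build the prompt that the generate step would send to the model
    static String augment(List<Doc> context, String question) {
        String joined = context.stream().map(Doc::text).collect(Collectors.joining("\n"));
        return "Answer using only this context:\n" + joined + "\nQuestion: " + question;
    }
}
```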

---

### 5. Conversation Memory

`ChatMemory` stores multi-turn dialogue history, keyed by a conversation (session) ID.

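```java
@Autowired private ChatMemory chatMemory;
@Autowired private ChatClient chatClient;

public String chat(String sessionId, String msg) {
    // Store the user's turn first so it becomes part of the history
    chatMemory.add(sessionId, new UserMessage(msg));
    // Load the conversation so far (the returned list may be read-only)
    List<Message> history = chatMemory.get(sessionId);
    String reply = chatClient.prompt(new Prompt(history)).call().content();
    // Save the assistant's reply for the next turn
    chatMemory.add(sessionId, new AssistantMessage(reply));
    return reply;
}
```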

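Default: as of 1.0 GA, the auto-configured `ChatMemory` is **`MessageWindowChatMemory`** (a sliding window of recent messages) backed by **`InMemoryChatMemoryRepository`**, which replaced the earlier `InMemoryChatMemory`. For persistent storage, plug in another `ChatMemoryRepository` (SpringAI provides a JDBC one, among others) or implement your own.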
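Any custom store only has to honor two operations: append a message to a conversation and read the history back. The sketch below is a hypothetical plain-Java class (not SpringAI's implementation) that keeps the last N messages per conversation ID in memory; `Turn` and `WindowedMemory` are illustrative names, and a production version would also need persistence and a better concurrency strategy than the coarse `synchronized` used here.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical message record; role is "user" or "assistant"
record Turn(String role, String text) {}

// Keeps only the most recent maxMessages messages per conversation, in memory
final class WindowedMemory {
    private final int maxMessages;
    private final Map<String, Deque<Turn>> store = new HashMap<>();

    WindowedMemory(int maxMessages) {
        this.maxMessages = maxMessages;
    }

    synchronized void add(String conversationId, Turn turn) {
        Deque<Turn> turns = store.computeIfAbsent(conversationId, id -> new ArrayDeque<>());
        turns.addLast(turn);
        // Evict the oldest messages once the window is exceeded
        while (turns.size() > maxMessages) {
            turns.removeFirst();
        }
    }

    // Return an immutable copy so callers cannot modify the stored history
    synchronized List<Turn> get(String conversationId) {
        Deque<Turn> turns = store.get(conversationId);
        return turns == null ? List.of() : List.copyOf(turns);
    }
}
```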

---

### 6. Multimodal Support

Text, image, and audio input/output:

```java
// Attach an image from the classpath to the user message
String description = chatClient.prompt()
        .user(u -> u.text("Describe this image")
                .media(MimeTypeUtils.IMAGE_PNG, new ClassPathResource("photo.png")))
        .call()
        .content();
```
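Many chat-completion APIs, OpenAI's among them, accept images either by URL or inline as a base64 `data:` URI. Whether a particular SpringAI provider module sends a media attachment inline depends on that module, so treat this as a conceptual sketch of the inline form only; `InlineMedia` is a hypothetical helper.

```java
import java.util.Base64;

final class InlineMedia {
    // Encode raw media bytes as a data URI: data:<mime type>;base64,<payload>
    static String toDataUri(String mimeType, byte[] bytes) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(bytes);
    }
}
```

For a file on disk, the bytes could come from `Files.readAllBytes(Path.of("photo.png"))`.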

---

### 7. Agent Workflows

Agent-style workflows for complex tasks are assembled from a `ChatClient` that has a fixed role (system prompt) and a set of tools it may call:

```java
@Bean(name = "opsAgent")
public ChatClient opsAgent(ChatClient.Builder builder, WeatherTool weatherTool, DatabaseTool databaseTool) {
    // The "agent" is a ChatClient with a fixed role plus the tools it may call;
    // WeatherTool and DatabaseTool are assumed to be beans with @Tool-annotated methods
    return builder
            .defaultSystem("You are an intelligent assistant...")
            .defaultTools(weatherTool, databaseTool)
            .build();
}
```
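When tools are registered, the model may reply with a request to call a tool instead of a final answer; the framework runs the tool, sends the result back, and repeats. Here is a simplified plain-Java sketch of that loop with a fake model; `Reply`, `ToolCall`, `Answer`, and `ToolLoop` are hypothetical, and real tool calls carry structured JSON arguments rather than a single string.

```java
import java.util.Map;
import java.util.function.Function;

// Hypothetical model reply: either a tool-call request or a final answer
interface Reply {}

record ToolCall(String tool, String argument) implements Reply {}

record Answer(String text) implements Reply {}

final class ToolLoop {
    // Call the model; if it requests a tool, run it and feed the result back, up to maxSteps times
    static String run(Function<String, Reply> model, Map<String, Function<String, String>> tools,
                      String userInput, int maxSteps) {
        String input = userInput;
        for (int step = 0; step < maxSteps; step++) {
            Reply reply = model.apply(input);
            if (reply instanceof Answer answer) {
                return answer.text();
            }
            ToolCall call = (ToolCall) reply;
            Function<String, String> tool = tools.get(call.tool());
            if (tool == null) {
                throw new IllegalStateException("Unknown tool: " + call.tool());
            }
            // The tool's result becomes the model's next input
            input = "TOOL_RESULT " + call.tool() + ": " + tool.apply(call.argument());
        }
        throw new IllegalStateException("No final answer after " + maxSteps + " steps");
    }
}
```

The `maxSteps` cap is a design choice worth keeping in any real loop: it stops a model that keeps requesting tools from running forever.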

---

### 8. Other Features

- **Function calling & MCP integration**

- **Structured output mapping**: `chatClient.prompt().user("Minecraft").call().entity(MovieReview.class);`

- **Annotation-based tool definitions** (e.g., `@Tool` and `@ToolParam` on Spring bean methods)
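Structured output generally works in two halves: format instructions derived from the target type are appended to the prompt, and the model's JSON reply is parsed into an instance of that type (in SpringAI, `entity(...)` relies on a `BeanOutputConverter` that generates a JSON Schema for this). A toy plain-Java sketch of the first half follows; `FormatInstructions` is hypothetical and emits a simplified field list rather than a real JSON Schema, and this `MovieReview` record is an assumed shape.

```java
import java.util.Arrays;
import java.util.stream.Collectors;

// Assumed shape of the MovieReview target type
record MovieReview(String title, int rating, boolean recommended) {}

final class FormatInstructions {
    // Map a Java type to a JSON type name (only a few simple cases handled)
    static String jsonType(Class<?> type) {
        if (type == String.class) return "string";
        if (type == int.class || type == Integer.class || type == long.class || type == Long.class) return "integer";
        if (type == double.class || type == Double.class) return "number";
        if (type == boolean.class || type == Boolean.class) return "boolean";
        return "object";
    }

    // Derive prompt instructions listing each record component and its JSON type
    static String forRecord(Class<? extends Record> type) {
        String fields = Arrays.stream(type.getRecordComponents())
                .map(c -> "\"" + c.getName() + "\": " + jsonType(c.getType()))
                .collect(Collectors.joining(", "));
        return "Respond only with JSON matching: {" + fields + "}";
    }
}
```

Here `forRecord(MovieReview.class)` yields `Respond only with JSON matching: {"title": string, "rating": integer, "recommended": boolean}`.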

---

## When to Use SpringAI

**Pros:**

- Fast provider switching

- Strong integration with Spring Boot ecosystem

- Streamlined RAG, memory, and multimodal workflows

**Cons:**

- Not suitable as a model runtime

- MCP support is less compelling than in the Python ecosystem

---

## Conclusion

SpringAI’s **production readiness** means:

- If you need a **service-layer AI interface**, it’s an efficient choice.

- Paired with Java’s backend strengths, you can integrate AI into microservices with minimal effort.


---