Build Your Own: A Step-by-Step Guide to an Android Personal Voice Assistant with Open-Source Tools

Introduction

Most commercial voice assistants process your voice in the cloud before responding.

By using open-source tools, you can run everything locally on your phone instead. Your audio never leaves the device, responses don't wait on a network round trip, and you keep full control over every component.

In this tutorial, you’ll build a fully local Android voice assistant using:

- Whisper – Automatic Speech Recognition (ASR)

- MLC LLM – On-device reasoning

- System Text-to-Speech (TTS) – Android’s built-in voice output

Your assistant will be able to:

- Understand voice commands offline

- Speak responses aloud

- Perform tool-calling actions (control devices, send messages)

- Store personal memories

- Use RAG to answer from your own notes

- Execute multi-step workflows (e.g., morning briefing)

We’ll use Termux to run everything on Android itself. Once the packages and models are downloaded, no cloud service is required.

---

Table of Contents

- System Overview

- Requirements

- Step 1 – Test Microphone & Audio

- Step 2 – Install & Run Whisper

- Step 3 – Install Local LLM with MLC

- Step 4 – Local Text-to-Speech (TTS)

- Step 5 – Core Voice Loop

- Step 6 – Tool Calling

- Step 7 – Memory & Personalization

- Step 8 – Retrieval-Augmented Generation (RAG)

- Step 9 – Multi-Step Agent Workflow

- Conclusion & Next Steps

---

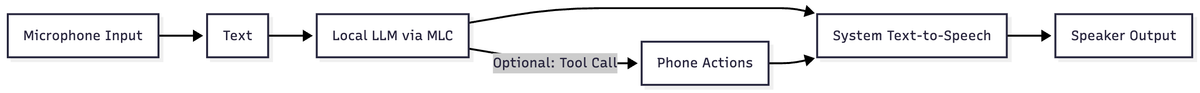

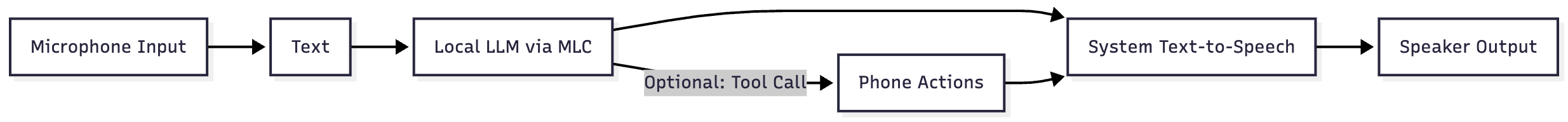

System Overview

Flow:

- Mic input → `Whisper` → text

- Local LLM → understands intent

- Tool calls for actions (optional)

- System TTS → speaks result

Key Concepts:

- ASR – convert speech to text

- LLM – offline reasoning

- TTS – convert text to audio

---

Requirements

You need familiarity with:

- Basic command line navigation

- Minimal Python scripting

You do NOT need:

- ML/model experience

- Neural network knowledge

- Audio engineering skills

Hardware:

- Android phone (Snapdragon 8+ Gen 1 or newer recommended)

- Termux installed

- Python 3.9+ in Termux

- 4–6 GB free storage

Why? Whisper and the Llama model both run on the phone itself, so a newer chip gives faster inference with less heat and throttling.

---

Install Base Tools

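```shell
pkg update && pkg upgrade -y
pkg install -y python git ffmpeg termux-api
termux-setup-storage
```

The `termux-api` package only provides the command-line side. The `termux-*` commands used below also need the companion Termux:API Android app installed, with microphone permission granted.

---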

Step 1 – Test Microphone & Audio

Purpose

Verify mic & speaker access before building the pipeline.
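Every step below shells out to external commands, so a quick PATH check can catch a missing package early. The following is a minimal sketch. The command list and the name `missing_commands` are my own choices. It only confirms that the command wrappers are installed; it cannot tell whether the Termux:API app itself is present.

```python
import shutil

# Commands used in this tutorial; the termux-* ones come from the termux-api package.
REQUIRED = [
    "termux-microphone-record",
    "termux-media-player",
    "termux-tts-speak",
    "ffmpeg",
]

def missing_commands(names=REQUIRED):
    """Return the names from `names` that are not found on PATH."""
    return [name for name in names if shutil.which(name) is None]

if __name__ == "__main__":
    missing = missing_commands()
    if missing:
        print("Missing commands:", ", ".join(missing))
    else:
        print("All required commands found.")
```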

Core Commands:

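```shell
# Record 4 seconds. The recorder runs in the background and returns
# immediately, so wait for the limit to elapse before using the file.
termux-microphone-record -f in.wav -l 4 && sleep 5

# Play back
termux-media-player play in.wav

# Speak via system TTS
termux-tts-speak "Hello, local assistant is ready."
```

---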

Step 2 – Install & Run Whisper

Install

```shell
pip install openai-whisper

# If that fails (openai-whisper depends on PyTorch), try:
pip install faster-whisper
```

Test Script – `asr_transcribe.py`

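```python
import sys

# Prefer openai-whisper; fall back to faster-whisper if it cannot be imported.
try:
    import whisper
    use_faster = False
except Exception:
    use_faster = True

audio_path = sys.argv[1]

if use_faster:
    from faster_whisper import WhisperModel

    # int8 on CPU keeps memory use and latency low on a phone.
    model = WhisperModel("tiny.en", device="cpu", compute_type="int8")
    segments, info = model.transcribe(audio_path)
    # segments is a generator; each segment's text usually starts with a space.
    text = "".join(s.text for s in segments)
    print(text.strip())
else:
    model = whisper.load_model("tiny.en")
    # fp16=False avoids the "FP16 is not supported on CPU" warning.
    result = model.transcribe(audio_path, fp16=False)
    print(result["text"].strip())
```

Run: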

```shell
termux-microphone-record -f in.wav -l 4 && sleep 5
python asr_transcribe.py in.wav
```

---

Step 3 – Install Local LLM with MLC

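Install & Configure

```shell
git clone https://github.com/mlc-ai/mlc-llm.git
cd mlc-llm
pip install -r requirements.txt
pip install -e python
```

The Python package in `python/` wraps MLC LLM's compiled C++ runtime, so you must build that library first. Follow the project's build-from-source documentation before running `pip install -e python`.

Model: there is no separate download step. The test script points MLC LLM at the prebuilt `HF://mlc-ai/Llama-3-8B-Instruct-q4f16_1-MLC` weights, which it fetches and caches on first use.

Test Script – `local_llm.py`

```python
import sys

from mlc_llm import MLCEngine

# Prebuilt 4-bit Llama 3 weights; fetched and cached on first use.
MODEL = "HF://mlc-ai/Llama-3-8B-Instruct-q4f16_1-MLC"

engine = MLCEngine(MODEL)
prompt = sys.argv[1] if len(sys.argv) > 1 else "Hello"

# MLCEngine exposes an OpenAI-style chat completions API.
resp = engine.chat.completions.create(
    messages=[{"role": "user", "content": prompt}],
    model=MODEL,
)
print(resp.choices[0].message.content)

# Shut down the engine's background threads so the script exits cleanly.
engine.terminate()
```

Run: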

```shell
python local_llm.py "Summarize building a local assistant"
```

---
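Building on the Step 3 test script: an assistant usually needs more than a one-shot prompt. It sends a system prompt plus the most recent turns so that follow-up questions make sense. The following is a minimal sketch of that bookkeeping. It assumes you keep history as the same role/content dicts the script already sends. The name `build_messages`, the system prompt text, and the six-message window are illustrative choices, not MLC LLM requirements.

```python
SYSTEM_PROMPT = (
    "You are a concise voice assistant running offline on a phone. "
    "Answer in one or two short sentences suitable for speech."
)

def build_messages(history, user_text, system=SYSTEM_PROMPT, max_history=6):
    """Return a message list: system prompt, recent turns, then the new input.

    history is a list of {"role": ..., "content": ...} dicts from earlier turns;
    only the last max_history entries are kept to bound prompt length.
    """
    # history[-0:] would return everything, so handle 0 explicitly.
    recent = history[-max_history:] if max_history > 0 else []
    return (
        [{"role": "system", "content": system}]
        + list(recent)
        + [{"role": "user", "content": user_text}]
    )

# Example with a two-turn history.
history = [
    {"role": "user", "content": "What's my first meeting?"},
    {"role": "assistant", "content": "Standup at 9:30."},
]
msgs = build_messages(history, "And after that?")
print(len(msgs))  # 4
```

Pass the result as the message list in the chat call. After each turn, append the user's text and the model's reply to `history`. Because `local_llm.py` exits after one answer, keeping history across turns means either saving it to a file or running the loop inside one long-lived process.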

Step 4 – Local Text-to-Speech (TTS)

Use Android's built-in TTS engine through Termux:API:

```shell
termux-tts-speak "Local assistant speaking."
```

---

Step 5 – Core Voice Loop

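File: `voice_loop.py`

```python
import subprocess
import time
from pathlib import Path

def run(cmd):
    """Run a command and return its stdout as stripped text."""
    return subprocess.check_output(cmd).decode().strip()

def speak(text):
    """Speak text, preferring an optional neural TTS script over system TTS."""
    try:
        # speak_xtts.py is an optional custom TTS script (not covered in this
        # tutorial) that writes out.wav; check=True turns failures into exceptions.
        subprocess.run(["python", "speak_xtts.py", text], check=True)
        subprocess.run(["termux-media-player", "play", "out.wav"], check=True)
    except (subprocess.CalledProcessError, FileNotFoundError):
        # Fall back to Android's built-in TTS (Step 4).
        subprocess.run(["termux-tts-speak", text])

print("Listening...")
Path("in.wav").unlink(missing_ok=True)  # discard the previous recording
subprocess.run(["termux-microphone-record", "-f", "in.wav", "-l", "4"])
time.sleep(5)  # the recorder runs in the background; wait out the 4 s limit
subprocess.run(["termux-microphone-record", "-q"])  # make sure it has stopped

text = run(["python", "asr_transcribe.py", "in.wav"])
if text:
    reply = run(["python", "local_llm.py", text])
    speak(reply)
else:
    speak("Sorry, I didn't catch that.")
```

---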
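`voice_loop.py` handles a single turn. To keep the assistant listening, you can wrap that turn in a loop that exits on a stop phrase. The following is a minimal sketch. `listen`, `think`, and `speak` are hypothetical callables you would build from the pieces above: for example, the recording plus `asr_transcribe.py` as `listen`, and `local_llm.py` as `think`. The stop phrases are arbitrary.

```python
import string

# Hypothetical stop phrases; compared after lowercasing and trimming punctuation.
STOP_PHRASES = {"stop listening", "goodbye", "that's all"}

def normalize(text):
    """Lowercase and trim surrounding punctuation/whitespace (Whisper adds its own)."""
    return text.lower().strip().strip(string.punctuation).strip()

def conversation_loop(listen, think, speak, max_turns=None):
    """Repeat listen -> think -> speak until a stop phrase or max_turns answers.

    listen() returns a transcript, think(text) returns a reply, speak(text) outputs it.
    Returns the number of turns answered.
    """
    turns = 0
    while max_turns is None or turns < max_turns:
        heard = listen().strip()
        if not normalize(heard):
            continue  # silence or noise only: listen again
        if normalize(heard) in STOP_PHRASES:
            speak("Goodbye.")
            break
        speak(think(heard))
        turns += 1
    return turns

# Example with scripted input instead of a microphone.
inputs = iter(["What time is it?", "", "Goodbye."])
spoken = []
n = conversation_loop(lambda: next(inputs), lambda t: "Echo: " + t, spoken.append)
print(n, spoken)  # 1 ['Echo: What time is it?', 'Goodbye.']
```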

Step 6 – Tool Calling

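Tools – `tools.py`

```python
import json

def add_event(title, date):
    # Placeholder: echoes the event back; a real version would write to a calendar.
    return {"status": "ok", "title": title, "date": date}

# Registry of functions the model is allowed to call, keyed by name.
TOOLS = {"add_event": add_event}

def run_tool(call_json):
    """Execute a call such as {"tool": "add_event", "args": {...}}."""
    data = json.loads(call_json)
    name = data["tool"]
    args = data.get("args", {})
    if name in TOOLS:
        result = TOOLS[name](**args)
        return json.dumps({"tool_result": result})
    return json.dumps({"error": "unknown tool"})
```

---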
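`run_tool` in Step 6 expects a JSON string, so something has to extract that JSON from the model's reply. One common approach is to tell the model, in the system prompt, to answer with a single JSON object such as `{"tool": "add_event", "args": {...}}` when it wants an action, and then scan the reply for that object. This approach is an assumption here; MLC LLM does not do it for you. The following is a minimal sketch. `TOOL_PROMPT`, `extract_tool_call`, and `dispatch` are hypothetical names, and the inline registry stands in for `TOOLS`.

```python
import json

# Put this in the system message when calling the LLM.
TOOL_PROMPT = (
    "If the user asks for an action, reply ONLY with JSON of the form "
    '{"tool": "<name>", "args": {...}}. Available tools: add_event(title, date). '
    "Otherwise reply in plain text."
)

def extract_tool_call(reply):
    """Return the first JSON object in reply that names a tool, else None.

    Models often wrap JSON in prose or code fences, so try parsing at every '{'.
    """
    decoder = json.JSONDecoder()
    for start, ch in enumerate(reply):
        if ch != "{":
            continue
        try:
            obj, _ = decoder.raw_decode(reply, start)
        except json.JSONDecodeError:
            continue
        if isinstance(obj, dict) and "tool" in obj:
            return obj
    return None

def dispatch(reply, tools):
    """Run the requested tool if the reply contains a call; otherwise pass text through."""
    call = extract_tool_call(reply)
    if call is None:
        return {"speak": reply}
    fn = tools.get(call["tool"])
    if fn is None:
        return {"error": "unknown tool: " + str(call["tool"])}
    return {"tool_result": fn(**call.get("args", {}))}

# Example with a stand-in registry.
tools = {"add_event": lambda title, date: {"status": "ok", "title": title, "date": date}}
reply = 'Sure! {"tool": "add_event", "args": {"title": "Dentist", "date": "2024-06-03"}}'
print(dispatch(reply, tools))
# {'tool_result': {'status': 'ok', 'title': 'Dentist', 'date': '2024-06-03'}}
```

To reuse `tools.py` directly, `run_tool(json.dumps(call))` performs the same lookup and returns the result as a JSON string. Small quantized models don't always emit valid JSON, so keep the plain-text fallback.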

Step 7 – Memory & Personalization

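Simple KV store – `memory.py`

```python
import json
from pathlib import Path

MEM_PATH = Path("memory.json")

def mem_load():
    """Return the stored memories, or an empty dict on first run."""
    return json.loads(MEM_PATH.read_text()) if MEM_PATH.exists() else {}

def mem_save(mem):
    MEM_PATH.write_text(json.dumps(mem, indent=2))

def remember(k, v):
    """Store or overwrite a single fact, e.g. remember("partner_name", "Sam")."""
    mem = mem_load()
    mem[k] = v
    mem_save(mem)
```

---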
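The store from Step 7 only personalizes answers if facts get into it and then reach the model. The following is a minimal sketch of both directions. `parse_remember` recognizes one hypothetical spoken pattern ("Remember that my X is Y"). `memory_prompt` formats the dict that `mem_load()` returns as a system-prompt section. Both names and the phrasing are assumptions.

```python
import re

# Hypothetical phrasing: "Remember (that) (my) <key> is <value>".
REMEMBER_RE = re.compile(r"^remember (?:that )?(?:my )?(.+?) is (.+?)[.!]?$", re.IGNORECASE)

def parse_remember(text):
    """Turn "Remember that my X is Y" into ("x", "Y"); return None otherwise."""
    match = REMEMBER_RE.match(text.strip())
    if not match:
        return None
    return match.group(1).strip().lower(), match.group(2).strip()

def memory_prompt(mem, max_items=20):
    """Format stored facts as a system-prompt section ("" when nothing is stored)."""
    if not mem:
        return ""
    lines = [f"- {key}: {value}" for key, value in list(mem.items())[:max_items]]
    return "Known facts about the user:\n" + "\n".join(lines)

# Example: parse a transcript, then build the prompt section from a memory dict.
print(parse_remember("Remember that my partner's name is Sam."))
print(memory_prompt({"partner's name": "Sam", "wake time": "6:45"}))
```

In the voice loop, when `parse_remember(text)` returns a pair, pass it to `remember`. Prepend `memory_prompt(mem_load())` to the system prompt on every turn.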

Step 8 – Retrieval-Augmented Generation (RAG)

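```shell
pip install chromadb
```

Example – `rag.py`:

```python
import chromadb

# PersistentClient stores the index on disk so notes survive restarts;
# chromadb.Client() would keep everything in memory only.
client = chromadb.PersistentClient(path="notes_db")
notes = client.get_or_create_collection("notes")

# upsert inserts or updates, so rerunning the script doesn't re-add the same ID.
notes.upsert(documents=["Contractor quote was $42,000 for extension."], ids=["q1"])

results = notes.query(query_texts=["extension quote"], n_results=1)
print(results["documents"][0][0])
```

By default, Chroma embeds text with a small sentence-embedding model that it downloads on first use. After that, queries run locally.

---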
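Retrieval in Step 8 only returns raw notes. The "augmented generation" part means placing those notes in the LLM prompt. The following is a minimal sketch. It assumes the result shape that `notes.query(...)` returns: a dict whose `"documents"` entry holds one list of matches per query text. `build_rag_prompt`, the instruction wording, and the character budget are hypothetical.

```python
def build_rag_prompt(question, results, max_chars=1500):
    """Build a grounded prompt from a Chroma-style query result.

    results["documents"][0] holds the matches for the first (only) query text.
    Notes are added until max_chars is reached to keep the prompt small.
    """
    docs = (results.get("documents") or [[]])[0]
    context, used = [], 0
    for doc in docs:
        if used + len(doc) > max_chars:
            break
        context.append(f"- {doc}")
        used += len(doc)
    notes = "\n".join(context) if context else "- (no matching notes)"
    return (
        "Answer using only the notes below. If they don't contain the answer, say so.\n"
        f"Notes:\n{notes}\n\n"
        f"Question: {question}"
    )

# Example with a result shaped like notes.query(...) in rag.py.
results = {"documents": [["Contractor quote was $42,000 for extension."]]}
print(build_rag_prompt("How much was the extension quote?", results))
```

Send the returned string to the local LLM as the user message, in place of the raw question.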

Step 9 – Multi-Step Agent Workflow

Example: Morning briefing

- Load agenda from JSON

- Summarize via LLM

- Speak aloud

- SMS partner via Termux
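These four steps map onto the pieces built earlier. The following is a minimal sketch of the workflow. Several details are assumptions: the layout of a hypothetical `agenda.json` (a list of `{"time", "title"}` objects), the prompt wording, and the example phone number. `llm`, `speak`, and `send_sms` are passed in so that the sequencing can be tested without a phone. On the device, `send_sms` can be the `termux_sms` helper below, which calls Termux:API's `termux-sms-send -n <number> <text>`; the Termux:API app needs SMS permission for this.

```python
import json
import subprocess
from pathlib import Path

def load_agenda(path):
    """Read a list of {"time": ..., "title": ...} events (hypothetical format)."""
    p = Path(path)
    return json.loads(p.read_text()) if p.exists() else []

def termux_sms(number, text):
    """Send an SMS through Termux:API."""
    subprocess.run(["termux-sms-send", "-n", number, text], check=True)

def morning_briefing(agenda, llm, speak, send_sms, partner_number):
    """Summarize the agenda, speak the summary, then text it to the partner."""
    if not agenda:
        summary = "You have nothing scheduled today."
    else:
        events = "\n".join(f"{e['time']} {e['title']}" for e in agenda)
        # Ask the LLM for a short, speakable summary.
        summary = llm(f"Summarize today's agenda in two sentences:\n{events}")
    speak(summary)                     # speak aloud
    send_sms(partner_number, summary)  # SMS partner
    return summary

# Example with stand-ins; on the phone, pass real LLM/TTS wrappers and termux_sms.
log = []
agenda = [{"time": "09:30", "title": "Standup"}, {"time": "13:00", "title": "Dentist"}]
result = morning_briefing(
    agenda,
    llm=lambda prompt: "Standup at 9:30, dentist at 1 PM.",
    speak=lambda text: log.append(("speak", text)),
    send_sms=lambda number, text: log.append(("sms", number, text)),
    partner_number="+15550100",  # hypothetical number
)
print(log)
```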

---

Conclusion & Next Steps

You now have:

- Offline ASR (Whisper)

- On-device LLM (MLC)

- Local TTS

- Tool calling

- Persistent memory

- RAG search

- Multi-step agents

From here:

- Add wake word detection

- Integrate with Home Assistant / IoT

- Enhance memory & DB use
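Proper wake word detection uses a small, always-on keyword-spotting model, which is beyond this tutorial. As a crude stand-in, you can transcribe short clips with the Step 2 script and act only when the transcript starts with a chosen phrase. This is an assumption, not real wake word detection. `WAKE_PHRASE` and `strip_wake_phrase` are hypothetical names.

```python
import re

WAKE_PHRASE = "hey assistant"

def strip_wake_phrase(transcript, wake=WAKE_PHRASE):
    """Return the command after the wake phrase, or None if it wasn't said.

    Matching ignores case and punctuation, since Whisper may transcribe
    "Hey, Assistant!" with commas and capitals. Returns "" if only the
    wake phrase was heard.
    """
    words = re.findall(r"[a-z0-9']+", transcript.lower())
    wake_words = wake.lower().split()
    if words[: len(wake_words)] != wake_words:
        return None
    return " ".join(words[len(wake_words):])

print(strip_wake_phrase("Hey, Assistant! What's on my calendar?"))  # what's on my calendar
```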

You own the stack — local, private, extensible.
