Building a Makaton AI Assistant with Gemini Nano and Gemini API

# Building the Makaton AI Companion: An AI-Powered Accessibility Tool

## Introduction

When I began exploring AI systems to translate **Makaton**, a language system that combines signs and symbols with speech to support communication, my aim was to **close accessibility gaps** for learners with speech or language challenges.

What started as an academic experiment grew into a working prototype that blends **on-device AI (Gemini Nano)** with **cloud AI (Gemini API)** to analyze images and translate them into English meanings. The idea: a lightweight, single-page web app that takes an image of a Makaton sign or symbol, describes it, and returns an English interpretation.

In this guide, you'll learn how to **build, troubleshoot, and run** the **Makaton AI Companion** step‑by‑step.

---

## What You Will Learn

- Purpose and role of Makaton in inclusive education.

- Combining *on‑device* AI (Gemini Nano) with *cloud* AI (Gemini API).

- Building a functional AI web app for image description and mapping to meaning.

- Handling CORS, API key issues, and model selection errors.

- Storing API keys locally for privacy via `localStorage`.

- Using browser speech synthesis for spoken output.

---

## Table of Contents

- [Tools and Tech Stack](#tools-and-tech-stack)

- [Mapping Logic](#mapping-logic)

- [Local Server Setup](#local-server)

- [Building the App Step by Step](#building-the-app-step-by-step)

- [Common Issues & Fixes](#how-to-fix-common-issues)

- [Demo: Makaton AI Companion](#demo-the-makaton-ai-companion-in-action)

- [Broader Reflections](#broader-reflections)

- [Conclusion](#conclusion)

---

## Tools and Tech Stack

### Frontend

- **HTML, CSS, JavaScript (Vanilla)** — No frameworks.

- Single `index.html` controls upload, AI call, and output display.

### AI Components

- **Gemini Nano** (Chrome Canary) — Local text generation.

- **Gemini API** — Cloud fallback for image analysis.

- Models tested: `gemini-1.5-flash`, `gemini-pro-vision`

- Fallback logic that tries another model when a model name returns a 404 error.

### Local Storage

- The API key is stored in the browser's `localStorage`, so it stays on the user's device instead of being written into the source code.
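A minimal sketch of this key handling, assuming a hypothetical storage key name `makaton.geminiApiKey` and hypothetical helper names `saveApiKey` and `loadApiKey`. The storage object is passed in, so the same code works with `window.localStorage` in the browser or with a stand-in elsewhere.

```javascript
// Hypothetical key name; the real app may use a different one.
const API_KEY_STORAGE_KEY = "makaton.geminiApiKey";

// Save a trimmed key, or remove the stored key when the field is left empty.
function saveApiKey(key, storage = globalThis.localStorage) {
  const value = String(key ?? "").trim();
  if (value) {
    storage.setItem(API_KEY_STORAGE_KEY, value);
  } else {
    storage.removeItem(API_KEY_STORAGE_KEY);
  }
}

// Return the stored key, or null if none has been saved yet.
function loadApiKey(storage = globalThis.localStorage) {
  return storage.getItem(API_KEY_STORAGE_KEY);
}
```

Because `localStorage` persists between visits, anyone with access to the same browser profile can read the key, so a "clear key" action (calling `saveApiKey("")`) is worth keeping in the Settings modal.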

### Browser SpeechSynthesis API

- Converts the English translation to spoken output on demand.
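A minimal sketch of on-demand speech using the browser's `speechSynthesis` and `SpeechSynthesisUtterance`. The `speakText` name and the `en-GB` default voice language are assumptions; the synthesizer and utterance constructor are injectable so the function can also be exercised outside a browser.

```javascript
// Speak the English meaning aloud. Returns false when speech synthesis is
// unavailable (e.g. outside a browser), true once the utterance is queued.
function speakText(
  text,
  {
    synth = globalThis.speechSynthesis,
    Utterance = globalThis.SpeechSynthesisUtterance,
    lang = "en-GB", // assumption: a British English voice, since Makaton originated in the UK
  } = {}
) {
  if (!synth || !Utterance || !text) return false;
  synth.cancel(); // stop anything that is still being read out
  const utterance = new Utterance(text);
  utterance.lang = lang;
  synth.speak(utterance);
  return true;
}
```

In the page, a **Speak** button handler would simply call `speakText(meaningElement.textContent)`.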

---

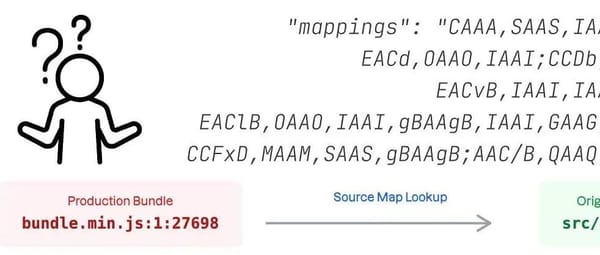

## Mapping Logic

Use a simple keyword dictionary (`mapping.js`) linking AI descriptions to Makaton meanings:

```javascript
{ keywords: ["open hand", "wave"], meaning: "Hello / Stop" }
```
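A description can match more than one entry, so it can help to rank candidates instead of stopping at the first hit. A minimal sketch, assuming entries shaped like the one above: it scores each entry by how many of its keywords appear in the description and returns the matches best first. The `rankMeanings` name and the second entry are illustrative placeholders, not taken from the project's `mapping.js`.

```javascript
// Entries in the same shape as the mapping.js example. Only the first entry
// comes from the article; the second is an illustrative placeholder.
const DICTIONARY = [
  { keywords: ["open hand", "wave"], meaning: "Hello / Stop" },
  { keywords: ["thumbs up", "thumb"], meaning: "Good" },
];

// Score every entry by how many of its keywords occur in the description,
// drop entries with no hits, and return the rest with the best match first.
function rankMeanings(description, dictionary = DICTIONARY) {
  const text = description.toLowerCase();
  return dictionary
    .map(({ keywords, meaning }) => ({
      meaning,
      score: keywords.filter((k) => text.includes(k.toLowerCase())).length,
    }))
    .filter((m) => m.score > 0)
    .sort((a, b) => b.score - a.score);
}

// Both keywords of the first entry appear, so it is the only match.
console.log(rankMeanings("A person with an open hand, giving a wave"));
// → [{ meaning: "Hello / Stop", score: 2 }]
```

Plain substring matching is deliberately simple; it will also match "wave" inside "microwave", which is one reason to keep keywords as multi-word phrases where possible.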

---

## Local Server

Avoid `file://` CORS issues by running a local HTTP server:

```bash
python -m http.server 8080
```

Open [http://localhost:8080](http://localhost:8080) in **Chrome Canary**.

---

## Building the App Step by Step

### 1. Create Project Structure

```text
makaton-ai-companion/
├── index.html
├── styles.css
├── app.js
└── lib/
    ├── mapping.js
    └── ai.js
```

### 2. Basic HTML Interface

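The page lets the user upload an image, describe it, view the mapped meaning, and then speak or copy the result. Its two main controls are an **Upload Image** input and a **Describe** button.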

### 3. Mapping Descriptions

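A minimal version of the keyword search in `mapping.js` could look like this (the single dictionary entry is the example from the Mapping Logic section):

```javascript
// Keyword dictionary (hypothetical name); keywords are stored in lowercase.
const MAPPINGS = [{ keywords: ["open hand", "wave"], meaning: "Hello / Stop" }];

// Return the meaning of the first entry whose keyword appears in the description.
export function mapDescriptionToMeaning(desc) {
  const text = String(desc ?? "").toLowerCase();
  const entry = MAPPINGS.find((e) => e.keywords.some((k) => text.includes(k)));
  return entry ? entry.meaning : null; // null when nothing matches
}
```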

### 4. AI Logic

`ai.js` handles:

- Checking Gemini Nano availability.

- Listing and ranking Gemini API models.

- Fallback to manual description.
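The model-listing part of `ai.js` can be sketched as follows. This assumes the public `v1beta` ListModels REST endpoint, whose response contains a `models` array with `name` and `supportedGenerationMethods` fields; `listModels`, `rankModels`, and the "flash first, then pro" preference are hypothetical, not the project's confirmed rules.

```javascript
const MODELS_URL = "https://generativelanguage.googleapis.com/v1beta/models";

// Fetch the models this API key can use (first page only, for brevity).
async function listModels(apiKey) {
  const res = await fetch(`${MODELS_URL}?key=${encodeURIComponent(apiKey)}`);
  if (!res.ok) throw new Error(`ListModels failed: HTTP ${res.status}`);
  const body = await res.json();
  return body.models ?? [];
}

// Keep models that support generateContent and order them by a simple,
// hypothetical preference: "flash" variants first, then "pro", then the rest.
function rankModels(models) {
  const score = (name) => (name.includes("flash") ? 2 : name.includes("pro") ? 1 : 0);
  return models
    .filter((m) => (m.supportedGenerationMethods ?? []).includes("generateContent"))
    .map((m) => m.name.replace(/^models\//, "")) // "models/gemini-1.5-flash" -> "gemini-1.5-flash"
    .sort((a, b) => score(b) - score(a));
}
```

In the app, `rankModels(await listModels(apiKey))` would give the candidate model names in the order to try them.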

### 5. Main App Script

`app.js` integrates:

- File handling (upload and drag-and-drop)

- AI description (on-device or cloud)

- Mapping to meaning

- Speak and copy functionality
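For the cloud path, the uploaded file has to be sent to the Gemini API as base64 text. A minimal sketch of that conversion, using a hypothetical helper name `fileToBase64`; it relies only on `Blob.arrayBuffer()` and `btoa()`, so it accepts a `File` from an `<input type="file">` or from a drop event.

```javascript
// Convert a File/Blob into { mimeType, data } with base64-encoded data,
// the shape needed for an inline image part in a Gemini API request.
async function fileToBase64(file) {
  const bytes = new Uint8Array(await file.arrayBuffer());
  let binary = "";
  // Build the binary string in chunks to avoid argument-count limits on large images.
  const CHUNK = 0x8000;
  for (let i = 0; i < bytes.length; i += CHUNK) {
    binary += String.fromCharCode(...bytes.subarray(i, i + CHUNK));
  }
  return { mimeType: file.type || "application/octet-stream", data: btoa(binary) };
}
```

The same helper can serve both the file input's `change` event (`input.files[0]`) and a drop zone's `drop` event (`event.dataTransfer.files[0]`).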

---

## How to Fix Common Issues

### 1. CORS Errors

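**Problem:** Opening `index.html` directly via `file://` makes the browser block the JavaScript module imports under its CORS rules.

**Fix:** Serve the folder over HTTP with a local server (`python -m http.server 8080`).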

### 2. Model Not Found (404)

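**Problem:** A hard-coded Gemini model name that is not available to your key (for example, a retired model) makes the API return a 404.

**Fix:** List the available models at runtime through the API, rank them, and use the best match instead of a fixed name.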
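One way to make this fix robust, assuming the model names have already been listed and ranked, is to try them in order and skip any that return 404. `generateWithFallback` and the `callModel` callback are hypothetical names, and treating only 404 as "try the next model" is a design choice, not documented project behavior.

```javascript
// Try each ranked model in turn. `callModel(name)` is any function that
// performs the request and resolves to a fetch-style Response.
// A 404 means "this model is not available", so move on to the next one;
// any other failure is reported immediately.
async function generateWithFallback(modelNames, callModel) {
  for (const name of modelNames) {
    const res = await callModel(name);
    if (res.status === 404) continue; // model not found: try the next one
    if (!res.ok) throw new Error(`${name} failed: HTTP ${res.status}`);
    return { model: name, body: await res.json() };
  }
  throw new Error("No listed model accepted the request");
}
```

Stopping on other errors (such as 400 or 403) avoids burning through every model when the real problem is a bad request or an invalid key.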

### 3. Packaging for Local Use

Bundle the project files into a ZIP. Because the app is plain HTML, CSS, and ES modules, it can be unzipped and served locally with no build tools.

### 4. Missing Exports

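**Problem:** An `import` names something the target module does not export (or spells it differently), so the module fails to load with a `SyntaxError`.

**Fix:** Make import and export names match exactly, including case.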

---

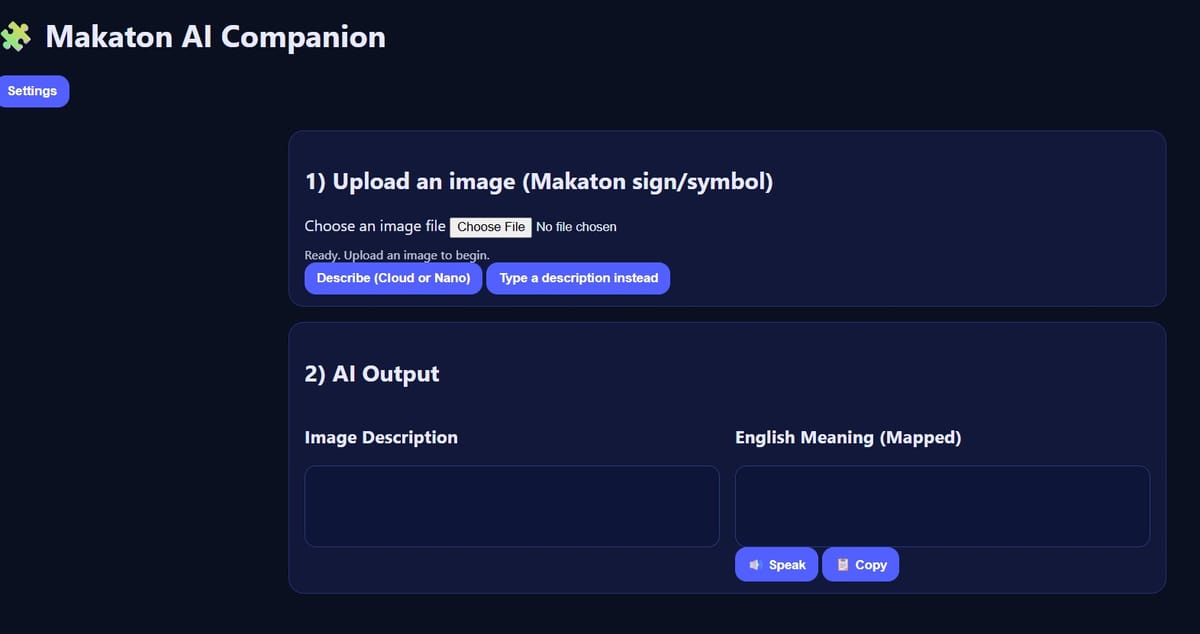

## Demo: The Makaton AI Companion in Action

### Step 1: Run Locally

```bash
python -m http.server 8080
```

Open `http://localhost:8080`.

### Step 2: Set API Key

Generate a key in [Google AI Studio](https://aistudio.google.com/welcome).

Paste it into the app's Settings modal and save it.

### Step 3: Enable Gemini Nano

**Chrome Canary**: Enable `chrome://flags/#prompt-api-for-gemini-nano`

Download model via `chrome://components`.
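Once the flag is on and the model is downloaded, the app can check whether Gemini Nano is actually usable. A minimal sketch, assuming the Prompt API is exposed as a global `LanguageModel` with an async `availability()` method (its surface has changed between Chrome releases, so older Canary builds may differ); `checkGeminiNano` is a hypothetical name.

```javascript
// Report whether on-device Gemini Nano can be used right now.
// Assumption: the Prompt API is a global `LanguageModel` with an async
// availability() method; older Canary builds used a different surface.
async function checkGeminiNano(languageModel = globalThis.LanguageModel) {
  if (!languageModel || typeof languageModel.availability !== "function") {
    return "unsupported"; // flag not enabled, or the browser lacks the Prompt API
  }
  try {
    // Expected values include "available", "downloadable", "downloading", "unavailable".
    return await languageModel.availability();
  } catch {
    return "unavailable";
  }
}
```

Treating anything other than `"available"` as "use the Gemini API for now" keeps the app working while the on-device model is still downloading.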

### Step 4: Upload Image & Describe
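Behind the **Describe** button, the cloud path sends the image to the Gemini API. A minimal sketch of that request, assuming the `v1beta` `generateContent` REST endpoint with the key passed as a `key` query parameter, and an image already converted to `{ mimeType, data }` base64 form; `buildDescribeRequest`, `extractText`, and the prompt wording are hypothetical.

```javascript
// Build a Gemini API generateContent request that asks for a short visual
// description of an image. `image` is { mimeType, data } with base64 data.
// The prompt wording is a hypothetical example.
function buildDescribeRequest(model, apiKey, image) {
  const url =
    `https://generativelanguage.googleapis.com/v1beta/models/${model}:generateContent` +
    `?key=${encodeURIComponent(apiKey)}`;
  const body = {
    contents: [
      {
        parts: [
          { text: "Describe the hand shape, movement or symbol in this image in one sentence." },
          { inline_data: { mime_type: image.mimeType, data: image.data } },
        ],
      },
    ],
  };
  return {
    url,
    init: { method: "POST", headers: { "Content-Type": "application/json" }, body: JSON.stringify(body) },
  };
}

// Pull the generated text out of a generateContent response ("" if there is none).
function extractText(response) {
  const parts = response?.candidates?.[0]?.content?.parts ?? [];
  return parts.map((p) => p.text ?? "").join("").trim();
}
```

A caller would run `fetch(req.url, req.init)`, pass the parsed JSON to `extractText`, and feed the resulting description into the keyword mapping.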

### Step 5: AI Output

- Visual description → keyword match → English meaning.

- Speak or copy results.
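The copy action can be sketched with the Clipboard API. This is a minimal sketch; `copyResult` and the copied text format are hypothetical. `navigator.clipboard` requires a secure context, and `http://localhost` counts as one, so it works with the local server.

```javascript
// Copy the meaning (plus the AI description for context) to the clipboard.
// Returns false when the Clipboard API is unavailable, true once written.
async function copyResult(description, meaning, clipboard = globalThis.navigator?.clipboard) {
  if (!clipboard) return false;
  const text = `${meaning ?? "No match found"}\n(AI description: ${description})`;
  await clipboard.writeText(text);
  return true;
}
```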

---

## Broader Reflections

This project shows how **computer vision + NLP** can support communication for Makaton users.

The image → description → mapped meaning pipeline links perceptual understanding (what the image shows) with semantic understanding (what the sign or symbol means).

---

## Conclusion

The **Makaton AI Companion** demonstrates that AI accessibility tools can be:

- Lightweight

- Privacy‑preserving

- Educationally impactful

Future improvements:

- Live camera gesture recognition

- Expand mapping dictionary

- Support multiple symbol systems

---

## Join the Conversation

Try adapting this for live webcam recognition or other symbol sets.

Source code: [GitHub – Makaton AI Companion](https://github.com/tayo4christ/makaton-ai-companion)

---