Catching Up with GPT-5 for $4.6M? The Kimi Team Responds for the First Time, with Yang Zhilin Joining In

Kimi K2 Thinking: Insights from the AMA

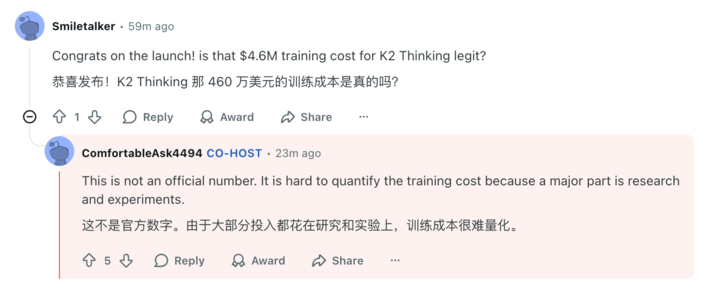

Last week, Kimi K2 Thinking, an open-source model, made waves by outperforming models from OpenAI and Anthropic on several benchmarks. The release drew praise across social media, and our own tests confirmed significant progress in AI agents, coding, and writing.

Just days later, the Kimi team, led by founder Yang Zhilin, hosted a highly informative AMA on Reddit.

▲ Co-founders Yang Zhilin, Zhou Xinyu, and Wu Yuxin in AMA discussions.

The AMA covered:

- Early hints about the next-generation K3 model

- Technical details on the KDA attention mechanism

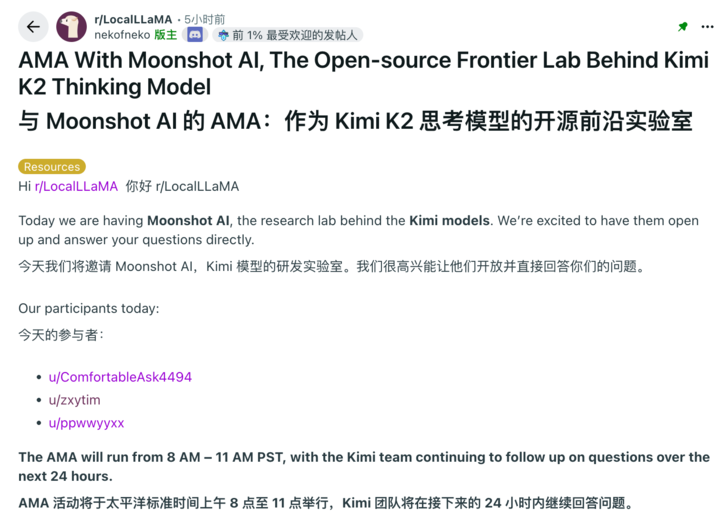

- Clarification on the rumored $4.6M training cost

- How their approach differs from OpenAI’s

---

Quick Highlights

- $4.6M cost rumor: Not official; training costs are hard to quantify.

- K3 release timing: Answered with a joke about Altman’s trillion-dollar data center.

- KDA attention: Will continue powering K3.

- Vision models: Data collection already in progress.

---

Challenging OpenAI: “We Have Our Own Pace”

One of the spicier moments came when the team was asked when K3 would arrive, and answered with a jab at OpenAI:

> “Before Altman’s trillion-dollar data center finishes construction.”

Humorous? Yes. But also a subtle boast about matching GPT-5-like abilities with less hardware.

On OpenAI’s massive training spend, Kimi responded:

> “We don’t know, only Altman himself knows.”

> “We have our own way and pace.”

That “pace” reflects their product philosophy:

> No AI browser clones: focus on building better models, not browser wrappers.

---

Training Costs & Hardware

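- Rumored $4.6M cost: Not an official figure. Most of the spending goes into research and experiments, which makes training costs hard to pin down.

- Hardware: H800 GPUs with InfiniBand networking; the team focuses on getting the most out of a comparatively limited number of GPUs.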

---

Personality & the “AI Slop” Debate

Kimi K2 Instruct stands out as less sycophantic, more insightful, and stylistically distinctive, which the team attributes to combining knowledge-rich pre-training with style-focused post-training.

▲ EQ-Bench creative writing ranking: https://eqbench.com/creative_writing.html

Criticism:

K2 Thinking sometimes defaults to overly positive “AI slop” responses, steering even violent prompts toward optimism.

Reflection:

The team acknowledged that this is a common problem and that the reward models used in RL often amplify it.

---

Benchmarks vs. Real-World Intelligence

Some users suspect K2 Thinking was tuned to score well on HLE (Humanity’s Last Exam), given the gap between its benchmark scores and its everyday performance.

Kimi’s explanation:

- Modest gains in autonomous reasoning happened to translate into a high HLE score.

- Focus going forward: boost real-world general intelligence, not just benchmark optimization.

---

NSFW Content?

While some suggested leveraging Kimi’s writing skills for NSFW text, the team currently has no plans — citing the need for age verification and better alignment.

---

Core Technology — KDA, Long-Chain Reasoning, & Multimodality

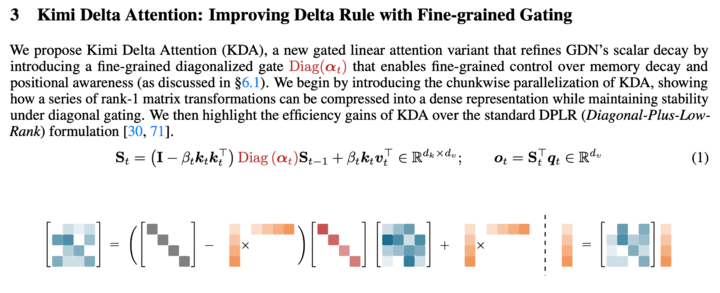

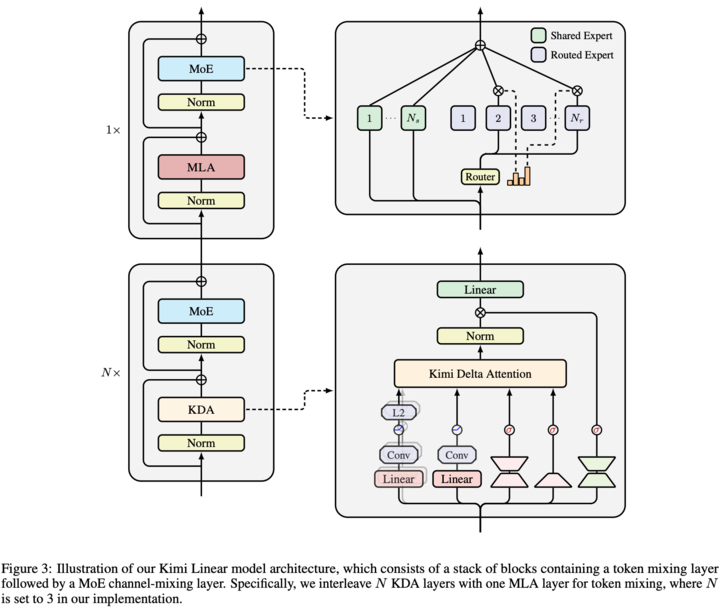

Kimi’s October paper, “Kimi Linear: An Expressive, Efficient Attention Architecture,” introduced Kimi Delta Attention (KDA).

▲ Paper: https://arxiv.org/pdf/2510.26692

KDA Advantages:

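- More expressive and more efficient linear attention, used in a hybrid architecture alongside full-attention layers

- Benefits for reinforcement learning on long sequences

- Will be used in K3 to cut costs, though full attention remains stronger on tasks with very long inputs and outputs.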
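The bullets above stay high-level, so here is a minimal sketch of the kind of recurrence a delta-rule attention layer computes. As we read the Kimi Linear paper, KDA refines the gated delta rule with a per-channel forget gate; the sketch follows that idea but is not the paper's implementation. The helper name `kda_step`, the update order, and the toy 2x2 state are assumptions, and the real layer uses a chunked, hardware-efficient kernel plus normalization and output gating that are omitted here. The property it illustrates is that memory lives in a fixed-size state matrix rather than in a KV cache that grows with every token.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # state S: d_k rows (key dims) x d_v columns (value dims)


def kda_step(S: Matrix, q: Vector, k: Vector, v: Vector,
             alpha: Vector, beta: float) -> Tuple[Matrix, Vector]:
    """One step of a simplified, KDA-style gated delta rule (hypothetical helper).

    S_t = (I - beta k k^T) Diag(alpha) S_{t-1} + beta k v^T,   o_t = S_t^T q
    """
    d_k, d_v = len(S), len(S[0])
    # 1. Channel-wise forgetting: row i of the state decays by alpha[i] (0 = forget, 1 = keep).
    decayed = [[alpha[i] * S[i][j] for j in range(d_v)] for i in range(d_k)]
    # 2. What the decayed memory currently returns for key k, i.e. k^T S.
    recalled = [sum(k[i] * decayed[i][j] for i in range(d_k)) for j in range(d_v)]
    # 3. Delta rule: nudge the value stored under k toward v with step size beta.
    #    Algebraically identical to the formula above: D + beta * k (v - k^T D)^T.
    new_S = [[decayed[i][j] + beta * k[i] * (v[j] - recalled[j]) for j in range(d_v)]
             for i in range(d_k)]
    # 4. Read out with the query: o = S^T q.
    out = [sum(q[i] * new_S[i][j] for i in range(d_k)) for j in range(d_v)]
    return new_S, out


# With a unit-norm key, beta = 1 and no decay, a write stores v exactly...
S = [[0.0, 0.0], [0.0, 0.0]]
S, o = kda_step(S, q=[1.0, 0.0], k=[1.0, 0.0], v=[3.0, -2.0], alpha=[1.0, 1.0], beta=1.0)
print(o)  # [3.0, -2.0]
# ...and a second write to the same key replaces the old value instead of adding to it.
S, o = kda_step(S, q=[1.0, 0.0], k=[1.0, 0.0], v=[5.0, 5.0], alpha=[1.0, 1.0], beta=1.0)
print(o)  # [5.0, 5.0]
```

Plain linear attention would simply add k v^T on every write, so the second read would return [8.0, 3.0]; the delta rule's correction term is what lets the layer overwrite stale associations, and the gate `alpha` lets it forget selectively per channel.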

---

Ultra-Long Reasoning Chains

K2 Thinking can chain up to 300 tool calls in a single reasoning session, reportedly rivaling GPT-5 Pro.
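For a rough sense of what “chaining” tool calls means: the model alternates between reasoning and tool use within one session, feeding each tool result back into its context until it can answer. The sketch below is a generic agent loop, not Kimi's API; the `Step` format, `run_agent`, and the scripted `toy_model` are hypothetical stand-ins, and only the 300-call budget comes from the article.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

MAX_TOOL_CALLS = 300  # budget figure from the article; everything else is hypothetical


@dataclass
class Step:
    """One model turn: either a tool request or a final answer (hypothetical format)."""
    thought: str
    tool: Optional[str] = None
    arg: str = ""
    answer: Optional[str] = None


def run_agent(model: Callable[[List[str]], Step],
              tools: Dict[str, Callable[[str], str]],
              task: str,
              max_calls: int = MAX_TOOL_CALLS) -> str:
    """Alternate model reasoning and tool execution until an answer or the call budget."""
    transcript = [f"task: {task}"]
    calls = 0
    while True:
        step = model(transcript)              # the model sees everything so far
        transcript.append(f"thought: {step.thought}")
        if step.answer is not None:
            return step.answer
        if calls == max_calls:
            return "stopped: tool-call budget exhausted"
        result = tools[step.tool](step.arg)   # run the requested tool
        transcript.append(f"{step.tool}({step.arg}) -> {result}")  # feed the result back
        calls += 1


def toy_model(transcript: List[str]) -> Step:
    """Scripted stand-in for an LLM: call add_one until the running value reaches 3."""
    last = transcript[-1]
    value = int(last.rsplit("-> ", 1)[1]) if "->" in last else 0
    if value >= 3:
        return Step(thought="done", answer=str(value))
    return Step(thought=f"value is {value}", tool="add_one", arg=str(value))


tools = {"add_one": lambda s: str(int(s) + 1)}
print(run_agent(toy_model, tools, "count to 3"))  # 3
```

The transcript grows with every call, which is why long chains are as much a context-length and inference-cost problem as a reasoning problem.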

What makes such long chains possible?

- More “thinking tokens” per step

- Native support for INT4 quantization, enabling:

  - Faster inference

  - Less reasoning degradation than compressing the model after training
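To make “native INT4” concrete: INT4 stores each weight as a 4-bit integer plus a shared floating-point scale for each group of weights. The sketch below shows plain symmetric, per-group round-to-nearest quantization; the group size of 32 and the function names are assumptions for illustration. As we understand it, “native” support means the model is trained with this rounding in the loop (quantization-aware training) instead of being compressed afterwards, which is where the smaller reasoning degradation comes from; that training step is not shown.

```python
from typing import List, Tuple

GROUP_SIZE = 32  # assumed group size, for illustration only


def quantize_int4(weights: List[float],
                  group_size: int = GROUP_SIZE) -> Tuple[List[int], List[float]]:
    """Symmetric per-group round-to-nearest quantization to 4-bit integers in [-8, 7]."""
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        max_abs = max(abs(w) for w in group)
        # One float scale per group maps the largest magnitude to +/-7.
        scale = max_abs / 7 if max_abs > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-8, min(7, round(w / scale))) for w in group)
    return codes, scales


def dequantize_int4(codes: List[int], scales: List[float],
                    group_size: int = GROUP_SIZE) -> List[float]:
    """Recover approximate weights: each code times its group's scale."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


codes, scales = quantize_int4([3.5, -1.0, 0.3, 0.0], group_size=4)
print(codes, scales)                                 # [7, -2, 1, 0] [0.5]
print(dequantize_int4(codes, scales, group_size=4))  # [3.5, -1.0, 0.5, 0.0]
```

In the usage lines, 0.3 comes back as 0.5. That rounding error is what quantization-aware training teaches the model to tolerate, in exchange for weights that take 4 bits instead of 16 (BF16), plus a small per-group overhead for the scale.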

---

Vision-Language Models: Work in progress. Text-only models came first because collecting vision data takes longer and resources are limited.

---

Ecosystem, Costs, & Context Length

Why No 1M-Context Models?

> Cost too high.

256K context: The team plans to extend it so the model can handle large codebases.
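A back-of-the-envelope estimate shows why a 4x longer context costs far more than 4x. Assuming standard full attention, treating 256K as 2^18 tokens and 1M as 2^20 tokens, and ignoring the MLP layers (whose cost grows only linearly), attention-score computation grows with the square of the sequence length while the KV cache grows linearly:

```latex
\frac{\text{attention compute}(2^{20})}{\text{attention compute}(2^{18})}
  \approx \left(\frac{2^{20}}{2^{18}}\right)^{2} = 16,
\qquad
\frac{\text{KV cache}(2^{20})}{\text{KV cache}(2^{18})} = \frac{2^{20}}{2^{18}} = 4
```

This rough scaling is also why linear-attention layers such as KDA, whose per-token cost stays constant, are attractive for long contexts.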

---

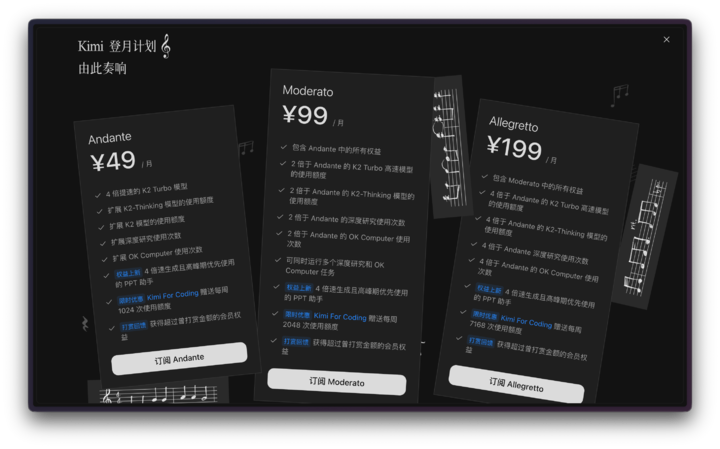

API Pricing Controversy

Some developers dislike billing per API call instead of per token, saying it makes costs harder to predict.

Kimi explained:

- Counting API calls makes costs easier for average users to understand

- But they’re open to exploring other billing methods.
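A toy comparison shows why the two billing schemes can feel so different in practice. All prices and workloads below are hypothetical, chosen only to keep the arithmetic simple; they are not Kimi's rates.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical prices for illustration only; these are NOT Kimi's actual rates.
PRICE_PER_CALL = 0.01           # currency units per API call
PRICE_PER_MILLION_TOKENS = 2.0  # currency units per 1M tokens (input + output)


@dataclass
class Call:
    input_tokens: int
    output_tokens: int


def per_call_bill(calls: List[Call]) -> float:
    """Flat price per request, regardless of how many tokens it uses."""
    return len(calls) * PRICE_PER_CALL


def per_token_bill(calls: List[Call]) -> float:
    """Price proportional to the total number of tokens processed."""
    tokens = sum(c.input_tokens + c.output_tokens for c in calls)
    return tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS


# Hypothetical workloads: a short chat vs. an agent that resends a large context on every call.
chat = [Call(800, 200)] * 5
agent = [Call(19_000, 1_000)] * 50

for name, calls in (("chat", chat), ("agent", agent)):
    print(f"{name}: per-call {per_call_bill(calls):.2f}, per-token {per_token_bill(calls):.2f}")
# chat: per-call 0.05, per-token 0.01
# agent: per-call 0.50, per-token 2.00
```

The per-call bill tracks how many requests a tool makes, while the per-token bill tracks how much text it sends, so the same workload can look cheap under one scheme and expensive under the other.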

---

Commitment to Open Source

> We embrace open source because general AI should unite, not divide.

---

AGI Arrival?

Kimi says AGI is hard to define, but people are starting to feel it, and more powerful models are on the way.

---

From Aggressive Marketing to Steady Pace

The AMA showed Kimi is more confident and measured — committed to open-source innovation as their chosen path in the global AI race.

---

Bottom Line:

Kimi’s AMA pulled back the curtain on technology, philosophy, and pace — reaffirming that in AI’s high-cost, hypercompetitive race, open-source collaboration remains their north star.