China’s Only Winner: Alibaba Qwen Wins Best Paper at Top Global AI Conference

November 28 News: Tongyi Qianwen Wins NeurIPS 2025 Best Paper Award

At NeurIPS 2025, one of the world’s most prestigious AI conferences, Alibaba’s Tongyi Qianwen team won a Best Paper Award, standing out among more than 20,000 submissions as the only winning team from China.

The paper is the first in the industry to reveal how the attention gating mechanism affects the performance and training of large models. Experts see it as a major step toward overcoming current bottlenecks in large model training.

---

Significance of NeurIPS

NeurIPS is among the most influential AI conferences globally, and landmark innovations such as the Transformer and AlexNet were first presented there.

This year’s stats:

- 20,000+ submissions from leading institutions: Google, Microsoft, OpenAI, Alibaba, MIT, and more

- Acceptance rate: ~25%

- Best Paper Awards: only 4 papers selected (fewer than 0.02% of submissions)

---

Background: Transformer & Attention Limitations

In 2017, a Google paper at NeurIPS introduced the Transformer architecture, built on self-attention, which lets a model focus on key information and is fundamental to modern large model research.

Despite significant advancements, current attention mechanisms have drawbacks:

- Over-focusing on certain details while ignoring other critical information

- Performance degradation in broader tasks

- Instability during training

---

What Is Gating in Attention?

The gating mechanism is often described as an AI model’s “intelligent valve”. It:

- Filters irrelevant information

- Enhances overall performance

Previous Attempts

- Gating has been applied in models such as AlphaFold2 and the Forgetting Transformer

- Until now, it lacked a clear theoretical explanation and large-scale experimental evidence

---

Tongyi Qianwen’s Contribution

The Tongyi Qianwen team:

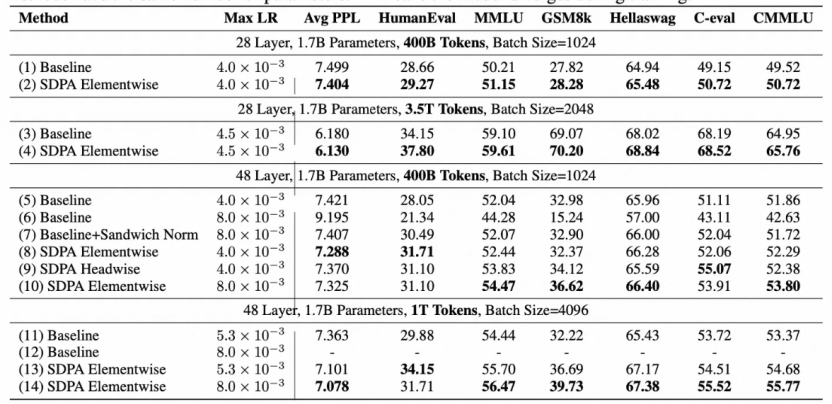

- Ran dozens of experiments on:

  - A 1.7B dense model

  - A 15B Mixture-of-Experts (MoE) model

- Trained on up to 3.5 trillion tokens in a single experiment

Key discovery:

- Gating the output of each attention head yields the highest performance improvement
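
The idea of gating each head's output can be sketched in a few lines. The example below is a minimal, pure-Python illustration and not the team's implementation: it assumes a sigmoid gate computed from the same token representation that feeds the attention layer, applied elementwise to one head's output. The function `attention_head`, the gate weights `Wg`, and the toy dimensions are all hypothetical names and values chosen for illustration.

```python
import math
import random


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    # Stable form of 1 / (1 + exp(-x))
    return 0.5 * (1.0 + math.tanh(x / 2.0))


def matvec(v, W):
    """Row vector v (length d_in) times matrix W (d_in x d_out)."""
    return [sum(v[i] * W[i][j] for i in range(len(v))) for j in range(len(W[0]))]


def attention_head(X, Wq, Wk, Wv, Wg=None):
    """Causal scaled dot-product attention for a single head.

    If Wg is given, the head output for each token is multiplied
    elementwise by sigmoid(x @ Wg) (assumed gate form).
    """
    Q = [matvec(x, Wq) for x in X]
    K = [matvec(x, Wk) for x in X]
    V = [matvec(x, Wv) for x in X]
    d = len(Q[0])
    outputs = []
    for t, q in enumerate(Q):
        # Token t attends only to positions 0..t
        scores = [sum(a * b for a, b in zip(q, K[s])) / math.sqrt(d)
                  for s in range(t + 1)]
        weights = softmax(scores)
        y = [sum(weights[s] * V[s][j] for s in range(t + 1)) for j in range(d)]
        if Wg is not None:
            gate = [sigmoid(g) for g in matvec(X[t], Wg)]
            y = [g * yi for g, yi in zip(gate, y)]
        outputs.append(y)
    return outputs


def random_matrix(rows, cols):
    return [[random.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d_model, d_head, seq_len = 8, 4, 5  # toy sizes, not the paper's
X = random_matrix(seq_len, d_model)
Wq, Wk, Wv, Wg = (random_matrix(d_model, d_head) for _ in range(4))

plain = attention_head(X, Wq, Wk, Wv)
gated = attention_head(X, Wq, Wk, Wv, Wg)
print(plain[-1])
print(gated[-1])
```

Because the sigmoid gate lies strictly between 0 and 1, each head can scale down parts of its own output for each token, which matches the “valve” description above. In this sketch the only new parameters are the gate weights `Wg`.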

Results:

- Only 1% increase in parameter count

- Perplexity reduction: >0.2

- MMLU benchmark improvement: +2 points

- Even more effective at larger scales
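
For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood a model assigns to each token, so lower is better. The sketch below shows that calculation. The article does not say how the team measured perplexity, and the per-token probabilities here are made-up values for illustration only.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood (natural log) per token."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Hypothetical probabilities two models assign to the same four tokens
baseline = perplexity([math.log(p) for p in (0.25, 0.10, 0.50, 0.20)])
improved = perplexity([math.log(p) for p in (0.30, 0.12, 0.50, 0.22)])

print(f"baseline: {baseline:.3f}, improved: {improved:.3f}")
print(f"reduction: {baseline - improved:.3f}")
```

A model that assigns higher probability to the correct tokens gets a lower perplexity, which is the direction of improvement reported above.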

---

Real-World Impact

- Implemented in the Qwen3-Next model, where it improved performance and robustness

- The technical approach, experimental models, and product-level models have all been open-sourced

- NeurIPS reviewers:

  - “This work will see broad application and greatly enhance understanding of attention in LLMs.”

---

Team Statement

> “A deep understanding of gated attention mechanisms not only guides large language model architecture design but also lays the foundation for building more stable, efficient, and controllable large models.”

---

Qianwen Project’s Open-Source Achievements

- 300+ models open-sourced across all modalities and sizes

- 700M+ downloads globally

- 180,000+ derivative models created

- Ranked #1 worldwide on these measures

