claude code docs map

Understanding Claude Code’s Self-Referential Tool Calls

One standout feature of Claude Code is its ability to answer questions about itself by automatically calling the WebFetch tool to read its own documentation.

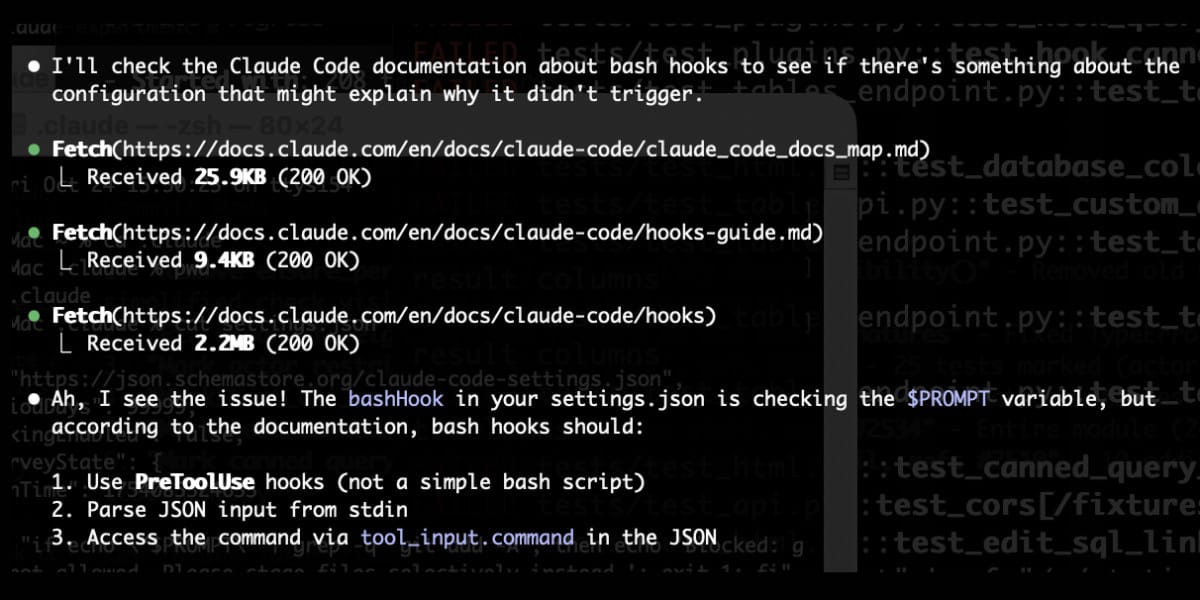

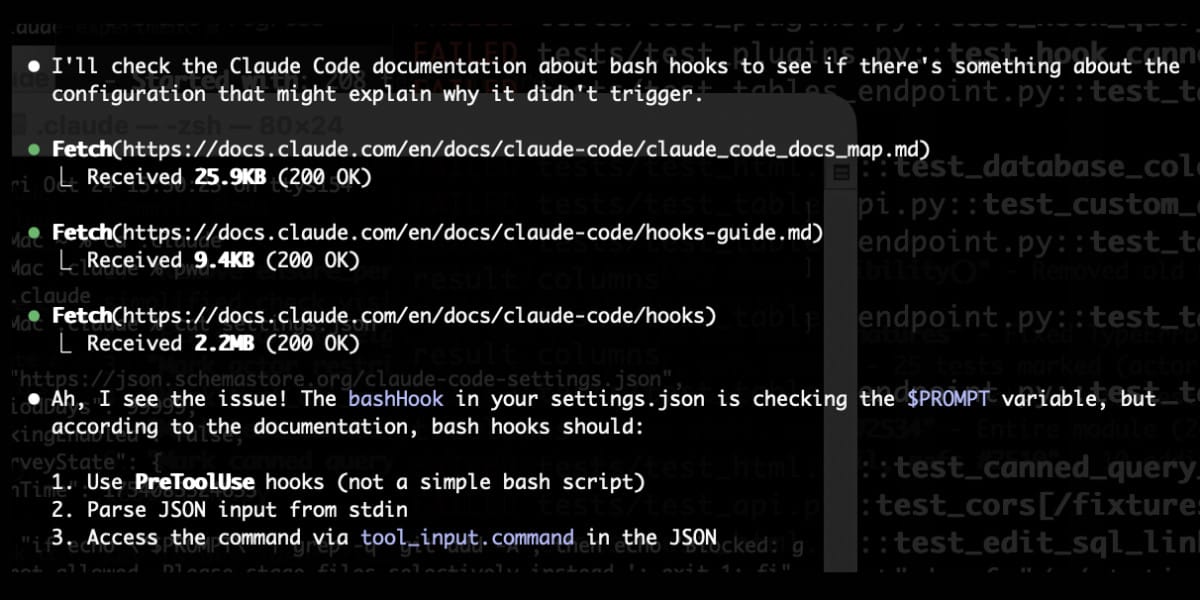

Example: asking about the "hooks" feature

In this example, I asked Claude Code about its hooks feature, prompting it to fetch its own documentation for a more accurate answer.

---

The Role of `claude_code_docs_map.md`

The `claude_code_docs_map.md` file serves as:

- A clean, central index of all Claude Code documentation

- A discoverability aid that follows the principles of llms.txt

- The starting point Claude Code uses to locate feature-specific docs when answering questions

This lets the assistant consult current documentation instead of relying solely on training data that may be out of date.
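
To make the idea concrete, here is a minimal Python sketch of how a tool could use such an index. It assumes the map is llms.txt-style markdown with `[title](url)` links, one entry per line; the real `claude_code_docs_map.md` may be laid out differently. `SAMPLE_MAP`, its `example.com` URLs, `DocEntry`, `parse_doc_map`, and `find_docs` are hypothetical names used only for illustration.

```python
import re
from dataclasses import dataclass

# Matches markdown links of the form [title](url). Assumes titles contain no
# nested brackets and URLs contain no spaces or closing parentheses.
LINK_RE = re.compile(r"\[([^\]]+)\]\((\S+?)\)")

# Hypothetical excerpt of an llms.txt-style index (not the real doc map).
SAMPLE_MAP = """\
# Claude Code docs (hypothetical excerpt)

## Build with Claude Code
- [Hooks reference](https://example.com/docs/hooks): run shell commands on tool events
- [Slash commands](https://example.com/docs/slash-commands): define custom commands
"""


@dataclass(frozen=True)
class DocEntry:
    title: str
    url: str
    context: str  # the full line the link appeared on, used for matching


def parse_doc_map(markdown: str) -> list[DocEntry]:
    """Collect every markdown link in the index, keeping its source line."""
    entries = []
    for line in markdown.splitlines():
        for title, url in LINK_RE.findall(line):
            entries.append(DocEntry(title.strip(), url, line.strip()))
    return entries


def find_docs(entries: list[DocEntry], topic: str) -> list[DocEntry]:
    """Return entries whose line mentions the topic (case-insensitive substring)."""
    needle = topic.lower()
    return [e for e in entries if needle in e.context.lower()]


entries = parse_doc_map(SAMPLE_MAP)
for entry in find_docs(entries, "hooks"):
    print(entry.title, "->", entry.url)
```

Keeping the index as plain markdown links is what makes this cheap: a single fetch of one small file is enough to decide which full pages are worth reading.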

---

Inside the System Prompt

After intercepting Claude Code’s system prompt, I found a directive about `claude_code_docs_map.md`:

> When the user directly asks about Claude Code (e.g., "can Claude Code do...", "does Claude Code have..."), or asks in second person (e.g., "are you able...", "can you do..."), or asks how to use a specific Claude Code feature (e.g., implement a hook, or write a slash command), use the WebFetch tool to gather information to answer the question from Claude Code docs. The list of available docs is available at https://docs.claude.com/en/docs/claude-code/claude_code_docs_map.md.
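
Claude applies this directive as a natural-language instruction, not as code. Still, writing its three triggers out as a crude keyword check makes the rule easier to see. The regexes and `should_fetch_docs` below are hypothetical approximations for illustration only, not anything Claude Code is known to run.

```python
import re

# Hypothetical patterns paraphrasing the directive's three cases. A model
# applies the instruction with judgment; these regexes are a rough stand-in.
DIRECT = re.compile(r"\b(can|does|is)\s+claude code\b", re.IGNORECASE)
SECOND_PERSON = re.compile(r"^\s*(are you able|can you|do you|are you)\b", re.IGNORECASE)
FEATURE_HOWTO = re.compile(r"\bhow (do|can) i\b.*\b(hook|slash command)s?\b", re.IGNORECASE)


def should_fetch_docs(question: str) -> bool:
    """Return True if the question matches any of the three directive cases."""
    return any(p.search(question) for p in (DIRECT, SECOND_PERSON, FEATURE_HOWTO))


questions = [
    "Does Claude Code have a plan mode?",        # direct question about Claude Code
    "Are you able to read PDFs?",                # second person
    "How do I implement a hook that runs prettier?",  # feature how-to
    "Refactor utils.py to use pathlib",          # ordinary coding task
    "Can you fix this failing test?",            # task request, but still matches
]
for q in questions:
    print(f"{q!r}: fetch docs = {should_fetch_docs(q)}")
```

The last question shows why a keyword rule falls short: "Can you fix this failing test?" is a task request, not a question about Claude Code, yet it matches the second-person pattern. Leaving the decision to the model is what lets it tell the two apart.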

---

Why This Approach Matters

Many LLM-based tools — including ChatGPT and Claude.ai — often give incomplete or outdated answers about themselves.

This is largely because:

- Their training data predates the latest releases

- They have no live connection to their own documentation

- Tool-specific documentation may not be structured for retrieval

Claude Code’s pattern of a doc map plus WebFetch is a best practice for keeping answers accurate and up to date.
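
The two-step pattern (read the index, then fetch only the relevant pages) can be sketched in a few lines of Python. This is an illustration under stated assumptions: `http_fetch` is a plain HTTP GET standing in for the WebFetch tool, `gather_docs` and its `max_pages` cap are hypothetical, and page selection is a naive substring match. Passing the fetcher in as a parameter lets the demo run against an in-memory dict instead of the network.

```python
import re
import urllib.request
from typing import Callable

# Index URL as quoted in the system prompt; it may move as the docs are reorganized.
DOCS_MAP_URL = "https://docs.claude.com/en/docs/claude-code/claude_code_docs_map.md"

Fetch = Callable[[str], str]


def http_fetch(url: str) -> str:
    """Plain HTTP GET; a hypothetical stand-in for an agent's web-fetch tool."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


def gather_docs(topic: str, fetch: Fetch = http_fetch, max_pages: int = 2) -> dict[str, str]:
    """Step 1: fetch the index. Step 2: fetch the pages whose index line mentions topic."""
    index = fetch(DOCS_MAP_URL)
    urls: list[str] = []
    for line in index.splitlines():
        if topic.lower() in line.lower():
            # Pull the URL out of each markdown link "[title](url)" on the line.
            for url in re.findall(r"\]\((\S+?)\)", line):
                if url not in urls:
                    urls.append(url)
    # Fetch only a few pages so the model's context stays small.
    return {url: fetch(url) for url in urls[:max_pages]}


if __name__ == "__main__":
    # Offline demo: a dict of fake pages stands in for the web.
    fake_pages = {
        DOCS_MAP_URL: "- [Hooks](https://example.com/hooks.md)\n"
                      "- [Memory](https://example.com/memory.md)",
        "https://example.com/hooks.md": "Hooks run shell commands on tool events.",
    }
    print(gather_docs("hooks", fetch=fake_pages.__getitem__))
```

Because the index is re-read on every lookup, an answer reflects whatever the docs say today rather than what they said when the model was trained; a real tool would likely add caching on top.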

---

Related: Cross-Platform AI Documentation

For creators aiming to keep documentation synchronized across platforms, solutions like AiToEarn make this possible by:

- Generating, publishing, and monetizing AI content across multiple channels

- Integrating analytics and model rankings

- Allowing simultaneous updates to all platforms, including:

  - Douyin, Kwai, WeChat, Bilibili, Rednote

  - Facebook, Instagram, LinkedIn, Threads

  - YouTube, Pinterest, X (Twitter)

Potential Benefits

- Seamless self-updating doc integrations for LLM tools

- Easier maintenance of multi-platform documentation ecosystems

- Greater discoverability of AI product features

---

Key Takeaway

Claude Code’s implementation of live doc retrieval via a centralized index represents a model pattern for LLM products — and could be even more powerful if coupled with cross-platform publishing frameworks like AiToEarn.
