Cloudflare Workflows Adds Python Support to Boost High-Reliability AI Pipelines

Cloudflare Workflows: Durable Multi‑Step Orchestration

Cloudflare Workflows is a durable execution engine for orchestrating multi‑step applications.

Workflows initially supported only TypeScript; the platform now also offers Python (beta), reflecting Python's role as the de facto language for data pipelines, AI/ML workflows, and data‑engineering automation.

---

Core Infrastructure

Workflows are built on:

- Workers — the serverless execution environment.

- Durable Objects — state persistence and coordination for long‑running processes.

Durable Objects ensure:

- State persistence

- Retry of individual failed steps

- Faster recovery without restarting the entire workflow
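To make "retry of individual failed steps" concrete, here is a minimal plain‑Python sketch. It assumes a toy helper, `run_step_with_retries`, which is hypothetical and not part of Cloudflare's SDK; in a real Workflow the engine applies retries for you, so this only models the behavior. A flaky step is retried with exponential backoff, while the step before it runs exactly once.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def run_step_with_retries(
    name: str,
    fn: Callable[[], Awaitable[T]],
    max_attempts: int = 3,
    base_delay: float = 0.01,
) -> T:
    """Hypothetical helper: retry one step with exponential backoff (not Cloudflare's API)."""
    for attempt in range(1, max_attempts + 1):
        try:
            return await fn()
        except Exception as exc:
            if attempt == max_attempts:
                # Out of attempts: surface the error for this step only.
                raise RuntimeError(f"step {name!r} failed after {attempt} attempts") from exc
            # Wait base_delay, 2x, 4x, ... before the next attempt.
            await asyncio.sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


calls = {"fetch": 0, "transform": 0}


async def fetch() -> list[int]:
    calls["fetch"] += 1
    return [1, 2, 3]


async def flaky_sum(data: list[int]) -> int:
    calls["transform"] += 1
    if calls["transform"] < 3:  # fail the first two attempts (simulated transient error)
        raise ConnectionError("transient failure")
    return sum(data)


async def pipeline() -> int:
    data = await run_step_with_retries("fetch", fetch)
    # Only the failing step is retried; "fetch" is not run again.
    return await run_step_with_retries("transform", lambda: flaky_sum(data))


result = asyncio.run(pipeline())
print(result, calls)  # prints: 6 {'fetch': 1, 'transform': 3}
```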

---

Understanding Workflow Steps

From Cloudflare’s introduction:

> A core building block of every Workflow is the step:

> A retriable component of your application that can optionally emit state.

> That state persists even if subsequent steps fail—allowing recovery without redundant work.
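The replay behavior quoted above can be modeled in a few lines. This is a sketch under stated assumptions: `StepStore` is a hypothetical in‑memory stand‑in for the durable storage a Workflow provides, and results are JSON‑encoded on the assumption that persisted step state must be serializable. After a failed first run, the second run replays the saved `download` result and re‑executes only the failed `publish` step.

```python
import asyncio
import json
from typing import Any, Awaitable, Callable


class StepStore:
    """Hypothetical in-memory stand-in for durable step state (not Cloudflare's API)."""

    def __init__(self) -> None:
        self.saved: dict[str, str] = {}  # step name -> JSON-encoded result

    async def do(self, name: str, fn: Callable[[], Awaitable[Any]]) -> Any:
        if name in self.saved:
            # Completed in an earlier run: replay the saved state instead of redoing work.
            return json.loads(self.saved[name])
        result = await fn()
        # Persist only after success, so a failed step leaves nothing behind.
        self.saved[name] = json.dumps(result)
        return result


executions: list[str] = []
fail_publish = True  # simulate a downstream outage on the first run


async def workflow(store: StepStore) -> str:
    async def download() -> dict:
        executions.append("download")
        return {"rows": 3}

    data = await store.do("download", download)

    async def publish() -> str:
        executions.append("publish")
        if fail_publish:
            raise RuntimeError("downstream API unavailable")
        return f"published {data['rows']} rows"

    return await store.do("publish", publish)


store = StepStore()
try:
    asyncio.run(workflow(store))  # first run: "download" succeeds, "publish" fails
except RuntimeError:
    pass

fail_publish = False
outcome = asyncio.run(workflow(store))  # second run: "download" is replayed from the store
print(outcome, executions)  # prints: published 3 rows ['download', 'publish', 'publish']
```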

---

Python Support in Cloudflare Workflows

In Cloudflare’s blog post, the addition of Python is presented as a natural fit for:

- AI and data automation

- Orchestration alongside machine learning workloads

Cloudflare’s Python journey:

- 2020 — Python in Workers via Transcrypt

- 2024 — Direct Python integration in workerd

- Early 2024 — CPython & Pyodide packages support (e.g., `matplotlib`, `pandas`)

- Now — Native Python Workflows with full feature parity with JavaScript SDK

---

Design Goals for Python SDK

- Complete parity with JavaScript SDK

- Fully Pythonic API design

- Native async/concurrency support

- Easy step dependency management — even with parallel tasks
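As a rough illustration of the concurrency and dependency goals in plain `asyncio` (no Workflows APIs involved; all function names are hypothetical), two independent steps run concurrently via `asyncio.gather`, and a dependent step starts only once both results are available.

```python
import asyncio

finished: list[str] = []


async def fetch_prices() -> dict[str, float]:
    await asyncio.sleep(0.02)  # simulated I/O
    finished.append("prices")
    return {"apples": 1.5, "bread": 3.0}


async def fetch_inventory() -> dict[str, int]:
    await asyncio.sleep(0.01)  # simulated I/O
    finished.append("inventory")
    return {"apples": 4, "bread": 0}


async def build_report(prices: dict[str, float], inventory: dict[str, int]) -> dict[str, float]:
    finished.append("report")
    # Value of stock on hand per item.
    return {item: prices[item] * qty for item, qty in inventory.items()}


async def run() -> dict[str, float]:
    # Independent steps: run concurrently.
    prices, inventory = await asyncio.gather(fetch_prices(), fetch_inventory())
    # Dependent step: starts only after both inputs have resolved.
    return await build_report(prices, inventory)


report = asyncio.run(run())
print(report)  # prints: {'apples': 6.0, 'bread': 0.0}
```

`asyncio.gather` returns results in the order the awaitables were passed, not the order they finished, which is what makes unpacking them into named variables safe.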

---

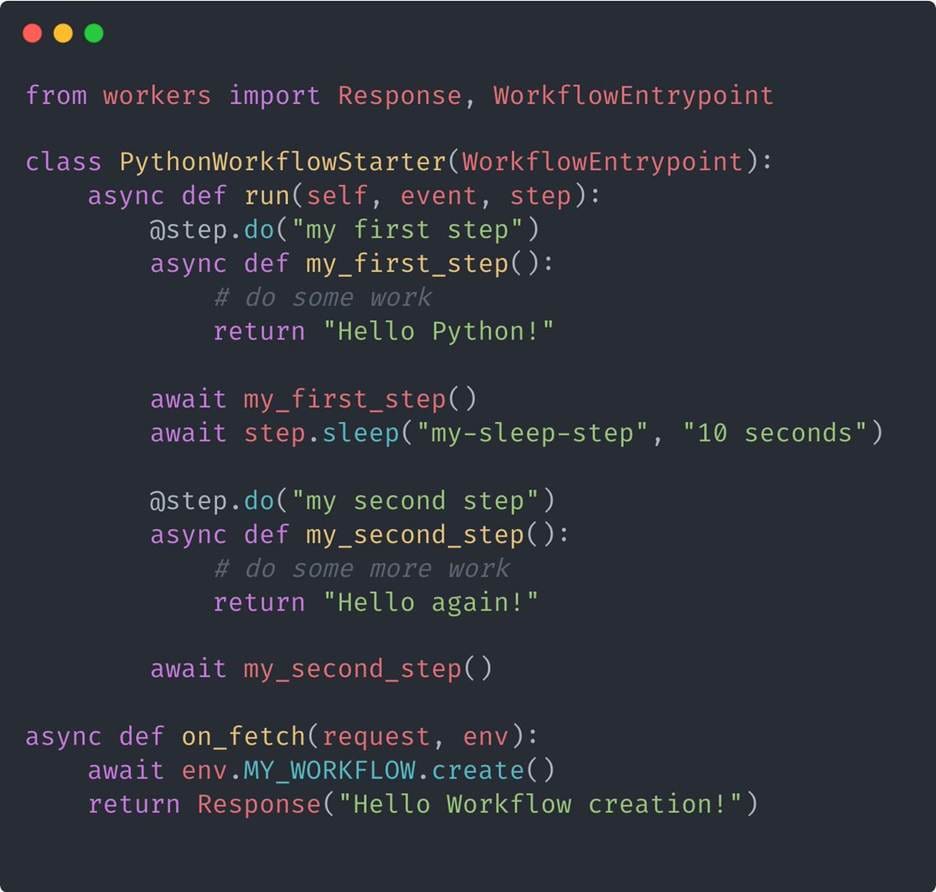

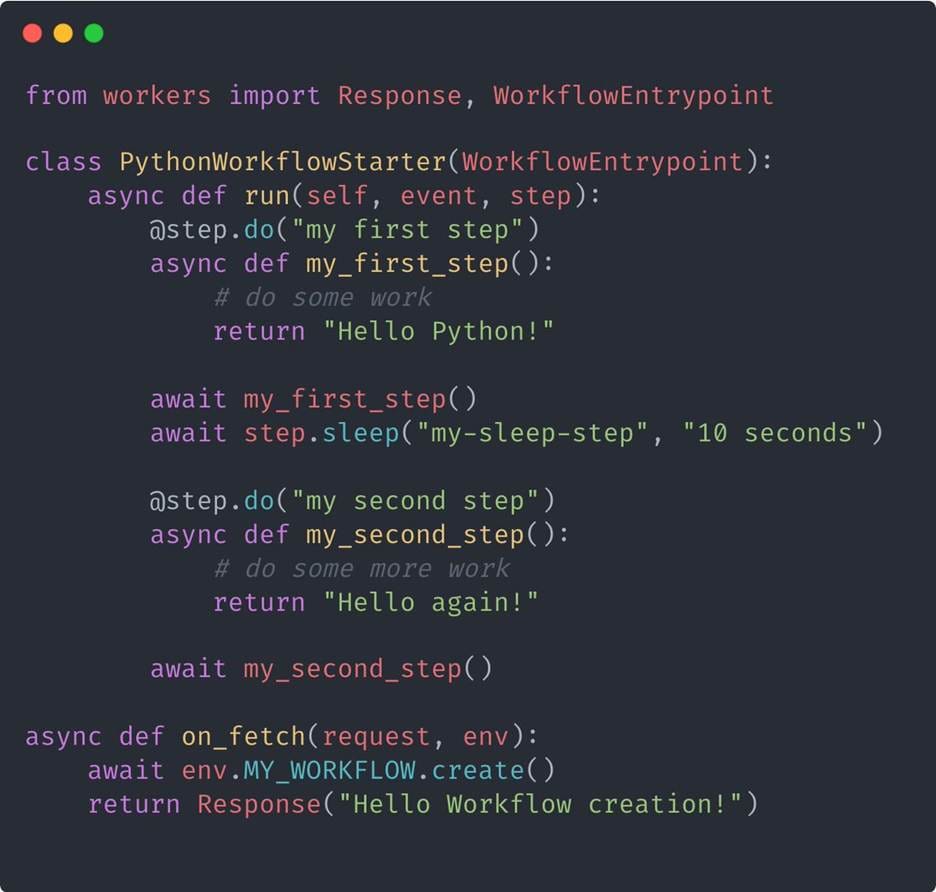

Two Ways to Define Workflow Steps in Python

- Using `asyncio.gather`

  - Concurrent execution of tasks

  - JavaScript promises (thenables) proxied into Python awaitables

- Using Python decorators (`@step.do`)

  - Cleaner Directed Acyclic Graph (DAG) declaration

  - Automatic state & data handling between steps

  - See the DAG execution flow docs

(Source: Matt Silverlock's post on X)
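The decorator style can be approximated with a small registry. The sketch below is an assumption‑laden model, not Cloudflare's SDK: `ToyStep`, its `do(name, depends=...)` signature, and the convention of passing upstream results as positional arguments are all hypothetical, and the real `@step.do` signature may differ. Independent upstream steps run concurrently, each step executes once, and results flow into dependents automatically.

```python
import asyncio
from typing import Any, Awaitable, Callable

StepFn = Callable[..., Awaitable[Any]]


class ToyStep:
    """Hypothetical decorator-based DAG runner; NOT the Cloudflare Workflows SDK."""

    def __init__(self) -> None:
        self.fns: dict[str, StepFn] = {}
        self.deps: dict[str, tuple[str, ...]] = {}

    def do(self, name: str, depends: tuple[str, ...] = ()) -> Callable[[StepFn], StepFn]:
        # Decorator: register the function as a step plus the names of its upstream steps.
        def register(fn: StepFn) -> StepFn:
            self.fns[name] = fn
            self.deps[name] = depends
            return fn

        return register

    async def run(self, target: str) -> Any:
        tasks: dict[str, asyncio.Task] = {}

        def schedule(name: str) -> asyncio.Task:
            # One task per step, shared by every step that depends on it.
            if name not in tasks:
                tasks[name] = asyncio.ensure_future(execute(name))
            return tasks[name]

        async def execute(name: str) -> Any:
            # Await all upstream steps concurrently, then pass their results in as arguments.
            inputs = await asyncio.gather(*(schedule(dep) for dep in self.deps[name]))
            return await self.fns[name](*inputs)

        return await schedule(target)


step = ToyStep()
ran: list[str] = []


@step.do("load_a")
async def load_a() -> int:
    ran.append("load_a")
    return 2


@step.do("load_b")
async def load_b() -> int:
    ran.append("load_b")
    return 5


@step.do("combine", depends=("load_a", "load_b"))
async def combine(a: int, b: int) -> int:
    ran.append("combine")
    return a * b


result = asyncio.run(step.run("combine"))
print(result)  # prints: 10
```

The toy runner does no cycle detection, so it assumes the declared graph really is acyclic; a cycle would leave tasks waiting on each other forever.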

---

Example Use Cases

Cloudflare Workflows with Python enable complex, long‑running orchestrations for:

- AI/ML Model Training

  - Dataset labeling → model feed → run completion → loss evaluation → human feedback → loop continuation

- Data Pipelines

  - Complex ingestion & transformation with idempotent steps for consistency

- AI Agents

  - Example:

    - Compile grocery list

    - Check stock levels

    - Place orders, leveraging state persistence & retries

📎 Resources: Sample Python Workflows on GitHub
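Because a retried step can repeat its side effect (for example, an order that went through but whose response was lost), agent actions such as placing orders are commonly made idempotent. A minimal sketch of the grocery example, assuming a hypothetical `FakeSupplier` API that deduplicates on an idempotency key; the stock levels, threshold, and run ID are arbitrary illustration values.

```python
import asyncio
import hashlib
import json

STOCK = {"milk": 0, "eggs": 12, "bread": 1}  # illustrative stock levels
REORDER_BELOW = 2  # arbitrary threshold for this sketch


class FakeSupplier:
    """Hypothetical supplier API that deduplicates orders by idempotency key."""

    def __init__(self) -> None:
        self.orders: dict[str, list[str]] = {}  # idempotency key -> ordered items
        self.calls = 0

    async def place_order(self, items: list[str], idempotency_key: str) -> str:
        self.calls += 1
        # A repeated key keeps the original order instead of creating a duplicate.
        self.orders.setdefault(idempotency_key, items)
        return idempotency_key


def order_key(run_id: str, items: list[str]) -> str:
    # Derive the key from the run ID and the step's inputs, so a retry reuses the same key.
    payload = json.dumps({"run": run_id, "items": sorted(items)})
    return hashlib.sha256(payload.encode()).hexdigest()[:16]


async def compile_list() -> list[str]:
    return ["milk", "eggs", "bread"]


async def check_stock(items: list[str]) -> list[str]:
    return [item for item in items if STOCK.get(item, 0) < REORDER_BELOW]


async def grocery_agent(run_id: str, supplier: FakeSupplier) -> str:
    wanted = await compile_list()
    to_order = await check_stock(wanted)
    return await supplier.place_order(to_order, order_key(run_id, to_order))


supplier = FakeSupplier()
first = asyncio.run(grocery_agent("run-42", supplier))
retry = asyncio.run(grocery_agent("run-42", supplier))  # same run executed again, as after a retry
print(first == retry, supplier.calls, supplier.orders[first])
# prints: True 2 ['milk', 'bread']
```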

---

Integrating with Multi‑Platform AI Publishing

Platforms such as AiToEarn (official site) complement Python Workflows by enabling:

- AI content generation

- Cross‑platform publishing (Douyin, Kwai, WeChat, Bilibili, Instagram, YouTube, X/Twitter, etc.)

- Analytics & model rankings

- Global AI content monetization

Why this matters: Modern AI applications benefit from both orchestration and distribution.

Python Workflows handle orchestration, while AiToEarn supports publishing and monetization—resulting in end‑to‑end automation.

---

Key Takeaways

- Python in Cloudflare Workflows = Native orchestration for AI/data engineering

- Durable Objects = Persistent state & reliable recovery

- Two coding patterns — `asyncio.gather` or `@step.do` DAG decorators

- Multi‑platform publishing tools like AiToEarn extend workflow impact

---
