Collaborative Acceleration: Multi-Robot Cooperation Without Lag — ReCA’s Integrated Framework Breaks the Embodied AI Deployment Bottleneck

ReCA: Real-Time & Efficient Cooperative Embodied Autonomous Agents

Date: 2025-10-10 11:45 Beijing

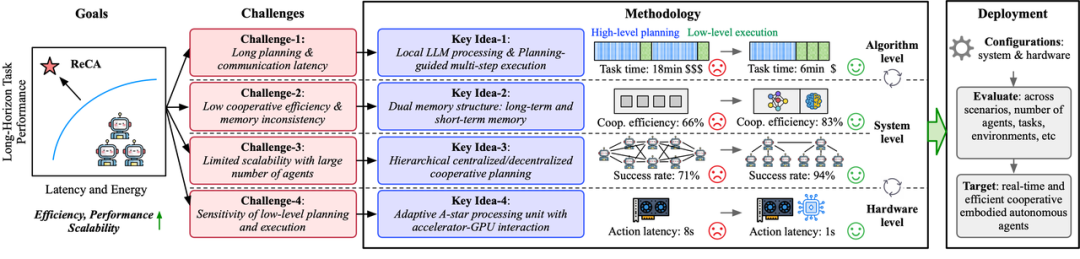

ReCA shifts the goal for intelligent agents from merely completing tasks to completing them in real time and with high efficiency.

---

From warehouse logistics robots to the “Jarvis” of science fiction films, our imagination about intelligent robots is boundless. Academia has executed increasingly complex cooperative tasks in simulators, while industry has taught robots to perform intricate maneuvers.

Yet, in reality, today’s machines are more like marionettes than autonomous agents.

Picture a robot waiting over ten seconds for each movement — performing tasks ten times slower than a human. This is hardly practical for everyday life.

The “last mile” from virtual to real deployment is often blocked by high latency and low collaboration efficiency — an invisible wall confining embodied intelligence to the lab.

---

Paper Details

- Title: ReCA: Integrated Acceleration for Real-Time and Efficient Cooperative Embodied Autonomous Agents

- Link: https://dl.acm.org/doi/10.1145/3676641.3716016

---

Overview

A research team from the Georgia Institute of Technology, the University of Minnesota, and Harvard University has developed ReCA, an integrated acceleration framework for multi-agent cooperative embodied systems.

By coordinating software–hardware optimizations across layers, ReCA maintains high task success rates while drastically improving real-time performance and system efficiency — laying the foundation for practical embodied intelligence.

Published at ASPLOS’25 — a top-tier computer architecture conference — this is among the first embodied intelligence computing papers accepted in the architecture field. It was also selected for the Industry-Academia Partnership (IAP) Highlight.

---

The Efficiency Dilemma: Three Major Bottlenecks

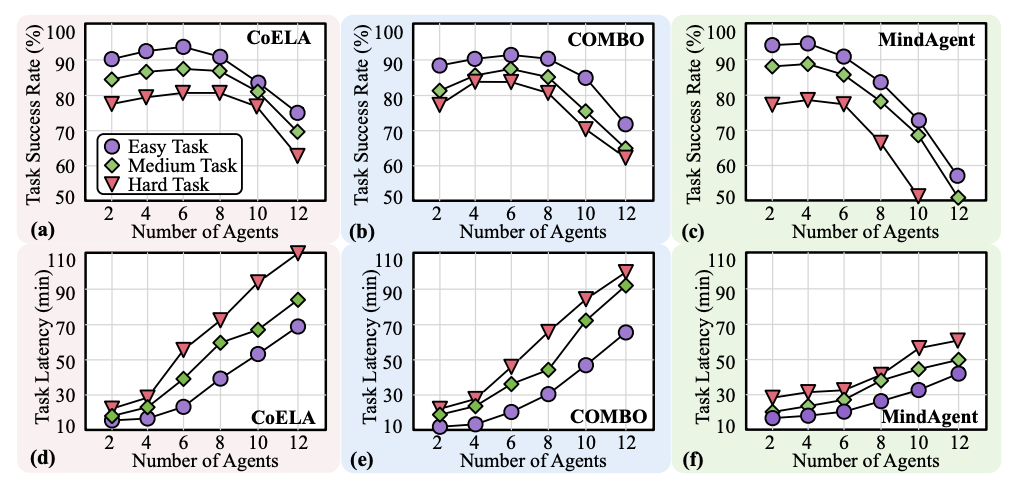

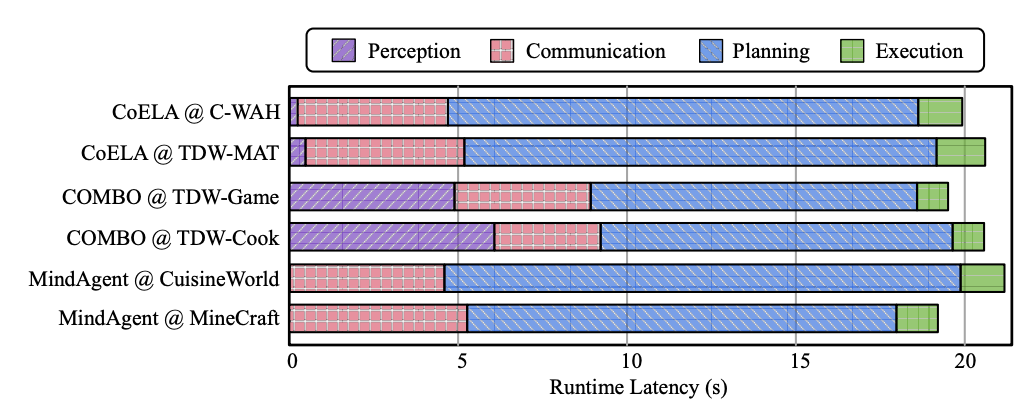

The team analyzed state-of-the-art cooperative embodied intelligence systems (e.g., COELA, COMBO, MindAgent) and identified three critical performance issues:

- High Planning & Communication Latency

  - Heavy reliance on LLM-based modules for high-level planning and agent communication.

  - Multiple sequential LLM calls per action, coupled with network latency and API overhead, hinder real-time operation.

- Limited Scalability

  - Decentralized systems: Communication rounds grow exponentially with more agents, cutting efficiency.

  - Centralized systems: Single planners can’t handle complex multi-agent cooperation, reducing success rates.

- Execution Layer Sensitivity

  - High-level LLM plans must be translated into precise low-level commands.

  - Execution efficiency and robustness are critical to final outcomes.

---

ReCA’s Three-Pronged Approach

Cross-Layer Optimization — from algorithms to systems to hardware

1. Algorithm Layer: Smarter Planning & Execution

- Localized Model Processing

  - Deploy smaller, fine-tuned open-source LLMs locally.

  - Removes reliance on external APIs, eliminating network latency.

  - Enhances privacy by keeping data in-house.

---


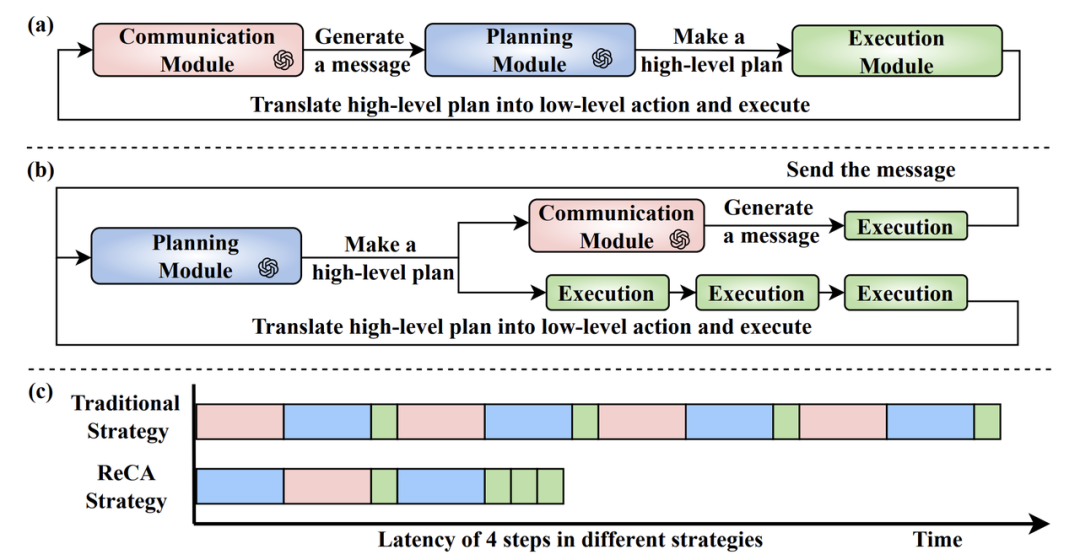

Multi-Step Execution under Planning Guidance

ReCA breaks from the “plan one step, execute one step” paradigm by enabling LLMs to generate a high-level plan in one shot, guiding multiple consecutive low-level actions.

Benefits:

- Fewer LLM calls

- Reduced end-to-end latency
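
To make the saving in LLM calls concrete, here is a minimal sketch that contrasts the two control loops. It is not ReCA's implementation: `CountingPlanner`, `toy_plan`, and both `run_*` functions are hypothetical names, and the "LLM" is a stub that always returns the same four-step plan.

```python
from typing import Callable, List

# Hypothetical stand-in for an LLM planner: maps an observation to a
# list of low-level actions. A real system would query a model here.
Planner = Callable[[str], List[str]]


class CountingPlanner:
    """Wraps a planner and counts how often it is invoked."""

    def __init__(self, plan_fn: Planner) -> None:
        self.plan_fn = plan_fn
        self.calls = 0

    def __call__(self, observation: str) -> List[str]:
        self.calls += 1
        return self.plan_fn(observation)


def toy_plan(observation: str) -> List[str]:
    # Fixed plan, for illustration only.
    return ["goto kitchen", "pick cup", "goto table", "place cup"]


def run_step_by_step(planner: Planner, num_actions: int) -> List[str]:
    """Baseline: one planner call for every low-level action."""
    executed: List[str] = []
    for i in range(num_actions):
        plan = planner(f"obs after {i} actions")
        executed.append(plan[i % len(plan)])
    return executed


def run_plan_guided(planner: Planner, num_actions: int) -> List[str]:
    """One planner call produces a plan that guides several actions."""
    executed: List[str] = []
    while len(executed) < num_actions:
        plan = planner(f"obs after {len(executed)} actions")
        for action in plan:
            if len(executed) == num_actions:
                break
            executed.append(action)
    return executed


baseline = CountingPlanner(toy_plan)
guided = CountingPlanner(toy_plan)
baseline_actions = run_step_by_step(baseline, 8)
guided_actions = run_plan_guided(guided, 8)
print(baseline.calls, guided.calls)
```

In this toy setting both loops execute the same eight actions, but the plan-guided loop calls the planner once per plan rather than once per action. A real system would also need a rule for replanning when the environment changes mid-plan, which this sketch leaves out.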

---

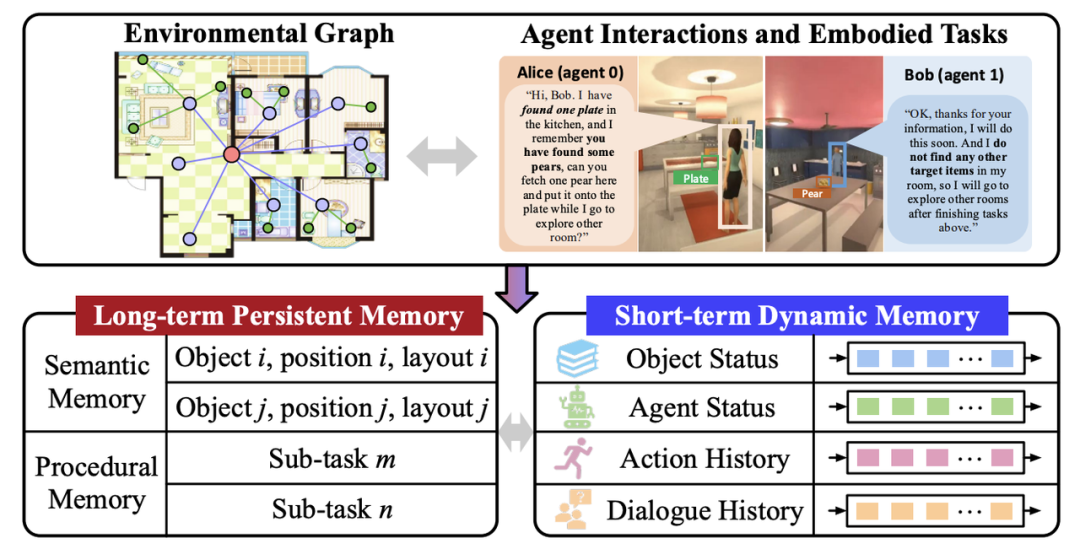

2. System Layer: More Efficient Memory & Collaboration

- Dual Memory Structure

  - Inspired by dual-system theory in human cognition:

    - Long-term memory: stores static info (e.g., environment layout) in graphs.

    - Short-term memory: updates dynamic agent states and task progress.

This design addresses the forgetting that LLMs exhibit when prompts grow too long, improving planning coherence and accuracy.
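
The split between static and dynamic memory can be illustrated with a small sketch. Everything here is an assumption for illustration: the class names, the room-adjacency graph, and the `build_prompt` helper are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class LongTermMemory:
    """Static knowledge, stored here as a room-adjacency graph."""
    adjacency: Dict[str, Set[str]] = field(default_factory=dict)

    def connect(self, a: str, b: str) -> None:
        self.adjacency.setdefault(a, set()).add(b)
        self.adjacency.setdefault(b, set()).add(a)


@dataclass
class ShortTermMemory:
    """Dynamic state, overwritten as the task progresses."""
    agent_location: Dict[str, str] = field(default_factory=dict)
    progress: Dict[str, bool] = field(default_factory=dict)

    def update(self, agent: str, location: str,
               done_subtask: Optional[str] = None) -> None:
        self.agent_location[agent] = location
        if done_subtask is not None:
            self.progress[done_subtask] = True


def build_prompt(ltm: LongTermMemory, stm: ShortTermMemory, agent: str) -> str:
    """Compose a compact prompt from the facts relevant right now."""
    here = stm.agent_location[agent]
    neighbors = sorted(ltm.adjacency.get(here, set()))
    pending = sorted(t for t, done in stm.progress.items() if not done)
    return (f"{agent} is in {here}. Reachable: {', '.join(neighbors)}. "
            f"Pending: {', '.join(pending) or 'none'}.")


ltm = LongTermMemory()
ltm.connect("kitchen", "hall")
ltm.connect("hall", "bedroom")
stm = ShortTermMemory(progress={"fetch cup": False, "open door": False})
stm.update("alice", "hall", done_subtask="open door")
prompt = build_prompt(ltm, stm, "alice")
print(prompt)
```

The point of the sketch is that the prompt is rebuilt from structured state on every call, so its length depends on the current neighborhood and pending tasks, not on the full interaction history.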

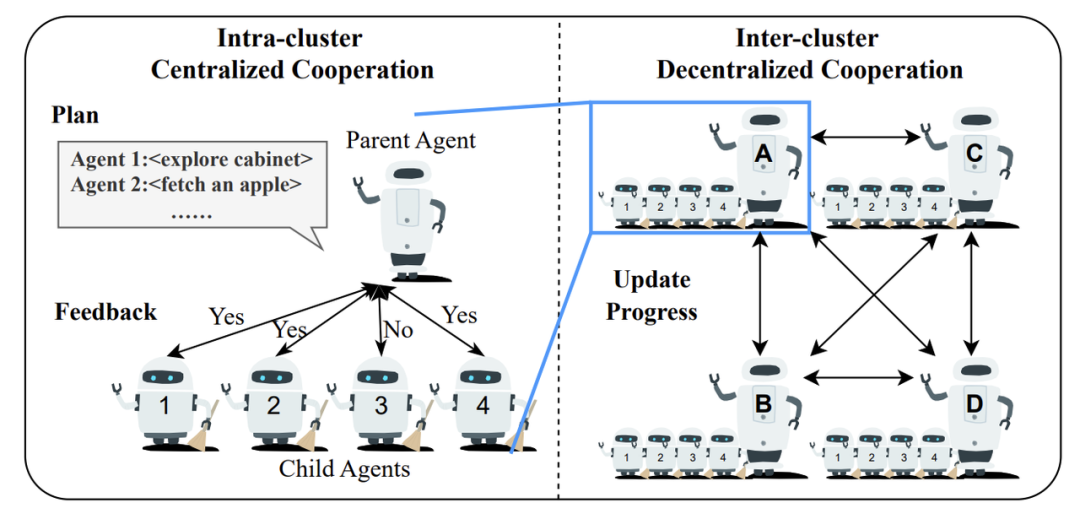

- Hierarchical Collaboration Planning

  - Combines:

    - Centralized “parent-child” clusters for efficient local planning.

    - Decentralized inter-cluster communication for global coordination.
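
A rough way to see why clustering helps is to count who needs to talk to whom. The sketch below is an illustrative proxy only: counting pairwise links is an assumption made here, not the metric used in the paper, and `make_clusters`, `flat_links`, and `hierarchical_links` are hypothetical helpers.

```python
from typing import Dict, List


def make_clusters(agents: List[str], cluster_size: int) -> Dict[str, List[str]]:
    """Group agents into clusters; the first member acts as the parent."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be at least 1")
    clusters: Dict[str, List[str]] = {}
    for start in range(0, len(agents), cluster_size):
        group = agents[start:start + cluster_size]
        clusters[group[0]] = group[1:]
    return clusters


def flat_links(n: int) -> int:
    """Pairwise links if every agent talks to every other agent."""
    return n * (n - 1) // 2


def hierarchical_links(clusters: Dict[str, List[str]]) -> int:
    """Parent-child links inside clusters plus pairwise links among parents."""
    intra = sum(len(children) for children in clusters.values())
    return intra + flat_links(len(clusters))


agents = [f"agent{i}" for i in range(12)]
clusters = make_clusters(agents, cluster_size=4)
print(flat_links(len(agents)), hierarchical_links(clusters))
```

With 12 agents in clusters of four, the flat scheme has 66 pairwise links while the clustered scheme has 12: nine parent-child links plus three links among the parents. The cluster size is a free parameter in this sketch.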

---

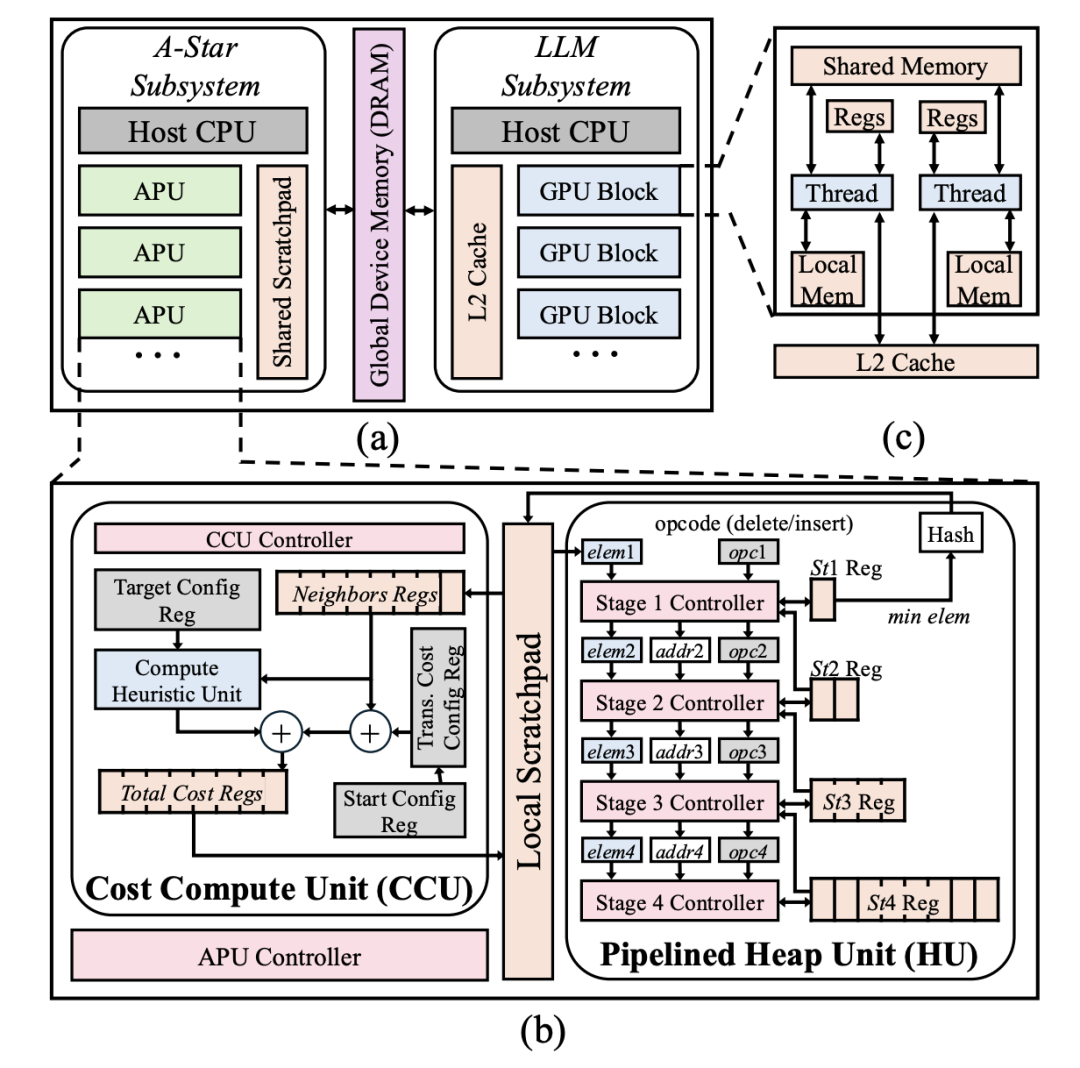

3. Hardware Layer: Specialized Acceleration Units

- Heterogeneous Hardware Systems

  - GPU subsystems handle high-level LLM planning.

  - Custom accelerators execute precise low-level path planning.

- Dedicated A-Star Processing Unit (APU)

  - After system optimization, A-Star path planning became a bottleneck.

  - Custom APU design drastically boosts planning efficiency & energy performance.
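
For readers unfamiliar with the workload the APU targets, the following is a plain software version of A-Star search. It says nothing about the APU's hardware design; the 4-connected grid, the unit move cost, and the Manhattan-distance heuristic are assumptions chosen to keep the example small.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid (0 = free, 1 = blocked)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    # Heap entries are (estimated total cost, cost so far, cell).
    open_heap = [(h(start), 0, start)]
    best_cost: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Cell] = {}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk parent links back to the start, then reverse.
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale entry: a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
path = astar(grid, (0, 0), (2, 0))
blocked = astar([[0, 1], [1, 0]], (0, 0), (1, 1))
print(path, blocked)
```

The inner loop is dominated by priority-queue operations and neighbor expansion, which run once per explored cell for every path query issued by every agent.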

---

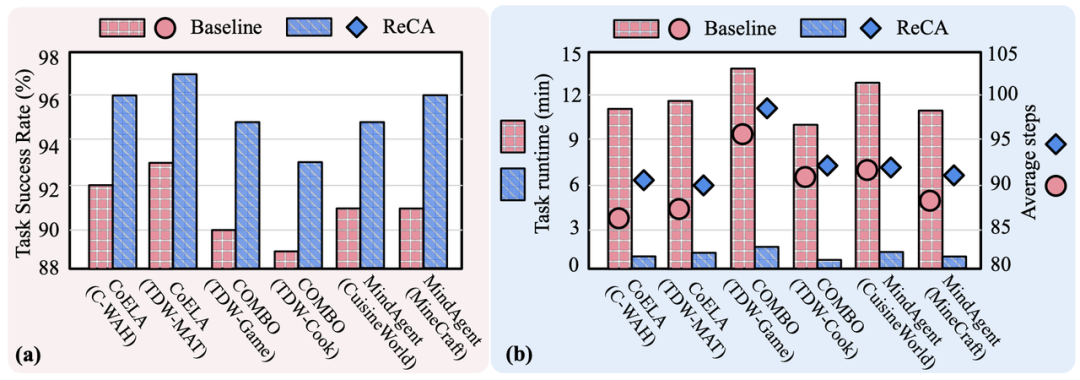

Measured Gains

Performance boost:

- Efficiency: 5–10× speedup with only a 3.2% increase in task steps.

- Success rate: 4.3% higher on average, thanks to the optimized memory and collaboration mechanisms.

- Scalability: At 12 agents, success rates remain 80–90% (vs. baseline < 70%).

- Energy Efficiency: APU delivers 4.6× speedup and 281× energy efficiency improvement compared to GPU implementations.

---

Impact & Future Directions

1. From “Usable” to “User-Friendly”

- Past work emphasized task completion.

- Today, completion with high efficiency is the new bar.

- ReCA’s paradigm helps drive embodied intelligence into homes, manufacturing, and service industries.

2. Software–Hardware Synergy

- Cross-layer design breaks limitations of single-point optimization.

- Blueprint for heterogeneous computing:

  - GPU: the “cerebrum,” responsible for high-level planning.

  - APU: the “cerebellum,” responsible for precise execution.

3. Breaking Bottlenecks, Unlocking Imagination

- Real-time fleet of robotic housekeepers.

- Efficient disaster rescue operations.

- Continuous scientific experimentation in automated labs.

---

