Composer: Building Fast, Cutting-Edge Models with Reinforcement Learning

Read the full blog post (Hacker News discussion)

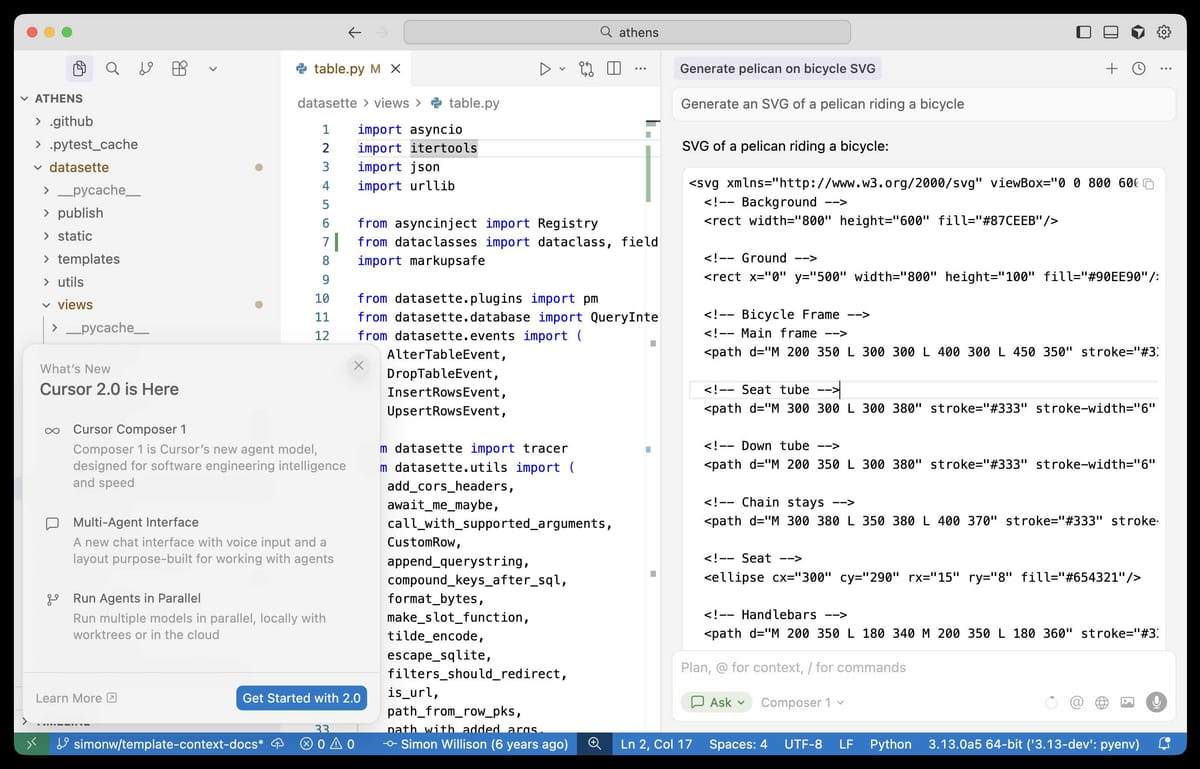

Cursor has announced Cursor 2.0, featuring:

- A refreshed UI focused on agentic coding

- Ability to run parallel agents

- A new Cursor-exclusive model: Composer 1

---

First Impressions

There’s currently no public API for Composer-1.

I tried it in Cursor’s Ask mode with the prompt:

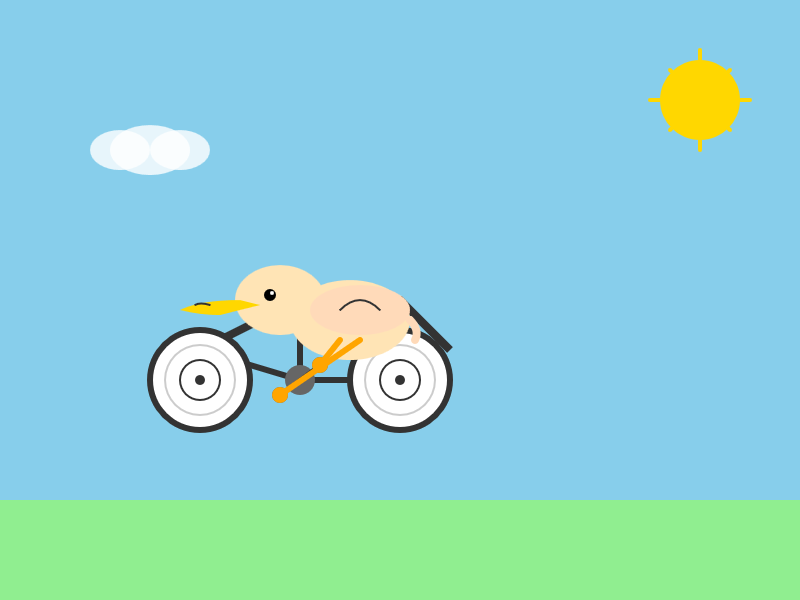

> “Generate an SVG of a pelican riding a bicycle”

Prompt screenshot:

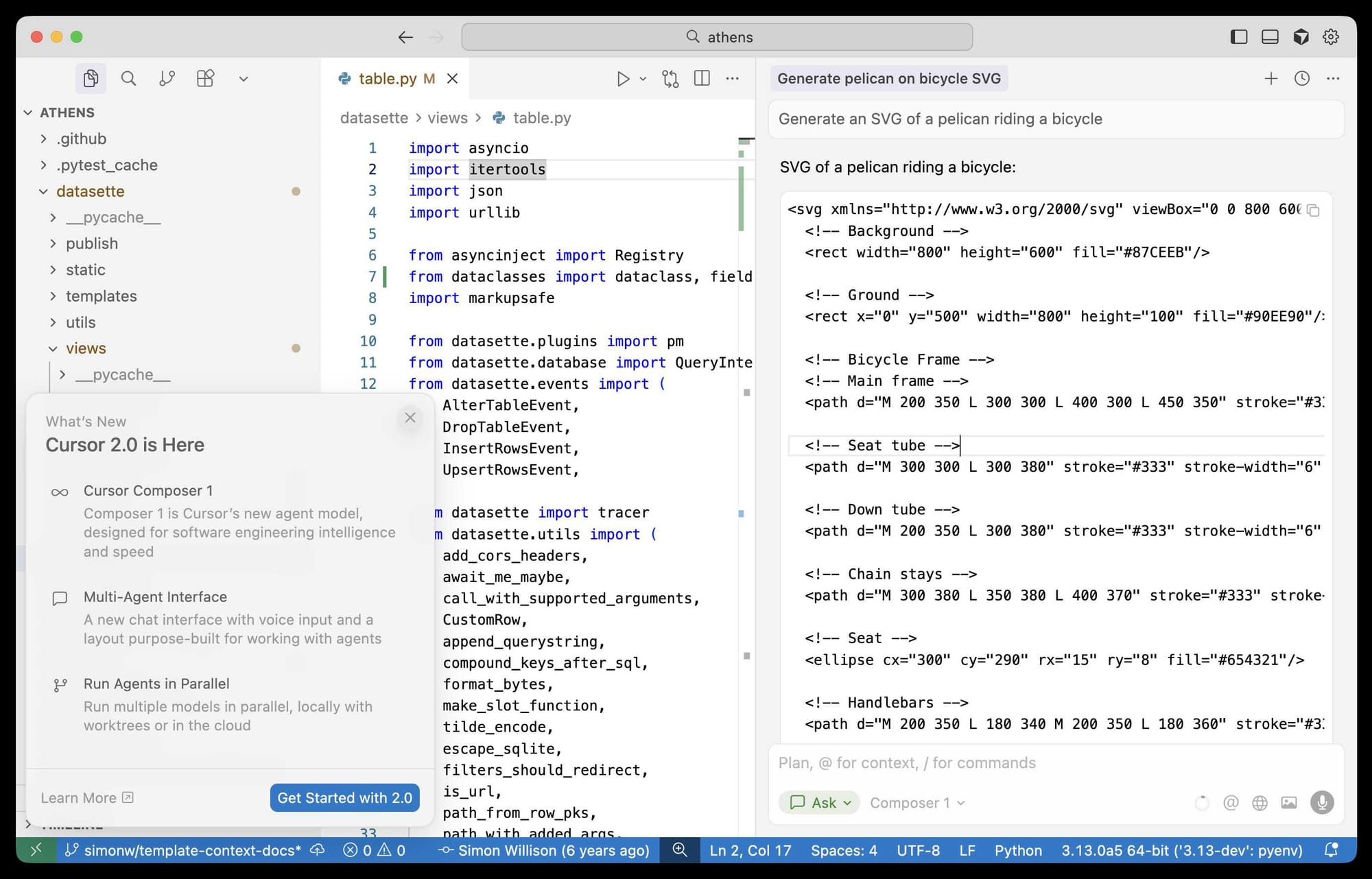

Generated SVG output:

Key takeaway: The response was almost instantaneous. Cursor claims Composer-1 is “4× faster than similarly intelligent models”.

---

Why Composer-1 Matters

Like GPT-5-Codex and Qwen3-Coder, Composer-1 targets code-focused AI workloads.

From the announcement:

> Composer is a mixture-of-experts (MoE) language model with long-context support, specialized for software engineering via large-scale RL.

> It uses PyTorch + Ray for asynchronous RL at scale, MXFP8 MoE kernels, expert parallelism, and hybrid sharded data parallelism.

> The infrastructure scales to thousands of NVIDIA GPUs with minimal communication cost.

> RL training involves hundreds of thousands of concurrent sandboxed coding environments equipped with tools like code editing, semantic search, `grep`, and terminal access.
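To make the "many concurrent sandboxed environments" idea concrete, here is a toy sketch of collecting rollouts in parallel. It is not Cursor's infrastructure: it uses only Python's `asyncio` rather than PyTorch or Ray, the policy is random, and the tool names, `Episode`, `run_tool`, `rollout`, and `collect` are all hypothetical stand-ins invented for illustration.

```python
import asyncio
import random
from dataclasses import dataclass, field

# Hypothetical tool names, loosely mirroring the ones the announcement lists.
TOOLS = ("edit_file", "semantic_search", "grep", "terminal")


@dataclass
class Episode:
    env_id: int
    actions: list = field(default_factory=list)
    reward: float = 0.0


async def run_tool(name: str, rng: random.Random) -> bool:
    """Stand-in for a sandboxed tool call (assumption: real calls would hit an isolated sandbox)."""
    await asyncio.sleep(0)  # yield control, as real I/O would
    return rng.random() < 0.5  # pretend the call succeeded or failed


async def rollout(env_id: int, steps: int, seed: int) -> Episode:
    """One episode: a toy random policy picks tools; reward is the fraction of successful calls."""
    rng = random.Random(seed + env_id)
    episode = Episode(env_id)
    successes = 0
    for _ in range(steps):
        tool = rng.choice(TOOLS)
        episode.actions.append(tool)
        successes += await run_tool(tool, rng)
    episode.reward = successes / steps
    return episode


async def collect(num_envs: int, steps: int, max_concurrent: int, seed: int = 0):
    """Run many rollouts concurrently, capped by a semaphore."""
    gate = asyncio.Semaphore(max_concurrent)

    async def guarded(env_id: int) -> Episode:
        async with gate:
            return await rollout(env_id, steps, seed)

    # gather preserves submission order, so results line up with env ids.
    return await asyncio.gather(*(guarded(i) for i in range(num_envs)))


if __name__ == "__main__":
    episodes = asyncio.run(collect(num_envs=8, steps=5, max_concurrent=4))
    for ep in episodes:
        print(ep.env_id, ep.actions, ep.reward)
```

In a real system the collected episodes would feed a policy update, and the environments would run on separate machines; the sketch only shows the shape of the concurrency.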

---

Open Questions About Model Origins

Cursor's post leaves two questions unanswered:

- Was Composer-1 trained entirely from scratch?

- Or is it a fine-tune of an existing open-weights model (e.g., Qwen, GLM)?

Cursor researcher Sasha Rush responded on Hacker News:

> Our primary focus is on RL post-training.

> We think that is the best way to get the model to be a strong interactive agent.

Interpretation: The reply emphasizes RL-driven performance and does not say whether the base model was pretrained in-house or derived from open weights.

---

Parallel to Content Creation Platforms

Modern AI tools are linking specialized high-speed models to multi-platform publishing.

Example: AiToEarn

- Open-source, global AI content monetization platform

- Generates code, articles, visuals

- Publishes simultaneously to: Douyin, Kwai, WeChat, Bilibili, Xiaohongshu (Rednote), Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X (Twitter)

- Offers integrated analytics and AI model rankings

Why this matters:

- Pairing a fast coding model such as Composer-1 with a publishing and monetization workflow like AiToEarn’s could, in principle, improve productivity for developers and creators.

---

Rumors and Clarifications

Sasha Rush also addressed speculation that Cursor’s earlier preview model, Cheetah, was based on xAI’s Grok:

> That is straight up untrue.

Clear communication about model origins helps prevent misinformation, which matters for both compliance and trust.

---

Takeaways

- Composer-1 is fast, code-focused, and RL-optimized

- The disclosed training stack combines MoE engineering (MXFP8 kernels, expert parallelism) with large-scale RL orchestration across thousands of GPUs

- The model’s origin remains undisclosed

- Synergy with multi-platform creation/publishing tools (like AiToEarn) could drive adoption in dev, education, and creator circles
