Couchbase and iQIYI’s Decade-Long Partnership: How the Magma Engine Solves TB-Scale Caching Performance and Cost Challenges

Balancing Performance and Cost in the AI Era

As AI-driven applications multiply, balancing performance and cost when processing data at massive scale has become a core challenge for technology companies.

During a recent Couchbase technical livestream, Cheng Li, Senior Expert from the Database Team of iQIYI’s Intelligent Platform Division, shared a highly practical, industry-relevant case study.

The session — "Couchbase Dream Factory in Action! Build AI Applications with a Perfect User Experience" — showcased:

- Couchbase’s AI-native data platform capabilities

- iQIYI’s decade-long adoption experience

- How Couchbase addresses the three-way trade-off between performance, scalability, and total cost of ownership (TCO) in high-concurrency, large-data environments

---

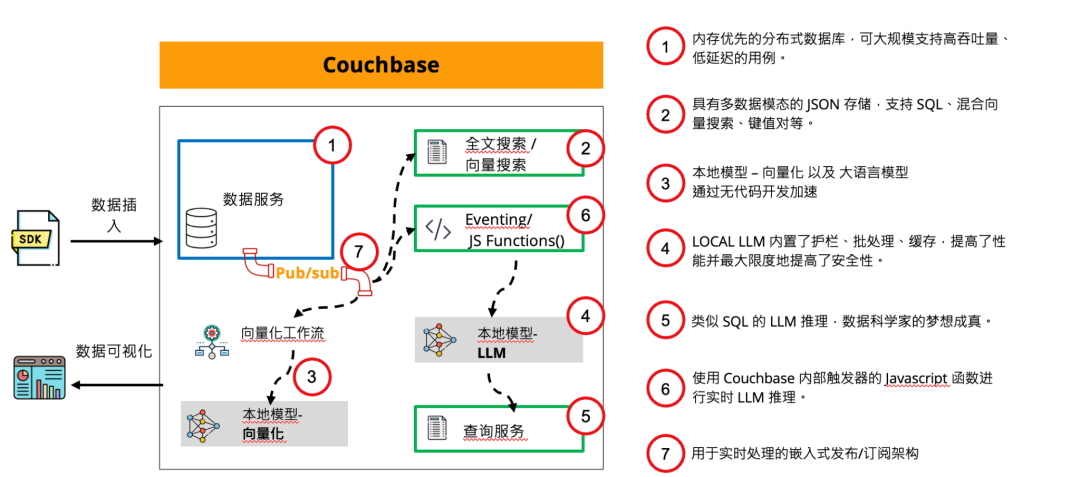

1. Core Architecture Insights — A One-Stop, Multi‑Model Data Platform

Couchbase’s strength comes from its unified architecture, which combines transactions, analytics, search, and vector capabilities in one platform and goes well beyond a simple key-value database.

Key Capabilities:

- Memory-first architecture

  - Built-in caching for sub-millisecond responses, ideal for real-time apps

- Elastic scalability & high availability

  - Seconds-level online scaling

  - Active-active XDCR for cross-data-center replication and 24/7 uptime

- Multi-model services

  - Support for KV, JSON documents, SQL++, full-text search, and vector search in one platform

  - Simplifies the tech stack and reduces integration overhead

---

2. iQIYI’s Deep Practice — From Community Edition to Magma Engine

Cheng Li detailed iQIYI’s journey since adopting Couchbase in 2012: from the Community Edition, to the Enterprise Edition, and then to the Magma storage engine.

Primary use cases:

- Search

- Advertising

- Recommendations

All three require real-time performance and the ability to handle very large data volumes.

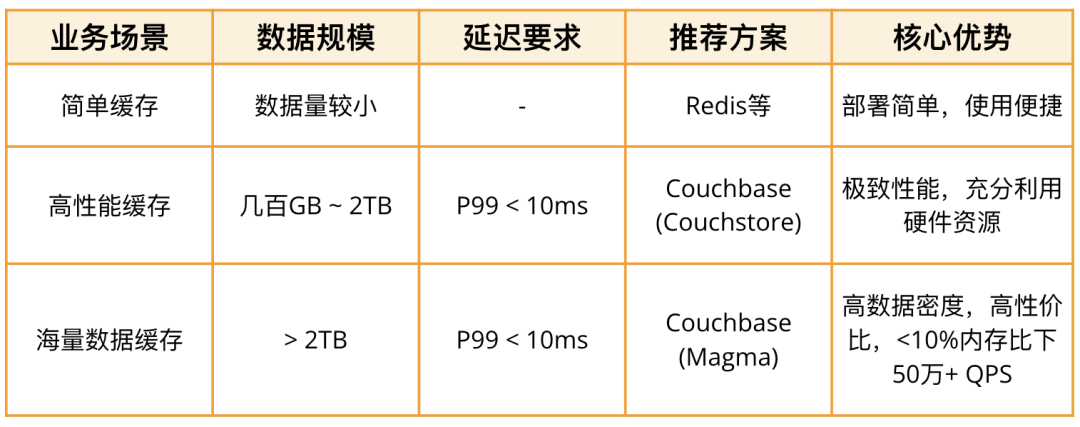

Internal Couchbase Selection Guide

Scenario 1 — 100 GB ~ 2 TB data, P99 latency < 10 ms

- Use: Couchstore

- Reason: Fully leverages high-end machine resources for top-tier read/write speed.

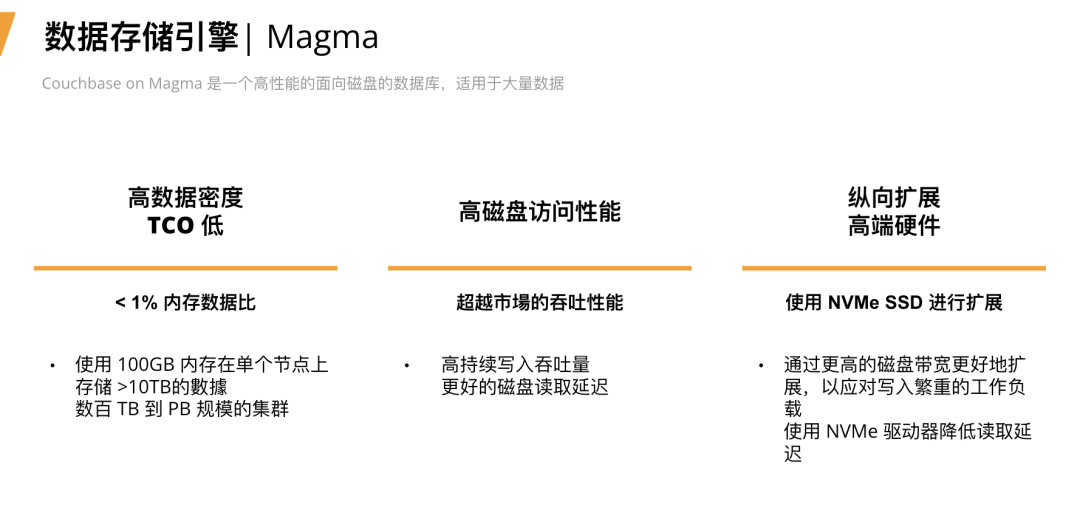

Scenario 2 — 2 TB+ data, low-latency required

- Use: Magma engine

- Reason: High data density with cost-efficient persistence, avoiding “all in memory” expense.
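The two scenarios above can be read as a simple decision rule on data size. The following is a minimal sketch of that rule, not iQIYI's actual tooling: the function name `choose_storage_engine` is hypothetical, sizes are assumed to be decimal (1 TB = 10^12 bytes), and treating exactly 2 TB as Scenario 1 is an assumption, since the guide does not say which side the boundary falls on.

```python
from typing import Optional

GB = 1000**3  # decimal units are an assumption; the talk does not say GB vs GiB
TB = 1000**4


def choose_storage_engine(data_bytes: int) -> Optional[str]:
    """Map a data size to the engine named in the selection guide.

    Returns None for sizes below 100 GB, which the guide does not cover.
    """
    if data_bytes < 100 * GB:
        return None            # outside the ranges described in the guide
    if data_bytes <= 2 * TB:   # Scenario 1: 100 GB to 2 TB, P99 latency < 10 ms
        return "couchstore"
    return "magma"             # Scenario 2: more than 2 TB, low latency required


if __name__ == "__main__":
    for size in (50 * GB, 500 * GB, 5 * TB):
        print(size, choose_storage_engine(size))
```

The guide also lists a latency target for each scenario. This sketch keys only on data size because that is the one threshold the guide states numerically for both scenarios.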

---

Why Couchbase Fits Large-Scale Production

- High availability & quick failover

  - Node removal completes in under 1 minute, which is crucial for business continuity.

- Fast rebalancing performance

  - Rebalancing 1 TB of data takes about 2 hours in most cases, far faster than many competitors.

- Mature cross-region DR

  - XDCR enables real-time cross-cluster sync for disaster recovery.
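To put the rebalancing figure in more familiar units, the sketch below converts "1 TB in about 2 hours" into an average data movement rate. It assumes decimal units (1 TB = 10^12 bytes) and a constant rate over the whole rebalance, neither of which the talk specifies. The helper name is hypothetical.

```python
TB = 10**12  # decimal terabyte (assumption)
MB = 10**6


def rebalance_rate_mb_per_s(data_bytes: int, hours: float) -> float:
    """Average rate, in MB/s, needed to move data_bytes within the given hours."""
    return data_bytes / (hours * 3600) / MB


rate = rebalance_rate_mb_per_s(1 * TB, 2)
print(f"{rate:.1f} MB/s")  # prints "138.9 MB/s"
```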

---

Magma vs. In-House KV Store — Performance Duel

Test setup:

- Hardware: 3 physical machines with 48 cores each and NVMe storage

- Data: 800 million entries of 2 KB each, with in-memory residency set to 10%

Results:

- Read QPS: 500,000+

- P99 latency: <10 ms
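The test figures imply a dataset well beyond what the memory-resident portion can hold. The back-of-the-envelope sketch below works through the arithmetic. It assumes decimal units, counts only the 2 KB values (no keys, metadata, replicas, or compression), and treats the reported 500,000 read QPS as a lower bound spread evenly across the three machines. All variable names are illustrative.

```python
KB = 1000  # decimal units (assumption)
GB = 1000**3
TB = 1000**4

entries = 800_000_000      # 800 million entries
value_bytes = 2 * KB       # 2 KB each
resident_percent = 10      # in-memory residency
nodes = 3
read_qps = 500_000         # reported as "500,000+", so a lower bound

dataset_bytes = entries * value_bytes                       # raw value data
resident_bytes = dataset_bytes * resident_percent // 100    # portion kept in memory
qps_per_node = read_qps / nodes                             # even spread (assumption)
read_bytes_per_s = read_qps * value_bytes                   # aggregate read volume

print(f"dataset:      {dataset_bytes / TB:.2f} TB")        # 1.60 TB
print(f"in memory:    {resident_bytes / GB:.0f} GB")       # 160 GB
print(f"QPS per node: {qps_per_node:,.0f}")                # 166,667
print(f"read volume:  {read_bytes_per_s / GB:.2f} GB/s")   # 1.00 GB/s
```

Under these assumptions, roughly 1.44 TB of the 1.6 TB of value data is not resident in memory, so a read for a non-resident entry has to be served from NVMe storage.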

> "Superior to our in-house solution," Cheng Li noted, highlighting stability, support, and thorough internal POCs as decision factors.

---

Next Steps for iQIYI

- Deepen XDCR usage: Multi-source synchronization so that services can read from and write to a nearby cluster.

- Explore vector search: Native vector capabilities for AI-enabled services.

---

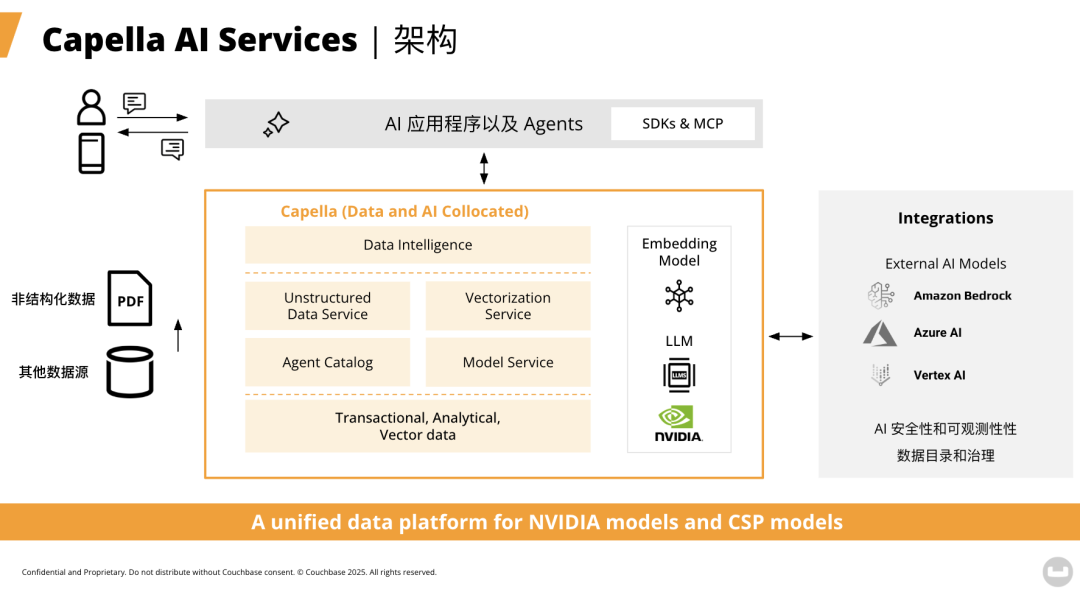

3. AI Practical Outlook — Capella AI Services

Couchbase’s Capella AI Services integrate AI directly into the data platform, so that retrieval-augmented generation (RAG) workflows can be built quickly.

Example: Real-Time GenAI Processing Pipeline

- Local vectorization + local LLMs for speed & security

- Real-time vectorization for new writes

- Built-in change data capture (CDC), with SQL used to invoke LLM tasks (sentiment analysis, summarization, classification)

- Multi-model capabilities that unify JSON, SQL, vector, and search in a single platform

Benefits:

- Eliminate complex ETL & sync between separate DBs

- Reduce latency & development effort

---

4. Moving Toward an AI-Native Unified Data Architecture

The livestream made clear what future AI applications need:

- Multi‑modal data handling

- Extreme performance

- Native AI workload support

Couchbase advantages:

- Unified & flexible

- High performance at scale

- Addresses AI-related issues like LLM hallucinations, security, and cost

Applications:

- Replace traditional caching

- Power real-time platforms

- Drive central enterprise AI systems

📧 Contact: bryan.xu@couchbase.com
