Cursor 2.0: Even the Strongest AI Coding Tool Has to Catch Up on Model Training

Cursor 2.0 — Can It Make Money?

Cursor 2.0 has officially arrived.

While developers rushed to test the new features, a more important milestone emerged: Cursor has introduced its own in-house model, Composer, and radically redesigned the role AI plays in the product. This marks a decisive move in the AI coding race.

---

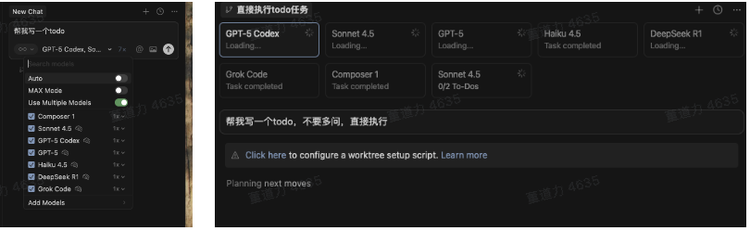

1. A New Development Paradigm: Agent-Based, Multi-Task Parallelism

From File-Centric to Agent-Centric

In its official changelog, Cursor states it is transitioning from “a file-centric editor” to “an Agent-centric development platform.”

> _“We’ve rebuilt everything.”_

Before 2.0:

- UI similar to other editors (files, cursors, AI command line).

Now:

- The Agent is the core.

- You specify your goal, and the system dispatches one or more AI Agents to plan, execute, and verify.

One person, one computer, eight AI developers working at once.

How It Works

- Enable Use Multiple Models.

- Select the models.

- Input a prompt.

- Watch multiple AI coding models work on the same prompt in parallel.
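The steps above amount to fanning one prompt out to several models at once. A minimal sketch of that pattern follows, assuming each model can be wrapped as a plain function; `fast_model`, `careful_model`, and `run_on_models` are hypothetical stand-ins, not Cursor's API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


# Hypothetical stand-ins for model backends; a real setup would call a provider API here.
def fast_model(prompt: str) -> str:
    return f"[fast] draft for: {prompt}"


def careful_model(prompt: str) -> str:
    return f"[careful] draft for: {prompt}"


def run_on_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the same prompt to every selected model concurrently.

    Returns the outputs keyed by model name. Expects at least one model.
    """
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


results = run_on_models(
    "add a login form",
    {"fast": fast_model, "careful": careful_model},
)
for name, output in results.items():
    print(name, "->", output)
```

Every model receives the full prompt, so token usage grows with the number of models selected.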

Token usage per request can be very high, though it remains manageable.

Isolation Mechanism:

- Each Agent operates in its own git worktree, checked out on a separate branch.

- Agents do not overwrite each other's files while they work.

- Outputs are merged after the tasks complete.
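The isolation scheme can be illustrated with ordinary git commands. The sketch below only builds the command lists and does not run them. The branch naming (`agent/<name>`), the sibling-directory layout, and the `plan_worktree_commands` helper are assumptions for illustration, not a description of how Cursor implements it.

```python
from pathlib import PurePosixPath
from typing import Dict, List


def plan_worktree_commands(
    repo_root: str, agents: List[str], base: str = "main"
) -> Dict[str, List[List[str]]]:
    """Build (but do not run) git commands that give each agent its own worktree."""
    root = PurePosixPath(repo_root)
    setup: List[List[str]] = []
    merge: List[List[str]] = []
    cleanup: List[List[str]] = []
    for agent in agents:
        branch = f"agent/{agent}"  # hypothetical branch naming scheme
        path = str(root.parent / f"{root.name}-{agent}")  # sibling directory per agent
        # Create a new branch from `base` and check it out in a separate directory.
        setup.append(["git", "-C", str(root), "worktree", "add", "-b", branch, path, base])
        # Merge the agent's branch back; assumes the main checkout is on `base`.
        merge.append(["git", "-C", str(root), "merge", "--no-ff", branch])
        # Remove the extra working directory once the branch is merged.
        cleanup.append(["git", "-C", str(root), "worktree", "remove", path])
    return {"setup": setup, "merge": merge, "cleanup": cleanup}


plan = plan_worktree_commands("/work/app", ["a1", "a2"])
for phase in ("setup", "merge", "cleanup"):
    for cmd in plan[phase]:
        print(f"{phase}: {' '.join(cmd)}")
```

To execute a plan, each command could be passed to `subprocess.run(cmd, check=True)`. Worktrees keep agents from touching each other's files while they run, but the merge step can still report conflicts if two agents changed the same lines.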

---

2. Cursor Is Now a Model Company

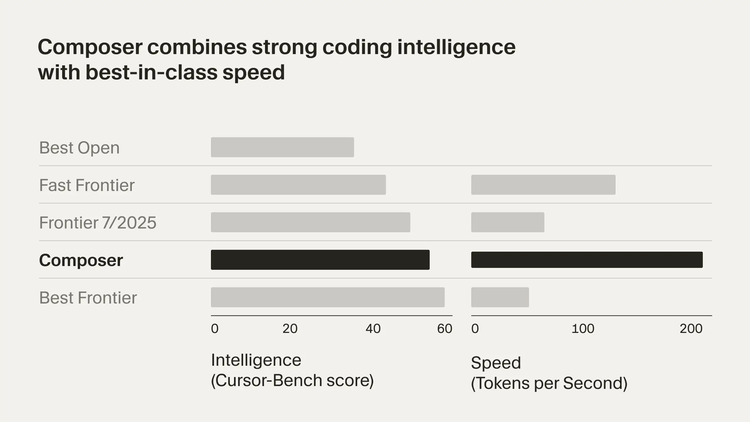

The foundation is Composer, Cursor’s first proprietary model.

Why Composer Matters

- About 4× faster inference than models of similar intelligence.

- Most interactions finish within 30 seconds.

- Cursor no longer depends solely on external model APIs.

Before Composer:

- Depended on OpenAI/Anthropic models.

- Higher costs, slower responses, limited context.

With Composer:

- Vertical optimization for code generation, semantic indexing, and context retrieval.

- Reads entire codebase (including dependencies) before producing output.

- Functions like an engineer who understands the project.

The Business Challenge

- Subscription revenue is fixed.

- Inference costs are usage-based.

- Multi-agent setups scale token usage linearly with the number of agents.

- Extended planning chains = more execution rounds.

Without in-house models, heavy usage means high provider costs and thin margins. Composer addresses this by lowering the cost per request and speeding up execution, while keeping control inside Cursor’s own stack.
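The arithmetic behind this squeeze can be made concrete with a toy model. Every figure below (subscription price, request volume, tokens per request, per-token prices) is a made-up assumption chosen for round numbers, not Cursor's actual pricing or cost data. `UsageProfile` and the helper functions are hypothetical names.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class UsageProfile:
    subscription_usd: float        # fixed monthly revenue per user (assumed)
    requests_per_month: int        # how often the user prompts (assumed)
    tokens_per_request: int        # tokens consumed by one agent per request (assumed)
    agents: int                    # parallel agents per request
    usd_per_million_tokens: float  # inference price (assumed)


def inference_cost(p: UsageProfile) -> float:
    """Usage-based cost: tokens scale linearly with the number of agents."""
    total_tokens = p.requests_per_month * p.tokens_per_request * p.agents
    return total_tokens / 1_000_000 * p.usd_per_million_tokens


def monthly_margin(p: UsageProfile) -> float:
    """Fixed subscription revenue minus usage-based inference cost."""
    return p.subscription_usd - inference_cost(p)


single = UsageProfile(20.0, 500, 20_000, agents=1, usd_per_million_tokens=3.0)
eight = replace(single, agents=8)                       # same user, eight agents
in_house = replace(single, usd_per_million_tokens=0.5)  # cheaper hypothetical model

print(monthly_margin(single))    # -10.0
print(monthly_margin(eight))     # -220.0
print(monthly_margin(in_house))  # 15.0
```

Under these assumed numbers, revenue stays flat while cost multiplies with the agent count, and only a lower per-token price moves the margin back above zero.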

---

3. Beyond Coding: Monetization Parallels

Platforms like AiToEarn (official site) show how AI content generation can be linked with multi-platform publishing and monetization:

- Supports Douyin, Kwai, WeChat, Bilibili, Rednote, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter).

- Integrates generation tools, publishing, analytics, and AI model rankings.

Key takeaway: Proprietary optimization combined with integrated monetization can turn AI efficiency into business value. Composer’s release is Cursor’s fix for its “model gap”: speed, cost control, and scalability.

---

4. Technical Path to Composer

- Aug: Rewrote the MXFP8 MoE kernels → low-latency inference base.

- Sep–Oct: Online reinforcement learning → improved completion model.

- Added Plan Mode: read the codebase → produce a plan → execute.

- Oct 29: Composer launched.

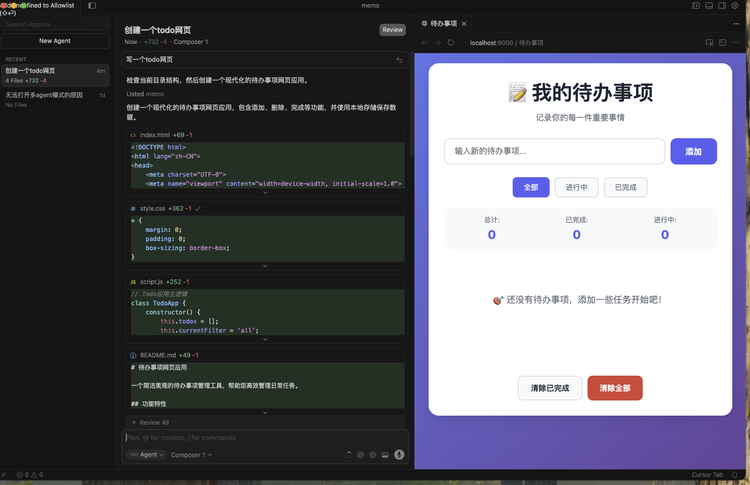

> _Personal test:_ Built a simple to-do web app — speed is impressive. Larger projects will better reveal differences with other models.

---

5. Embedded Browser: AI Sees Its Own Output

Why important?

- Before: Developer runs code, checks results manually.

- Now: Cursor’s AI can open a browser inside the editor, run code, see output, fix issues.

- Enables self-testing loops without extra input.
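A self-testing loop of this kind reduces to generate, check, feed the failure back, and retry. The sketch below is a minimal illustration under that assumption. `self_test_loop`, `toy_generate`, and `toy_check` are hypothetical names, and the toy check only inspects a string where a real agent would load the page in a browser.

```python
from typing import Callable, Optional, Tuple


def self_test_loop(
    generate: Callable[[Optional[str]], str],
    check: Callable[[str], Tuple[bool, str]],
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Generate a candidate, check it, and feed failures back until it passes.

    Returns (candidate, rounds_used), or (None, max_rounds) if nothing passed.
    """
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        candidate = generate(feedback)
        passed, feedback = check(candidate)
        if passed:
            return candidate, round_no
    return None, max_rounds


# Toy stand-ins: the first draft has a typo, the retry fixes it once feedback arrives.
def toy_generate(feedback: Optional[str]) -> str:
    return "<h1>Todo</h1>" if feedback else "<h1>Tood</h1>"


def toy_check(page: str) -> Tuple[bool, str]:
    if "Todo" in page:
        return True, "ok"
    return False, "expected heading 'Todo' not found"


result, rounds = self_test_loop(toy_generate, toy_check)
print(result, rounds)  # <h1>Todo</h1> 2
```

The `max_rounds` cap matters in practice: each retry costs another round of inference, so an unbounded loop would turn a failing check into unbounded token spend.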

---

6. Crucial Engineering Updates

Security & Execution Boundaries:

- Sandboxed terminals by default.

- Commands run in isolated environments, which limits risk to the host system and its resources.

Team Collaboration:

- Team-level rules & shared commands.

- AI follows organization-wide coding standards and conventions.

New Interaction:

- Voice control for coding.

---

7. Community Reaction

Mixed feedback:

Positive:

- Paradigm shift from assistant to active agent.

- Interaction design is AI-native, not bolted onto legacy IDEs.

Concerns:

- High token consumption in multi-model setups.

- Pricing may deter individuals.

- Stability issues caused by the aggressive feature rollout.

Industry Outlook:

- The model “ceiling” won’t be broken easily by increasing parameter counts alone.

- IDE features converging: completion, cross-file rewriting, embedded browser, self-test loops, multi-model switching.

---

8. Linking Development & Content Ecosystems

Platforms like AiToEarn (official site) merge AI-driven development with multi-channel content publishing and monetization.

For developers, connecting code-generation agents to publishing/analytics opens new revenue streams alongside technical innovation.

The real business challenge is balancing throughput, accuracy, and token and time costs.

---

Final Takeaway

Cursor 2.0’s approach:

- Multi-agent execution with worktree isolation by default.

- Composer provides low latency and semantic understanding of the codebase.

- Cost and scheduling are controlled in-house.

It’s not just about stronger models — it’s about making the AI programming business work sustainably.

---
