Cursor launches its first coding LLM: 250 tokens/sec code generation with reinforcement learning + MoE architecture

Cursor 2.0 Launch: First Native Coding Model

Cursor has officially released Cursor 2.0, which debuts Composer, its first in-house large language model.

Earlier versions of Cursor relied on third-party models such as GPT and Claude; Cursor says Composer was developed and trained internally.

---

Why Composer is a Big Deal

According to Cursor:

- About 4× faster than competing models of similar capability.

- Most tasks completed in under 30 seconds.

- Purpose-built for low-latency coding and real-time reasoning in large, complex codebases.

---

New Features in Cursor 2.0

1. Native Browser Tool

Composer can:

- Test code

- Debug

- Iterate until results are correct

2. Voice-to-Code

Describe your idea aloud and Composer converts it directly into code.

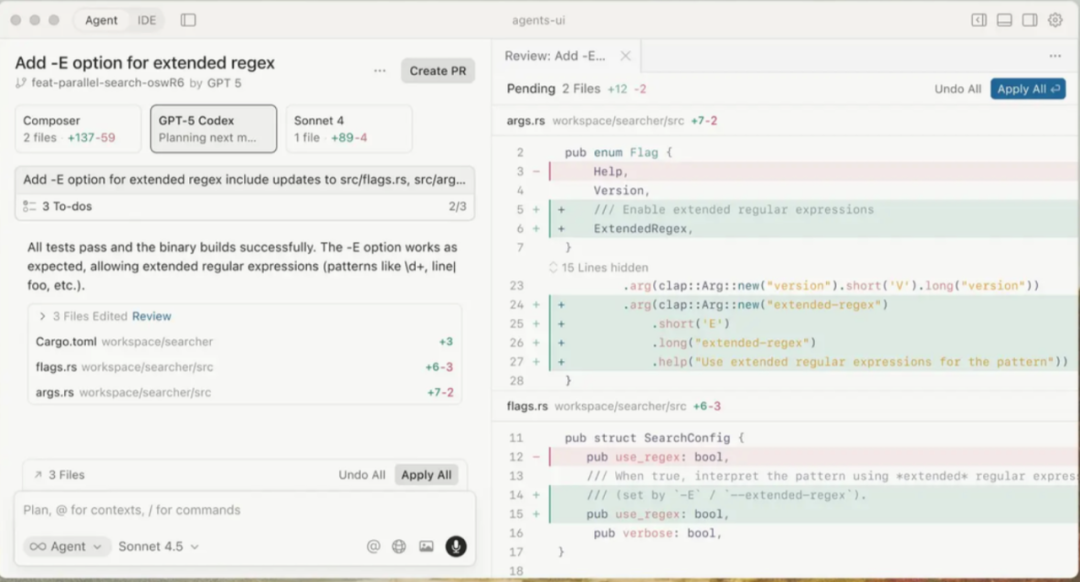

3. Agent-Centric Interface

Shift from file-centric workflows to agent-centric ones:

- Multiple agents run simultaneously

- Each agent acts independently

- Parallel problem-solving, with the best output picked automatically

> This multi-agent, parallel approach significantly boosts quality and efficiency.
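Cursor has not published how the best result is chosen. The following is a minimal Python sketch of the general best-of-N pattern, under the assumption that candidates are scored by how many unit tests they pass. The agents, `TESTS`, `score`, and `best_of_parallel` are hypothetical names for illustration, not Cursor APIs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple

# A candidate solution for a hypothetical task: implement add(a, b).
Candidate = Callable[[int, int], int]

# Hypothetical unit tests every candidate is judged against.
TESTS = [((1, 2), 3), ((0, 0), 0), ((-4, 4), 0), ((5, 7), 12)]


def agent_a(task: str) -> Candidate:
    return lambda a, b: a - b  # a buggy attempt


def agent_b(task: str) -> Candidate:
    return lambda a, b: a * b  # another buggy attempt


def agent_c(task: str) -> Candidate:
    return lambda a, b: a + b  # a correct attempt


def score(candidate: Candidate) -> int:
    """Assumed selection criterion: number of tests the candidate passes."""
    return sum(1 for args, expected in TESTS if candidate(*args) == expected)


def best_of_parallel(task: str, agents: List[Callable[[str], Candidate]]) -> Tuple[int, int]:
    """Run every agent concurrently on the same task, then keep the top-scoring result."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        candidates = list(pool.map(lambda agent: agent(task), agents))
    scores = [score(c) for c in candidates]
    best = max(range(len(candidates)), key=lambda i: scores[i])  # ties go to the first agent
    return best, scores[best]


best_index, best_score = best_of_parallel("implement add(a, b)", [agent_a, agent_b, agent_c])
print(f"picked agent {best_index} with {best_score}/{len(TESTS)} tests passing")
```

Here the third agent wins with all four tests passing. In practice the scoring signal could be anything from test results to a reviewer's choice; the test-count criterion is only an assumption made to keep the sketch self-contained.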

---

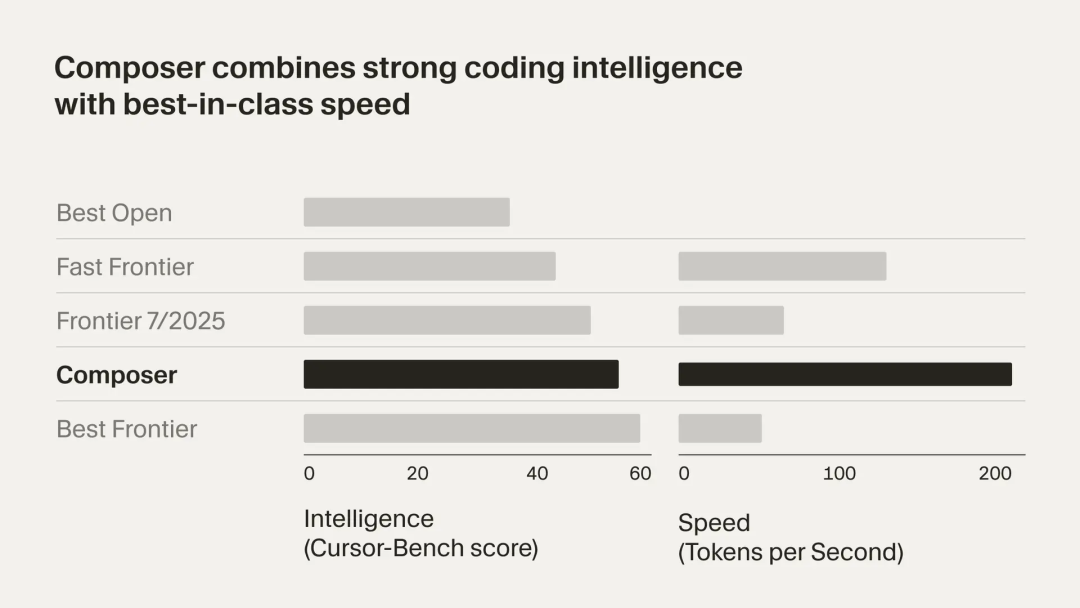

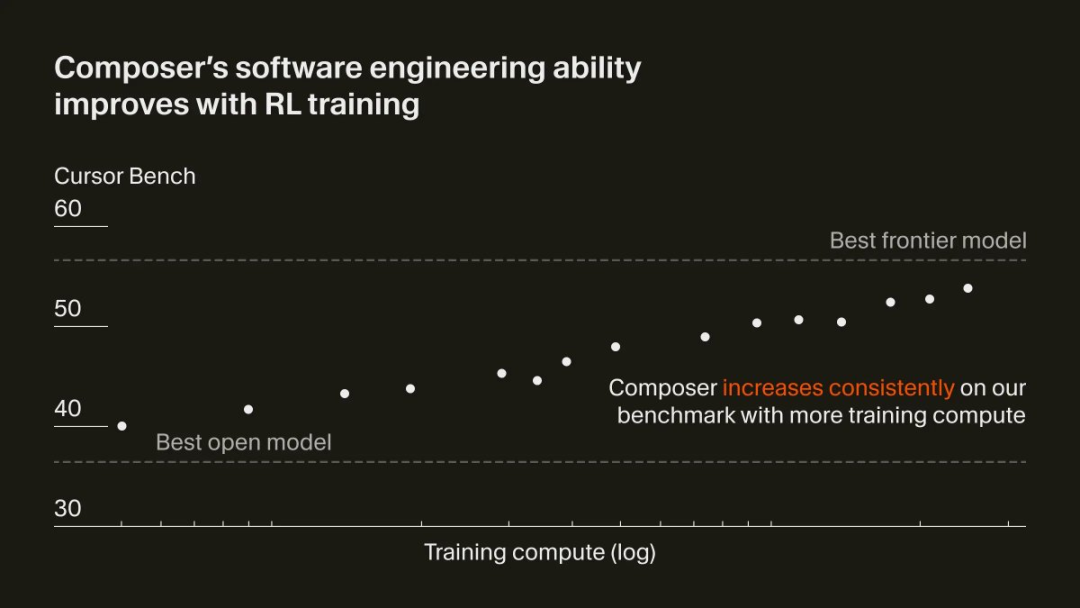

Performance Benchmarks

Tests were run on Cursor Bench, an internal benchmark mimicking real-world developer scenarios.

Key Metrics:

- Generation speed: 250 tokens/sec

  - ~2× faster than GPT-5 and Claude Sonnet 4.5

  - ~4× faster than many mainstream models

- Reasoning ability comparable to mid-tier frontier models

Beyond correctness, Cursor Bench also assesses:

- Consistency with the codebase's existing abstractions

- Adherence to style guides

- Compliance with engineering best practices
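To put the 250 tokens/sec figure in perspective: at a constant decoding rate, generation time is simply tokens divided by throughput. The sketch below assumes a hypothetical 2,000-token edit and ignores time to first token and tool calls; the slower rates are derived from the "~2×" and "~4×" ratios above, not from measured figures.

```python
def generation_seconds(num_tokens: int, tokens_per_sec: float) -> float:
    """Time to stream num_tokens at a constant decoding rate (no first-token latency)."""
    return num_tokens / tokens_per_sec


COMPOSER_TPS = 250.0  # throughput reported by Cursor
EDIT_TOKENS = 2_000   # hypothetical size of a multi-file edit

# Slower rates derived from the ~2x and ~4x ratios; not measured numbers.
for label, tps in [("Composer", COMPOSER_TPS),
                   ("~2x slower", COMPOSER_TPS / 2),
                   ("~4x slower", COMPOSER_TPS / 4)]:
    print(f"{label:>10}: {generation_seconds(EDIT_TOKENS, tps):.1f} s")
```

At these rates the same edit takes 8, 16, and 32 seconds respectively, which is why throughput matters most in agent loops that generate many turns in a row.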

---

Technology Behind Composer

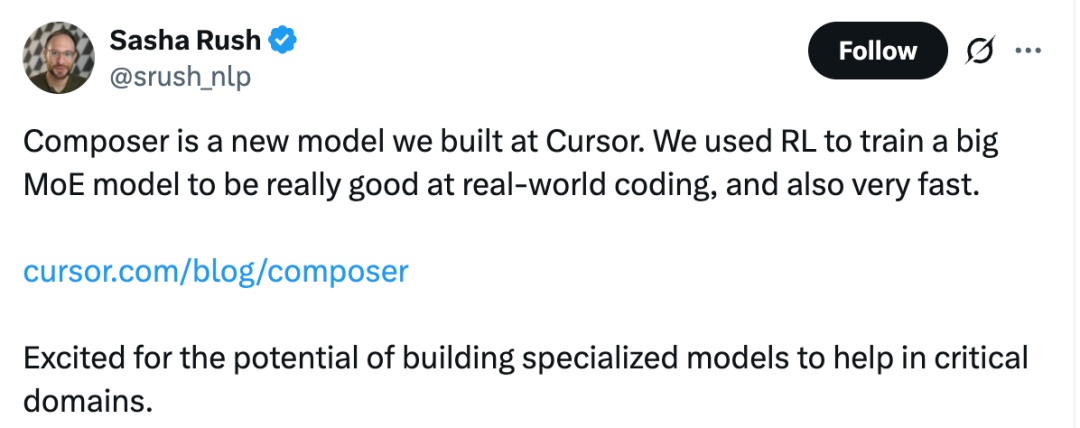

Cursor’s research scientist Sasha Rush attributes the performance leap to reinforcement learning (RL) combined with a Mixture of Experts (MoE) architecture.

> “We trained a large MoE model with reinforcement learning, tuned toward real programming tasks, and it runs extremely fast.”
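Cursor has not disclosed Composer's expert count or routing scheme. As a rough illustration of the general MoE idea, here is a minimal pure-Python sketch of one common variant, top-k gating: a gate scores every expert for a given input, only the k highest-scoring experts run, and their outputs are mixed with softmax weights. The `TopKMoE` class, expert matrices, and gate weights are toy assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def softmax(xs: Sequence[float]) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(w: Matrix, x: Sequence[float]) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


class TopKMoE:
    """Toy sparse MoE layer: each expert is a linear map; only the top-k experts run."""

    def __init__(self, experts: List[Matrix], gate: Matrix, k: int = 2):
        self.experts = experts  # one (d_out x d_in) matrix per expert
        self.gate = gate        # one row of gate weights per expert
        self.k = k

    def forward(self, x: Sequence[float]) -> Tuple[Vector, List[int], Vector]:
        scores = matvec(self.gate, x)  # one routing score per expert
        top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.k]
        weights = softmax([scores[i] for i in top])  # renormalise over selected experts only
        out = [0.0] * len(self.experts[top[0]])
        for w, i in zip(weights, top):
            y = matvec(self.experts[i], x)  # unselected experts are never evaluated
            out = [o + w * yi for o, yi in zip(out, y)]
        return out, top, weights


experts = [
    [[1.0, 0.0], [0.0, 1.0]],    # identity
    [[2.0, 0.0], [0.0, 2.0]],    # doubles the input
    [[0.0, 1.0], [1.0, 0.0]],    # swaps the two features
    [[-1.0, 0.0], [0.0, -1.0]],  # negates the input
]
gate = [[0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [0.5, 0.5]]
layer = TopKMoE(experts, gate, k=2)
out, top, weights = layer.forward([1.0, 1.0])
print(top, [round(w, 3) for w in weights], [round(v, 3) for v in out])
```

For the input `[1.0, 1.0]` the gate routes to experts 1 and 3, and experts 0 and 2 are skipped entirely. Because only k experts execute per token, compute per token stays well below what the total parameter count suggests, which is one reason MoE models can decode quickly.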

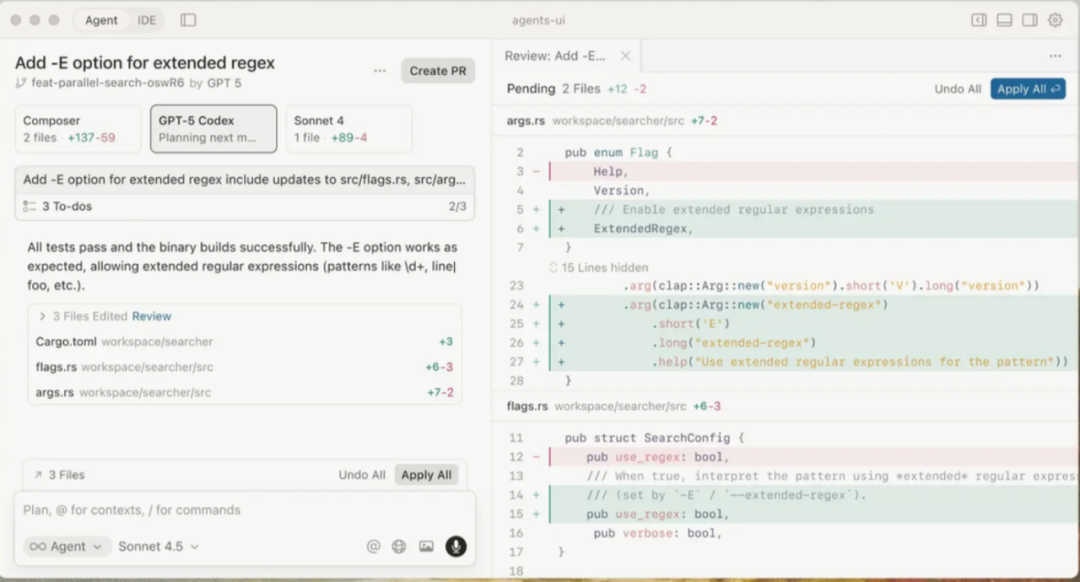

RL in a Real Environment

RL training took place in a working system rather than on static datasets alone, so that the model learns from the real effects of its actions.

Training included:

- Full Cursor environment access

- Tool execution (file editing, semantic search, terminal commands)

- Real engineering tasks (proposal drafting, code changes, debugging)

- Automated testing and iterative self-correction
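The loop described above (act in an environment, execute tests, learn from the outcome) can be illustrated with a toy policy-gradient example. This is a hypothetical sketch, not Cursor's training code: a softmax policy chooses among three hard-coded candidate implementations of `add(a, b)`, the environment runs unit tests, the pass rate is the reward, and REINFORCE with a moving-average baseline updates the policy. `CANDIDATES`, `TESTS`, `run_tests`, and `train` are illustrative names.

```python
import math
import random

# Hypothetical candidate edits the toy policy can choose between for add(a, b).
CANDIDATES = [
    lambda a, b: a - b,  # buggy
    lambda a, b: a * b,  # buggy
    lambda a, b: a + b,  # correct
]

# Hypothetical unit tests the environment runs after every action.
TESTS = [((1, 2), 3), ((0, 0), 0), ((-4, 4), 0), ((5, 7), 12)]


def run_tests(candidate) -> float:
    """Environment step: execute the tests and return the pass rate as the reward."""
    passed = sum(1 for args, expected in TESTS if candidate(*args) == expected)
    return passed / len(TESTS)


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(episodes: int = 500, lr: float = 0.5, seed: int = 0):
    """REINFORCE with a moving-average baseline over a softmax policy."""
    rng = random.Random(seed)
    logits = [0.0] * len(CANDIDATES)
    baseline = 0.0
    for _ in range(episodes):
        probs = softmax(logits)
        action = rng.choices(range(len(CANDIDATES)), weights=probs)[0]
        reward = run_tests(CANDIDATES[action])  # reward comes from executing code
        advantage = reward - baseline
        # Gradient of log softmax(logits)[action] w.r.t. logit i is 1[i == action] - probs[i].
        for i in range(len(logits)):
            indicator = 1.0 if i == action else 0.0
            logits[i] += lr * advantage * (indicator - probs[i])
        baseline = 0.9 * baseline + 0.1 * reward  # updated after use, so it is action-independent
    return softmax(logits)


final_probs = train()
print([round(p, 3) for p in final_probs])
```

Because the reward comes from running tests rather than from a reference label, the policy drifts toward whatever actually passes them. That is a plausible intuition for emergent habits like running unit tests unprompted, though a real coding agent acts over open-ended edits and tool calls, not three fixed choices.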

Emergent Behaviors:

- Running unit tests automatically

- Fixing code formatting errors

- Completing multi-step debugging workflows independently

---

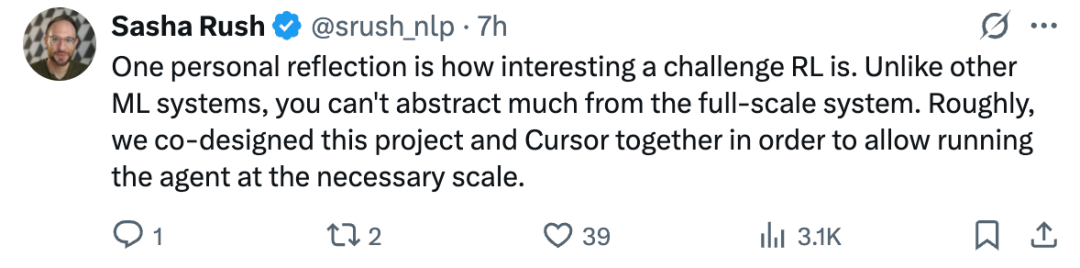

System Integration Advantage

> “We co-designed Composer with Cursor to ensure smooth operation at full real-world scale.”

Unlike general-purpose LLMs competing purely on capability, Composer benefits from deep application-side integration, aligning model function with IDE workflows.

---

Skepticism & Transparency Concerns

Cursor has described the RL stage in some detail, but the origin of the base model remains undisclosed.

Questions without answers:

- Is Composer pre-trained entirely by Cursor?

- Is it based on an open-source model?

- Was Cheetah, an earlier prototype, its foundation?

In the Hacker News discussion, Cursor researchers repeatedly sidestepped this question. Until there is official disclosure or independent verification, skepticism will persist.

---

References

- https://news.ycombinator.com/item?id=45748725

- https://simonwillison.net/2025/Oct/29/cursor-composer/

- https://venturebeat.com/ai/vibe-coding-platform-cursor-releases-first-in-house-llm-composer-promising

- https://cursor.com/cn/blog/2-0

- https://x.com/srush_nlp/status/1983572683355725869

- https://x.com/cursor_ai/status/1983567619946147967

---

Bottom line:

Composer in Cursor 2.0 is a fast coding model built directly into the IDE, trained with reinforcement learning inside the same tools and environment developers use.

But until Cursor discloses which base model Composer was built on, questions about its origins will remain.