# **Google’s Comeback: Inside Gemini 3’s Rise and Impact**

Google’s newly launched **Gemini 3** is shaking up Silicon Valley’s AI landscape. At a time when OpenAI and Anthropic are locked in fierce competition, Google — boosted by deep infrastructure capabilities and a native multimodal approach — has shifted from *follower* to **leader**.

This release marks not only a leap forward in multimodal capability but also Google’s most aggressive application of the **Scaling Law** to date.

On **November 20**, *Silicon Valley 101* hosted a livestream with four frontline AI experts:

- **Tian Yuandong** — Former Meta FAIR Research Director, AI Scientist

- **Chen Yubei** — Assistant Professor at UC Davis, Co‑founder of Aizip

- **Gavin Wang** — Former Meta AI Engineer, worked on Llama 3 post‑training and multimodal inference

- **Nathan Wang** — Senior AI Developer, Special Research Fellow at *Silicon Valley 101*

The discussion tackled big questions:

- **What makes Gemini 3 truly powerful?**

- **What did Google get right?**

- **How will global LLM competition evolve?**

- **Where are LLMs headed — and beyond them, what’s next?**

Below is a condensed recap. For the full session, see the replay on **YouTube** or **Bilibili**.

---

## 1. Hands‑on Testing: **Standout Strengths**

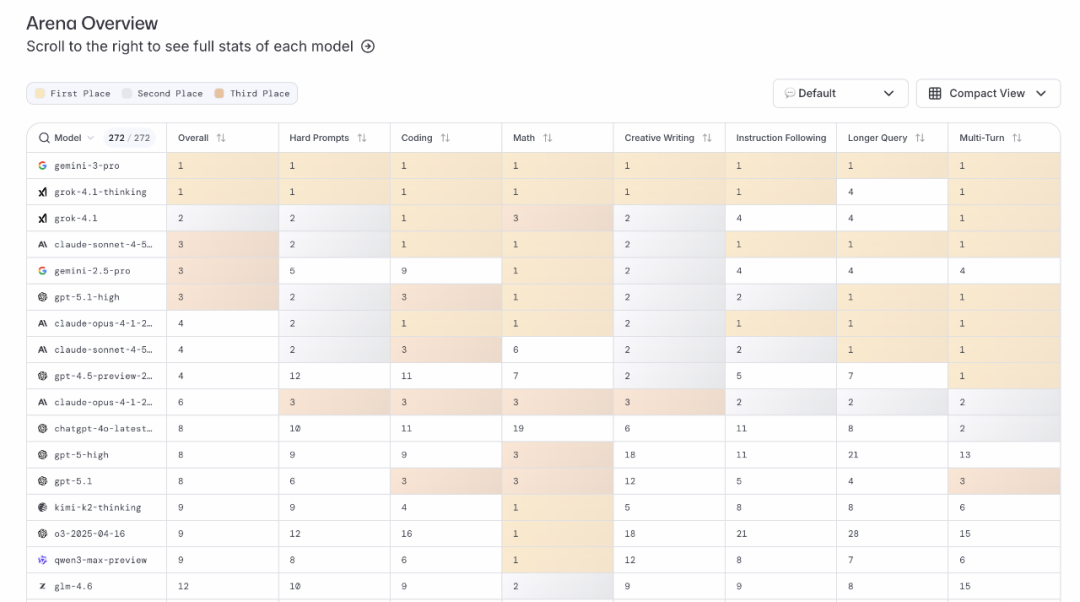

Within 48 hours of release, Gemini 3 was topping leaderboard benchmarks. Unlike earlier models that improved in just one area (e.g. code, text), Gemini 3 is authentically **native multimodal**.

### Developer Impressions

**Source:** LM Arena

**Nathan Wang:**

I tested three products:

1. **Gemini app**

2. **Google AntiGravity** (developer IDE)

3. **Nano Banana Pro** (newly launched today)

AntiGravity feels built for the *Agentic* era:

- **Manager View + Editor View** — watch 8–10 agent assistants split tasks (e.g., code writing, unit testing).

- **Browser Use integration** — can open Chrome, “see” a page, click buttons, upload files.

This makes automated testing and development seamless. Features like *Screenshot Pro* deliver end‑to‑end functionality across vision, code, and logic, something other tools haven’t matched.

**Nano Banana Pro** impressed me with slide generation:

- Maintains logical flow across complex topics (e.g., Gemini’s evolution 1.0 → 3.0).

- Generates advanced charts inline.

This could eventually replace today’s presentation software such as PowerPoint.

---

### Storytelling & Creativity Benchmarks

**Tian Yuandong:**

I use a personal “novel continuation” benchmark. Older models wrote flat, formal prose. Gemini 2.5 improved descriptions but lacked plot twists.

**Gemini 3** surprised me:

- Engaging plot structures, twists, and character interactions.

- Ideas worth saving for actual writing — for the first time, it sparked narrative inspiration.

In scientific work, it’s still like a sharp but *new* Ph.D. student: broadly knowledgeable, quick to recall tools, but lacking deep intuition for research direction.

---

### ARC‑AGI‑2 Leap

**Gavin Wang:**

ARC‑AGI‑2 tests few‑shot and meta‑learning ability rather than recall of memorized data. Most models scored in the single digits; Gemini 3 hit ~30%. The jump is likely due to true **multimodal reasoning**, the result of training vision, code, and language together.

---

### Limits in Real‑World Vision

**Chen Yubei:**

Feedback from our Vision Group showed that Gemini 3 performs worse than its predecessor on real‑world video analysis, likely because such cases were barely represented in the benchmarks it was trained against.

---

**Takeaway:** Gemini 3 excels in logical content generation and cross‑modal reasoning, but has gaps in nuanced, real‑world visual tasks.

---

## 2. Google’s Technical Edge

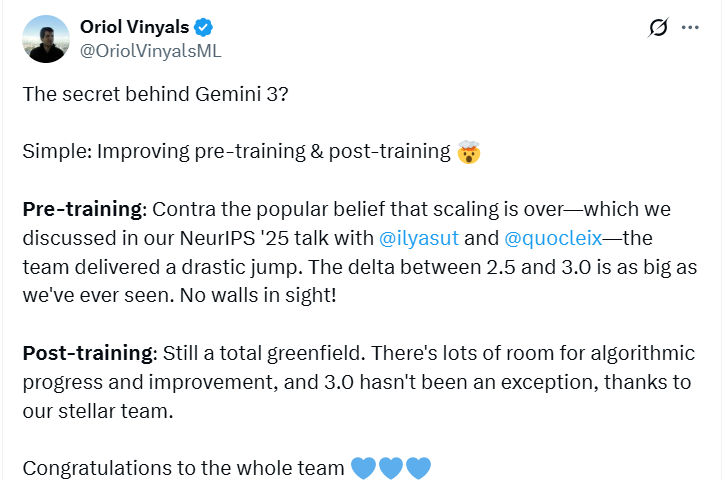

At launch, the Gemini lead cited “improved pre‑training and post‑training.” But is this secret sauce algorithmic brilliance or raw computational heft?

### Engineering vs. Brute Force

**Tian Yuandong:**

The phrase is vague — in reality it’s comprehensive systems engineering: better data, tuning, stability, and fixing pipeline bugs. At Google’s scale, removing inefficiencies lets the **Scaling Law** shine.

---

**Gavin Wang:**

Gemini 3 described its own method as **Tree of Thoughts** plus self‑rewarding scores. Parallel reasoning paths are scored internally and the weak ones are pruned, in contrast to the single linear path of earlier chain‑of‑thought prompting.

Integrated with Mixture‑of‑Experts (MoE) and search, this embeds advanced reasoning flows *within* the model.
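The branch, score, and prune loop described above can be illustrated with a toy search. This is only a minimal sketch of the general Tree of Thoughts idea, not Gemini 3's actual implementation, which is not public. The move set, the `self_score` function, and the number puzzle are all hypothetical stand-ins for a model proposing and grading its own reasoning steps.

```python
from typing import Callable, List, Tuple

# A "thought" is a partial solution: the current value plus the steps taken so far.
Thought = Tuple[int, List[str]]

# Hypothetical move set standing in for a model proposing next reasoning steps.
MOVES: List[Tuple[str, Callable[[int], int]]] = [
    ("+3", lambda x: x + 3),
    ("*2", lambda x: x * 2),
    ("-1", lambda x: x - 1),
]


def expand(thought: Thought) -> List[Thought]:
    """Branch one thought into all of its candidate continuations."""
    value, steps = thought
    return [(fn(value), steps + [name]) for name, fn in MOVES]


def self_score(thought: Thought, target: int) -> float:
    """Stand-in for a self-assigned reward: closer to the target scores higher."""
    return -abs(target - thought[0])


def tree_of_thoughts(start: int, target: int,
                     beam_width: int = 3, max_depth: int = 6) -> Thought:
    """Expand every surviving branch, score the children, keep only the best few."""
    frontier: List[Thought] = [(start, [])]
    best = frontier[0]
    for _ in range(max_depth):
        candidates = [child for t in frontier for child in expand(t)]
        candidates.sort(key=lambda t: self_score(t, target), reverse=True)
        frontier = candidates[:beam_width]  # prune the low-scoring paths
        if self_score(frontier[0], target) > self_score(best, target):
            best = frontier[0]
        if best[0] == target:
            break
    return best


value, steps = tree_of_thoughts(start=1, target=20)
print(value, steps)
```

In this toy setting, `beam_width=1` behaves like a single greedy chain and stalls at 19, while keeping three parallel branches reaches the target of 20. That is the intuition behind scoring and pruning several paths instead of committing to one.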

---

**Nathan Wang:**

API docs contain a hint: *“Context Engineering is a way to go.”* This likely means Gemini automates retrieval of rich contextual data before answering — building an informed reasoning environment without explicit prompts.
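One way to picture "retrieve context first, then answer" is the sketch below. It is an illustration under loud assumptions: the snippet store, the word-overlap ranking, and the prompt layout are all hypothetical and are not how Gemini or its API is documented to work. A production system would more plausibly use search or embedding-based retrieval.

```python
import re
from typing import List, Set

# Hypothetical snippet store; the facts are taken from this recap for illustration.
DOCUMENTS = [
    "AntiGravity is a developer IDE with a Manager View and an Editor View.",
    "Nano Banana Pro generates slides and inline charts.",
    "Gemini 3 scored roughly 30% on ARC-AGI-2.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by word overlap with the question and drop non-matches."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question: str, documents: List[str]) -> str:
    """Assemble the retrieved context ahead of the question, without user effort."""
    context = retrieve(question, documents)
    lines = ["Context:"] + [f"- {c}" for c in context] + ["", f"Question: {question}"]
    return "\n".join(lines)


prompt = build_prompt("What is AntiGravity?", DOCUMENTS)
print(prompt)
```

The user only types the question; the surrounding context is gathered and attached automatically, which is the behavior the hint seems to point at.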

---

**Chen Yubei:**

Hardware matters: Google’s TPU vertical integration avoids NVIDIA’s > 70% margins, letting them train bigger, more complex multimodal models within the same budget. This hardware moat pressures competitors.
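The budget argument can be made concrete with a little arithmetic. The sketch below rests on a strong simplifying assumption: that in-house TPU compute costs about what a vendor's hardware costs to produce, ignoring chip R&D, networking, and power. Under that assumption, a gross margin `m` means the list price is `cost / (1 - m)`, so a buyer paying at cost gets `1 / (1 - m)` times as much hardware for the same budget.

```python
def compute_multiplier(gross_margin: float) -> float:
    """Hardware bought at cost versus at list price, for the same budget.

    gross_margin is (price - cost) / price, so price = cost / (1 - gross_margin).
    """
    if not 0.0 <= gross_margin < 1.0:
        raise ValueError("gross_margin must be in [0, 1)")
    return 1.0 / (1.0 - gross_margin)


# 70% is the lower bound quoted in the discussion, so this is a conservative figure.
multiplier = compute_multiplier(0.70)
print(f"{multiplier:.2f}x hardware for the same budget")
```

At a 70% margin the multiplier is a little over 3x, which gives a sense of scale for the "same budget, bigger model" claim.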

---

## 3. Developer Ecosystem: **Coding War Over?**

Social media buzz claims that AntiGravity, combined with Gemini 3’s dominance on SWE‑bench, “ends” the coding war.

**Gavin Wang:**

AntiGravity integrates visual inputs with code outputs — AI can “see” the UI and modify code accordingly. But opportunities remain for startups to innovate around business models, specialized workflows, and industry niches.

---

**Nathan Wang:**

AntiGravity shines for front‑end generation, but stalls in backend deployment and complex architectures. Privacy concerns may keep firms with proprietary code away from full integration into Google’s ecosystem.

---

**Tian Yuandong:**

Professional devs judge on *Instruction Following*, not just flashy demos. Examples like reversed arrow keys in generated FPS games show that small bugs can be fatal in production — Gemini 3 lowers barriers but isn’t a complete replacement yet.

---

## 4. Beyond LLMs: **Post‑Scaling Paradigms**

NeoLab startups like Reflection AI and Periodic Labs are attracting funding while exploring paths beyond traditional LLM scaling.

---

### Interpretability & Efficiency

**Tian Yuandong:**

Scaling forever will exhaust compute and resources. Understanding emergence from first principles could yield breakthroughs without gradient descent. AI is already accelerating such research — e.g., instant coding and validation of ideas.

---

**Chen Yubei:**

Nature’s paradox: smarter intelligence learns more from less data. Humans are exposed to fewer than 10B tokens before age 13, yet current models train on trillions. In the future, “large” should refer to architectural complexity, not just dataset size.
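To get a feel for the gap, here is a back-of-the-envelope comparison. The 10B figure is the upper bound quoted above. The 10-trillion-token corpus is a hypothetical value chosen only to stand in for "trillions"; it does not describe any specific model.

```python
HUMAN_TOKENS_BY_13 = 10_000_000_000       # upper bound quoted in the discussion
MODEL_TOKENS = 10_000_000_000_000         # hypothetical corpus size (assumption)

# How many times more data the hypothetical model sees than a 13-year-old.
ratio = MODEL_TOKENS / HUMAN_TOKENS_BY_13

# Daily exposure implied by the human upper bound, spread evenly over 13 years.
tokens_per_day = HUMAN_TOKENS_BY_13 / (13 * 365)

print(f"data ratio: {ratio:,.0f}x")
print(f"implied human exposure: {tokens_per_day:,.0f} tokens/day at most")
```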

---

### World Models & Edge AI

**Gavin Wang:**

Next frontier: **World Models** (physics‑aware AI). Approaches:

1. **Video‑based** (e.g., Genie 3) — simulates 3D worlds from 2D footage.

2. **Mesh/physics‑based** — collision volumes, physics simulation.

3. **Point‑cloud encoding** — e.g., Gaussian Splatting.

I also advocate **small edge models** to avoid digital centralization, so that high‑performance AI can run offline on personal devices.

---

## 5. Bubble or Singularity?

Gemini 3 is Google’s rebuttal to “AI bubble” claims — showing Scaling Law’s continued power when paired with compute, data, and engineering.

But scaling isn’t the only path to AGI. Guests stressed exploring deeper algorithms, novel architectures, and AI decentralization.

---

**Follow Silicon Valley 101** for livestreams on **Bilibili** and **YouTube**.

**Channels:**

Domestic: Bilibili | Tencent | WeChat Video | Xigua | Toutiao | Baijiahao | 36kr | Weibo | Huxiu

Overseas: YouTube

**Contact:** video@sv101.net

