# DeepSeek V3.2 Official Release — Enhanced Reasoning & Agent Capabilities

DeepSeek has shipped another update: the **official V3.2 release**, the latest step in a **V3 series that has now been iterating for a full year**.

---

## Overview

At the end of September, DeepSeek launched the experimental model **DeepSeek‑V3.2‑Exp**, which builds on V3.1‑Terminus and adds **DeepSeek Sparse Attention (DSA)** to make long-context processing significantly more efficient.
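To give a feel for why sparse attention can help with long inputs, here is a minimal pure-Python sketch of generic top-k sparse attention for a single query. It is not DSA's actual mechanism (the technical report describes that); the `attend` function, its `top_k` parameter, and the toy vectors are illustrative assumptions. The sketch also selects keys using the full attention scores, which would not save compute in practice; it only shows the idea of restricting the softmax to a small subset of tokens.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q, keys, values, top_k=None):
    """Single-query scaled dot-product attention.

    If top_k is given, only the top_k highest-scoring keys take part in the
    softmax (a generic sparse-attention idea, not DeepSeek's implementation).
    """
    scale = math.sqrt(len(q))
    scores = [sum(qi * ki for qi, ki in zip(q, key)) / scale for key in keys]
    idx = list(range(len(keys)))
    if top_k is not None:
        # Keep only the indices of the top_k largest scores.
        idx = sorted(idx, key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in idx])
    dim = len(values[0])
    return [sum(w * values[i][j] for w, i in zip(weights, idx)) for j in range(dim)]

# Toy data: three tokens, two-dimensional keys and values.
q = [1.0, 0.0]
keys = [[4.0, 0.0], [0.0, 1.0], [-4.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]

dense = attend(q, keys, values)            # attends to all three tokens
sparse = attend(q, keys, values, top_k=1)  # attends only to the best match
```

With `top_k` equal to the sequence length the result matches dense attention; smaller values trade a little accuracy for less work per query.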

Today, **two official versions** have been released:

- **DeepSeek‑V3.2** — balancing reasoning and usability

- **DeepSeek‑V3.2‑Speciale** — long-thinking enhanced edition with superior reasoning

**Highlights:**

- **Speciale edition:** Open‑source model with Gemini‑3.0‑Pro‑level performance on reasoning benchmarks (IMO 2025, CMO 2025).

- **DeepSeek‑Math‑V2:** Math-specialized variant built on V3.2‑Exp‑Base, achieving gold-medal level at IMO.

- **Reasoning integrated into tool calls:** DeepSeek‑V3.2 can invoke tools in both reasoning and non‑reasoning modes.

> **Key Achievement:** On agent tool‑call benchmarks, **DeepSeek‑V3.2** scores highest among open‑source models and generalizes well to real-world scenarios.

**Access:**

- **DeepSeek‑V3.2 official version:** Web, app, and API

- **Speciale edition:** Available only via a temporary API, intended for research use (no tool calls)

**Technical Report:**

[https://modelscope.cn/models/deepseek-ai/DeepSeek-V3.2/resolve/master/assets/paper.pdf](https://modelscope.cn/models/deepseek-ai/DeepSeek-V3.2/resolve/master/assets/paper.pdf)

---

## 01 — A Year of V3 Iterations (V4 Still Unreleased)

DeepSeek V3 launched on **Dec 25 last year**, followed by **R1** on **Jan 20 this year**, setting off a wave of open‑source model releases from Kimi, MiniMax, and others.

**Enhancement areas across V3 updates:**

- **MoE architecture refinements** — Reinforcement + DSA

- **Agent tool usage improvements** — From V3.1’s upgrades to V3.2’s reasoning‑mode tool calls

- **Unified reasoning/non‑reasoning models:** in line with the direction taken by Gemini, Claude, and GPT‑5

*DeepSeek 2025 release roadmap*

---

## Industry Context

Advancements in reasoning & tool usage align with a growing need for **cross‑platform creator publishing**.

Platforms like **[AiToEarn (official site)](https://aitoearn.ai/)** provide **open-source tools** for:

- AI content generation

- Cross-platform publishing (Douyin, Kwai, WeChat, Bilibili, Xiaohongshu, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X/Twitter)

- Analytics & model ranking

These turn models like DeepSeek into **practical content production engines**.

### Speciale Edition Testing

Similar to V3.2‑Exp, Speciale is a **long-thinking enhanced edition** that integrates theorem-proving from **DeepSeek‑Math‑V2**. This likely signals a future **V3.3 iteration** built on Speciale’s capabilities.

*Speculation:* While rumors suggest DeepSeek V4 or R2 on the horizon, continued focus on agent tools within V3 could lead to next-year breakthroughs in **multimodality, longer context, or advanced agent functionality**.

---

## 02 — DeepSeek‑V3.2 Official Release

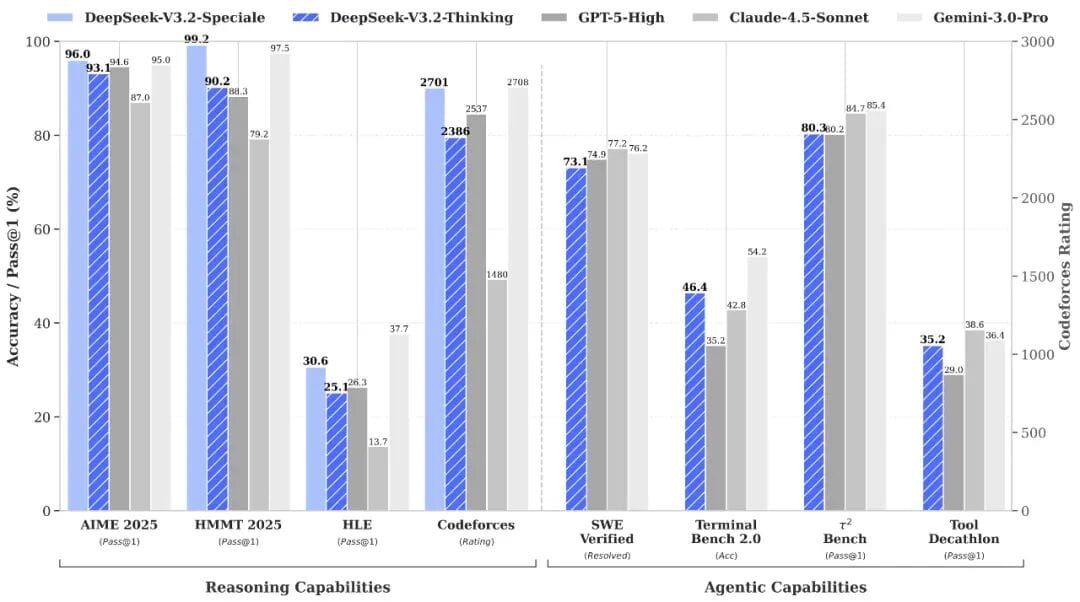

### Performance: GPT‑5-Level Reasoning

**V3.2 goals:**

- Balance reasoning performance with efficient output length

- Serve common Q&A and agent task workflows

**Comparisons:**

- Near GPT‑5 reasoning performance

- Slightly below Gemini‑3.0‑Pro

- Lower compute cost and shorter wait times than Kimi‑K2‑Thinking

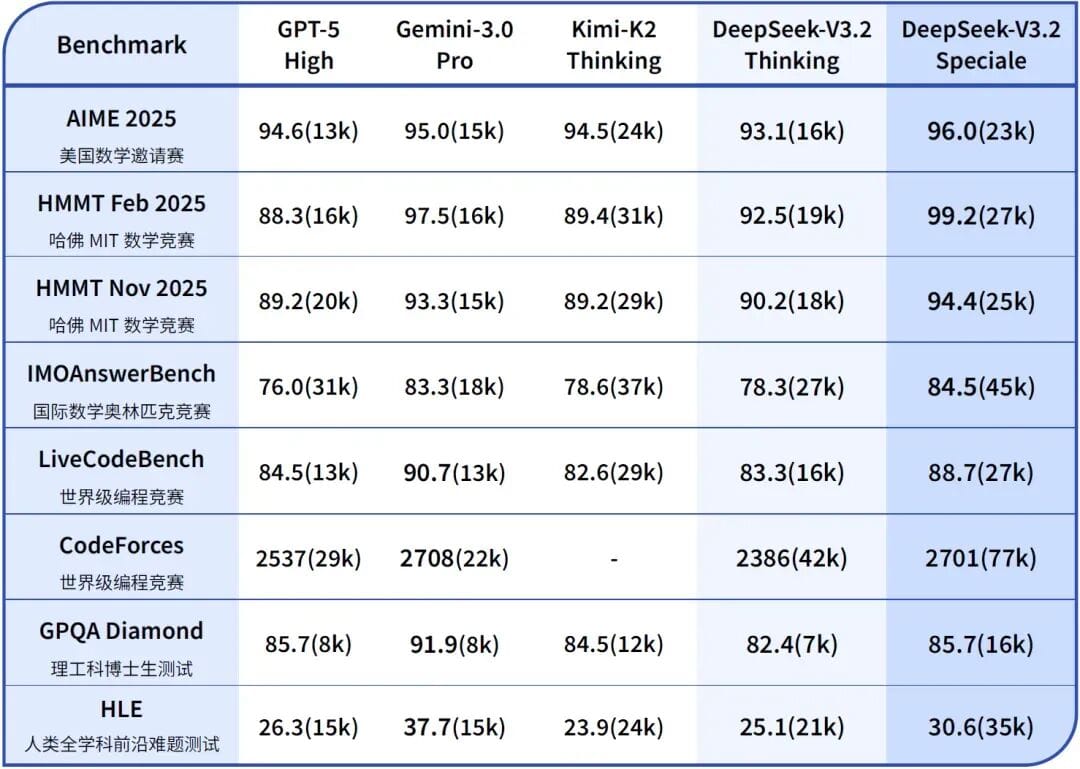

**Speciale Edition:**

- Integrates **DeepSeek‑Math‑V2** theorem‑proving

- Matches Gemini‑3.0‑Pro in reasoning benchmarks

- Gold medals in IMO & ICPC; top 10 in IOI

- Optimized for **complex tasks** — higher token use, costlier runs

- Research‑only — **no tool invocation** and not tuned for casual chat

*Evaluation scores across mathematics, coding, and general domains (token usage in parentheses)*

---

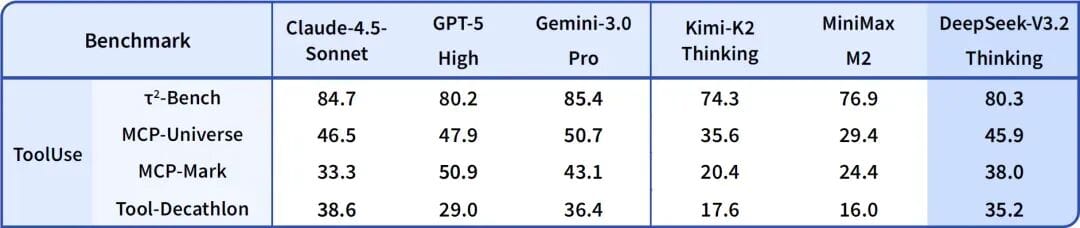

## 03 — Tool Invocation with Integrated Thinking

One major update: **Reasoning integrated into tool calls**.

**Modes available:**

- Reasoning‑mode tool calls

- Non‑reasoning‑mode tool calls
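A minimal sketch of what a tool-call round trip can look like, assuming an OpenAI-style chat-completions schema (`tools`, `tool_calls`, and a `"tool"` role message). The model identifiers, the `get_weather` tool, and the simulated assistant turn are hypothetical placeholders rather than confirmed DeepSeek API values, and no network request is made.

```python
import json

def get_weather(city: str) -> str:
    """Hypothetical local tool; returns a canned answer for illustration."""
    return f"Sunny in {city}"

# Registry mapping tool names to local Python callables.
TOOLS = {"get_weather": get_weather}

# JSON-schema description of the tool, in the OpenAI-style "tools" format.
TOOL_SCHEMAS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

def build_request(model, messages):
    """Assemble a chat-completions payload with the tool schemas attached."""
    return {"model": model, "messages": messages, "tools": TOOL_SCHEMAS}

def run_tool_call(tool_call):
    """Execute one tool call and build the 'tool' message to send back."""
    fn = TOOLS[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": fn(**args)}

messages = [{"role": "user", "content": "What is the weather in Hangzhou?"}]

# The same payload shape would serve either mode; only the model id changes.
# Both ids below are placeholders (assumptions), not real model names.
reasoning_request = build_request("<reasoning-model-id>", messages)
plain_request = build_request("<non-reasoning-model-id>", messages)

# Simulated assistant turn that asks for a tool (what a model might return).
simulated_call = {
    "id": "call_0",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Hangzhou"}'},
}
reply = run_tool_call(simulated_call)
```

In a real loop, `reply` would be appended to `messages` and the conversation sent back to the model, which either requests another tool or produces the final answer.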

**Training Approach:**

- Large-scale Agent training data synthesis

- “Hard to answer, easy to verify” tasks improve generalization
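The "hard to answer, easy to verify" idea can be illustrated with a toy task. The sketch below uses subset-sum, where finding a solution takes an exponential brute-force search but checking a proposed solution is a single pass. The task choice, the function names, and the binary `reward` are assumptions made for illustration; the article does not describe DeepSeek's actual tasks or reward design.

```python
from itertools import combinations

def verify(numbers, target, candidate):
    """Cheap check: indices are distinct, in range, and their values sum to target."""
    return (
        len(set(candidate)) == len(candidate)
        and all(0 <= i < len(numbers) for i in candidate)
        and sum(numbers[i] for i in candidate) == target
    )

def solve(numbers, target):
    """Expensive brute-force search over all non-empty subsets (exponential)."""
    for r in range(1, len(numbers) + 1):
        for combo in combinations(range(len(numbers)), r):
            if sum(numbers[i] for i in combo) == target:
                return list(combo)
    return None

def reward(numbers, target, candidate):
    """Binary score of the kind an automatic grader could assign (assumption)."""
    return 1.0 if candidate is not None and verify(numbers, target, candidate) else 0.0

numbers = [3, 34, 4, 12, 5, 2]
target = 9

found = solve(numbers, target)              # costly to produce
good = reward(numbers, target, found)       # cheap to check
bad = reward(numbers, target, [0, 1])       # 3 + 34 != 9, so this is rejected
```

Because the checker is cheap and unambiguous, tasks of this shape can be generated and graded at scale without human labeling.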

*Tool invocation benchmark performance — DeepSeek‑V3.2 leads open-source models*

---

## Ecosystem Integration

**AiToEarn** ([official site](https://aitoearn.ai/) / [source repository](https://github.com/yikart/AiToEarn)):

Enables creators to:

1. Generate AI content

2. Cross‑publish instantly to **multiple platforms**

3. Track analytics & monetize efficiently

By combining **DeepSeek‑V3.2’s reasoning + tools** with AiToEarn’s publishing network, creators can dramatically **scale AI-driven content strategies**.

**Benchmark claim:** Among open‑source models, DeepSeek‑V3.2 achieves **top performance** in tool invocation, narrowing the gap with closed‑source leaders, and it does so without any special training on the test sets.

*Example: LobeChat + DeepSeek‑V3.2 reasoning + tools for more detailed, accurate responses*

---

### Final Takeaway

DeepSeek‑V3.2 marks a significant step in unifying advanced reasoning, efficient output, and integrated agent tools — all within open source.

With releases like Speciale and supporting platforms like AiToEarn, the potential applications extend from **research excellence** to **real‑world content production and monetization**.

---