Douyin Multimedia Quality Lab's EvalMuse Accepted to AAAI 2026, Defining a New Framework for T2I Evaluation

Conference Background

The AAAI 2026 organizing committee has sent out its paper acceptance notifications. AAAI is one of the most prestigious annual conferences in artificial intelligence.

- Submissions: 23,680

- Accepted: 4,167

- Acceptance Rate: 17.6%

The AAAI Conference was first held in 1980 and now takes place annually. The 40th edition, AAAI 2026, will be held January 20–27, 2026, at the Singapore Expo.

"EvalMuse-40K: A Reliable and Fine-Grained Benchmark with Comprehensive Human Annotations for Text-to-Image Generation Model Evaluation", a joint research project by Douyin Multimedia Quality Lab and Nankai University, has been accepted to AAAI 2026.

Links:

- Paper: https://arxiv.org/abs/2412.18150

- Project Page: https://shh-han.github.io/EvalMuse-project/

---

EvalMuse — Industry-Leading Fine-Grained T2I Evaluation Framework

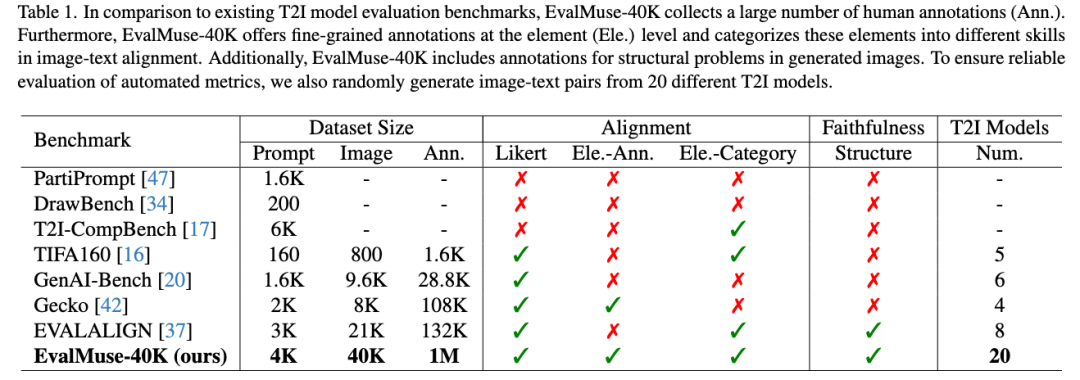

EvalMuse-40K is a benchmark designed to evaluate image–text alignment in text-to-image (T2I) models accurately and under realistic usage conditions. It features:

- 40,000 image–text pairs

- Over 1 million fine-grained human annotations

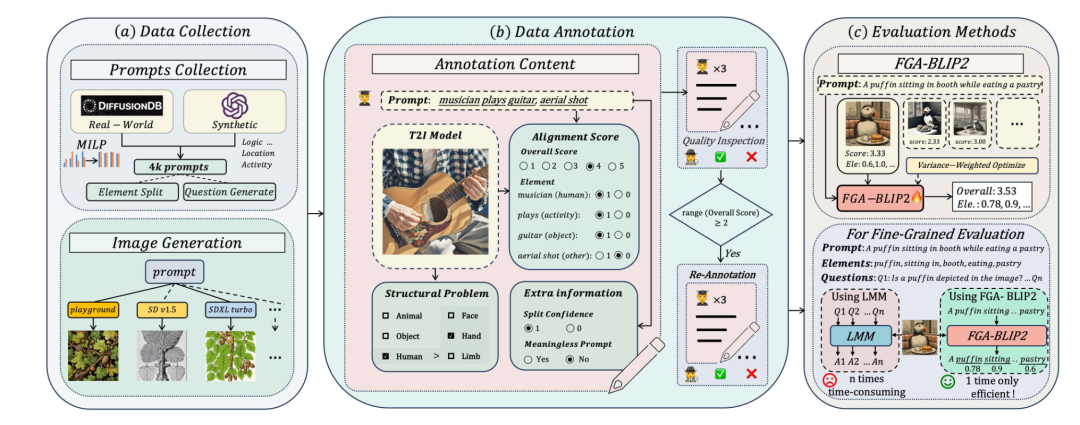

Dataset Construction Process

- Prompt Collection

  - 2,000 real-world prompts from DiffusionDB to capture authentic user needs.

  - 2,000 synthetic prompts covering attributes such as object count, color, material, environment, and activity.

- Image Generation

  - 40,000 images created using 20 different diffusion models for diversity.

- Annotation Workflow

  - Three stages: Pre-annotation → Formal annotation → Re-annotation.

  - Tasks: alignment scoring, element matching, and structural issue tagging.
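
Synthetic prompts are useful for fine-grained evaluation because each attribute slot maps to an element an annotator can check. The section does not say how the 2,000 synthetic prompts were produced, so the sketch below assumes simple template filling (the paper's actual method may differ). The slot values and the helpers `build_prompt` and `generate_prompts` are hypothetical, and only a subset of the listed attribute categories is used.

```python
import itertools
from dataclasses import dataclass

# Illustrative slot values for some of the attribute categories listed above
# (count, color, material, environment). These are placeholders, not the
# EvalMuse-40K vocabulary; activity is omitted to keep the template short.
COUNTS = ["one", "three"]
COLORS = ["red", "blue"]
MATERIALS = ["wooden", "glass"]
OBJECTS = ["chair", "cup"]
ENVIRONMENTS = ["on a beach", "in a kitchen"]


@dataclass
class SyntheticPrompt:
    text: str
    elements: list[str]  # phrases an annotator would check one at a time


def build_prompt(count: str, color: str, material: str, obj: str, env: str) -> SyntheticPrompt:
    """Fill a fixed template and record the element each slot contributes."""
    noun = obj if count == "one" else obj + "s"  # naive plural, fine for these nouns
    text = f"{count} {color} {material} {noun} {env}"
    return SyntheticPrompt(text=text, elements=[count, color, material, noun, env])


def generate_prompts(limit: int) -> list[SyntheticPrompt]:
    """Enumerate slot combinations (last slot varies fastest), up to `limit` prompts."""
    combos = itertools.product(COUNTS, COLORS, MATERIALS, OBJECTS, ENVIRONMENTS)
    return [build_prompt(*combo) for combo in itertools.islice(combos, limit)]


for prompt in generate_prompts(2):
    print(prompt.text, prompt.elements)
```

Keeping the element list alongside the prompt text means element-level annotation tasks can be created directly from the generator's output instead of re-parsing the prompt afterwards.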

Key Advantages Over Existing Benchmarks

- Larger dataset with more detailed annotations.

- Extensive diversity across prompts and generated images.

- Reliability ensured via multi-stage human annotation.
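
The section does not specify what sends an item to the re-annotation stage. One common rule, assumed here purely for illustration, is to flag items whose annotators disagree too much and keep the mean score of the rest. The 1-5 score scale, the `MAX_STD` threshold, and the `triage` helper are all hypothetical.

```python
from statistics import mean, pstdev

# Hypothetical threshold on a 1-5 alignment scale: items whose annotator
# scores have a population standard deviation above it are sent back.
MAX_STD = 1.0


def triage(scores_by_item: dict[str, list[int]], max_std: float = MAX_STD):
    """Split items into (accepted mean scores, ids flagged for re-annotation)."""
    accepted: dict[str, float] = {}
    flagged: list[str] = []
    for item_id, scores in scores_by_item.items():
        if len(scores) < 2 or pstdev(scores) > max_std:
            # A single rating or strong disagreement is not trusted yet.
            flagged.append(item_id)
        else:
            accepted[item_id] = mean(scores)
    return accepted, flagged


accepted, flagged = triage({"img-001": [4, 4, 5], "img-002": [1, 5, 3], "img-003": [3]})
```

Here `img-001` is kept with a mean of about 4.33, while `img-002` (scores spread from 1 to 5) and `img-003` (only one rating) are flagged for another round.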

---

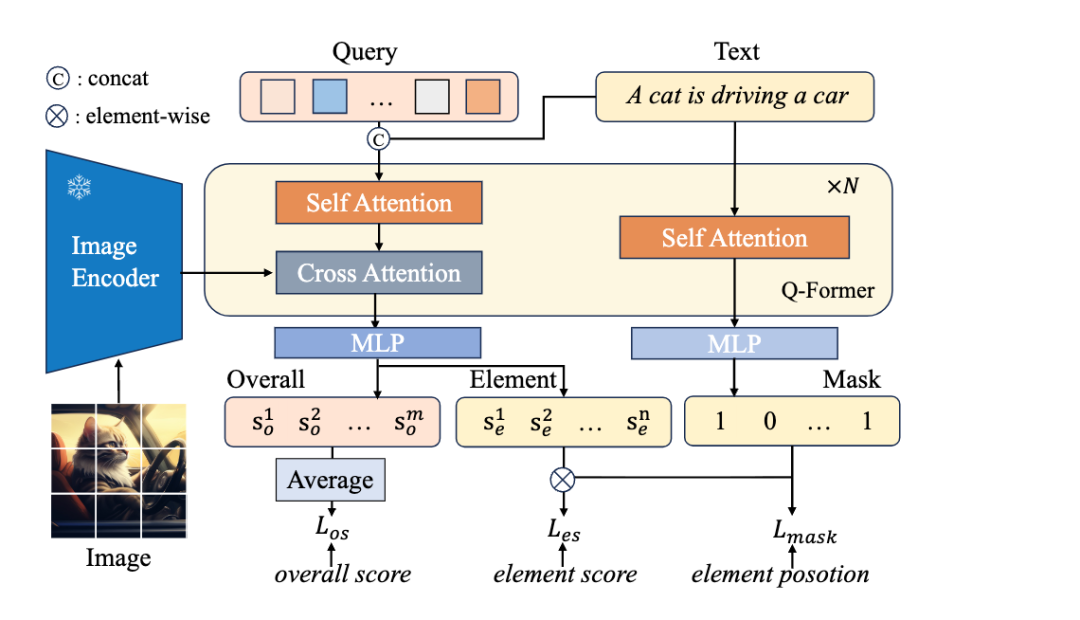

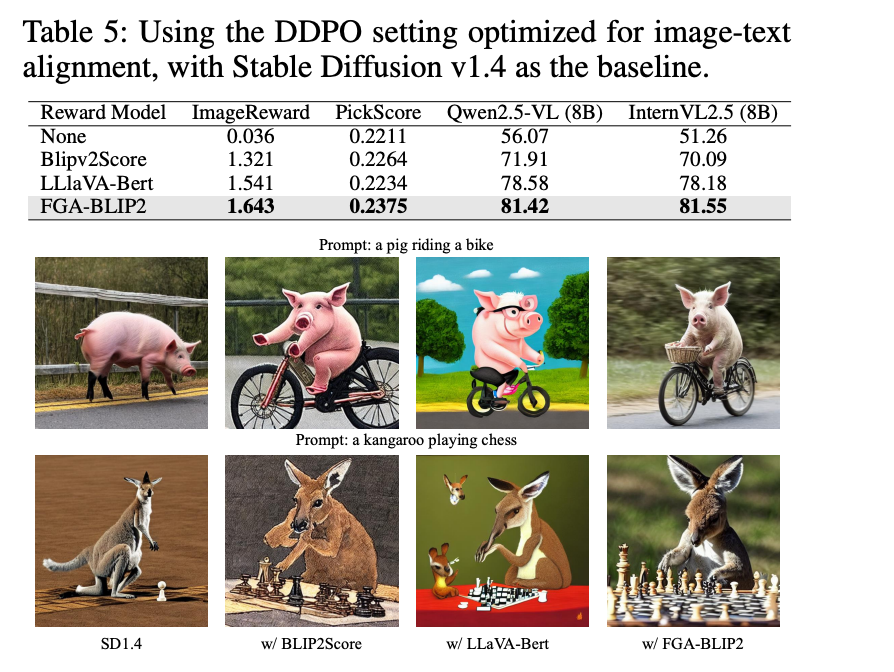

FGA-BLIP2 — Efficient Image–Text Alignment Scoring

FGA-BLIP2 is an end-to-end fine-grained alignment scoring model built on BLIP-2.

Highlights

- Learns alignment scores directly from image–text pairs.

- Evaluates both overall (prompt-level) alignment and element-level matches.

- A single inference pass outputs prompt-level and element-level scores simultaneously.
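
Producing prompt-level and element-level scores in one pass suggests a model with two outputs that are trained together. The sketch below shows one generic multi-task objective, assuming squared error on the overall score plus binary cross-entropy on per-element match labels, weighted by a hypothetical `lam`. It is an illustration of the idea, not the published FGA-BLIP2 loss.

```python
import math


def mse(pred: float, target: float) -> float:
    """Squared error for the prompt-level alignment score."""
    return (pred - target) ** 2


def bce(prob: float, label: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy for one element (label 1 = element present in the image)."""
    p = min(max(prob, eps), 1 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


def joint_loss(pred_score: float, human_score: float,
               elem_probs: list[float], elem_labels: list[int],
               lam: float = 1.0) -> float:
    """Prompt-level regression loss plus lam times the mean element-level BCE."""
    if len(elem_probs) != len(elem_labels):
        raise ValueError("one label per predicted element is required")
    elem_term = sum(bce(p, y) for p, y in zip(elem_probs, elem_labels))
    elem_term /= max(len(elem_probs), 1)  # average over elements; 0 if none
    return mse(pred_score, human_score) + lam * elem_term


print(joint_loss(4.0, 3.0, [0.5], [1]))  # squared error 1 plus BCE ln 2
```

Averaging the element term keeps prompts with many elements from dominating the gradient; `lam` then trades off overall-score accuracy against element-level accuracy.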

---

Example Scoring Outputs

Example 1

Prompt:

> A photograph of a lady practicing yoga in a quiet studio, full shot.

FGA-BLIP2 output:

```json
{
  "Result": 3.46,
  "EleScore": {
    "a lady": 0.62,
    "photograph": 0.88,
    "practicing": 0.57,
    "quiet studio": 0.75,
    "yoga": 0.73
  }
}
```

---

Example 2

Prompt:

> The word 'START', Five letters

FGA-BLIP2 output:

```json
{
  "Result": 4.15,
  "EleScore": {
    "START": 0.79
  }
}
```

---
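
Each output pairs an overall `Result` score with per-element `EleScore` values, which makes it easy to point at the part of a prompt that an image missed. This sketch assumes the output is valid JSON with exactly those two keys; the helper `weak_elements` and the 0.6 cut-off are hypothetical.

```python
import json

# The two outputs shown above, written as JSON strings.
EXAMPLE_1 = '''{"Result": 3.46, "EleScore": {"a lady": 0.62, "photograph": 0.88,
  "practicing": 0.57, "quiet studio": 0.75, "yoga": 0.73}}'''
EXAMPLE_2 = '{"Result": 4.15, "EleScore": {"START": 0.79}}'

# Hypothetical cut-off: elements scoring below it are reported as likely misses.
WEAK_THRESHOLD = 0.6


def weak_elements(raw: str, threshold: float = WEAK_THRESHOLD) -> list[tuple[str, float]]:
    """Parse one output and return low-scoring elements, weakest first."""
    out = json.loads(raw)
    weak = [(name, score) for name, score in out["EleScore"].items() if score < threshold]
    return sorted(weak, key=lambda pair: pair[1])


print(weak_elements(EXAMPLE_1))
print(weak_elements(EXAMPLE_2))
```

With the default cut-off, Example 1 yields only `practicing` (0.57) and Example 2 yields nothing.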

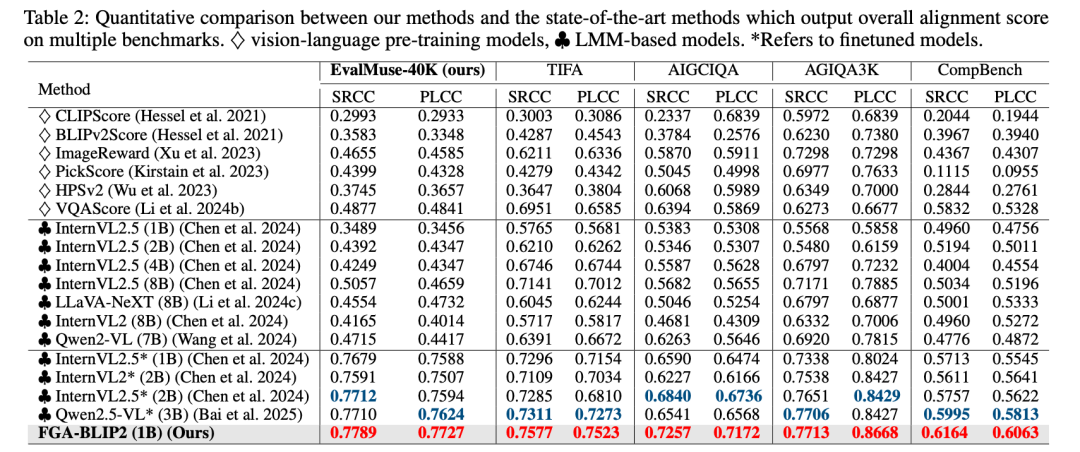

Performance Insights

- FGA-BLIP2 has only about 1B parameters.

- It achieves SOTA results across multiple T2I evaluation datasets.

- It outperforms fine-tuned large models such as Qwen 2.5.
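
The section does not name the metric behind the SOTA claim. Alignment scorers are commonly compared by how well their scores correlate with human ratings, for example with Spearman rank correlation (SRCC) and Pearson linear correlation (PLCC). Below is a dependency-free sketch of both, assuming one model score and one human score per image–text pair; the function names are illustrative.

```python
import math


def ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values equal to values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x: list[float], y: list[float]) -> float:
    """PLCC: linear correlation of two equal-length score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(vx * vy)


def srcc(model_scores: list[float], human_scores: list[float]) -> float:
    """SRCC: Pearson correlation of the two rank vectors."""
    return pearson(ranks(model_scores), ranks(human_scores))
```

PLCC rewards matching the human scores linearly, while SRCC only asks the model to order image–text pairs the way humans do, which is why both are usually reported together.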

---

Applications in Diffusion Model RL

Using FGA-BLIP2 as the reward model when fine-tuning generative models with reinforcement learning significantly improved generation quality.
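
The section does not describe the RL setup. As an illustration only, the sketch below turns an FGA-BLIP2-style output into a scalar reward and then normalizes rewards within a group of images sampled for the same prompt, as GRPO-style methods do. The reward blend, the `w_elem` weight, and the assumption that `Result` lies on a 1-5 scale are all hypothetical.

```python
from statistics import mean, pstdev


def alignment_reward(result: float, ele_scores: dict[str, float], w_elem: float = 0.5) -> float:
    """Hypothetical blend: overall score rescaled from 1-5 to 0-1, plus mean element score."""
    overall = (result - 1.0) / 4.0
    elem = mean(list(ele_scores.values())) if ele_scores else overall
    return (1.0 - w_elem) * overall + w_elem * elem


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize rewards of images sampled for the same prompt (zero mean, unit spread)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]  # eps avoids division by zero


example_1 = {"a lady": 0.62, "photograph": 0.88, "practicing": 0.57,
             "quiet studio": 0.75, "yoga": 0.73}
print(alignment_reward(3.46, example_1))
print(group_advantages([0.40, 0.66, 0.81]))  # toy rewards for three images of one prompt
```

Normalizing within a group means an image is rewarded for aligning better than the other images generated from the same prompt, which keeps update sizes comparable across easy and hard prompts.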

---

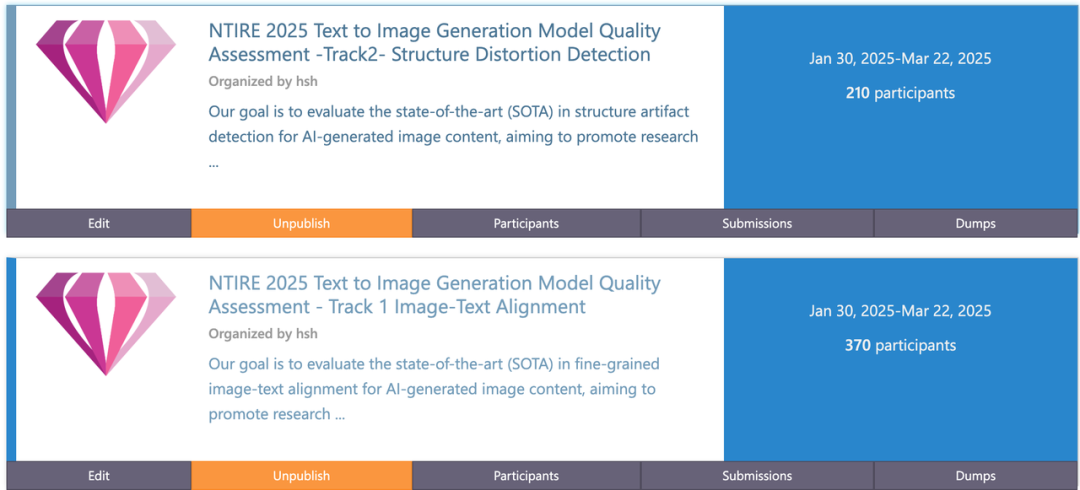

CVPR NTIRE Grand Challenge — Based on EvalMuse

To advance the evaluation of generated images and videos and to build a “gold standard,” Douyin Multimedia Quality Lab, the Doubao Large Model Team, and Nankai University co-organized an academic challenge at the 10th NTIRE workshop at CVPR.

Participation Stats:

- Total participants: 580

- Track 1: Image-text match evaluation — 370 participants

- Track 2: Structural issue detection — 210 participants

Top Teams:

- Track 1: WeChat Testing Center, Meituan, Ho Chi Minh City University of Science

- Track 2: Hunan University / Munich University, NetEase Games, Ant Group

---

Team Introduction

The Douyin Multimedia Quality Laboratory (ByteDance):

- Specializes in multimedia and AIGC evaluation technologies.

- Capabilities: objective and subjective quality evaluation for short and long videos, images, livestreaming, RTC (real-time communication), and audio.

- Supports Douyin, e-commerce, lifestyle services, advertising, CapCut, Tomato Novel, Hongguo Short Drama, and other businesses.

For collaboration inquiries, contact: litao.walker@bytedance.com


---


In conclusion, as AI multimedia generation accelerates, EvalMuse-40K and FGA-BLIP2 offer critical tools for measuring and improving the quality of generated content.
