# Enterprise AI Agents: From Pilot to Real Results

SaaS often struggles with the “last mile.” But when AI Agents are misused, problems can arise in *every* mile.

In **2025**, enterprise AI Agent adoption is reaching an **inflection point**: companies are moving from “testing” to “deployment” and shifting their focus from technical narratives to **business outcomes**.

According to a survey by **Plivo**, **over 60% of enterprises list AI Agents as a key strategic focus for the next 12 months**. Procurement is turning value-oriented, and **RaaS (Results-as-a-Service)** is beginning to overtake **SaaS (Software-as-a-Service)**.

To unlock AI Agents that truly *get work done*, four engineering trends are key:

- **MCP** – Model Context Protocol

- **GraphRAG** – Graph + Retrieval-Augmented Generation

- **AgentDevOps** – Development & Ops for reasoning-based systems

- **RaaS** – Results-as-a-Service

---

## 1. What Are Enterprise AI Agents Missing?

### Four Trends Offering Reusable Engineering Solutions

---

## Trend 1: MCP Enables AI Agent Extensibility

At the end of 2024, Anthropic introduced **MCP (Model Context Protocol)** — an open standard enabling LLMs to integrate securely with external data and tools.

**Think of MCP as a "USB-C port for AI"** — a universal connection layer for diverse data sources, reducing integration and ops costs.
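To make the "universal port" idea concrete, here is a minimal sketch of the message shape MCP uses for tool calls. MCP is built on JSON-RPC 2.0, and a tool invocation is a request with method `tools/call`. The tool name `lookup_customer`, its arguments, and the in-process dispatcher are assumptions made for illustration. This is not the official SDK, and a real server would also handle initialization, transport, and authentication.

```python
import json

# Hypothetical tool registry: maps tool names to plain Python callables.
TOOLS = {
    "lookup_customer": lambda args: {"customer_id": args["customer_id"], "tier": "gold"},
}

def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }

def handle_request(request):
    """Toy server-side dispatcher: route a tools/call request to a handler."""
    if request.get("method") != "tools/call":
        return {"jsonrpc": "2.0", "id": request.get("id"),
                "error": {"code": -32601, "message": "Method not found"}}
    params = request["params"]
    handler = TOOLS.get(params["name"])
    if handler is None:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32602, "message": "Unknown tool"}}
    result = handler(params["arguments"])
    # Tool results carry a list of content items; text is the simplest kind.
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": json.dumps(result)}]}}

request = build_tool_call(1, "lookup_customer", {"customer_id": "C-42"})
response = handle_request(request)
print(json.dumps(response, indent=2))
```

Because every tool is reached through the same request shape, adding a new data source means registering another handler rather than writing a new integration layer.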

**Highlights:**

- First supported by **Claude 3.5 Sonnet**

- Adopted by Block, Apollo, Microsoft, Google, AWS, OpenAI, and BAT (Baidu, Alibaba, Tencent)

- Community-built MCP servers: approaching **2,000** registered on GitHub and Hugging Face

**Practical challenges (especially in China):**

1. **Standards Fragmentation** – Proprietary variants create protocol incompatibilities.

2. **Security Gaps** – Inconsistent authentication; risk of cross-service data exposure.

3. **Operational Complexity** – Modules for identity verification and auditing are incomplete.

4. **Deployment Overhead** – Separate MCP Server setups complicate scaling.

> **Example Integration**:

> Platforms like [AiToEarn (official site)](https://aitoearn.ai/) aim to bridge enterprise adoption gaps by combining AI content generation, analytics, multi-platform publishing (Douyin, Kwai, WeChat, Bilibili, …), and model ranking ([AI model ranking](https://rank.aitoearn.ai)), illustrating interoperability and monetization in real workflows.

---

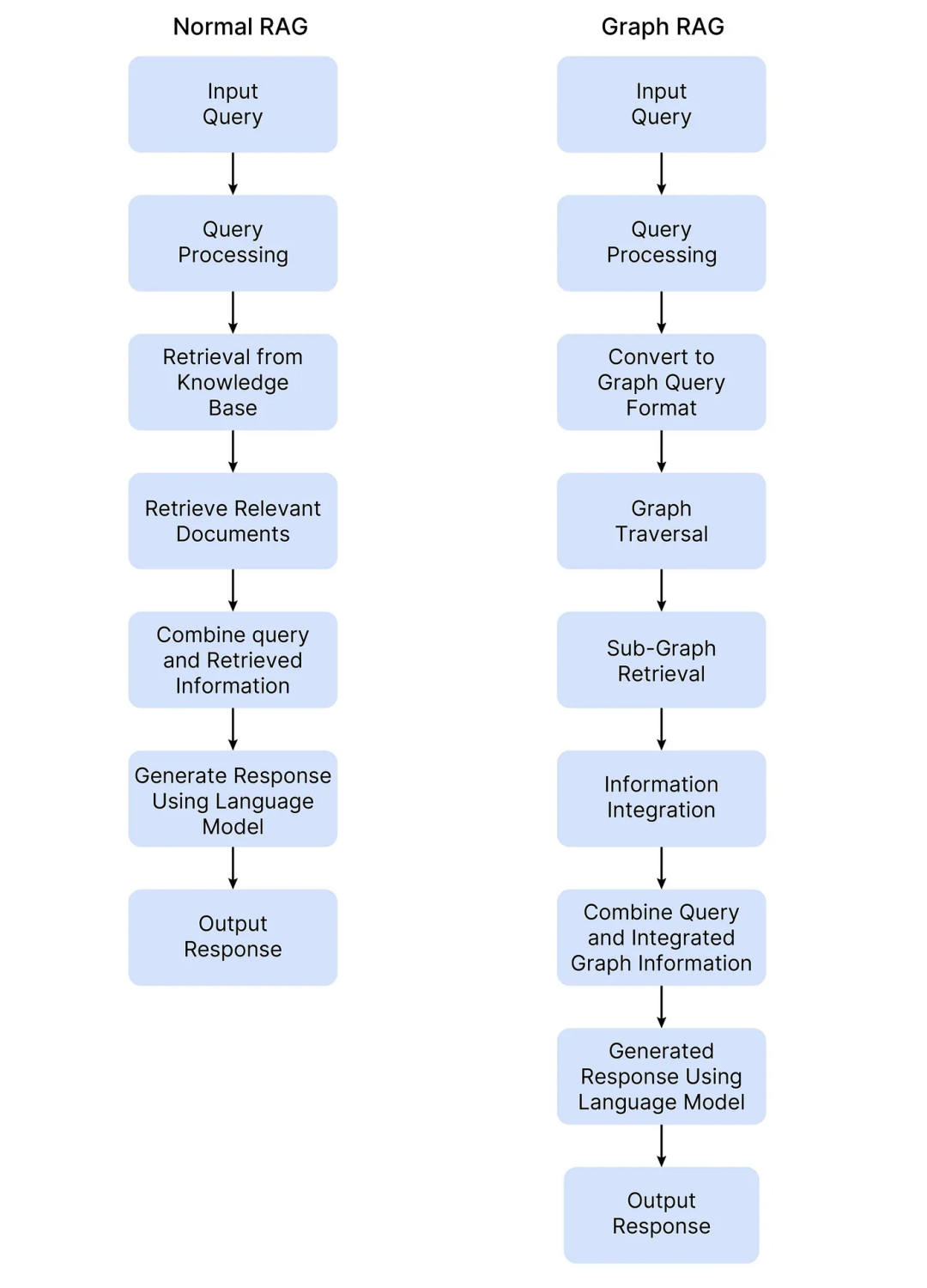

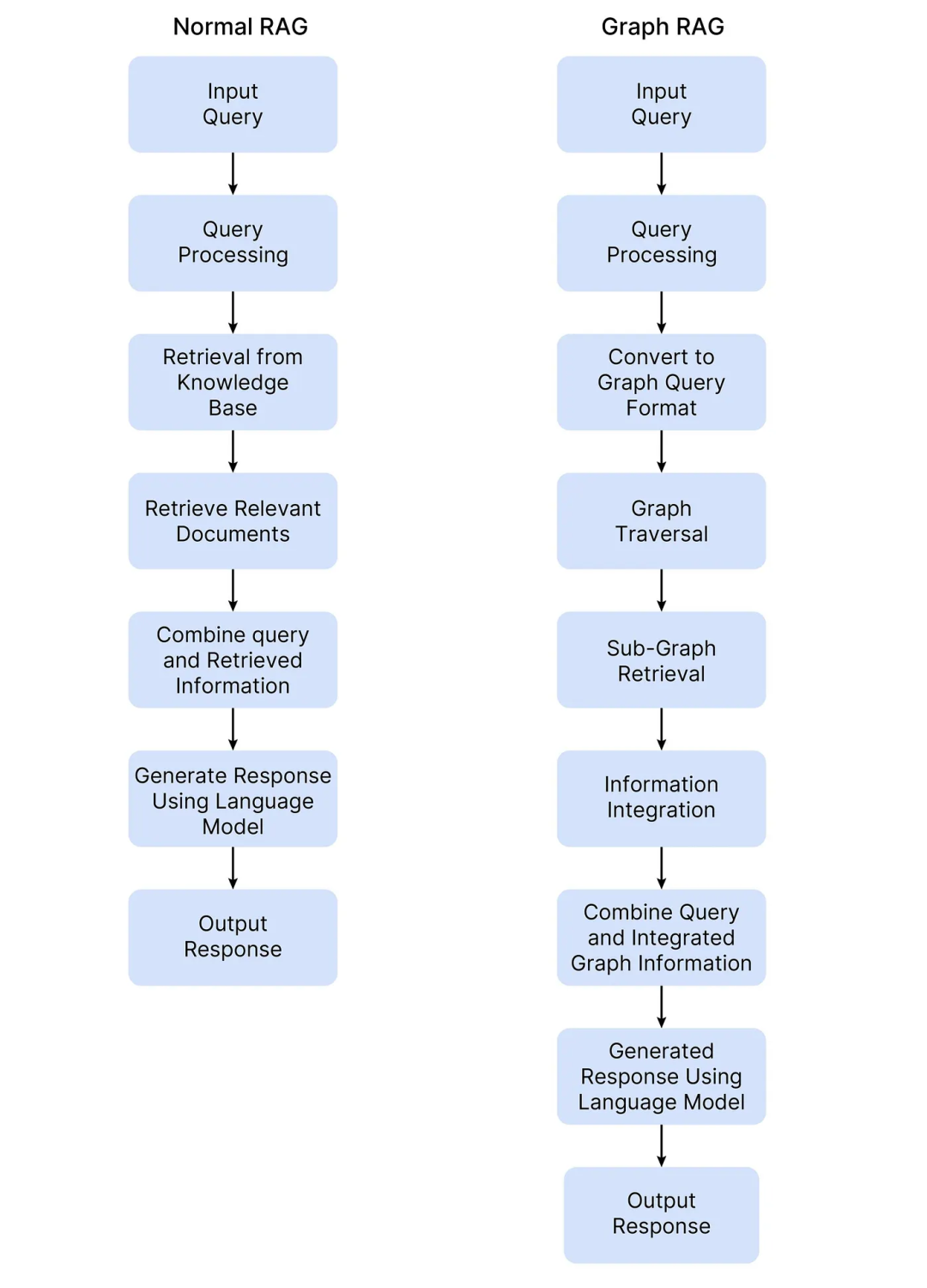

## Trend 2: GraphRAG Keeps Answers Consistent

**GraphRAG**, proposed by Microsoft, merges **knowledge graphs** with **RAG** to improve:

- **Multi-document consistency**

- **Cross-version coherence**

- **Logical relationship retrieval**

**Ideal use cases:**

- Long-text processing

- Multi-hop reasoning

- High-logic & explainability demands

In finance, insurance, healthcare, and law, GraphRAG is reported to boost accuracy by **20–50 percentage points** and cut token costs by **10–100×**.

**Challenges:**

1. **Knowledge Graph Construction** – Parsing complex PDFs, slide decks, and spreadsheets.

2. **Version Control** – Avoid outdated rules in answers.

3. **Global Recall Complexity** – Graph + vector recall increases costs if poorly scoped.
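The version-control challenge can be sketched as a filter applied to retrieved candidates before they reach the model. The example below assumes each rule chunk carries a version number and an effective date range. The `RuleVersion` fields and the sample rules are hypothetical and only illustrate the idea of keeping the version in force on a given date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class RuleVersion:
    rule_id: str
    version: int
    effective_from: date
    effective_to: Optional[date]  # None means still in force
    text: str

def active_rules(candidates, as_of):
    """Keep only the version of each rule that is in force on `as_of`."""
    best = {}
    for rv in candidates:
        in_force = rv.effective_from <= as_of and (
            rv.effective_to is None or as_of < rv.effective_to)
        if not in_force:
            continue
        current = best.get(rv.rule_id)
        if current is None or rv.version > current.version:
            best[rv.rule_id] = rv
    return sorted(best.values(), key=lambda rv: rv.rule_id)

# Hypothetical retrieved candidates, including a superseded version.
candidates = [
    RuleVersion("refund-policy", 1, date(2023, 1, 1), date(2024, 7, 1), "Refund within 7 days."),
    RuleVersion("refund-policy", 2, date(2024, 7, 1), None, "Refund within 14 days."),
    RuleVersion("kyc-check", 1, date(2022, 3, 1), None, "Verify ID before onboarding."),
]
for rv in active_rules(candidates, date(2025, 1, 15)):
    print(rv.rule_id, f"v{rv.version}", rv.text)
```

Filtering by date rather than simply taking the highest version number also lets the same index answer questions about what the rule was at an earlier point in time.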

**Best Practice:**

Focus on a **governable knowledge asset chain** — balance scale with governance.

Example: **Bairong Cloud** emphasizes:

- High-accuracy parsing

- Strict version governance

- Intent clarification before answering

> **AiToEarn's Role:**

> [AiToEarn](https://aitoearn.ai/) connects governed AI knowledge assets with publishing & monetization across major channels, turning consistent internal intelligence into revenue-generating external content.

---

### GraphRAG + U-Retrieval in Finance

**Pipeline Steps:**

1. Doc Chunking

2. Entity Extraction

3. Triple Linking

4. Relationship Linking

5. Tag Graphs

6. Response via U-Retrieval

*Source: [https://arxiv.org/pdf/2408.04187](https://arxiv.org/pdf/2408.04187)*
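The first five steps can be sketched with a toy graph builder. This is a simplified illustration, not the cited paper's method: entity extraction is a lookup against a hypothetical vocabulary (a real system would use an LLM or NER model), and the two linking steps are collapsed into co-occurrence edges between entities found in the same chunk.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical entity vocabulary standing in for model-based extraction.
KNOWN_ENTITIES = {"loan", "collateral", "interest rate", "credit score"}

def chunk(text):
    """Step 1: split a document into sentence-level chunks."""
    return [s.strip() for s in text.split(".") if s.strip()]

def extract_entities(chunk_text):
    """Step 2: naive entity extraction by vocabulary lookup."""
    lowered = chunk_text.lower()
    return sorted(e for e in KNOWN_ENTITIES if e in lowered)

def build_graph(chunks):
    """Steps 3-4 (simplified): link entities that co-occur in a chunk."""
    edges = defaultdict(set)  # (entity_a, entity_b) -> ids of supporting chunks
    for chunk_id, text in enumerate(chunks):
        for a, b in combinations(extract_entities(text), 2):
            edges[(a, b)].add(chunk_id)
    return dict(edges)

def tag_chunks(chunks):
    """Step 5: tag each chunk with its entities for later lookup."""
    return {chunk_id: extract_entities(text) for chunk_id, text in enumerate(chunks)}

doc = ("A loan requires collateral when the credit score is low. "
       "The interest rate on the loan depends on the credit score of the borrower.")
chunks = chunk(doc)
edges = build_graph(chunks)
tags = tag_chunks(chunks)
for pair, support in sorted(edges.items()):
    print(pair, "supported by chunks", sorted(support))
```

Keeping the supporting chunk ids on each edge is what later allows an answer to cite the passages a relationship came from.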

**Retrieval Flow:**

- **Top-down**: Tag query → traverse tag tree → select entities → generate initial answer.

- **Bottom-up**: Optimize answer using higher-level summaries → merge detail + big picture.
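The two-pass retrieval flow can be illustrated with a small tag tree. In this sketch, the top-down pass follows the child whose tags overlap most with the query tags, and the bottom-up pass folds each ancestor's summary into the leaf-level answer. The `TagNode` structure, the sample tree, and the string concatenation standing in for LLM-based refinement are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Set

@dataclass
class TagNode:
    tags: Set[str]
    summary: str
    children: List["TagNode"] = field(default_factory=list)

def top_down(root, query_tags):
    """Descend the tag tree, following the best-overlapping child at each level."""
    path = [root]
    node = root
    while node.children:
        node = max(node.children, key=lambda c: len(c.tags & query_tags))
        path.append(node)
    return path

def bottom_up(path):
    """Start from the leaf detail and fold in each ancestor's summary."""
    answer = path[-1].summary
    for node in path[-2::-1]:  # parent first, root last
        answer = f"{answer} Context: {node.summary}"
    return answer

# Hypothetical tag tree for a retail banking knowledge base.
root = TagNode({"lending", "deposits"}, "Retail banking policies.", [
    TagNode({"loan", "collateral", "interest"}, "Lending rules cover loans and collateral.", [
        TagNode({"collateral", "valuation"}, "Collateral is revalued every 12 months."),
        TagNode({"interest", "rate"}, "Interest rates are reviewed quarterly."),
    ]),
    TagNode({"savings", "account"}, "Deposit rules cover savings accounts."),
])

path = top_down(root, {"collateral", "loan"})
print(bottom_up(path))
```

The leaf supplies the specific detail while the ancestors supply scope, which is the "merge detail + big picture" step described above.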

---

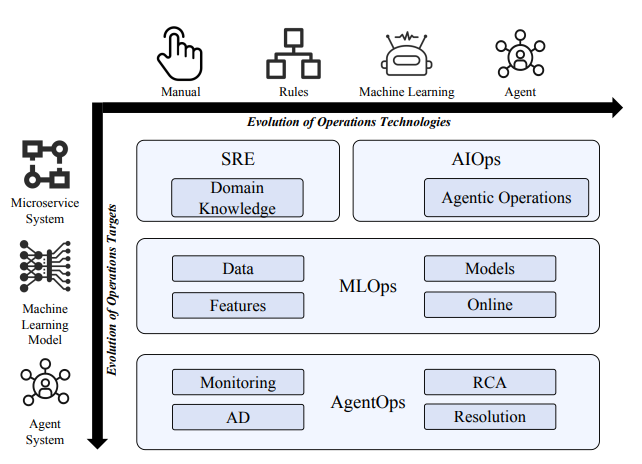

## Trend 3: AgentDevOps — Making AI Agents Controllable

AI Agents need **behavior quality assurance**, not just uptime. **AgentDevOps** extends DevOps for reasoning systems.

**Key Differences vs Traditional DevOps:**

1. **Goal**: From system availability → business outcome accountability.

2. **Observation**: From metrics monitoring → reasoning chain observability.

3. **Debugging**: From code debugging → behavior debugging (tracing the reasoning path).

4. **Optimization**: From static tuning → continuous data-driven self-optimization.

*Source: [https://arxiv.org/pdf/2508.02121v1](https://arxiv.org/pdf/2508.02121v1)*

> **Example**: [AiToEarn (official site)](https://aitoearn.ai/) aligns creative output with operational discipline:

> AI content generation + monitoring + ranking ([AI model ranking](https://rank.aitoearn.ai)), fitting AgentDevOps principles on observability and control.

**Critical Capabilities:**

- Replay

- A/B Testing

- Auditing

- Business-level SLO/SLA definition
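Replay and auditing both depend on recording each reasoning step as it happens. The sketch below assumes a very simple agent made of named step functions. The `Trace` class, the step names, and the two classifier versions are hypothetical. Replay here re-runs each recorded input in isolation, so it flags which steps behave differently but does not propagate a changed output to later steps.

```python
import json
from dataclasses import dataclass, asdict
from typing import Any, List

@dataclass
class Step:
    name: str
    input: Any
    output: Any

class Trace:
    """Append-only record of an agent run, usable for audit and replay."""
    def __init__(self):
        self.steps: List[Step] = []

    def record(self, name, fn, value):
        result = fn(value)
        self.steps.append(Step(name, value, result))
        return result

    def to_json(self):
        return json.dumps([asdict(s) for s in self.steps])

def replay(trace, steps_by_name):
    """Re-run recorded inputs through current step functions and
    return the names of steps whose output changed."""
    changed = []
    for step in trace.steps:
        if steps_by_name[step.name](step.input) != step.output:
            changed.append(step.name)
    return changed

# Hypothetical two-step agent: classify intent, then pick an action.
def classify_v1(text):
    return "refund" if "refund" in text.lower() else "other"

def classify_v2(text):
    return "refund" if "money back" in text.lower() else classify_v1(text)

def act(intent):
    return {"refund": "open_ticket", "other": "handoff"}[intent]

trace = Trace()
intent = trace.record("classify", classify_v1, "I want my money back")
action = trace.record("act", act, intent)
print(trace.to_json())
print(replay(trace, {"classify": classify_v2, "act": act}))
```

The same recorded traces can serve as the fixed input set for A/B testing a candidate version against the one in production.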

**China-specific Challenges:**

- Multi-deployment trace capture

- Immature evaluation frameworks

- Incomplete audit logging

- Unclear business metric standards

**Bairong Cloud's Approach:**

1. Full-process engineering

2. Scenario-based performance evaluation

3. Semi-supervised adaptive optimization

4. RL-based online iteration

---

## Trend 4: RaaS — AI Agents Speaking KPI

**RaaS (Results-as-a-Service)** shifts payment models from *software access* to *measurable outcomes*.

Examples:

- **Simple.ai**: Charges per improved satisfaction score.

- **Freightify**: Charges for transport cost savings.

- **Salesforce Agentforce**: $2 per effective AI conversation.

**Challenges:**

- Aligning KPI-based metrics with finance

- Multi-metric evaluation across roles/scenarios

- Transitioning from seat-based billing to result-based SLA

**Best Practice:**

Translate “results” into **SLA items** with numeric targets, such as:

- Connection rate

- Valid conversation rounds

- Conversion volumes

- False positive rates
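One way to turn these SLA items into something finance can verify is to encode each item with a numeric target and a direction, then derive the invoice from measured values. The targets, the per-conversion price, and the penalty rule below are invented for illustration and do not reflect any vendor's actual contract terms.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SlaItem:
    name: str
    target: float
    higher_is_better: bool = True

    def met(self, actual):
        return actual >= self.target if self.higher_is_better else actual <= self.target

# Hypothetical targets for the four SLA items listed above.
SLA = [
    SlaItem("connection_rate", 0.60),
    SlaItem("valid_rounds_per_call", 3.0),
    SlaItem("conversions", 100),
    SlaItem("false_positive_rate", 0.05, higher_is_better=False),
]

def evaluate(sla, actuals):
    """Return {item name: whether the measured value meets its target}."""
    return {item.name: item.met(actuals[item.name]) for item in sla}

def invoice(sla, actuals, price_per_conversion, penalty_per_miss=0.10):
    """Bill per conversion, discounted by a fixed fraction per missed SLA item."""
    misses = sum(1 for ok in evaluate(sla, actuals).values() if not ok)
    discount = min(1.0, misses * penalty_per_miss)
    return round(actuals["conversions"] * price_per_conversion * (1 - discount), 2)

actuals = {"connection_rate": 0.64, "valid_rounds_per_call": 3.4,
           "conversions": 120, "false_positive_rate": 0.08}
print(evaluate(SLA, actuals))
print(invoice(SLA, actuals, price_per_conversion=50.0))
```

In this run three items pass and the false positive rate misses its target, so the invoice is 120 conversions at 50.0 each with a 10% discount.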

> **AiToEarn’s Contribution:**

> Real-time analytics + multi-platform publishing show how output can be measured and monetized, aligning with KPI-driven RaaS models.

---

## 2. What Kind of AI Agent Can Truly “Do the Job”?

### Real Scenarios:

#### Finance — Large-Scale Outreach

- Parse calls in depth

- Detect intent

- Generate dialogue & summaries

- Match products intelligently

- Personalize strategies

**Example**: **BR-LLM-Speech**

- Active multi-modal modeling

- <200 ms latency

- Sustains 100+ rounds

**Technical Bottlenecks:**

1. Multi-stage ASR → LLM → TTS pipeline latency

2. Parallel model scheduling/resource management

3. Frame-level stability requirements

4. Multimodal scheduling pressure
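The first bottleneck is easiest to see as latency arithmetic. The sketch below compares a fully sequential ASR → LLM → TTS pipeline with a streaming one in which each stage starts as soon as the previous stage emits its first chunk. The per-stage timings are illustrative placeholders, not measurements of BR-LLM-Speech or any other system, and the streaming model ignores scheduling overhead.

```python
BUDGET_MS = 200  # latency target quoted above

def sequential_latency(stages):
    """Each stage waits for the previous one to finish completely."""
    return sum(full for _, full in stages.values())

def streaming_first_audio(stages):
    """Each stage starts once the previous one emits its first chunk."""
    return sum(first for first, _ in stages.values())

# Illustrative numbers only: (ms to first chunk, ms to full output) per stage.
stages = {"asr": (40, 150), "llm": (80, 400), "tts": (50, 300)}

seq = sequential_latency(stages)
stream = streaming_first_audio(stages)
print(f"sequential: {seq} ms, within budget: {seq <= BUDGET_MS}")
print(f"streaming:  {stream} ms, within budget: {stream <= BUDGET_MS}")
```

Under these assumed timings only the streaming arrangement fits the budget, which is why overlapping the stages matters more than speeding up any single one.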

#### Recruitment / HR

- Automated screening & scheduling

- Pre-screening support for recruiters

- Accurate, consistent answers on job details

- Training-free adaptive prompt optimization

---

## 3. Pre-deployment Checklist for Enterprise AI Agents

**Trend-aligned Checks:**

1. **Connection Protocol Layer** — Seamless, secure integration with internal/external systems.

2. **Knowledge Consistency Layer** — Coverage + version control for key documents/rules.

3. **Observation & Governance Layer** — Full execution traceability + anomaly detection.

4. **Financial Alignment Layer** — Clear, verifiable SLA items tied to business processes.
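The four layers can be turned into an automated release gate. The sketch below is one possible encoding: the individual check names under each layer are hypothetical examples, and in practice each would be backed by a real probe or review sign-off rather than a hand-set boolean.

```python
# Hypothetical checks per layer; names are illustrative, not a standard.
CHECKLIST = {
    "connection_protocol": ["auth_configured", "tool_schemas_validated"],
    "knowledge_consistency": ["key_docs_covered", "versions_pinned"],
    "observation_governance": ["tracing_enabled", "anomaly_alerts_enabled"],
    "financial_alignment": ["sla_items_defined", "metrics_verifiable"],
}

def failing_layers(checklist, status):
    """Return {layer: [missing checks]} for every layer that is not fully green."""
    report = {}
    for layer, checks in checklist.items():
        missing = [c for c in checks if not status.get(c, False)]
        if missing:
            report[layer] = missing
    return report

# Example status: everything passes except version pinning.
status = {check: True for checks in CHECKLIST.values() for check in checks}
status["versions_pinned"] = False

report = failing_layers(CHECKLIST, status)
print("ready to deploy" if not report else f"blocked: {report}")
```

Treating unknown checks as failed (`status.get(c, False)`) keeps the gate conservative when a new check is added before its probe exists.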

---

## 4. Conclusion — Toward Role-Specific Experts

The path forward is a transition from general-purpose AI Agents to **role experts**, which depends on:

- **Data engineering pipelines** for domain-specific excellence

- **Scenario refinement** for nuanced interaction capability

When role-specific AI Agents can be replicated, governance-aligned, and KPI-measured, **large-scale deployment becomes viable**.

> **Final Note:**

> Open ecosystems like [AiToEarn (official site)](https://aitoearn.ai/) show how AI creation, publishing, analytics, and ranking across Douyin, Kwai, WeChat, Bilibili, Rednote, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X can amplify both AI Agents and human creators, enabling scalability and measurable impact.

---