EP185: Docker and Kubernetes

📌 This Week’s System Design Refresher

Here’s what we’ve covered this week:

- Rate Limiter System Design: Token Bucket, Leaky Bucket, Scaling (Includes YouTube video)

- Docker vs Kubernetes

- Batch vs Stream Processing

- Modular Monoliths

- Process vs Thread

- AI Agents: Chaining Tools, Memory, and Reasoning

- Sponsor Us

---

🔹 Rate Limiter System Design: Token Bucket, Leaky Bucket, Scaling

---

🔹 Docker vs Kubernetes

Docker

A container platform that packages applications with their dependencies, so they run the same way on any machine with a compatible container runtime.

Workflow:

- Application code and dependencies are defined in a Dockerfile.

- Docker builds a portable container image.

- The image runs as a container via a container runtime on the host machine.

- Networking connects containers and external services.

Kubernetes

A container orchestration platform for running containers across multiple nodes in a cluster.

Key Functions:

- Deploy containers across different nodes.

- Handle scheduling, service discovery, and load balancing automatically.

- Monitor health and restart failed containers.

- Scale workloads up/down based on demand.
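The health-monitoring and scaling functions above both come down to comparing a desired state with the actual state and correcting the difference. The sketch below is a toy, in-memory illustration of that idea in Python. It does not use the Kubernetes API; `Cluster`, `reconcile`, and the `web` workload are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy in-memory cluster: maps a workload name to its running replica IDs."""
    running: dict = field(default_factory=dict)
    next_id: int = 0

    def start(self, workload: str) -> None:
        self.next_id += 1
        self.running.setdefault(workload, []).append(f"{workload}-{self.next_id}")

    def stop(self, workload: str) -> None:
        self.running[workload].pop()


def reconcile(cluster: Cluster, desired: dict) -> None:
    """Drive the actual replica count toward the desired count per workload."""
    for workload, want in desired.items():
        have = len(cluster.running.get(workload, []))
        for _ in range(want - have):   # too few replicas: start replacements
            cluster.start(workload)
        for _ in range(have - want):   # too many replicas: scale down
            cluster.stop(workload)


cluster = Cluster()
desired = {"web": 3}
reconcile(cluster, desired)        # initial deployment: 3 replicas
cluster.running["web"].pop(0)      # simulate a crashed container
reconcile(cluster, desired)        # self-healing: back to 3 replicas
desired["web"] = 1
reconcile(cluster, desired)        # demand dropped: scale down to 1
print(cluster.running["web"])      # ['web-2']
```

The same loop handles deployment, recovery, and scaling because it only ever looks at the gap between the two states.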

---

💡 Analogy: Docker = Packaging; Kubernetes = Orchestration.

---

Inside Kubernetes

- Dockerfile & Build → Container image.

- Kubernetes Runtime → Deploys containers to worker nodes.

- Control Plane (formerly called the master node) → Runs the API server, `etcd` store, controller manager, and scheduler.

- Worker Nodes → Containers run inside Pods; the `kubelet` manages them and `kube-proxy` handles Service networking.

- Outcome → Distributed, scalable, and self-healing apps.
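To make the scheduler's role in the list above more concrete, here is a deliberately simplified Python sketch that places Pods on the node with the most free capacity. This is an assumed toy policy for illustration only; the real scheduler filters and scores nodes on many more criteria. The `schedule` function and the node and Pod names are hypothetical.

```python
def schedule(pods: list, nodes: dict) -> dict:
    """Assign each pod to the node with the most free slots (toy policy).

    `nodes` maps a node name to the number of pods it can still accept.
    """
    free = dict(nodes)  # copy so the caller's capacities are not mutated
    placement = {}
    for pod in pods:
        node = max(free, key=free.get)  # node with the most free slots
        if free[node] == 0:
            raise RuntimeError(f"no capacity left for {pod}")
        free[node] -= 1
        placement[pod] = node
    return placement


placement = schedule(["web-1", "web-2", "web-3"], {"node-a": 2, "node-b": 1})
print(placement)
# {'web-1': 'node-a', 'web-2': 'node-a', 'web-3': 'node-b'}
```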

---

Question: Have you used Docker or Kubernetes in your projects?

---

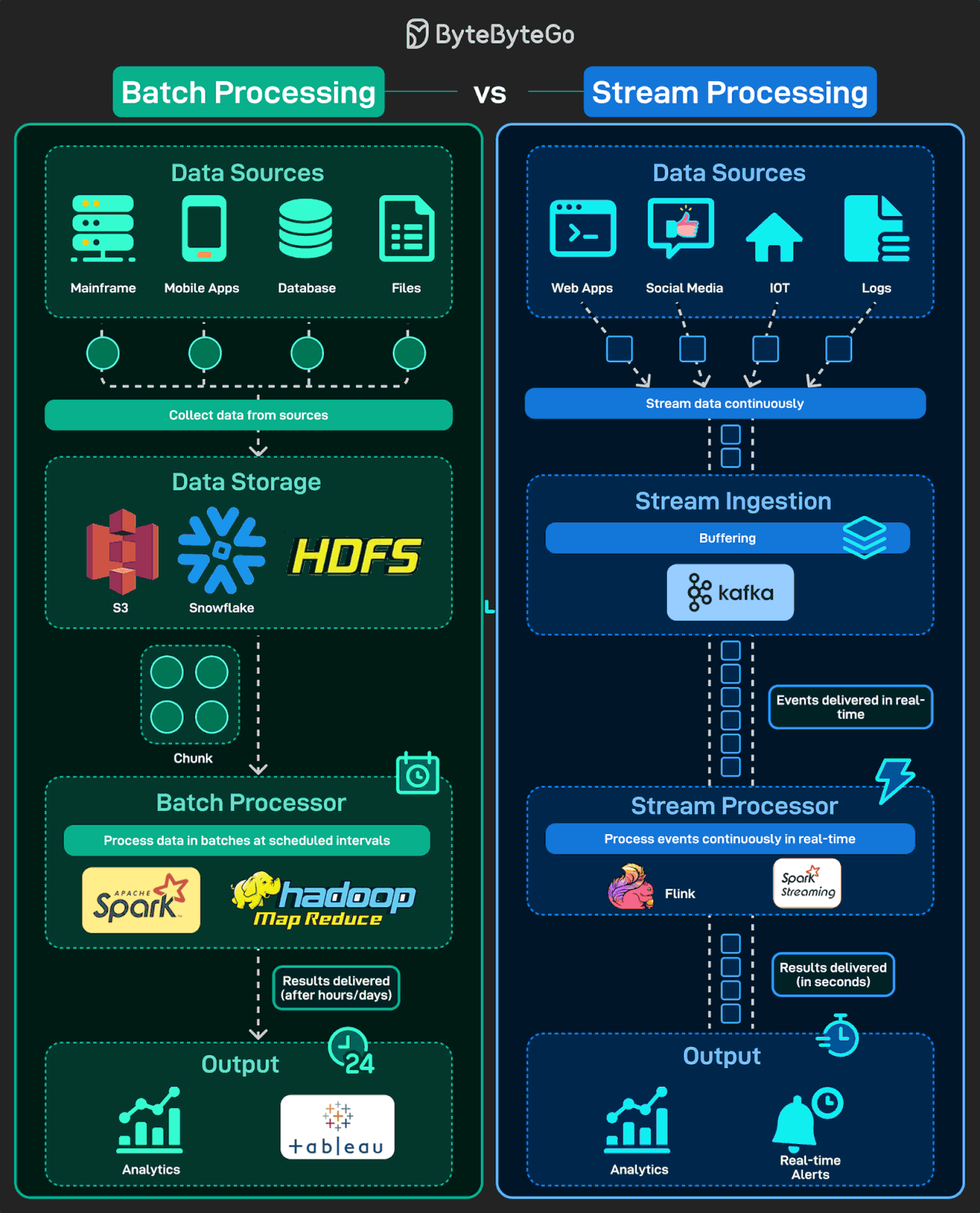

🔹 Batch vs Stream Processing

Data arrives continuously, but the processing strategy determines how fresh and how complete the results are.

Batch Processing

- Bulk data collected and processed periodically.

- Ideal for reports and historical analysis.

- Strengths: High throughput, accuracy.

Stream Processing

- Continuous, real-time event handling.

- Enables dashboards, alerts, and recommendations.

- Strengths: Low latency, instant insights.

Summary:

- Batch → Historical accuracy, large volumes.

- Stream → Real-time, low latency.
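The contrast can be sketched in a few lines of Python: the batch version waits for the full dataset and computes once, while the stream version emits an updated result as each event arrives. The function names and sample readings are made up for this example.

```python
from typing import Iterable, Iterator


def batch_average(readings: list) -> float:
    """Batch: collect everything first, then compute a single result."""
    return sum(readings) / len(readings)


def stream_average(readings: Iterable) -> Iterator[float]:
    """Stream: keep running state and emit a fresh result per event."""
    total, count = 0.0, 0
    for value in readings:
        total += value
        count += 1
        yield total / count


events = [10.0, 20.0, 30.0, 40.0]
print(batch_average(events))         # 25.0
print(list(stream_average(events)))  # [10.0, 15.0, 20.0, 25.0]
```

Both arrive at the same final answer; the stream version simply makes intermediate answers available earlier, at the cost of maintaining state between events.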

---

Question: Which is harder to scale — batch pipelines or real-time streams?

---

🔹 Modular Monoliths

A modular monolith divides a system into independent modules but keeps a single deployment unit, balancing simplicity with flexibility.

Core Principles:

- Modules are independent.

- Each serves specific functionality.

- Interfaces are well-defined.
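As a rough sketch of these principles, the Python example below keeps two modules in one process but lets them talk only through a declared interface. `InventoryAPI`, `InventoryModule`, and `OrderModule` are hypothetical names invented for illustration.

```python
from typing import Protocol


class InventoryAPI(Protocol):
    """The well-defined interface other modules are allowed to depend on."""
    def reserve(self, sku: str, qty: int) -> bool: ...


class InventoryModule:
    """Owns stock data; nothing outside this module touches `_stock`."""
    def __init__(self, stock: dict) -> None:
        self._stock = dict(stock)

    def reserve(self, sku: str, qty: int) -> bool:
        if self._stock.get(sku, 0) < qty:
            return False
        self._stock[sku] -= qty
        return True


class OrderModule:
    """Depends on the interface, not on the inventory implementation."""
    def __init__(self, inventory: InventoryAPI) -> None:
        self._inventory = inventory

    def place_order(self, sku: str, qty: int) -> str:
        return "confirmed" if self._inventory.reserve(sku, qty) else "rejected"


# Single deployment unit: both modules are wired together in one process.
inventory = InventoryModule({"book": 2})
orders = OrderModule(inventory)
print(orders.place_order("book", 2))  # confirmed
print(orders.place_order("book", 1))  # rejected
```

Because `OrderModule` only knows the interface, the inventory module could later be moved behind a network call without changing the order logic.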

---

Comparison:

- Monolith → Simpler, less flexible.

- Microservices → Flexible, complex.

- Modular Monolith → Best of both — logical separation without multi-deployment complexity.

---

🔹 Process vs Thread

Key Differences:

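- Independence: Processes are independent of each other; threads live inside a process.

- Memory: Each process has its own memory space; threads share the memory of their process.

- Creation Cost: Processes are heavier to create and terminate than threads.

- Context Switching: Slower between processes than between threads of the same process.

- Communication: Faster between threads (shared memory) than between processes (inter-process communication).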
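The memory and communication differences can be observed with a small Python experiment. A thread mutates the parent's variable directly, while a child process (started here with `subprocess` for portability) has its own memory and must send its result back over a pipe. The `counter` variable and the child snippet are illustrative assumptions.

```python
import subprocess
import sys
import threading

counter = {"value": 0}


def increment() -> None:
    counter["value"] += 1


# Thread: runs inside this process and shares its memory.
worker = threading.Thread(target=increment)
worker.start()
worker.join()
after_thread = counter["value"]      # the thread's update is visible here

# Process: has its own memory, so it cannot see or change `counter`.
# The result has to travel back through a pipe (stdout) instead.
child_code = "counter = {'value': 0}; counter['value'] += 1; print(counter['value'])"
result = subprocess.run(
    [sys.executable, "-c", child_code],
    capture_output=True, text=True, check=True,
)
child_value = int(result.stdout)     # value computed in the child's memory
after_process = counter["value"]     # parent's counter is unchanged by the child

print(after_thread, child_value, after_process)  # 1 1 1
```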

---

🔹 AI Agents: Chaining Tools, Memory, and Reasoning

How They Work

- Reasoning → Agent plans steps based on goals.

- Tools → Executes tasks via APIs/programs (search, calculator, code interpreter).

- Memory → Stores outcomes for context, refinement, and learning.

Cycle:

Reason → Act → Record → Refine → Deliver.
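The cycle can be sketched as a small loop in Python. Everything here is a stand-in: `plan_next_step` is a hard-coded rule set playing the role of an LLM, `search` reads from a fake in-memory index, and the goal and data are invented for the example.

```python
from typing import Optional

FAKE_INDEX = {"apples per crate": "12"}  # pretend search results


def search(query: str) -> str:
    return FAKE_INDEX.get(query, "no result")


def calculator(expression: str) -> str:
    """Evaluate 'a op b' for +, -, * (kept tiny on purpose)."""
    a, op, b = expression.split()
    x, y = float(a), float(b)
    return str({"+": x + y, "-": x - y, "*": x * y}[op])


TOOLS = {"search": search, "calculator": calculator}


def plan_next_step(goal: str, memory: list) -> Optional[tuple]:
    """Stand-in for the reasoning model: choose the next tool call or stop."""
    if not memory:
        return ("search", "apples per crate")
    if len(memory) == 1:
        per_crate = memory[0][2]          # refine the plan using a stored result
        return ("calculator", f"{per_crate} * 3")
    return None                           # nothing left to do


def run_agent(goal: str, max_steps: int = 5) -> str:
    memory = []                                      # (tool, input, output) records
    for _ in range(max_steps):
        step = plan_next_step(goal, memory)          # Reason
        if step is None:
            return memory[-1][2]                     # Deliver
        tool, tool_input = step
        output = TOOLS[tool](tool_input)             # Act
        memory.append((tool, tool_input, output))    # Record
    raise RuntimeError("step limit reached without an answer")


print(run_agent("How many apples are in 3 crates?"))  # 36.0
```

The step limit is a design choice worth keeping in any real agent loop, since a planner that never returns "done" would otherwise run forever.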

---

Question: Have you worked with AI agents?

---

📣 Help Improve ByteByteGo

Take our 2-minute survey to shape future content.

---

📢 Sponsor Us

- Get your product in front of 1M+ tech professionals.

- Seen by engineering leaders & senior developers.

- Reserve now — spots fill ~4 weeks ahead.

- 📩 sponsorship@bytebytego.com
