EXO 1.0 Acceleration: NVIDIA DGX Spark + Apple Mac Studio Boost LLM Inference Performance by 4×

EXO Labs connected a 256 GB M3 Ultra Mac Studio to an NVIDIA DGX Spark and achieved a 2.8× performance boost when serving Llama‑3.1 8B (FP16) with an 8,192‑token prompt.

---

Understanding LLM Performance Stages

When running large language models (LLMs), serving a prompt involves two key execution phases:

1. Prefill Phase

Reads the incoming prompt and builds the KV cache for each transformer layer.

- Nature: Compute‑bound — each input token triggers heavy matrix multiplications across all layers to initialize the model’s internal state.

- Impact: Directly affects TTFT (time‑to‑first‑token).

2. Decode Phase

Generates the output one token at a time.

- Nature: Memory‑bandwidth bound. Each step involves far less arithmetic than prefill, but every new token requires reading the model weights and the full KV cache from memory.

- Impact: Directly affects TPS (tokens per second).
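To get a feel for how much state prefill produces and decode rereads, the KV cache size can be estimated from the model shape. This is a minimal sketch; the shape values (32 layers, 8 KV heads, head dimension 128) are the commonly published Llama‑3.1 8B configuration and are an assumption here, not figures from the EXO write‑up.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, num_tokens, bytes_per_value=2):
    """Estimate KV cache size in bytes for a decoder-only transformer."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_value


# Assumed Llama-3.1 8B shape with FP16 values (2 bytes each) and an 8,192-token prompt.
size = kv_cache_bytes(num_layers=32, num_kv_heads=8, head_dim=128, num_tokens=8192)
print(f"KV cache: {size / 2**30:.2f} GiB")  # KV cache: 1.00 GiB
```

Under these assumptions the cache for an 8,192‑token prompt is about 1 GiB, which is the data that has to exist before the first token can be generated.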

---

Hardware Roles and Bottleneck Optimization

DGX Spark

- Compute: ~100 TFLOPS

- Memory Bandwidth: 273 GB/s

- Strength: Well suited to the compute‑bound prefill phase.

Apple M3 Ultra

- Compute: ~26 TFLOPS

- Memory Bandwidth: 819 GB/s

- Strength: Well suited to the memory‑bandwidth‑bound decode phase.
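The figures above can be turned into a rough back‑of‑envelope comparison. The sketch below assumes about 2 FLOPs per parameter per prompt token for prefill and one full read of the FP16 weights per generated token for decode; it uses peak numbers, ignores attention cost and KV cache reads, and the `Device` class and function names are illustrative only. Real measurements will be lower than these bounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    flops: float      # peak compute, FLOP/s
    bandwidth: float  # peak memory bandwidth, bytes/s


def prefill_seconds(dev, params, prompt_tokens):
    # Rough rule: ~2 FLOPs per parameter per token (attention's quadratic term ignored).
    return 2 * params * prompt_tokens / dev.flops


def decode_tokens_per_second(dev, params, bytes_per_param=2):
    # Rough rule: each generated token streams all weights once (KV cache reads ignored).
    return dev.bandwidth / (params * bytes_per_param)


SPARK = Device("DGX Spark", flops=100e12, bandwidth=273e9)
M3_ULTRA = Device("M3 Ultra", flops=26e12, bandwidth=819e9)
PARAMS = 8e9    # Llama-3.1 8B, FP16 weights
PROMPT = 8192   # prompt length in tokens

for dev in (SPARK, M3_ULTRA):
    print(
        f"{dev.name}: prefill ~{prefill_seconds(dev, PARAMS, PROMPT):.2f} s, "
        f"decode up to ~{decode_tokens_per_second(dev, PARAMS):.1f} tok/s"
    )
# DGX Spark: prefill ~1.31 s, decode up to ~17.1 tok/s
# M3 Ultra: prefill ~5.04 s, decode up to ~51.2 tok/s
```

Even as a crude estimate, it shows the asymmetry: the Spark finishes prefill several times faster, while the M3 Ultra has a threefold bandwidth advantage for decode.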

---

EXO’s Hybrid Execution Strategy

EXO Labs’ architecture splits the workload:

- Prefill on DGX Spark
  - Runs compute‑heavy initialization.
  - Streams KV cache to the Mac via 10 Gb Ethernet.
  - Streams early layers immediately, while later layers are still processing.

- Decode on Mac Studio
  - Uses high memory bandwidth to accelerate token generation.
  - Outperforms Spark‑only execution in total latency.

Result: Faster inference and smoother token streaming by matching hardware strengths to phase demands.
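The benefit of streaming each layer's KV cache as soon as it is ready can be illustrated with a small timing model. This is a sketch under stated assumptions, not EXO's implementation: it assumes a 1 GiB KV cache split evenly over 32 layers, a fully utilized 10 Gb/s link, and a prefill time of about 1.31 s (2 FLOPs × 8e9 parameters × 8,192 tokens at ~100 TFLOPS). The function names are hypothetical.

```python
def pipelined_finish_time(compute_s, transfer_s):
    """Time until the last layer's KV cache has arrived when each layer is
    sent as soon as it is computed and the link handles one layer at a time."""
    compute_done = 0.0  # when the current layer finishes prefill
    link_free = 0.0     # when the link finishes its current transfer
    for c, t in zip(compute_s, transfer_s):
        compute_done += c
        start = max(compute_done, link_free)  # wait for both the data and the link
        link_free = start + t
    return link_free


def sequential_finish_time(compute_s, transfer_s):
    """Time if the whole prefill finishes before any transfer starts."""
    return sum(compute_s) + sum(transfer_s)


LAYERS = 32
compute = [1.31072 / LAYERS] * LAYERS              # assumed per-layer prefill time, s
transfer = [(2**30 / LAYERS) / (10e9 / 8)] * LAYERS  # per-layer KV bytes / link bytes per s

print(f"pipelined:  {pipelined_finish_time(compute, transfer):.2f} s")   # pipelined:  1.34 s
print(f"sequential: {sequential_finish_time(compute, transfer):.2f} s")  # sequential: 2.17 s
```

In this model, each layer's transfer takes less time than computing the next layer, so almost all of the network cost is hidden behind prefill and only the final layer's transfer remains exposed.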

---

Broader Implications

This setup shows that the two bottlenecks in LLM serving, compute during prefill and memory bandwidth during decode, can each be handled by different hardware. Mixed‑hardware configurations of this kind can deliver substantial performance gains in both research and production environments.

---

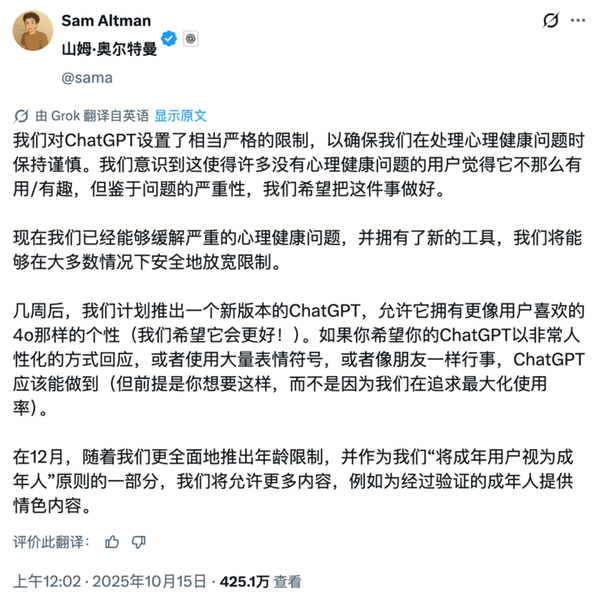

AI Monetization Connection

For creators and developers building AI‑based content workflows or managing multi‑platform publishing, performance insights complement tools like AiToEarn (official website):

- Open‑source, global AI monetization platform

- Publishes simultaneously to: Douyin, Kwai, WeChat, Bilibili, Rednote (Xiaohongshu), Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X (Twitter)

- Integrates AI generation, analytics, model ranking

- Designed to help creators efficiently monetize AI‑driven outputs

---

Key takeaway: Matching phase‑specific workloads to the right hardware — and leveraging tools for efficient publishing — can dramatically improve both AI inference speed and content monetization workflows.