Transformer Co-Creator Llion Jones “Defects”: I’m Done with Transformers! Warning: AI Research Is Becoming Too Narrow, and We Need New Architectures

The AI Field Is Better Funded Than Ever, Yet Research Is Narrowing

> “I’m really done with Transformers.” — Llion Jones, co‑author of the Transformer and co‑founder of Sakana AI

At the TED AI conference in San Francisco, Llion Jones, celebrated as a “founding father of the generative AI era,” made headlines by openly criticizing the current direction of AI research and declaring that he has moved beyond Transformers in search of the next major breakthrough.

It’s a striking statement, given that Transformers are the core technology behind nearly all mainstream large language models, including ChatGPT, Claude, Gemini, and Llama.

---

AI Research Is Becoming Narrower

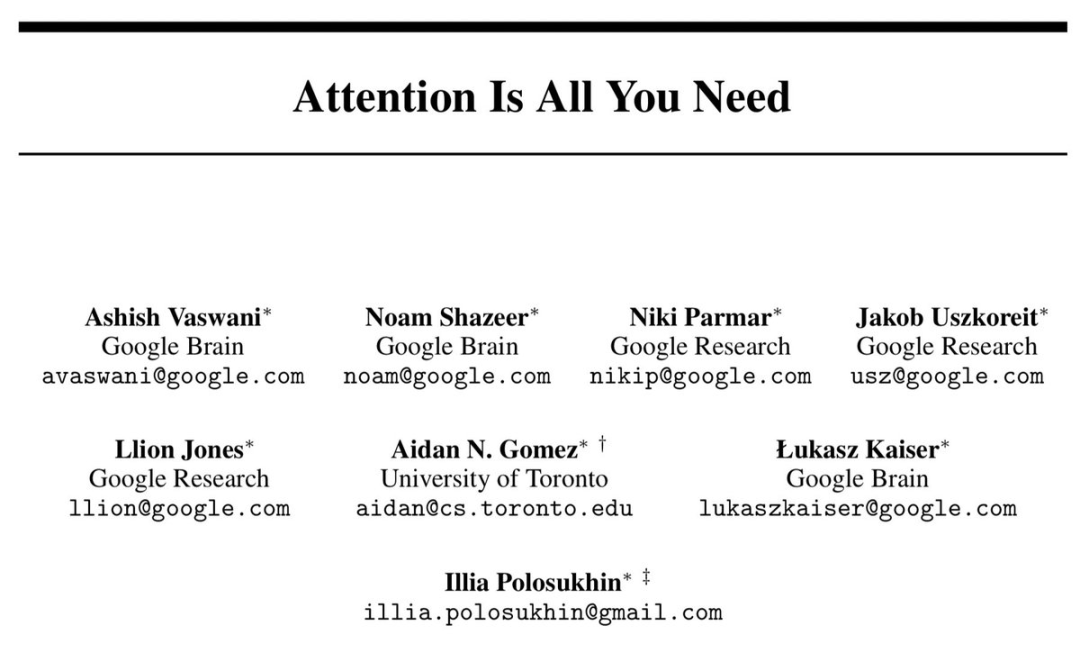

Jones was one of the authors of the seminal 2017 paper Attention Is All You Need, which introduced the Transformer, revolutionized AI architectures, and has been cited more than 100,000 times.

On stage at TED AI, Jones asserted:

> “Despite unprecedented funding and talent in AI today, research is becoming narrower and narrower.”

Driving Factors Behind the Narrow Focus

- Capital pressure: Investors demand short‑term returns.

- Competitive anxiety: Researchers focus on safe, publishable projects to avoid being “scooped.”

- Risk aversion: Tweaks to hyperparameters and bigger datasets dominate, rather than entirely new concepts.

The result is what Jones calls “onion peeling” research: incremental work that keeps cycling within the same framework instead of attempting radical innovation.

---

We May Be Missing the Next Breakthrough

Jones used the exploration vs. exploitation analogy:

Too much exploitation (iterating on what already works) without enough exploration traps a system in a local optimum: it stops improving and never discovers potentially better solutions elsewhere.

> “We’re so obsessed with the Transformer’s success,” Jones explained, “that we’ve stopped looking outward. The next revolutionary architecture could be right around the corner.”
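The exploration-versus-exploitation trade-off is easy to see in a toy search problem. The sketch below is our own illustration, not something from the talk: `hill_climb` is pure exploitation (always step to a better neighbour), while `hill_climb_with_restarts` adds exploration by climbing again from random starting points. The landscape values and both function names are hypothetical.

```python
import random


def hill_climb(landscape, start):
    """Pure exploitation: keep moving to the best neighbour while it improves."""
    i = start
    while True:
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < len(landscape)]
        if not neighbours:
            return i
        best = max(neighbours, key=lambda j: landscape[j])
        if landscape[best] <= landscape[i]:
            return i  # no better neighbour: a (possibly only local) optimum
        i = best


def hill_climb_with_restarts(landscape, restarts, seed=0):
    """Exploitation plus exploration: also climb from random starting points."""
    rng = random.Random(seed)
    best = hill_climb(landscape, 0)
    for _ in range(restarts):
        candidate = hill_climb(landscape, rng.randrange(len(landscape)))
        if landscape[candidate] > landscape[best]:
            best = candidate
    return best


# Hypothetical "landscape of ideas": the value of each position on a 1-D line.
landscape = [1, 3, 5, 4, 2, 6, 9, 12, 8]

print(hill_climb(landscape, 0))                 # 2 (value 5): stuck on a local peak
print(hill_climb_with_restarts(landscape, 20))  # index of the best peak found
```

Starting from index 0, pure hill climbing stops at index 2 (value 5) because both neighbours are worse, even though a far higher peak (value 12 at index 7) exists. Random restarts almost always reach that global peak; the price is extra evaluations spent on directions that may lead nowhere, which is exactly the cost Jones argues the field has stopped being willing to pay.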

---

How Transformers Were Born — Freedom Over KPIs

Jones recalled the invention process at Google:

> “It was a very free, natural process — no project requirements, no strict metrics. Just lunch discussions and whiteboard sketches.”

Key conditions at the time:

- No OKRs or deadlines

- No investor pressure

- Pure curiosity-driven exploration

Jones emphasized that even with high salaries, most modern research environments push toward low‑risk, high‑publishability outputs instead of true exploration.

---

Sakana AI — A Lab Beyond Transformers

As CTO of Sakana AI, Jones aims to recreate such unpressured environments:

- Nature-inspired research directions

- Minimal output pressure

- No push for competitive publishing

Brian Cheung’s principle serves as a guiding rule:

> “You should only work on research that, if you didn’t do it, no one else in the world would.”

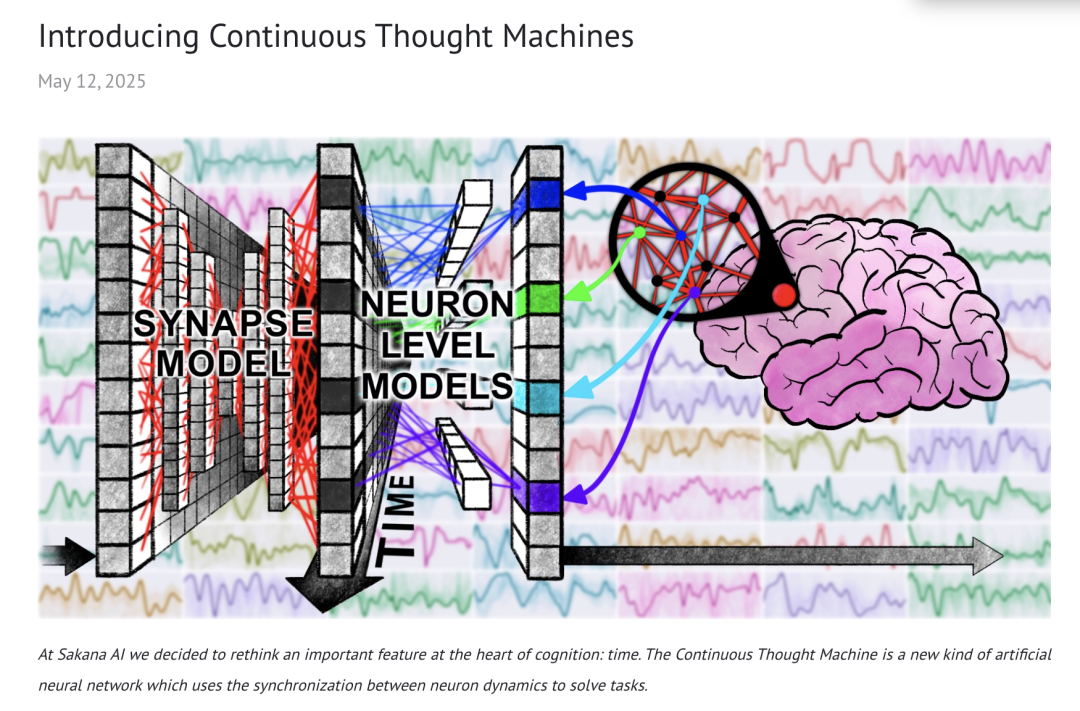

One example is the Continuous Thought Machine, an architecture inspired by neural synchrony in the brain. The idea had initially been dismissed elsewhere as “a waste of time,” but Jones gave it a week of free exploration, and the project was later featured at NeurIPS.

Jones believes freedom itself can be a stronger talent magnet than money.

> “Smart, ambitious people who truly love to explore will naturally seek out such places.”
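The Continuous Thought Machine is described here only as drawing on neural synchrony. As a rough intuition for what “synchrony” can mean numerically, the toy sketch below scores each pair of neurons by the inner product of their activation traces over a sequence of internal steps (“ticks”). This is a simplified illustration under that assumption, not Sakana AI’s architecture or code; the traces and the `synchrony_matrix` name are hypothetical.

```python
import math


def synchrony_matrix(traces):
    """Pairwise synchrony S[i][j] = sum over ticks t of z_i(t) * z_j(t).

    `traces` holds one activation history per neuron, all of the same length.
    """
    n = len(traces)
    return [
        [sum(a * b for a, b in zip(traces[i], traces[j])) for j in range(n)]
        for i in range(n)
    ]


# Hypothetical activation traces for three neurons over six internal ticks.
ticks = range(6)
in_phase_a = [math.sin(t) for t in ticks]
in_phase_b = [math.sin(t) for t in ticks]   # rises and falls in lockstep with a
anti_phase = [-math.sin(t) for t in ticks]  # mirror image of a

S = synchrony_matrix([in_phase_a, in_phase_b, anti_phase])
print(S[0][1] > 0, S[0][2] < 0)  # True True
```

Neurons that rise and fall together get a large positive score, while neurons moving in opposition get a negative one. In a model built around this idea, such pairwise scores could be passed to downstream layers as a representation in their own right, rather than reading out only the neurons’ instantaneous activations.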

---

This Is Not Competition — This Is Exploration

Jones urged the industry to:

- Share more

- Take bigger risks

- Focus on exploring together rather than competing

A Warning on Scaling Limits

Simply scaling Transformer models may have hit a ceiling. Some leading researchers now argue that architectural innovation, rather than scale alone, could be the key to stronger AI.

However, Jones cautions that breakthroughs won’t happen without changing incentive structures that currently revolve around:

- Money

- Competition

- Paper counts

- Rankings
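The “ceiling” on scaling can be made concrete with a toy power-law curve. The sketch assumes, purely for illustration, that loss falls as `irreducible + scale / N**alpha` in the parameter count `N`; the constants are made-up placeholders, not values fitted to any real model. Under that assumption, every tenfold increase in size buys a smaller improvement than the last, and the loss can never drop below the irreducible term.

```python
def loss(n_params, irreducible=2.0, scale=100.0, alpha=0.3):
    """Hypothetical scaling curve: loss = irreducible + scale / n_params**alpha.

    All constants are illustrative placeholders, not fitted values.
    """
    return irreducible + scale / n_params ** alpha


def gains_per_10x(sizes):
    """Loss reduction obtained at each step from one model size to the next."""
    losses = [loss(n) for n in sizes]
    return [before - after for before, after in zip(losses, losses[1:])]


sizes = [10**k for k in range(8, 13)]  # 100M to 1T parameters
for n, gain in zip(sizes[1:], gains_per_10x(sizes)):
    print(f"{n:.0e} params: loss {loss(n):.3f}, gain over 10x smaller model {gain:.3f}")
```

With `alpha = 0.3`, each tenfold step delivers roughly half the previous gain (10^-0.3 ≈ 0.5), so ever larger investments in scale buy ever smaller improvements. Whether real models follow such a curve, and with which constants, is an empirical question this sketch does not answer.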

---

Where the 8 Transformer Authors Are Now

All eight co-authors of the 2017 paper have since pursued dramatically different paths:

- Ashish Vaswani — Founded Essential AI

- Noam Shazeer — Founded Character.AI; later returned to Google to work on Gemini

- Aidan Gomez — CEO at Cohere, focusing on enterprise LLMs

- Jakob Uszkoreit — Founded Inceptive, applying AI to biotech

- Llion Jones — Left Transformer development; founded Sakana AI

- Łukasz Kaiser — Joined OpenAI to work on reasoning models

- Illia Polosukhin — Founded NEAR Protocol (blockchain)

- Niki Parmar — Maintains a low public profile

This diversity mirrors the Transformer’s impact: a single architecture, spawning multiple futures.

