First Release | Chen Tianqiao’s Shanda Team Launches Powerful Open-Source Memory System EverMemOS

EverMemOS Sets a New Standard in AI Long-Term Memory

Date: 2025-11-16 11:59 — Location: Hong Kong, China

EverMemOS outperforms previous systems on mainstream benchmarks, scoring 92.3% on LoCoMo and 82% on LongMemEval-S and setting a new state of the art (SOTA) in AI long-term memory.

---

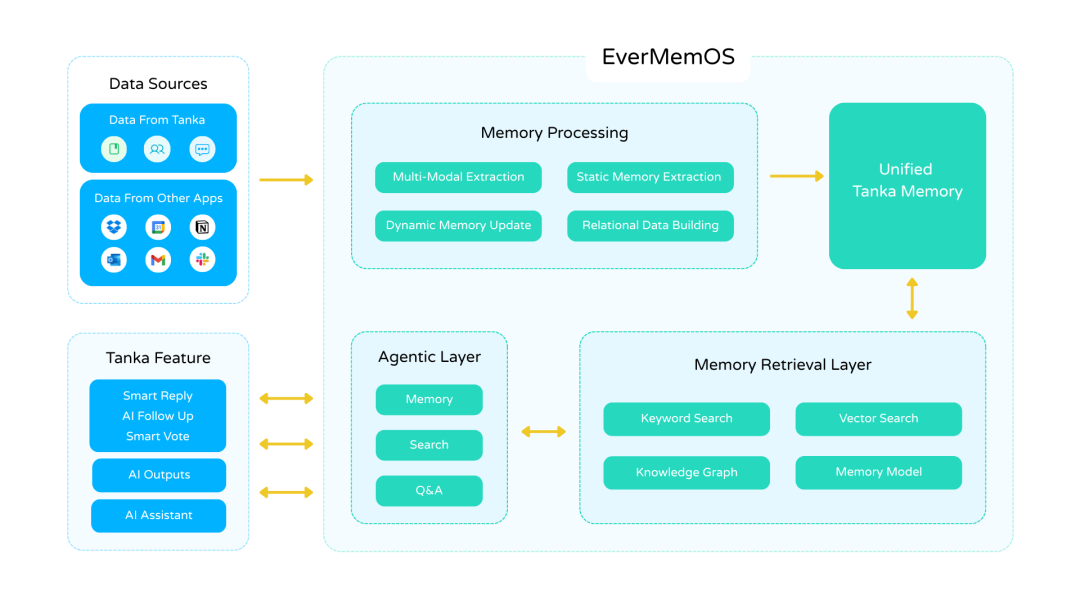

Introducing EverMemOS

The EverMind team has officially launched EverMemOS, a world-class long-term memory operating system for AI agents. Designed to serve as the foundational data infrastructure for next-generation intelligent agents, EverMemOS enables AI to have a lasting, coherent, and evolvable "soul".

Key Achievements

- Outstanding benchmark results: EverMemOS achieved 92.3% on LoCoMo and 82% on LongMemEval-S, surpassing all previous systems.

- Industry recognition: considered a breakthrough product in AI infrastructure.

Links:

- Website: http://everm.ai

- GitHub: https://github.com/EverMind-AI/EverMemOS/

---

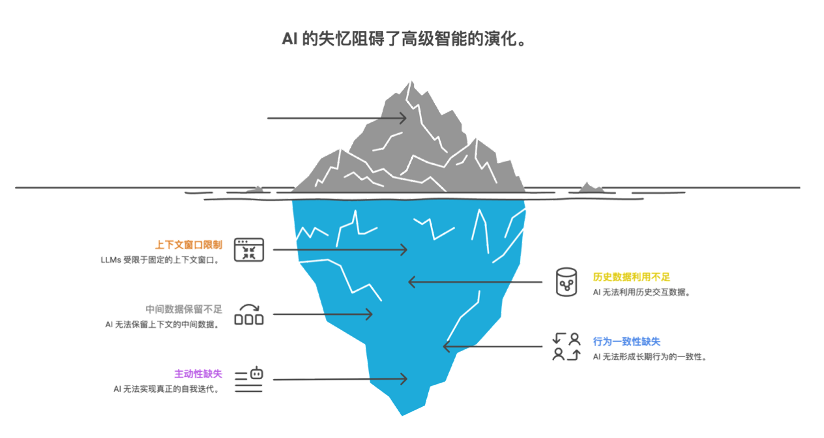

Memory Capacity — The Watershed for Next-Gen AI

The Problem

Large Language Models (LLMs) have fixed context windows, causing them to “forget” over extended tasks. This leads to:

- Broken memory and contradictory facts

- Lack of deep personalization

- Inability to retain intermediate context data
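
The forgetting effect can be illustrated with a minimal sketch. It assumes a chat history that is trimmed to a fixed token budget by keeping only the newest turns; the helper `build_context`, the word-count token estimate, and the sample dialogue are all hypothetical and are not taken from EverMemOS or any specific model.

```python
from collections import deque


def build_context(turns, max_tokens):
    """Keep the most recent turns that fit in a fixed token budget.

    Tokens are approximated by whitespace-separated words (an assumption
    made for illustration; real models use subword tokenizers).
    """
    kept = deque()
    used = 0
    for turn in reversed(turns):      # walk from newest to oldest
        cost = len(turn.split())
        if used + cost > max_tokens:
            break                     # older turns no longer fit
        kept.appendleft(turn)         # restore chronological order
        used += cost
    return list(kept)


turns = [
    "user: my name is Lin and I am allergic to peanuts",
    "assistant: noted, I will keep that in mind",
    "user: suggest a snack for my flight tomorrow",
]

# With a 16-word budget only the last two turns survive,
# so the allergy stated in the first turn is no longer visible.
context = build_context(turns, max_tokens=16)
```

In this toy run the model would answer the snack question without ever seeing the allergy, which is the kind of contradiction and lost personalization listed above.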

Why It Matters

Without robust long-term memory:

- Agents cannot maintain behavioral consistency

- Self-iteration is impossible

- Personalization, consistency, and initiative — key to advanced AI — remain out of reach

Industry Momentum

Products like Claude and ChatGPT have already made long-term memory a strategic feature, marking memory as the core competitive advantage in future AI applications. Yet, most existing solutions:

- Are fragmented or scenario-limited

- Fail to unify precision, speed, usability, and adaptability

---

Inspired by the Human Brain

Background

The EverMind team comes from Shanda Group, which has roots in technology innovation and investment. The EverMemOS design is modeled on the stages of biological memory:

- Sensory encoding

- Hippocampal indexing

- Cortical long-term storage

- Prefrontal cortex coordination

This human-brain-inspired approach aims to give AI continuity over time, enabling adaptation and long-term growth.

---

The Temporal Structure Paradigm

At the Tianqiao Institute for Brain Science’s AIAS 2025 Symposium, Chen Tianqiao outlined five pillars of discovery-based intelligence, highlighting long-term memory as the bridge between time and intelligence.

Key Insight:

Human brains operate in continuous, dynamic temporal structures, whereas AI typically works in instantaneous, static spatial structures.

EverMemOS is designed to connect time and intelligence by granting AI temporal continuity.

---

Breakthroughs in Scenario Coverage & Performance

- Scenario Coverage:

  - First memory system to fully support both 1-to-1 conversations and multi-user collaboration.

  - Already integrated into the AI-native product Tanka.

- Technical Performance:

  - Uses bio-inspired “engram” heuristic retrieval to achieve 92.3% on LoCoMo and 82% on LongMemEval-S, both SOTA results.

---

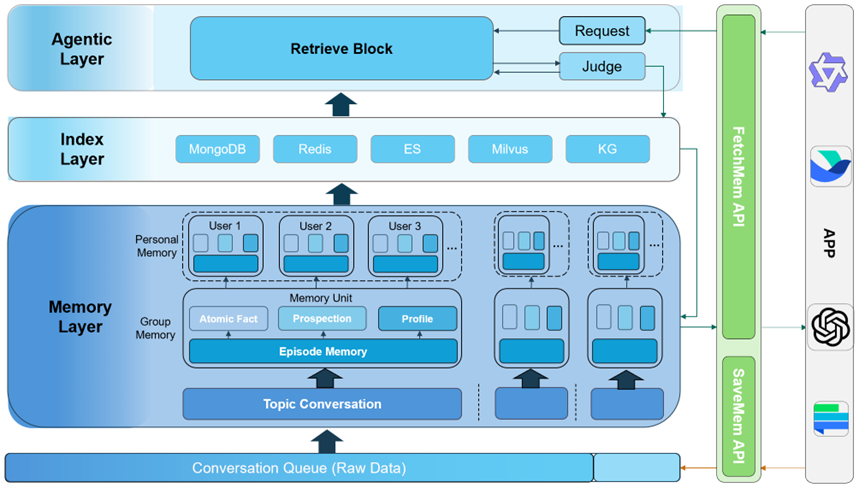

EverMemOS Four-Layer Architecture

Layers & Their Analogy to Brain Regions

- Agentic Layer — Task understanding & executive control (prefrontal cortex)

- Memory Layer — Long-term structured storage (cortical network)

- Index Layer — Fast association via embeddings & knowledge graphs (hippocampus)

- API/MCP Interface Layer — Links AI with external applications (sensory systems)
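
To make the division of responsibilities concrete, here is a minimal sketch of how four such layers could be wired together. Every class, method, and request field below is hypothetical and chosen for illustration only; it is not the EverMemOS API, and the index is a plain inverted word index standing in for embeddings and knowledge graphs.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryLayer:
    """Long-term structured storage (hypothetical stand-in)."""
    records: dict = field(default_factory=dict)

    def store(self, record_id, text):
        self.records[record_id] = text


@dataclass
class IndexLayer:
    """Fast association via an inverted word index (assumption:
    a real system would use embeddings or a knowledge graph)."""
    postings: dict = field(default_factory=dict)

    def add(self, record_id, text):
        for word in set(text.lower().split()):
            self.postings.setdefault(word, set()).add(record_id)

    def lookup(self, query):
        ids = set()
        for word in query.lower().split():
            ids |= self.postings.get(word, set())
        return sorted(ids)


class AgenticLayer:
    """Decides what to remember and what to recall for a task."""

    def __init__(self, memory, index):
        self.memory, self.index = memory, index
        self._next_id = 0

    def remember(self, text):
        record_id = self._next_id
        self._next_id += 1
        self.memory.store(record_id, text)
        self.index.add(record_id, text)
        return record_id

    def recall(self, query):
        return [self.memory.records[i] for i in self.index.lookup(query)]


class InterfaceLayer:
    """Entry point for external applications (stands in for API/MCP)."""

    def __init__(self):
        self.agent = AgenticLayer(MemoryLayer(), IndexLayer())

    def handle(self, request):
        if request["op"] == "remember":
            return {"id": self.agent.remember(request["text"])}
        if request["op"] == "recall":
            return {"memories": self.agent.recall(request["query"])}
        raise ValueError(f"unknown op: {request['op']}")


api = InterfaceLayer()
api.handle({"op": "remember", "text": "Lin is allergic to peanuts"})
api.handle({"op": "remember", "text": "Lin prefers window seats"})
result = api.handle({"op": "recall", "query": "peanuts"})
```

The point of the sketch is the direction of the calls: external requests enter through the interface, the agentic layer coordinates, and storage and indexing stay separate so either can be swapped out.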

---

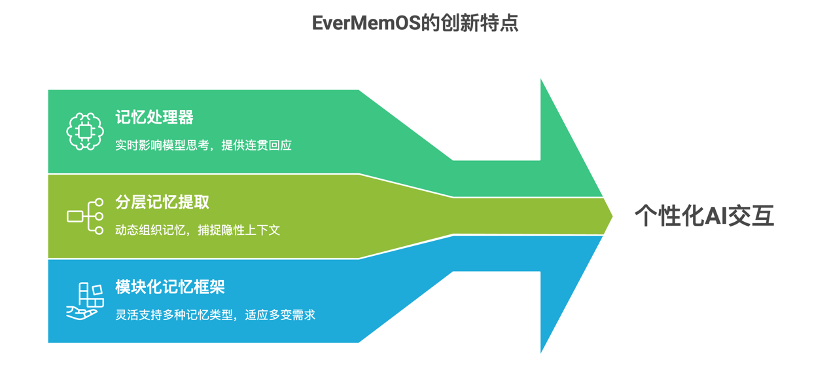

Three Core Features

1. From “Memory Database” to “Memory Processor”

EverMemOS actively applies retrieved memories to reasoning — ensuring every AI output reflects long-term user context.

2. Layered Memory Retrieval & Dynamic Organization

Memories are stored as episodic semantic units, linked dynamically to capture implicit context beyond keyword similarity.
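
As a rough illustration of retrieval that goes beyond keyword similarity, the sketch below stores episodes with explicit links and expands a keyword match along those links. The `Episode` and `EpisodeStore` names are hypothetical, and the links are supplied by hand here; how EverMemOS actually forms and weights its links is not described in this article.

```python
from dataclasses import dataclass, field


@dataclass
class Episode:
    """One episodic unit: a text snippet plus links to related episodes."""
    text: str
    links: set = field(default_factory=set)


class EpisodeStore:
    def __init__(self):
        self.episodes = []

    def add(self, text, related_to=()):
        """Store an episode and link it both ways to related ones."""
        new_id = len(self.episodes)
        self.episodes.append(Episode(text, set(related_to)))
        for other in related_to:
            self.episodes[other].links.add(new_id)
        return new_id

    def retrieve(self, query, hops=1):
        """Keyword match first, then expand along links for `hops` steps."""
        words = set(query.lower().split())
        hits = {i for i, ep in enumerate(self.episodes)
                if words & set(ep.text.lower().split())}
        frontier = set(hits)
        for _ in range(hops):
            # Neighbors of the current frontier that are not yet included.
            frontier = {n for i in frontier
                        for n in self.episodes[i].links} - hits
            hits |= frontier
        return [self.episodes[i].text for i in sorted(hits)]


store = EpisodeStore()
trip = store.add("Booked a flight to Osaka for March")
store.add("Needs a vegetarian meal on long trips", related_to=[trip])
store.add("Favorite editor is Vim")

keyword_only = store.retrieve("flight", hops=0)  # only the booking episode
with_links = store.retrieve("flight", hops=1)    # booking plus the linked meal note
```

The meal preference shares no word with the query "flight", yet it surfaces once links are followed, which is the kind of implicit context a pure keyword match would miss.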

3. Modular & Extensible Memory Framework

Supports varied memory needs:

- High-precision structured data for professional scenarios

- Emotional context & empathy for companion AI scenarios

---

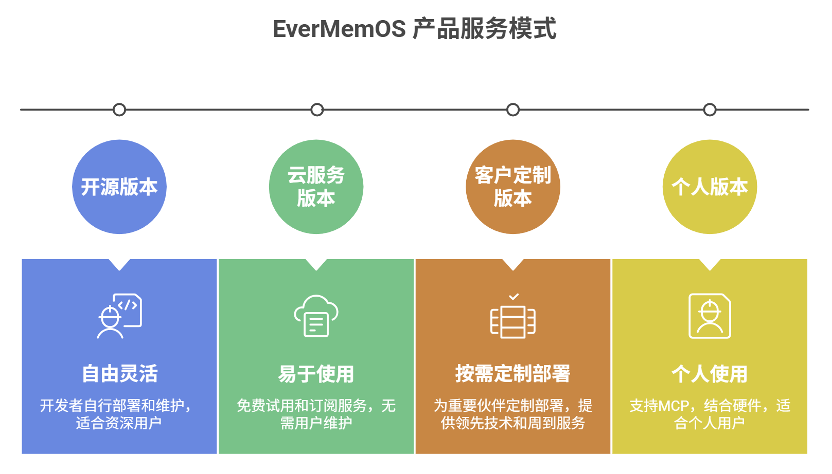

From Open Source to Cloud Services

EverMemOS is available as open source on GitHub:

https://github.com/EverMind-AI/EverMemOS/

A planned cloud service version will offer:

- Enterprise-grade support

- Data persistence

- Scalable deployment

Sign up for early access: http://everm.com

---

Mission Statement

> “We are confronting one of the most profound challenges in AI — giving machines memory and opening the door to higher-level general intelligence.

> This is not just a job, but a mission to shape the intelligent memory layer of the future.”

> — EverMind Team

---

Complementary Ecosystem — AiToEarn

Platforms like AiToEarn (official website) enable:

- AI content generation

- Cross-platform publishing (Douyin, Kwai, WeChat, Bilibili, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, X/Twitter)

- Analytics & AI model rankings

When paired with EverMemOS, such tools could revolutionize how intelligent agents create, interact, and monetize content.

---