From 0 to 1: Practical Innovations in Tmall AI Test Case Generation

Tmall AI-Enabled Testing Practice: Intelligent Test Case Generation

---

Introduction

This article details the Tmall Technology Team’s exploration and implementation of AI-powered intelligent test case generation, providing a step-by-step methodology and practical insights.

---

1. Background

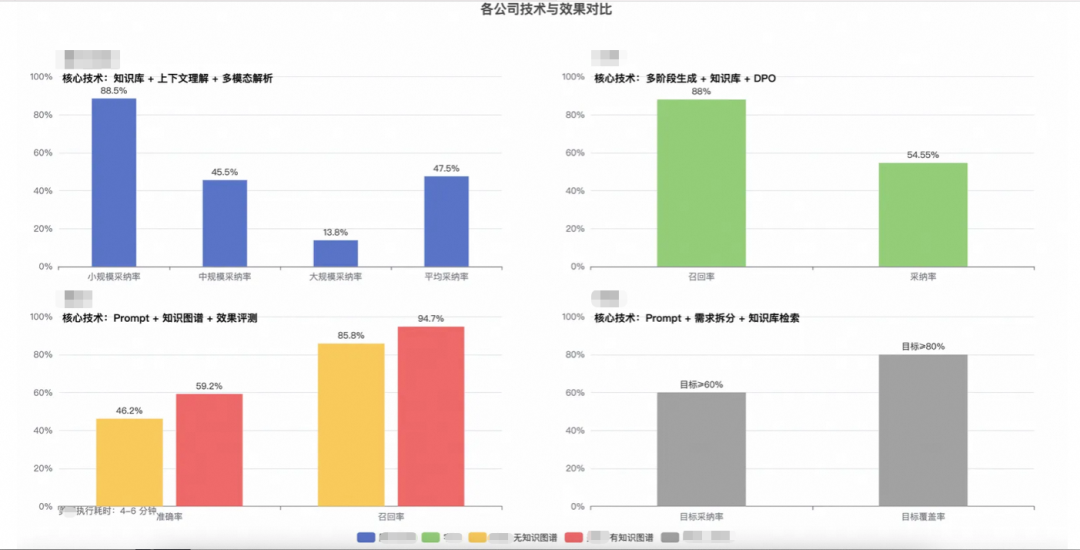

1.1 Industry Analysis & Insights

With large language models (LLMs) advancing rapidly, the testing industry is experimenting with AI-powered methodologies. Most industry solutions use a prompt + RAG (Retrieval-Augmented Generation) pattern to build intelligent agents for specific tasks like:

- Requirement analysis

- Test case generation

- Data construction

Example industry solutions for test case generation:

_Source: QECon Conference & external briefings_

Observations from current solutions:

- Most rely solely on prompt + RAG with no specialized fine-tuning.

- Differentiation occurs in _requirement parsing_, _test analysis_, and _knowledge base construction_.

- High dependency on standardized inputs like PRD (Product Requirement Document) files.

Tmall’s strategy: Create differentiated, industry-tailored approaches for test case generation while improving input standardization.

---

1.2 Tmall Industry Challenges

E-commerce’s rapid pace and rising quality demands place pressure on QA teams:

- Short release cycles and high staffing costs

- Traditional testing approaches hit bottlenecks on complex and edge-case scenarios

Pain points in case design:

- Low efficiency in manual writing

- Inconsistent requirement interpretation

- Weak organizational knowledge retention

- Heavy manual workload in repetitive scenarios

Additionally, Tmall’s diverse business domains require adaptability across five categories:

- Marketing solutions

- Shopping guide scenarios

- Transaction & settlement

- Cross-department collaboration

- Mid-/back-office systems

Core objective:

Use AI to intelligently generate complete, consistent test cases that match industry-wide and domain-specific characteristics.

---

2. Implementation Strategy

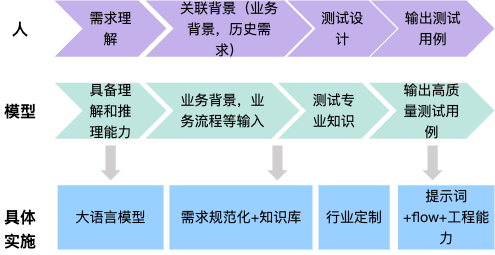

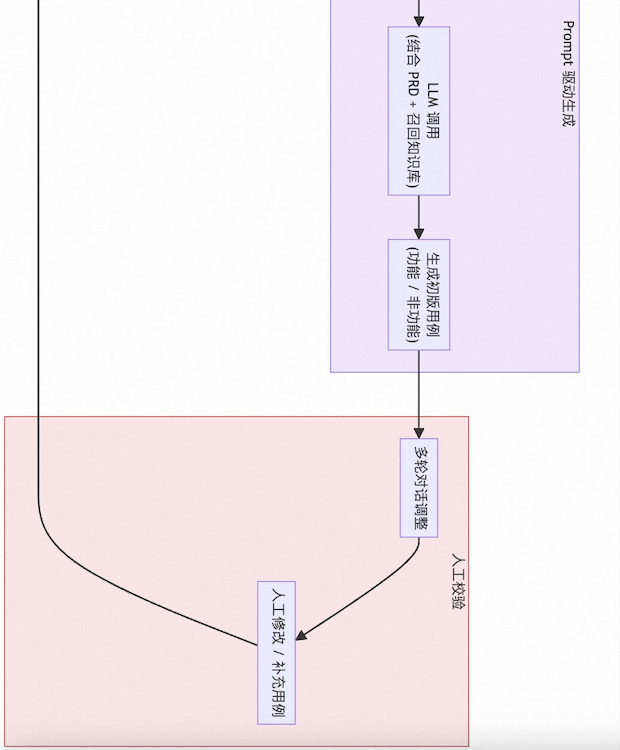

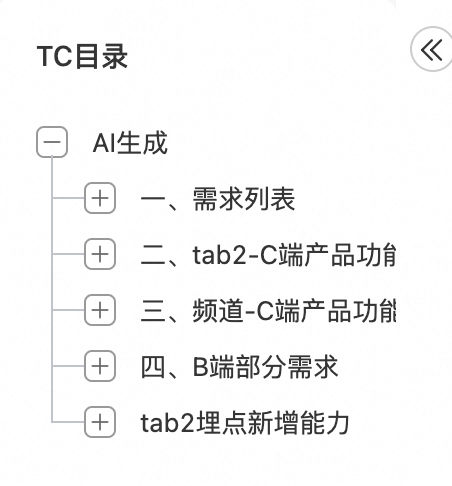

2.1 Test Case Generation Overview

QA workflow around requirements delivery:

- Requirement understanding

- Risk assessment

- Case design

- Case execution

- Defect tracking

- Integration & regression testing

- Release / Go-live

- Feedback tracking

Key statistic:

> 70% of QA time is spent on the stages from case design through regression testing.

To cut this time while maintaining quality, the team introduced LLM-based tools that assist with case design.

High-level approach:

---

2.2 Strategy Breakdown

Overall AI Generation Framework:

> Requirements Standardization + Prompt Engineering + Knowledge Base RAG + Platform Integration + AI Agent Enablement

---

Step 1 — Prompt Engineering & Process Optimization

- Refine prompts with business-specific context

- Guide LLMs to produce consistent, high-quality test cases

- Create an end-to-end generation flow integrated into QA’s daily operations
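
As a rough illustration of refining prompts with business-specific context, the sketch below assembles a prompt from a domain name, a few context lines, and the requirement text. The template wording, the `build_prompt` helper, and the sample coupon rule are assumptions made for this example; the article does not publish Tmall's actual prompts.

```python
from textwrap import dedent

# Hypothetical template; the section names and output fields are illustrative.
PROMPT_TEMPLATE = dedent("""\
    You are a QA engineer for the {domain} domain.
    Business context:
    {context}

    Requirement:
    {requirement}

    Write test cases as a numbered list. For each case give:
    title, preconditions, steps, expected result, priority.
    """)


def build_prompt(domain: str, context: list[str], requirement: str) -> str:
    """Fill the template with domain-specific context and the requirement text."""
    context_block = "\n".join(f"- {line}" for line in context) or "- (none)"
    return PROMPT_TEMPLATE.format(
        domain=domain,
        context=context_block,
        requirement=requirement.strip(),
    )


# Sample inputs are invented for the example.
prompt = build_prompt(
    domain="marketing",
    context=["Coupons cannot be stacked with flash-sale prices."],
    requirement="Users can claim one coupon per campaign.",
)
print(prompt)
```

Fixing the output fields in the template is one plausible way to push a model toward consistent case structure across teams.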

---

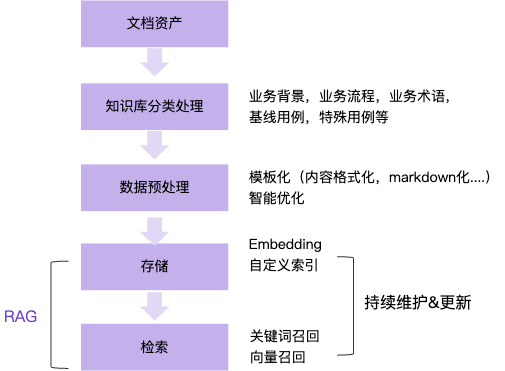

Step 2 — High-Quality Knowledge Base Development

- Capture baseline cases, pitfall scenarios, and asset-loss triggers

- Use RAG to retrieve the most relevant knowledge base entries for each requirement

---

Step 3 — Requirements Standardization

- Implement structured PRD templates

- Improve AI output stability and coverage rates
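
A structured PRD template can be thought of as a fixed set of sections that every module must fill in. The sketch below models one module as a dataclass and reports which sections are still empty. The section names (`user_flow`, `business_rules`, `exception_handling`) are hypothetical, not the fields of Tmall's template.

```python
from dataclasses import dataclass, field, fields


@dataclass
class PrdModule:
    """One functional module of a requirement (section names are hypothetical)."""
    name: str
    user_flow: str = ""
    business_rules: list[str] = field(default_factory=list)
    exception_handling: str = ""


def missing_sections(module: PrdModule) -> list[str]:
    """Return the names of sections that are still empty."""
    return [f.name for f in fields(module) if not getattr(module, f.name)]


# Sample module content is invented for the example.
module = PrdModule(
    name="Coupon claim",
    user_flow="User opens the campaign page and taps 'Claim'.",
)
print(missing_sections(module))  # ['business_rules', 'exception_handling']
```

A check like this could run before generation so that incomplete requirements are flagged instead of silently producing thin test cases.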

---

Step 4 — AI Agent Enablement

- Deploy agents for:

  - Knowledge base auto-construction

  - PRD completion

  - Data integrity checks

---

Step 5 — Platform Integration

- Embed AI generation capabilities into test case management platforms

- Enable conversational and modular case generation with tools like Test Copilot

---

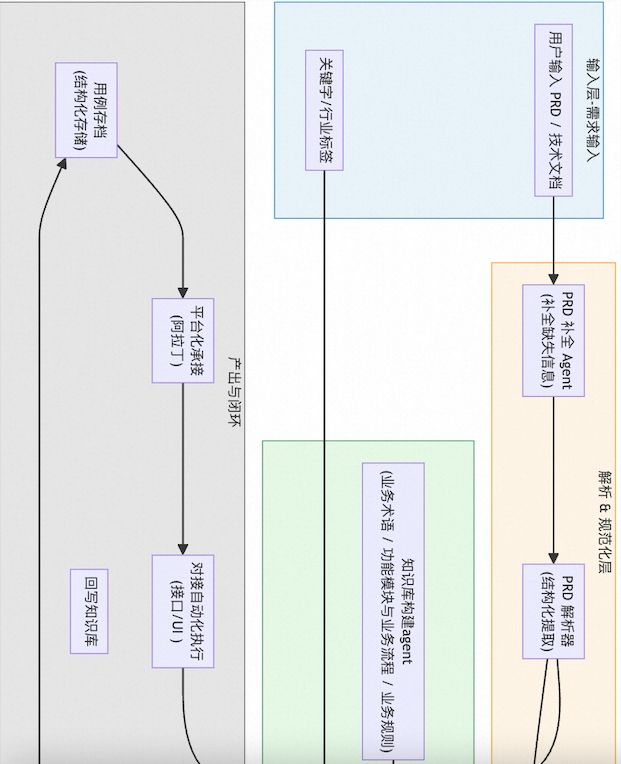

Overall Process Workflow

---

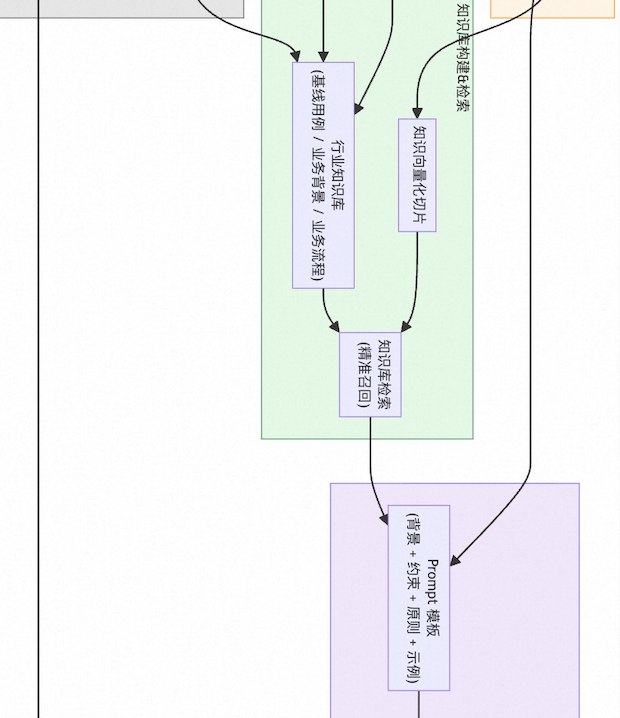

2.2.1 Prompt Engineering Flow

Strategies to ensure alignment across QA teams:

- Generate non-functional cases from functional ones, addressing exceptions & loss-prevention scenarios.

- Break complex requirements into modules, using test copilots for iterative, conversational generation.

- Allow customization for industry-specific cases.

- Tag each input by industry so it is routed to the matching knowledge base, prompts, and examples.
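
The tag-based matching in the last point can be sketched as a lookup from an industry tag to a bundle of knowledge base, prompt template, and example set. All names below (`GenerationProfile`, `PROFILES`, the tag strings, and the resource identifiers) are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationProfile:
    """Resources used for one industry (identifiers are placeholders)."""
    knowledge_base: str
    prompt_template: str
    example_set: str


# Hypothetical registry keyed by industry tag.
PROFILES = {
    "marketing": GenerationProfile("kb_marketing", "prompt_marketing", "examples_marketing"),
    "transaction": GenerationProfile("kb_transaction", "prompt_transaction", "examples_transaction"),
}
DEFAULT_PROFILE = GenerationProfile("kb_general", "prompt_general", "examples_general")


def route(tags: list[str]) -> GenerationProfile:
    """Return the profile for the first recognized tag, else the default."""
    for tag in tags:
        profile = PROFILES.get(tag.lower())
        if profile is not None:
            return profile
    return DEFAULT_PROFILE


print(route(["Marketing"]).knowledge_base)  # kb_marketing
```

Falling back to a general profile is a design choice made for this sketch; a real system might instead ask the user to pick a tag.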

---

2.2.2 Building a Robust Knowledge Base

Scope:

- Test cases: baseline & pitfalls

- Business context: terminology, workflows

- Asset-loss scenarios: conditions & priorities

Best Practices:

- Store in structured formats (Markdown, JSON, tables)

- Segment entries by the smallest functional unit and attach keywords to each segment for recall

Automation:

- Auto-build agents extract case-relevant data from docs

- Reconstruction agents reorganize poorly segmented KBs
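
To make the storage and recall practices above concrete, here is a minimal sketch in which each knowledge base entry is a JSON object covering one functional unit, and recall ranks entries by keyword overlap with the requirement text. The entry schema, the sample entries, and the `recall` function are invented for illustration; a production RAG setup would more likely combine this with embedding-based search.

```python
import json
import re

# Sample entries are made up; one entry per smallest functional unit.
KB_JSON = """
[
  {"unit": "coupon.claim", "type": "baseline",
   "keywords": ["coupon", "claim", "limit"],
   "text": "Verify a user can claim each coupon only once per campaign."},
  {"unit": "coupon.stacking", "type": "asset-loss",
   "keywords": ["coupon", "stack", "discount"],
   "text": "Verify stacked discounts never exceed the configured cap."},
  {"unit": "order.refund", "type": "pitfall",
   "keywords": ["refund", "order"],
   "text": "Verify a partial refund returns the proportional coupon amount."}
]
"""


def recall(query: str, entries: list[dict], top_k: int = 2) -> list[dict]:
    """Rank entries by how many of their keywords appear in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    scored = [(len(words & set(e["keywords"])), e) for e in entries]
    hits = [pair for pair in scored if pair[0] > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps KB order on ties
    return [entry for _, entry in hits[:top_k]]


entries = json.loads(KB_JSON)
for entry in recall("User can claim a coupon with a per-user limit", entries):
    print(entry["unit"], "-", entry["text"])
```

Keeping each entry scoped to one functional unit means a hit brings back a focused snippet rather than a whole document.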

---

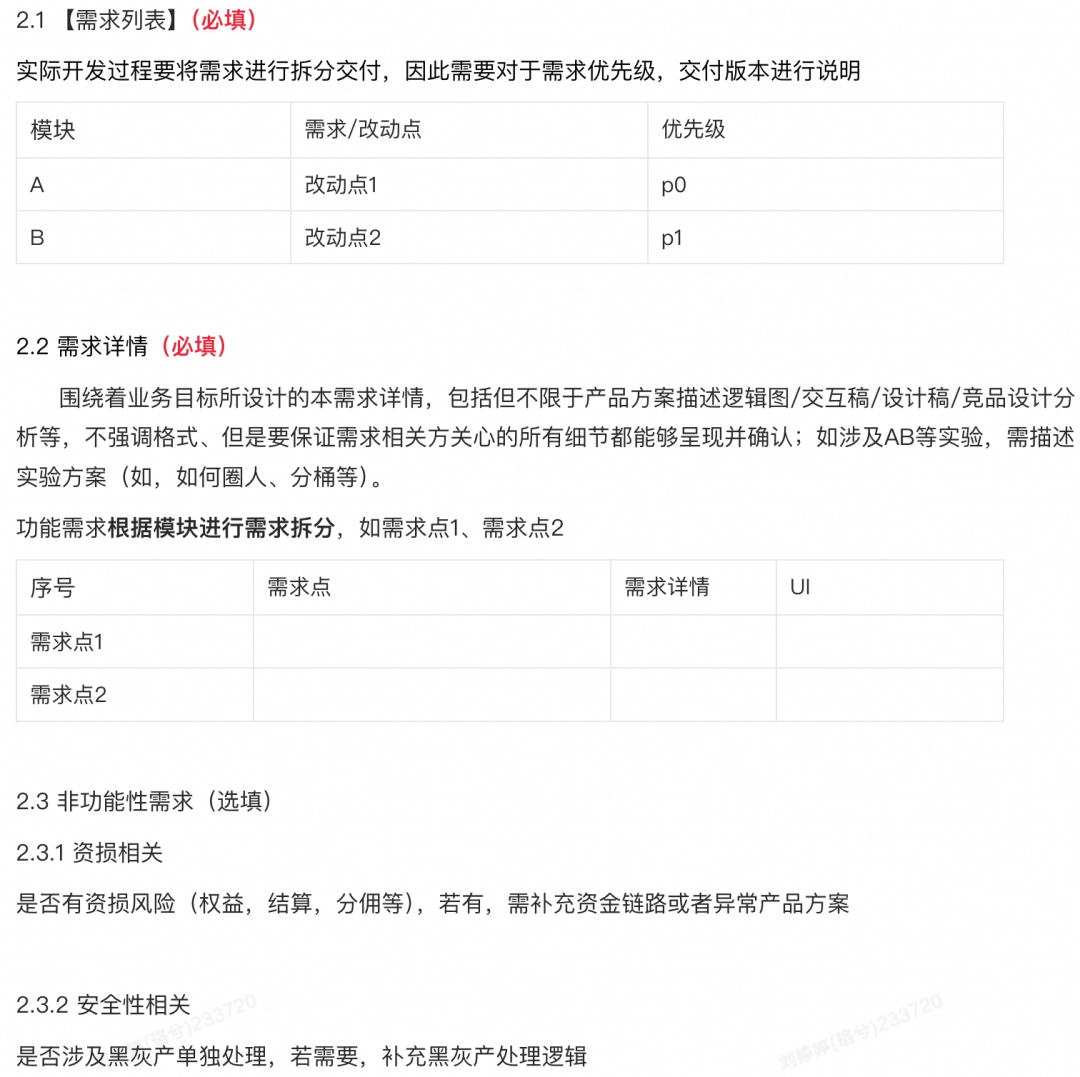

2.2.3 Standardizing Requirements

Results from pilots in Tmall App business domains:

- Higher acceptance & coverage rates

- Clearer module differentiation & improved completeness

---

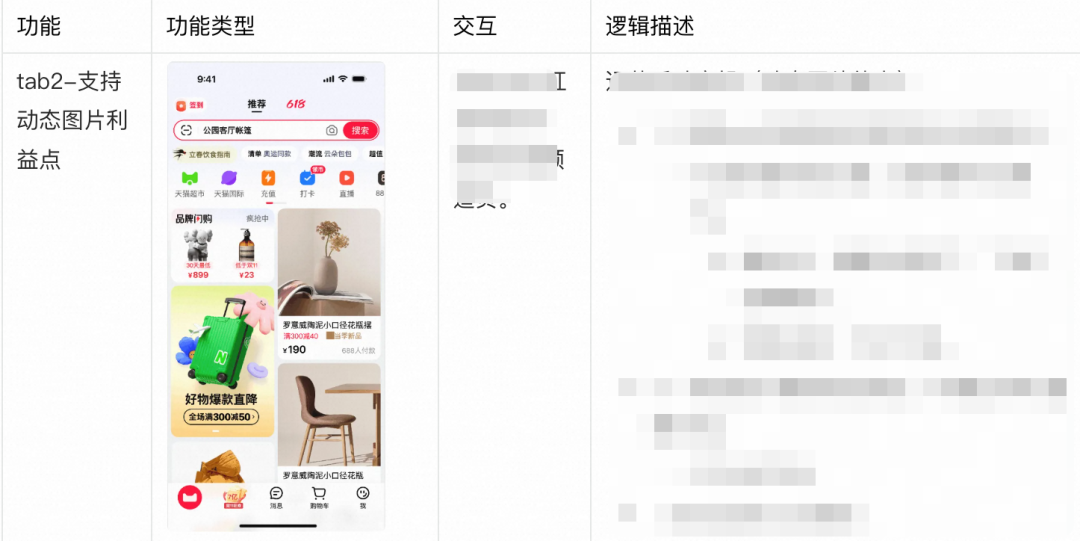

AI-Generated Test Case Examples

---

2.2.4 Platform-Based Integration

Features:

- Visual interface for AI-driven case generation

- Modes: Ai-Test & Test Copilot

- Flexible handling of complex requirements via modular breakdown
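
The modular breakdown can be pictured as splitting a requirement into modules and generating cases for one module at a time. The sketch below assumes a made-up convention where each module starts with a `Module:` line, and uses a stub function in place of a real model call, since the article does not describe the platform's interfaces.

```python
from typing import Callable


def split_into_modules(requirement: str) -> dict[str, str]:
    """Split on lines starting with 'Module:' (a hypothetical PRD convention)."""
    modules: dict[str, str] = {}
    current = None
    for line in requirement.splitlines():
        line = line.strip()
        if line.startswith("Module:"):
            current = line[len("Module:"):].strip()
            modules[current] = ""
        elif current is not None and line:
            modules[current] = (modules[current] + " " + line).strip()
    return modules


def generate_by_module(
    requirement: str, generate: Callable[[str], list[str]]
) -> dict[str, list[str]]:
    """Call the generator once per module so each request stays small."""
    modules = split_into_modules(requirement)
    return {name: generate(f"{name}: {body}") for name, body in modules.items()}


def fake_generate(prompt: str) -> list[str]:
    """Stand-in for a model call; returns one placeholder case."""
    return [f"Check: {prompt}"]


# Sample requirement text is invented for the example.
PRD = """
Module: Coupon claim
Users can claim one coupon per campaign.
Module: Checkout
The coupon is applied at checkout.
"""
cases = generate_by_module(PRD, fake_generate)
for name, module_cases in cases.items():
    print(name, module_cases)
```

In a conversational mode, the per-module results could be reviewed and regenerated individually without rerunning the whole requirement.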

---

3. Application Results

Adoption:

- Consumer-end domains → >85% adoption

- Business-end domains → ~40% adoption

Efficiency:

> In marketing solution scenarios, writing cases for small and medium requirements now takes 0.5 hours instead of 2 hours, a 75% time saving.

---

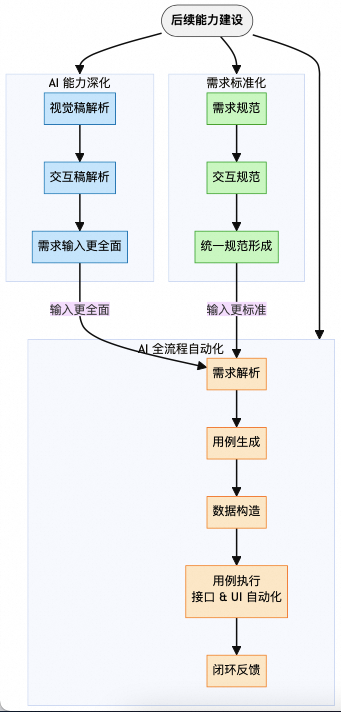

4. Outlook & Roadmap

Remaining challenges:

- Low PRD quality

- No AI support yet for visual design drafts and interaction diagrams

- Lower performance with highly complex requirements

Future direction:

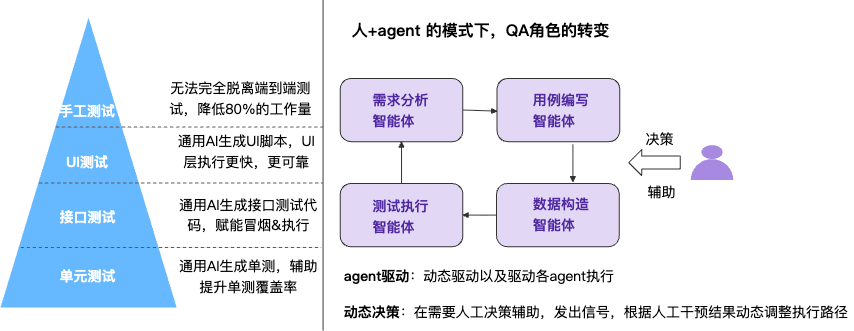

- End-to-end automation:

  - _Requirement analysis → Test case generation → Script creation/execution → Defect reporting → Feedback loop_

Transformation goal:

Shift QA from repetitive manual work to knowledge work, focusing human expertise on strategy, exploratory testing, and risk identification.

---

