# From 0 to 1: Practice and Breakthroughs in Tmall’s AI Test Case Generation

**Article #121 of 2025**

*(Estimated reading time: 15 minutes)*

---

## **01. Background**

### **1.1 Industry Analysis & Reflection**

With the rapid evolution of large models, the testing industry is exploring **AI-assisted approaches** across QA scenarios. For **building intelligent agents**, most solutions rely on the **Prompt + RAG** (Retrieval-Augmented Generation) pattern and target tasks such as:

- Requirement analysis

- Test case generation

- Data construction

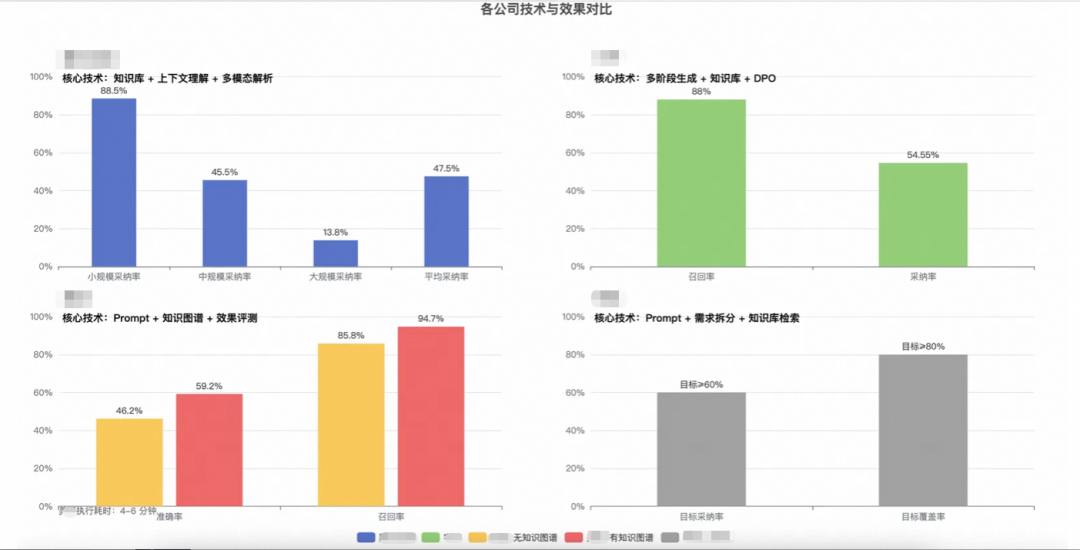

Below is an example from the industry on **case generation** approaches and their comparative effectiveness:

> *Reference: QECon Conference and external sharing*

#### Key Observations:

- Most solutions extend model capabilities through **Prompt + RAG** rather than fine-tuning.

- Solutions differ mainly in how they handle **requirement parsing**, **test analysis**, and **knowledge base construction**.

- In **Tmall-specific** technical and business contexts, test case generation must be tailored to each industry's characteristics.

- Output quality depends heavily on input artifacts such as the PRD, so **standardizing those inputs** is essential.

---

### **1.2 Tmall Industry Characteristics**

In **e-commerce**, change is constant:

- **Fast releases** and **high product quality demands** intensify pressure on QA.

- Traditional testing models face efficiency and coverage bottlenecks.

**Challenges faced by testing teams:**

- **High labor cost under rapid iteration**

- **Manual bottlenecks** in complex case design

- Heavy reliance on **tester experience**

**Pain points in the test case authoring phase:**

1. **Low design efficiency**: writing cases by hand is time-consuming.

2. **Requirement misinterpretation**: testers understand requirements differently, so case design is inconsistent.

3. **Poor knowledge retention**: there are few reusable baseline cases or documented lessons learned.

4. **High repetitive workload**: a large volume of cases follow recurring patterns yet are still written manually.

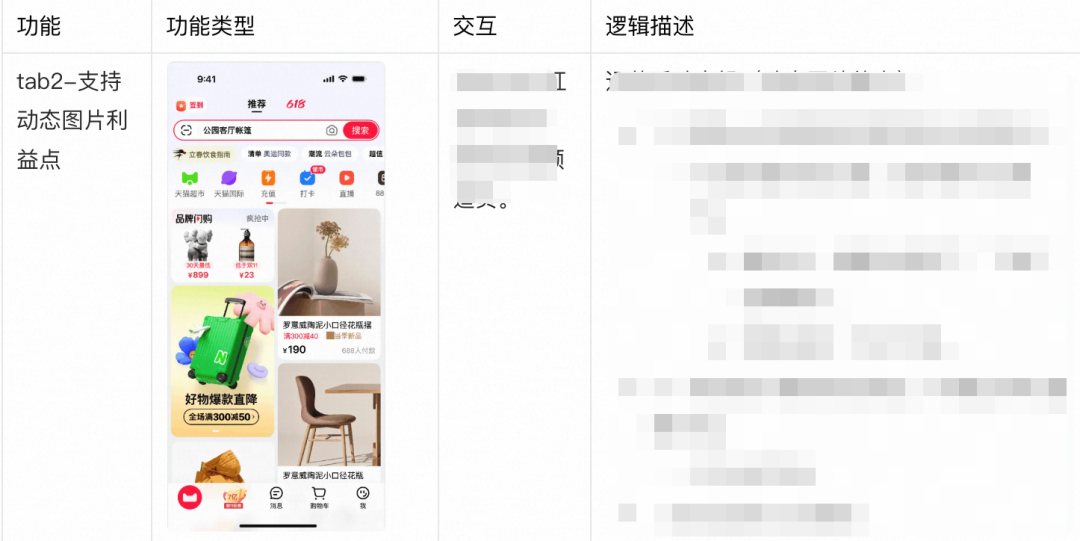

**Business categories in Tmall testing:**

1. Marketing solutions

2. Shopping guidance scenarios

3. Transaction & settlement

4. Cross-department collaboration

5. Middle & back office systems

#### **Objective:**

Leverage **AI technology** to:

- Enable **intelligent test case generation**

- Ensure workflows align with **industry-specific demands**

---

## **02. Implementation Strategy**

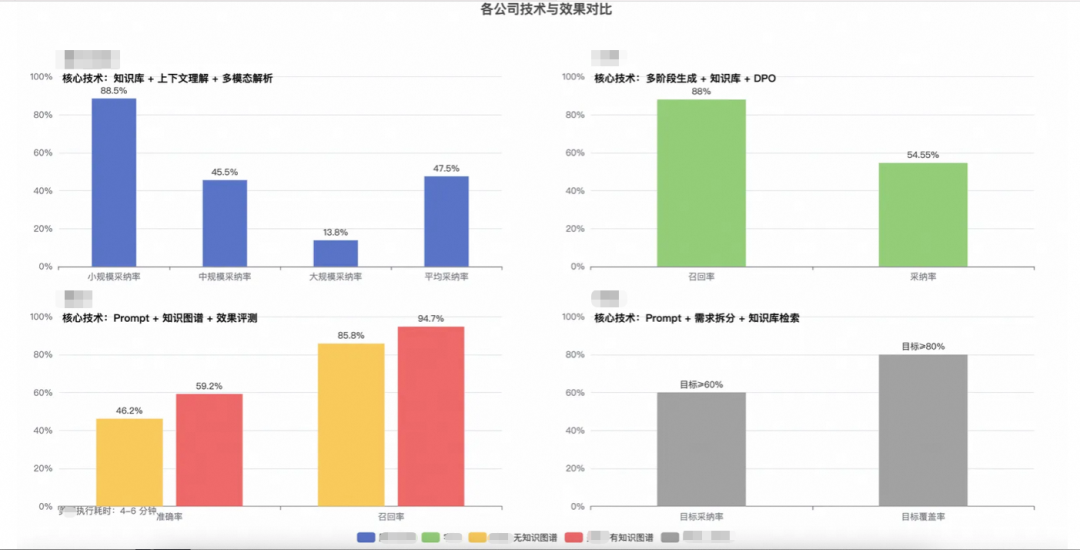

### **2.1 Test Case Generation Approach**

In QA, guaranteeing **business quality and coverage** involves:

`Requirement delivery → Requirement understanding → Risk assessment → Case design → Execution → Defect tracking → Integration/regression → Release → Feedback tracking`

- The stages from case design through regression consume **~70% of QA time**.

- Given **fast release cycles**, **high quality targets**, and **limited manpower**, large models can assist with case design and power intelligent tooling.

**Approach Overview:**

---

### **2.2 Detailed Implementation Plan**

#### **Core Strategy:**

**Requirement Standardization + Prompt Engineering + Knowledge Base (RAG) + Platform Integration**, supported by **AI Agents** for accelerated KB construction.

---

### **2.2.1 Prompt Engineering & Process Optimization**

Use carefully designed prompts, enriched with business context, to guide LLM output (the model itself is not fine-tuned).

**Actions:**

1. Extend generation beyond functional cases to **non-functional test cases** (exception handling, financial-loss scenarios).

2. Automatically split complex requirements into modules and generate cases conversationally via **Test Copilot**.

3. Customize output per industry using domain-specific examples.

4. Build an end-to-end AI pipeline where:

   - Input = requirements + industry tags

   - Output = module-specific cases, informed by relevant KB retrieval
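
A minimal sketch of the input/output contract described above, assuming a markdown PRD whose modules are marked by `## ` headings and a small in-memory knowledge base tagged by industry. `KBEntry`, `split_modules`, `retrieve`, `build_prompts`, and the prompt wording are all hypothetical; the article does not describe Tmall's actual prompts or retrieval backend, which would more likely use vector search than the keyword overlap used here. The sketch stops at building one prompt per module and leaves out the model call.

```python
from dataclasses import dataclass


@dataclass
class KBEntry:
    industry: str  # hypothetical industry tag, e.g. "marketing"
    text: str      # a reusable test case or business rule


def split_modules(prd: str) -> dict[str, str]:
    """Split a markdown PRD into {module title: body}, one module per '## ' heading."""
    modules: dict[str, str] = {}
    current = None
    for line in prd.splitlines():
        if line.startswith("## "):
            current = line[3:].strip()
            modules[current] = ""
        elif current is not None:
            modules[current] += line + "\n"
    return modules


def retrieve(kb: list[KBEntry], industry: str, query: str, k: int = 2) -> list[str]:
    """Return up to k entries for this industry, ranked by shared-word count."""
    words = set(query.lower().split())
    scored = [(len(words & set(e.text.lower().split())), e.text)
              for e in kb if e.industry == industry]
    hits = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [text for _, text in hits[:k]]


# Hypothetical prompt; asks for non-functional cases alongside functional ones.
PROMPT_TEMPLATE = """You are a QA engineer for the {industry} domain.
Module: {module}
Requirement:
{requirement}
Reference knowledge:
{knowledge}
Write functional test cases, then exception and financial-loss test cases."""


def build_prompts(prd: str, industry: str, kb: list[KBEntry]) -> dict[str, str]:
    """Input: requirements + industry tag. Output: one prompt per module."""
    prompts = {}
    for module, body in split_modules(prd).items():
        snippets = retrieve(kb, industry, module + " " + body)
        prompts[module] = PROMPT_TEMPLATE.format(
            industry=industry,
            module=module,
            requirement=body.strip(),
            knowledge="\n".join(f"- {s}" for s in snippets) or "- (none)",
        )
    return prompts


kb = [KBEntry("marketing", "coupon stacking must not exceed order total"),
      KBEntry("transaction", "refund amount equals paid amount")]
prd = "## Coupon claim\nEach user claims one coupon per day.\n## Coupon use\nCoupon applies at checkout."
prompts = build_prompts(prd, "marketing", kb)
print(prompts["Coupon claim"])
```

Filtering by industry tag before ranking keeps, for example, transaction rules out of a marketing prompt, which is one way to read "customizable output per industry".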

---

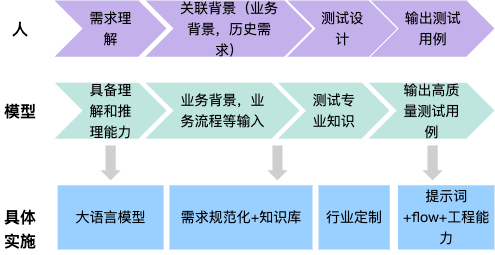

### **2.2.2 High-Quality Knowledge Base Construction**

Build standardized KBs that enable **precise RAG retrieval**.

**KB Structure**:

- **Scope**: Test cases, business process knowledge, financial loss scenarios

- **Formats**: Text, Markdown, JSON, tables

- **Retrieval**: Chunking, indexing, keyword targeting at function-point level

- **Maintenance**: Cleaning, de-duplication, updates
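
One way to read the Retrieval and Maintenance bullets, sketched under stated assumptions: the KB is markdown with one `### ` heading per function point, near-duplicates differ only in whitespace or letter case, and retrieval uses a simple inverted keyword index. All function names are hypothetical. Chinese-language content would need a proper word segmenter instead of the `\w+` tokenizer, and a production KB would typically pair this with vector embeddings.

```python
import hashlib
import re
from collections import defaultdict


def clean(text: str) -> str:
    """Cleaning step: collapse runs of whitespace and trim."""
    return re.sub(r"\s+", " ", text).strip()


def chunk_by_function_point(doc: str) -> list[str]:
    """Chunking: each '### ' function point becomes one retrievable unit."""
    chunks = []
    for i, part in enumerate(re.split(r"(?m)^### ", doc)):
        body = clean(part)
        if body:
            # Part 0 is any preamble before the first function-point heading.
            chunks.append(body if i == 0 else "### " + body)
    return chunks


def deduplicate(chunks: list[str]) -> list[str]:
    """De-duplication: drop chunks whose lowercased content hash was already seen."""
    seen: set[str] = set()
    unique = []
    for chunk in chunks:
        digest = hashlib.sha256(chunk.lower().encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(chunk)
    return unique


def build_keyword_index(chunks: list[str]) -> dict[str, set[int]]:
    """Indexing: map each lowercase word to the ids of chunks containing it."""
    index: dict[str, set[int]] = defaultdict(set)
    for i, chunk in enumerate(chunks):
        for word in re.findall(r"\w+", chunk.lower()):
            index[word].add(i)
    return index


doc = """Coupon module overview.
### Coupon claim
One claim per user per day.
### Coupon claim
One claim  per user per   day.
### Refund
Refund equals the paid amount.
"""
chunks = deduplicate(chunk_by_function_point(doc))
index = build_keyword_index(chunks)
print(chunks)
print(sorted(index["refund"]))
```

Cleaning runs before hashing so that copies differing only in spacing collapse to one chunk.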

**Automation Agents**:

- Extract business terminology, processes, and core functions

- Re-segment poorly chunked KBs to improve chunk quality
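
The agents described above use an LLM to restructure knowledge. The rule-based function below is a swapped-in, deterministic stand-in that only illustrates the intended outcome of re-segmentation: no fragment too small to carry meaning, and no chunk too large to retrieve precisely. `resegment` and its `min_len`/`max_len` thresholds (in characters) are hypothetical.

```python
import re

# Sentence boundary: whitespace after ASCII . ! ?, or directly after CJK 。！？
SENTENCE_END = re.compile(r"(?<=[.!?])\s+|(?<=[。！？])")


def resegment(chunks: list[str], min_len: int = 40, max_len: int = 200) -> list[str]:
    """Merge short fragments into the previous chunk, then split long chunks by sentence.

    A single sentence longer than max_len is kept whole rather than cut mid-sentence.
    """
    merged: list[str] = []
    for chunk in (c.strip() for c in chunks):
        if not chunk:
            continue
        if merged and len(chunk) < min_len:
            merged[-1] += " " + chunk  # fragment joins its predecessor
        else:
            merged.append(chunk)  # a short first fragment has no predecessor and stays as-is

    result: list[str] = []
    for chunk in merged:
        if len(chunk) <= max_len:
            result.append(chunk)
            continue
        buf = ""
        for sentence in SENTENCE_END.split(chunk):
            sentence = sentence.strip()
            if not sentence:
                continue
            if buf and len(buf) + 1 + len(sentence) > max_len:
                result.append(buf)  # flush before the chunk would exceed max_len
                buf = sentence
            else:
                buf = f"{buf} {sentence}".strip()
        if buf:
            result.append(buf)
    return result


print(resegment(["First sentence here. Second sentence here. Third one."], min_len=1, max_len=25))
```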

---

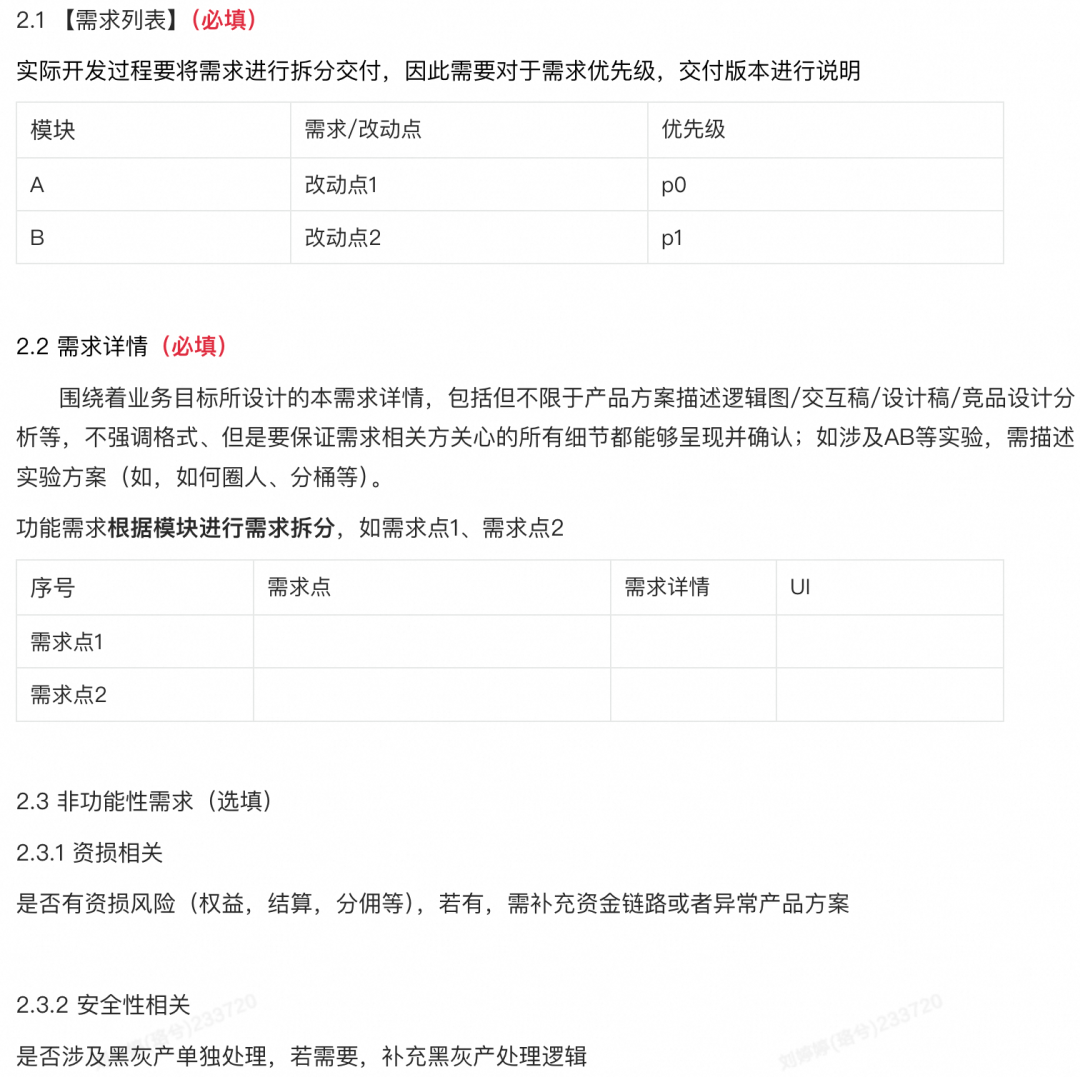

### **2.2.3 Requirement Standardization**

Collaborate with PMs to define a **PRD template**.

**Results from pilot projects (Tmall app)**:

- Higher **case adoption & coverage**

- Better modular structuring of generated cases
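
Because generation quality depends on the PRD, a cheap safeguard is to check each PRD against the template before generation. The sketch below assumes a markdown PRD and uses hypothetical section names; the article does not publish the actual Tmall template.

```python
import re

# Hypothetical section names, not the actual Tmall PRD template.
REQUIRED_SECTIONS = ["Background", "Functional Modules", "Business Rules", "Exception Handling"]

# A markdown ATX heading: 1-6 '#' characters, at least one space or tab, then the title.
HEADING = re.compile(r"(?m)^#{1,6}[ \t]+(.+?)[ \t]*$")


def missing_sections(prd: str, required: list[str] = REQUIRED_SECTIONS) -> list[str]:
    """Return required section titles (case-insensitive) absent from the PRD's headings."""
    headings = {title.strip().lower() for title in HEADING.findall(prd)}
    return [s for s in required if s.lower() not in headings]


prd = """# Background
Daily coupon campaign.
## Functional Modules
### Coupon claim
## Business Rules
One claim per user per day.
"""
gaps = missing_sections(prd)
if gaps:
    print("PRD incomplete, missing:", ", ".join(gaps))
```

Rejecting or flagging incomplete PRDs up front is one plausible way to turn the template into the "higher adoption and coverage" effect reported for the pilot.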

---

### **2.2.4 Platform-Based Integration**

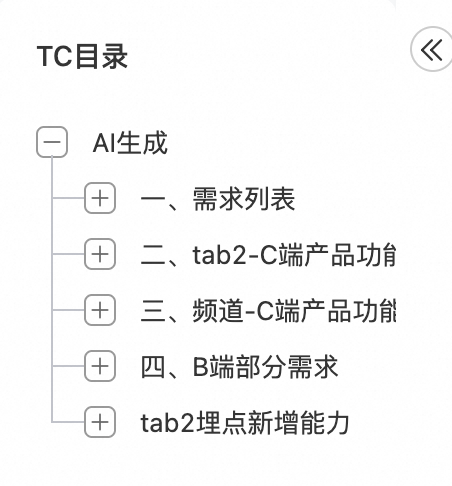

Integrate case generation into the **Test Case Management Platform** with a visual interface.

Features:

- Supports **Ai-Test** and **Test Copilot** modes

- Modular decomposition & conversational generation

- Ongoing exploration of AI for **data construction, execution, and full-cycle automation**

---

## **03. Application Results**

1. **Adoption Rate**:

   - C-end (user-facing) domains: >85% adoption

   - B-end (business-facing) domains: <40% adoption

2. **Efficiency Gains**:

   - Case creation time reduced from **2 hours to 0.5 hours** (75% time saving) in certain domains
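
The efficiency figure is a relative reduction: (2 - 0.5) / 2 = 0.75. The article does not define how adoption is measured, so the `adoption_rate` helper below assumes it is the share of generated cases that testers keep, which is one plausible reading. Both helpers are illustrative only.

```python
def adoption_rate(adopted: int, generated: int) -> float:
    """Assumed definition: fraction of AI-generated cases that testers keep."""
    if generated <= 0:
        raise ValueError("generated must be positive")
    return adopted / generated


def time_saving(before_hours: float, after_hours: float) -> float:
    """Relative reduction in case-authoring time."""
    if before_hours <= 0:
        raise ValueError("before_hours must be positive")
    return (before_hours - after_hours) / before_hours


# Figures from the article: 2 hours before, 0.5 hours after.
print(f"{time_saving(2.0, 0.5):.0%}")  # prints 75%
```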

---

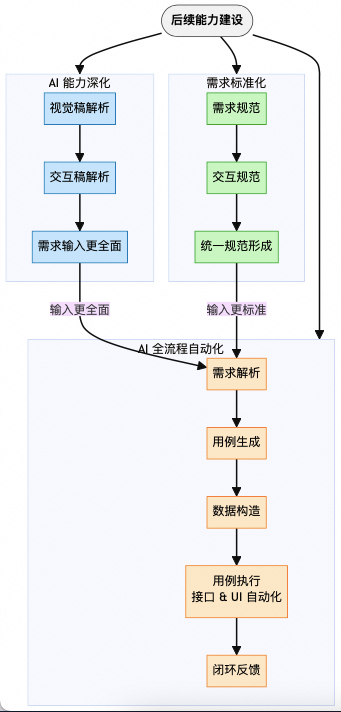

## **04. Outlook**

**Current issues**:

- Low PRD quality

- Weak visual/interaction draft parsing

- Poor handling of complex requirements

**Future plans**:

- Advance towards **AI full-process testing**

- Enhance AI parsing, memory, and planning capabilities

---

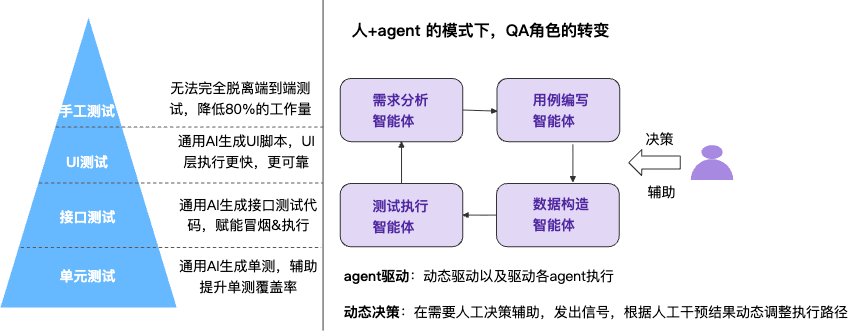

### **QA Role Transformation**

Shift from **manual, labor-intensive testing** → **analytical, knowledge-intensive testing**:

- AI handles **repetitive tasks**

- QA focuses on:

  - Business risk identification

  - Test strategy development

  - Exploratory testing

  - User experience analysis

This evolution can help break the **“coverage–cost–speed”** trade-off.

