From Code Generation to Autonomous Decision-Making: Building a Coding-Driven "Self-Programming" Agent

# Building a Self-Programming Agent

*A Step-by-Step Guide to Creating an AI Assistant That Writes and Executes Its Own Code*

---

## Introduction

Using a Large Language Model (LLM) as the **"brain"** of an Agent opens up a wide range of applications. Among them, **code generation** is one of the most successful to be deployed at scale.

Given that LLMs can produce high-quality code, we ask: why not let them **write and run code to control their own behavior**? This enables the Agent’s operational logic to go beyond simple “next step” reasoning, supporting **complex branching, loops, and advanced automation**.

In this article, you'll learn how we built an Agent capable of **self-programming** — designed as a *junior teammate* ready to contribute actively in DevOps workflows.

---

## Agent System Design

### Overview

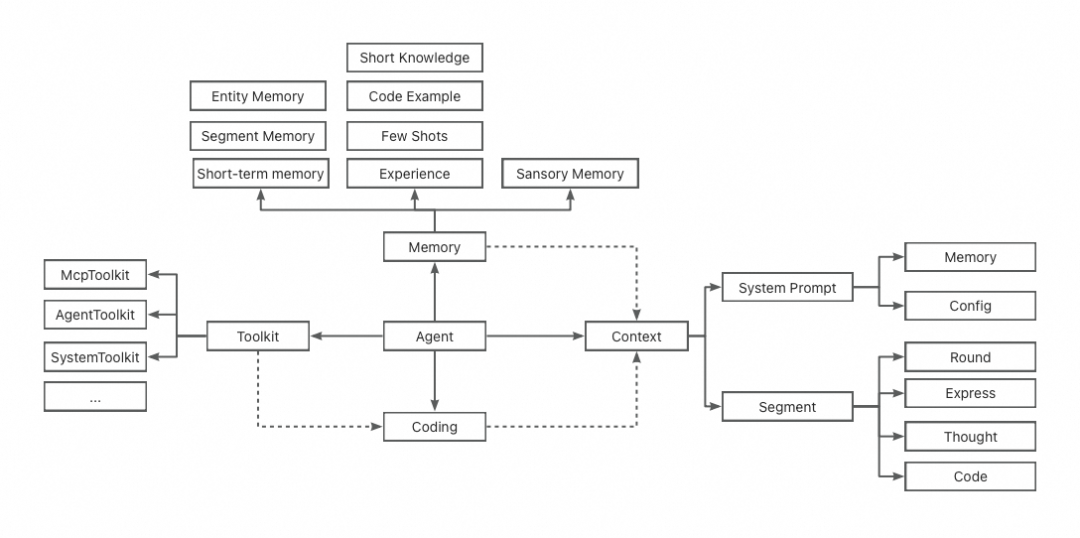

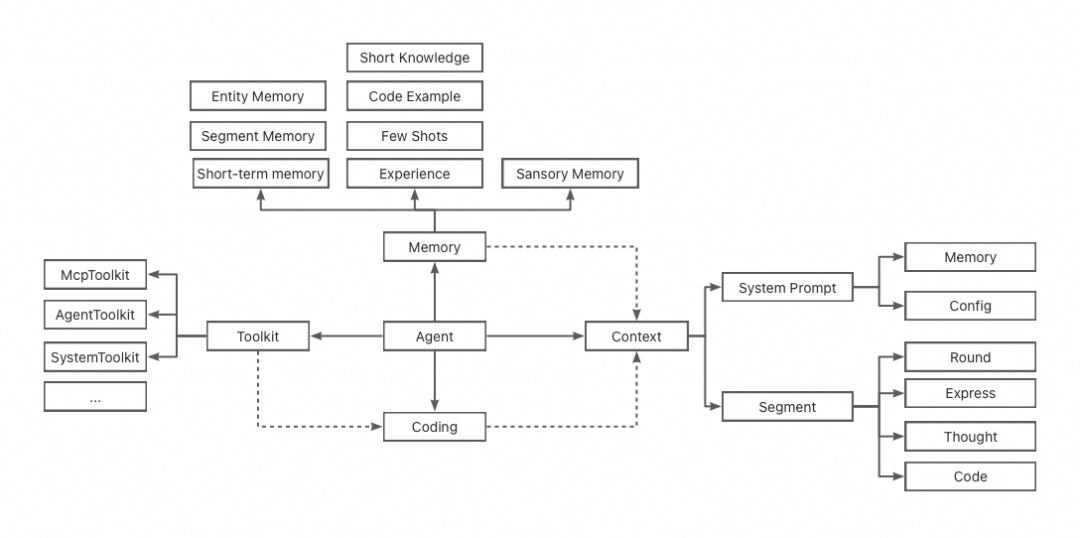

We optimized the **ReAct Agent pattern** to create a more flexible and powerful execution framework.

**Key improvements:**

- **Py4j-based architecture** that replaces rigid JSON tool calls with **generated code plus a generalized invocation layer**

- **Spring Boot + Spring AI** backend integration with Alibaba AI capabilities

- Full-chain **evaluation and monitoring** via observability platforms

- **MCP protocol** for cross-tool capability integration

- **A2A protocol** for multi-Agent ecosystems

- **Hybrid model strategy**:

  - **Qwen3-Turbo** for translation/data extraction (low latency)

  - **Qwen3-Coder** for reasoning/dynamic coding

  - On-demand Qwen/DeepSeek models for general tasks

*Agent internal module distribution*
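To make the first improvement concrete, the following is a minimal sketch of the difference between a single JSON tool call and a generated snippet that loops and branches over tools. The tool names (`list_services`, `restart_service`) and the helper `run_generated_code` are hypothetical, and the tools are plain Python functions here rather than Java methods reached through Py4j. Note that `exec` is not a sandbox; this is for illustration only.

```python
import json

# Hypothetical tools; in the system described above these would live on the Java side.
def list_services():
    return [{"name": "api", "healthy": True}, {"name": "worker", "healthy": False}]

def restart_service(name):
    return f"restarted {name}"

TOOLS = {"list_services": list_services, "restart_service": restart_service}

# JSON-style invocation: one tool and one set of arguments per model round.
JSON_CALL = json.loads('{"tool": "restart_service", "args": {"name": "worker"}}')

def run_json_call(call):
    return TOOLS[call["tool"]](**call["args"])

# Code-style invocation: the model emits a snippet that can loop and branch
# over several tool calls within a single round.
GENERATED_CODE = """
results = []
for svc in list_services():
    if not svc["healthy"]:
        results.append(restart_service(svc["name"]))
"""

def run_generated_code(code):
    # Only the tools are placed in the namespace the snippet runs in.
    namespace = dict(TOOLS)
    exec(code, namespace)  # illustration only: exec provides no isolation
    return namespace["results"]
```

With JSON calls, finding and restarting every unhealthy service takes at least two model rounds (list, then restart). The generated snippet does both in one round and handles any number of services.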

---

### Memory Architecture

- **Short-Term Memory**: Session-restricted knowledge

- **Sensory Memory**: Environmental awareness (e.g., page, URL data capture)

- **Experience**: Learned configurations & summaries to guide decision-making

---

### Context System

Our **Context Stack** includes:

- **System Prompt** (dynamic/configurable)

- **Memory content**

- **User Prompt** segment (*Inference Segment*)

- **Round-based execution planning**
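As a rough sketch of how such a stack might be flattened into chat messages, the example below assumes a two-message layout (system and user). The class name `ContextStack`, its fields, and the section labels are illustrative assumptions, not the actual implementation.

```python
from dataclasses import dataclass, field

@dataclass
class ContextStack:
    system_prompt: str                                # dynamic/configurable system prompt
    memory: list = field(default_factory=list)        # memory items to surface to the model
    round_plan: list = field(default_factory=list)    # planned steps for the current round

    def build_messages(self, inference_segment: str) -> list:
        """Assemble system and user messages from the layers of the stack."""
        system_parts = [self.system_prompt]
        if self.memory:
            system_parts.append("Memory:\n" + "\n".join(f"- {m}" for m in self.memory))

        user_parts = []
        if self.round_plan:
            steps = "\n".join(f"{i}. {s}" for i, s in enumerate(self.round_plan, 1))
            user_parts.append("Plan for this round:\n" + steps)
        user_parts.append(inference_segment)          # the Inference Segment goes last

        return [
            {"role": "system", "content": "\n\n".join(system_parts)},
            {"role": "user", "content": "\n\n".join(user_parts)},
        ]
```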

---

## Real-World Integration Example

Platforms like the [AiToEarn official site](https://aitoearn.ai/) show how agents can expand into **multi-platform creative publishing**. AiToEarn connects AI-generated content with simultaneous distribution to Douyin, Kwai, WeChat, Bilibili, Rednote, Facebook, Instagram, LinkedIn, Threads, YouTube, Pinterest, and X, and also offers analytics and an [AI model ranking](https://rank.aitoearn.ai).

Our aim: Agents capable of internal DevOps tasks and **external monetization workflows**.

---

## Agent Functional Zones

### 1. Perception Zone

Handles **input tracking** from:

- User messages

- Click events

- Sub-Agent async responses

**Capabilities:**

- Language parsing

- Fuzzy interpretation

- Scenario analysis

- Context enhancement

- Environment parsing

---

### 2. Cognitive Zone

**IntentPlanner → Segment Mechanism → Python Execution**

- IntentPlanner

  - SegmentBuilder

  - InferencePromptConfigManager

  - PromptBuilders (Thought / Cmd / Custom)

The Cognitive Zone uses generated **code** to fulfill the parsed user intent.

---

### 3. Motor Zone

Executes **complex multi-round tasks**:

- Sets sub-goals each round

- Evaluates task completion status

- Decides whether to continue or abandon
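The three responsibilities above can be sketched as a bounded loop. The function `run_task`, the `Status` enum, and the three callables are hypothetical names chosen for this example. In the real Agent, planning and evaluation would be model calls and execution would run generated code.

```python
from enum import Enum

class Status(Enum):
    DONE = "done"
    CONTINUE = "continue"
    ABANDON = "abandon"

def run_task(plan_round, execute, evaluate, max_rounds=5):
    """Run rounds until the task is done, abandoned, or the round budget is spent."""
    history = []
    for round_no in range(1, max_rounds + 1):
        sub_goal = plan_round(round_no, history)   # set this round's sub-goal
        result = execute(sub_goal)                 # carry it out
        history.append((sub_goal, result))
        status = evaluate(history)                 # judge task completion
        if status is not Status.CONTINUE:
            return status, history
    # Budget exhausted without finishing: give up rather than loop forever.
    return Status.ABANDON, history

# Usage with stub callables standing in for model and tool calls.
STEPS = ["check", "fix", "verify"]
status, history = run_task(
    plan_round=lambda n, h: STEPS[n - 1],
    execute=lambda goal: f"{goal} ok",
    evaluate=lambda h: Status.DONE if len(h) == len(STEPS) else Status.CONTINUE,
)
```

The round budget is one simple way to implement the "abandon" decision. An evaluator could also return `Status.ABANDON` directly when it judges the goal unreachable.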

---

### 4. Expression Zone

Manages **output delivery**, including:

- Text messages

- Cards

- Event sequences

---

### 5. Self-Evaluation Zone

Runs **SelfTaskCheck** after goals are completed, re-triggering processes if necessary.

---

## Context Engineering

### Key Components

- **Segments**: Current & historical execution fragments

- **Environmental Contexts**: Session, tenant, and variable configs

- **Tool Contexts**: Available tools, usage descriptions

---

### Prompt Assembly

We use modular prompt building, with separate handling for the **system**, **user**, and **FIM (Fill-in-the-Middle)** formats.

**FIM Example:**

`<|fim_prefix|>`

Prefix code here

`<|fim_suffix|>`

Suffix code here

`<|fim_middle|>`

The model generates the code that belongs between the prefix and the suffix.
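A minimal sketch of assembling a FIM prompt from the three markers shown in the example. The helper names `build_fim_prompt` and `splice_completion` are assumptions for illustration. The exact whitespace between markers and code depends on the model, so check the model's documentation before relying on this layout.

```python
FIM_PREFIX = "<|fim_prefix|>"
FIM_SUFFIX = "<|fim_suffix|>"
FIM_MIDDLE = "<|fim_middle|>"

def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Place the known code around the gap; the model generates what follows FIM_MIDDLE."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"

def splice_completion(prefix: str, middle: str, suffix: str) -> str:
    """Insert the model's output back between the prefix and the suffix."""
    return prefix + middle + suffix

# Usage: the gap is the body of `add`.
PREFIX = "def add(a, b):\n    "
SUFFIX = "\n\nprint(add(1, 2))\n"
prompt = build_fim_prompt(PREFIX, SUFFIX)
program = splice_completion(PREFIX, "return a + b", SUFFIX)  # middle written by hand here
```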

---

## Memory System

We implement **multi-layer memory** inspired by cognitive science:

- **Sensory Memory**

- **Short-Term Memory** (session-level, ES-based)

- **Long-Term Memory** (persistent knowledge, experience)

- **User Preferences** (role definitions, work habits)

**Goal:** Maintain context continuity, enable reasoning, and support personalization.
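The layers can be pictured as one container with a different retention policy per layer. The sketch below keeps everything in process memory for simplicity, whereas the system described here backs short-term memory with ES. The class `AgentMemory`, its method names, and the keyword-based `recall` are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class AgentMemory:
    sensory: dict = field(default_factory=dict)                           # latest environment readings
    short_term: deque = field(default_factory=lambda: deque(maxlen=20))   # recent session turns
    long_term: dict = field(default_factory=dict)                         # topic -> learned summary
    preferences: dict = field(default_factory=dict)                       # user role, work habits

    def observe(self, key, value):
        self.sensory[key] = value          # newer observations overwrite older ones

    def remember_turn(self, text):
        self.short_term.append(text)       # the oldest turn drops out once maxlen is reached

    def learn(self, topic, summary):
        self.long_term[topic] = summary

    def recall(self, query):
        """Naive keyword match on topic names; a real system would use search or embeddings."""
        q = query.lower()
        return [summary for topic, summary in self.long_term.items() if topic.lower() in q]

    def snapshot(self, query):
        """Collect what each layer contributes to the context for one query."""
        return {
            "sensory": dict(self.sensory),
            "short_term": list(self.short_term),
            "experience": self.recall(query),
            "preferences": dict(self.preferences),
        }
```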

---

## Code Execution & Bridging

### Python Execution Engine

- Java triggers Python code execution

- Supports async execution

- Lifecycle management & monitoring
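The sketch below illustrates these three points from the Python side only. It assumes a launcher that runs each snippet in a separate interpreter process, which may differ from how the Java side actually starts Python. The names `ExecutionResult`, `run_python`, and `submit_python` are hypothetical.

```python
import subprocess
import sys
from concurrent.futures import Future, ThreadPoolExecutor
from dataclasses import dataclass

@dataclass
class ExecutionResult:
    status: str   # "succeeded", "failed", or "timed_out"
    stdout: str
    stderr: str

def run_python(code: str, timeout_s: float = 10.0) -> ExecutionResult:
    """Run a snippet in a fresh interpreter and report how its lifecycle ended."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True, text=True, timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process before raising this exception.
        return ExecutionResult("timed_out", "", f"timed out after {timeout_s}s")
    status = "succeeded" if proc.returncode == 0 else "failed"
    return ExecutionResult(status, proc.stdout, proc.stderr)

_pool = ThreadPoolExecutor(max_workers=4)

def submit_python(code: str, timeout_s: float = 10.0) -> Future:
    """Async variant: returns immediately with a Future holding the eventual result."""
    return _pool.submit(run_python, code, timeout_s)
```

Running each snippet in its own process keeps a crash or an infinite loop in generated code from taking down the host, and the status field gives monitoring a single value to track.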

---

### Toolkit Bridge (Py4j)

Connects Python → Java with:

- **Singleton Gateway**

- **Fixed Port (15333)**

- **Dynamic Toolkit Proxy** calls
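A possible shape for the Python side of this bridge is sketched below. `JavaGateway`, `GatewayParameters`, and `entry_point` are part of the Py4J client API. The `ToolkitProxy` class and the Java method `invoke(toolkit, method, jsonArgs)` are assumptions made for this example and may not match the real entry point.

```python
import json
from functools import lru_cache

GATEWAY_PORT = 15333  # the fixed port named above

@lru_cache(maxsize=1)
def get_entry_point():
    """Singleton gateway: connect once per process and reuse the Java entry point."""
    # Imported lazily so the proxy below can be exercised without py4j installed.
    from py4j.java_gateway import GatewayParameters, JavaGateway
    gateway = JavaGateway(gateway_parameters=GatewayParameters(port=GATEWAY_PORT))
    return gateway.entry_point

class ToolkitProxy:
    """Turns `proxy.some_method(**kwargs)` into one generic call on the Java side."""

    def __init__(self, toolkit_name, entry_point=None):
        self._toolkit_name = toolkit_name
        self._entry_point = entry_point   # injectable for tests; defaults to the gateway

    def __getattr__(self, method_name):
        # Only reached for names not found normally, i.e. the dynamic tool methods.
        if method_name.startswith("_"):
            raise AttributeError(method_name)

        def call(**kwargs):
            entry_point = self._entry_point if self._entry_point is not None else get_entry_point()
            # Hypothetical Java signature: String invoke(String toolkit, String method, String jsonArgs)
            raw = entry_point.invoke(self._toolkit_name, method_name, json.dumps(kwargs))
            return json.loads(raw)

        return call

# Usage with a stand-in for the Java entry point, so no JVM is needed.
class FakeEntryPoint:
    def invoke(self, toolkit, method, args_json):
        return json.dumps({"toolkit": toolkit, "method": method, "args": json.loads(args_json)})

deploy = ToolkitProxy("deploy", entry_point=FakeEntryPoint())
reply = deploy.restart(service="api", force=True)
```

Because the proxy resolves method names at call time, new tools registered on the Java side become callable from generated code without regenerating Python stubs.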

---

### Parameter Handling

- Named argument unification

- Type conversion (Java → Python)

- JSON serialization for transmission
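One way to read these three steps, sketched with the standard library only: bind positional and keyword arguments to parameter names, serialize them as JSON, and decode JSON results back into Python types. The function names and the example tool `restart_service` are hypothetical.

```python
import inspect
import json

def unify_arguments(func, *args, **kwargs) -> dict:
    """Map positional and keyword arguments onto parameter names, filling in defaults."""
    bound = inspect.signature(func).bind(*args, **kwargs)
    bound.apply_defaults()
    return dict(bound.arguments)

def _encode(value):
    # Called by json.dumps only for types it cannot serialize itself.
    if isinstance(value, (set, frozenset)):
        return sorted(value)
    raise TypeError(f"cannot serialize {type(value).__name__}")

def to_wire(named_args: dict) -> str:
    """Serialize named arguments for transmission across the bridge."""
    return json.dumps(named_args, default=_encode, sort_keys=True)

def from_wire(payload: str):
    """Decode a JSON result: arrays become lists, objects become dicts, null becomes None."""
    return json.loads(payload)

# Hypothetical tool signature used to demonstrate unification.
def restart_service(name, force=False, tags=()):
    ...

named = unify_arguments(restart_service, "api", tags={"b", "a"})
wire = to_wire(named)
```

Unifying to named arguments first means generated code can call a tool positionally or by keyword and the Java side still receives one consistent shape.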

---

## Toolkit System

We categorize toolkits into:

- **Custom Card toolkit** (UI-based interaction)

- **MCP toolkit** (external integration)

- **Agent toolkit** (multi-Agent cooperation)

- **System toolkit** (core operational functions)

---

## Registration Mechanisms

1. **Dynamic Registration** (interface-based)

2. **Annotation-Based Registration** (declarative)
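The two mechanisms can be illustrated in Python, with a decorator standing in for a Java annotation. The `ToolRegistry` class, the `Toolkit` protocol, and the tool names are assumptions for this sketch. The actual registration presumably happens on the Java side.

```python
from typing import Callable, Dict, Protocol

class Toolkit(Protocol):
    """Interface for dynamic registration: a name plus a mapping of tool functions."""
    name: str
    def tools(self) -> Dict[str, Callable]: ...

class ToolRegistry:
    def __init__(self):
        self._tools: Dict[str, Callable] = {}

    def register_toolkit(self, toolkit: Toolkit) -> None:
        """Dynamic registration: add any object implementing the interface at runtime."""
        for tool_name, func in toolkit.tools().items():
            self._tools[f"{toolkit.name}.{tool_name}"] = func

    def tool(self, name: str):
        """Declarative registration: a decorator plays the role of an annotation."""
        def decorator(func):
            self._tools[name] = func
            return func
        return decorator

    def call(self, name: str, **kwargs):
        return self._tools[name](**kwargs)

    def names(self):
        return sorted(self._tools)

registry = ToolRegistry()

@registry.tool("system.echo")            # declarative
def echo(text):
    return text

class DeployToolkit:                     # interface-based
    name = "deploy"
    def tools(self):
        return {"status": lambda service: f"{service}: running"}

registry.register_toolkit(DeployToolkit())
```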

---

## Engineering Principles

### 1. Prompt Design

Use **structured composition**:

- Introduction → Mechanism → Input → Output → Examples

- Apply Template Method + Builder patterns
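A minimal sketch of how the two patterns might combine: the base class fixes the section order (Template Method), while chained setters collect the per-request parts (Builder). The class names and the prompt text inside `CmdPromptBuilder` are illustrative assumptions, not the project's actual prompts.

```python
from abc import ABC, abstractmethod

SECTION_ORDER = ("Introduction", "Mechanism", "Input", "Output", "Examples")

class PromptBuilder(ABC):
    """Template Method: `build` fixes the section order; subclasses supply the content."""

    def __init__(self):
        self._input = ""
        self._examples = []

    # Builder-style setters return self so that calls can be chained.
    def with_input(self, text):
        self._input = text
        return self

    def with_example(self, text):
        self._examples.append(text)
        return self

    @abstractmethod
    def introduction(self) -> str: ...

    @abstractmethod
    def mechanism(self) -> str: ...

    @abstractmethod
    def output_format(self) -> str: ...

    def build(self) -> str:
        bodies = {
            "Introduction": self.introduction(),
            "Mechanism": self.mechanism(),
            "Input": self._input,
            "Output": self.output_format(),
            "Examples": "\n".join(self._examples),
        }
        # Empty sections are skipped; the order never changes.
        return "\n\n".join(
            f"## {title}\n{bodies[title]}" for title in SECTION_ORDER if bodies[title]
        )

class CmdPromptBuilder(PromptBuilder):
    def introduction(self):
        return "You write Python that calls the available toolkits."

    def mechanism(self):
        return "Your code is executed and its result is returned to you."

    def output_format(self):
        return "Reply with a single Python code block."

prompt = (
    CmdPromptBuilder()
    .with_input("Restart unhealthy services")
    .with_example("restart_service('worker')")
    .build()
)
```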

### 2. Architecture Engineering

Ensure lifecycle management, logging, observability, & memory strategies are solid.

### 3. Self-Learning

Implement experiential learning cycles: Collect → Process → Store → Retrieve

---

## Outlook

Our target: Agents at **Level 1.5** — on par with a junior programmer.

Optimization focuses:

- Dynamic prompts

- Merging IntentPlanner & TaskExecutor

- Precise context isolation

- Knowledge freshness

- Expanded MCP & Agent capabilities

---

## Closing Thoughts

Advanced AI agents, when combined with cross-platform publishing ecosystems like the [AiToEarn official site](https://aitoearn.ai/), can connect **self-programming capabilities** with measurable real-world outputs, from DevOps automation to global creative monetization.

---